Document 10477730

advertisement

Energy-Efficient Approximate Computation in Topaz

by

Sara Achour

Submitted to the Department of Electrical Engineering and Computer

Science

in partial fulfillment of the requirements for the degree of

Master of Science in Computer Science and Engineering

at the

S0

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

February 2015

@2015 Sara Achour

All rights reserved

The author hereby grants to MIT permission to reproduce and to distribute publicly paper

and electronic copies of this thesis document in whole or in part in any medium now

known or hereafter created.

Signature redacted

Author .....................

.............................................

Department of Electrical Engineering and Computer Science

January 30, 2015

Signature redacted

.........................

Certified by...................................

Martin C. Rinard

Science

of

Computer

Professor

Thesis Supervisor

Signature redacted

Accepted by...............

T

J6

Leslie A. Kolodziejski

Chairman, Department Committee on Graduate Theses

j

2

Energy-Efficient Approximate Computation in Topaz

by

Sara Achour

Submitted to the Department of Electrical Engineering and Computer Science

on January 30, 2015, in partial fulfillment of the

requirements for the degree of

Master of Science in Computer Science and Engineering

Abstract

The increasing prominence of energy consumption as a first-order concern in contemporary computing systems has motivated the design of energy-efficient approximate

computing platforms [37, 14, 18, 10, 31, 6, 1, 11, 7]. These computing platforms

feature energy-efficient computing mechanisms such as components that may occasionally produce incorrect results.

We present Topaz, a new task-based language for computations that execute on approximate computing platforms that may occasionally produce arbitrarily inaccurate

results. The Topaz implementation maps approximate tasks onto the approximate

machine and integrates the approximate results into the main computation, deploying

a novel outlier detection and reliable reexecution mechanism to prevent unacceptably

inaccurate results from corrupting the overall computation.

Because Topaz can work effectively with a very broad range of approximate hardware designs, it provides hardware developers with substantial freedom in the designs

that they produce. In particular, Topaz does not impose the need for any specific

restrictive reliability or accuracy guarantees. Experimental results from our set of

benchmark applications demonstrate the effectiveness of Topaz in vastly improving

the quality of the generated output while only incurring 0.2% to 3% energy overhead.

Thesis Supervisor: Martin C. Rinard

Title: Professor of Computer Science

3

Acknowledgments

I would like to thank Martin Rinard, my advisor, for being a constant source of guidance and inspiration throughout this experience. His insight and perspective have

proved invaluable throughout this process and I have learned a great deal.

I would also like to thank Stelios Sidiroglou-Douskos, Sasa Misailovic, Fan Long,

Jeff Perkins, Michael Carbin, Deokhwan Kim, Damien Zufferey and Michael Gordon

for fielding my questions and providing advice and support when Martin was away. I

am especially grateful to Sasa Misailovic and Mike Carbin for introducing me to the

area of approximate computation and including me in their project, Chisel.

I would like to thank my friends at CSAIL and in other departments for making

graduate life an enjoyable and academically diverse experience.

A special thanks to my family for fostering my love for science. and unconditionally

supporting me all these years.

4

Contents

1

Introduction

13

1.1

Background . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

13

1.1.1

Approximate and Unreliable Hardware Platforms . . . . . . .

14

1.1.2

Robust Computations

. . . . . . . . . . . . . . . . . . . . . .

15

1.1.3

Map-Reduce Pattern . . . . . . . . . . . . . . . . . . . . . . .

16

1.2

Topaz

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

16

1.3

Contributions . ... . . . ... . . . . . . . . . . . . . . . . . . . . . . .

17

1.4

Thesis Organization . . . . . . . . . . . . . . . . . . . . . . . . . . . .

19

2 Topaz Programming Language and Runtime

2.1

O verview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

21

2.2

Language

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

21

Topaz Compiler . . . . . . . . . . . . . . . . . . . . . . . . . .

23

Topaz Runtime . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

24

2.3.1

Application Stack . . . . . . . . . . . . . . . . . . . . . . . . .

24

2.3.2

Task Lifetime . . . . . . . . . . . . . . . . . . . . . . . . . . .

25

2.3.3

Persistent Data . . . . . . . . . . . . . .... . . . . . . . . . .

26

2.3.4

Abstract Output Vectors . . . . . . . . . . . . . . . . . . . . .

27

2.3.5

Outlier Detection . . . . . . . . . . . . . . . . . . . . . . . . .

30

2.3.6

Failed Task Detection

. . .... . . . . . . . . . . . . . . . . .

32

2.2.1

2.3

3

21

33

Example

3.1

Example Topaz Program . . . . . . . . . . . . . . . . . . . . . . . . .

5

33

3.1.1

Approximate Execution

36

3.1.2

Outlier Detection ..

36

3.1.3

Precise Reexecution .

37

4 Experimental Setup and Results

4.1

39

39

Benchmarks . . .

4.2 Hardware Model

40

4.2.1

Conceptual Hardware Model:

40

4.2.2

Hardware Emulator .

40

4.2.3

Energy Model . . . .

41

4.2.4

Benchmark Executions

43

4.2.5

Task and Output Abstraction

43

4.3 Outlier Detector Analysis

45

·4.4 Output Quality Analysis

4.5

5

46

4.4.1

Barnes . . .

46

4.4.2

BodyTrack.

47

4.4.3

StreamCluster .

47

4.4.4

Water .....

48

4.4.5

Blackscholes .

48

Energy Savings

49

4.5.1

Barnes.

49

4.5.2

Bodytrack

50

4.5.3

Streamcluster

50

4.5.4

Water ....

50

4.5.5

Blackscholes . .

50

4.6 Implications of No DRAM Refresh

51

4.7 Related Work . . . . . . . . . . . .

52

4.7.1

Approximate Hardware Platforms

52

4.7.2

Programming Models for Approximate Hardware

52

55

Conclusion

6

6 Appendices

6.1

57

Outlier Detector Bounds over Time . . . . . . . . . . . . . . . . . . .

57

6.1.1

Barnes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

58

6.1.2

Bodytrack . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

60

6.1.3

Water . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

62

6.1.4

Streamcluster . . . . . . . . . . . . . . . . . . . . . . . . . . .

66

6.1.5

Blackscholes . . . . . . . . . . . . . . . . . . . . . . . . . . . .

67

7

8

List of Figures

2-1

Topaz Taskset Construct . . . . . . . . . . . . . . . . . . . . . . . . .

22

2-2

Topaz Application Stack . . . . . . . . . . . . . . . . . . . . . . . . .

24

2-3

Overview of Implementation . . . . . . . . . . . . . . . . . . . . . . .

25

2-4

AOV Testing Algorithm

31

2-5

Outlier Detector Training Algorithm

. . . . . . . . . . . . . . . . . .

31

2-6

Outlier Detector Merge Construct . . . . . . . . . . . . . . . . . . . .

32

3-1

Example Topaz Program . . . . . . . . . . . . . . . . . . . . . . . . .

34

3-2

Output Quality for Bodytrack . . . . . . . . . . . . . . . . . . . . . .

37

4-1

Visual Representation of Output Quality in Barnes. . . . . . . . . . .

46

4-2

Output Quality for Bodytrack . . . . . . . . . . . . . . . . . . . . . .

47

4-3

Visual Representation of Output Quality in Streamcluster. . . . . . .

47

4-4

Visual Representation of Output Quality in Water.

48

6-1

Acceleration Amplitude, Outlier Detector Bounds over time for Barnes. 58

6-2

Velocity Amplitude, Outlier Detector Bounds over time for Barnes.

6-3

Phi, Outlier Detector Bounds over time for Barnes.

. . . . . . . . . . . . . . . . . . . . . . . . .

. . . . . . . . . .

.

58

. . . . . . . . . .

59

6-4

Size, Outlier Detector Bounds over time for Barnes. . . . . . . . . . .

59

6-5

Weight, Outlier Detector Bounds over time for Bodytrack.

60

6-6

X Position, Outlier Detector Bounds over time for Bodytrack. ....

60

6-7

Y Position, Outlier Detector Bounds over time for Bodytrack. ....

61

6-8

Z Position, Outlier Detector Bounds over time for Bodytrack. ....

61

6-9

X Force, Molecule 1, Outlier Detector Bounds over time for Water/Interf. 62

9

. . . . . .

6-10 Y Force, Molecule 1, Outlier Detector Bounds over time for Water/Interf. 62

6-11 Z Force, Molecule 1, Outlier Detector Bounds over time for Water/Interf. 63

6-12 X Force, Molecule 2, Outlier Detector Bounds over time for Water/Interf. 63

6-13 Y Force, Molecule 2, Outlier Detector Bounds over time for Water/Interf. 63

6-14 Z Force, Molecule 2, Outlier Detector Bounds over time for Water/Interf. 64

6-15 Outlier Detector Bounds over time for Water/Interf, outputs for increment. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

64

6-16 Potential Energy, X Dimension Outlier Detector Bounds over time for

W ater/Poteng.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

64

6-17 Potential Energy, Y Dimension Outlier Detector Bounds over time for

Water/Poteng.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

65

6-18 Potential Energy, Z Dimension Outlier Detector Bounds over time for

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

65

6-19 Gain, Outlier Detector Bounds over time for Streamcluster. . . . . . .

66

6-20 Price Change, Outlier Detector Bounds over time for Blackscholes. . .

67

Water/Poteng.

10

List of Tables

4.1

Medium and Heavy Hardware Model Descriptions . . . . . . . . . . .

41

4.2

Overall Outlier Detector Effectiveness . . . . . . . . . . . . . . . . . .

45

4.3

End-to-end Application Accuracy Measurements.

46

4.4

Energy Consumption Table for medium and heavy energy models

. .

49

4.5

DRAM Refresh Rate . . . . . . . . . . . . . . . . . . . . . . . . . . .

51

11

. . . . . . . . . . .

12

Chapter 1

Introduction

The increasing prominence of energy consumption as a first-order concern in contemporary computing systems has motivated the design of energy-efficient approximate

computing platforms [37, 14, 18, 10, 31, 6, 1, 11, 7]. These computing platforms

feature energy-efficient computing mechanisms such as components that may occasionally produce incorrect results. For approximate computations that can acceptably tolerate the resulting loss of accuracy, these platforms can provide a compelling

alternative to standard precise hardware platforms. Examples of approximate computations that can potentially exploit approximate hardware platforms include many

financial, scientific, engineering, multimedia, information retrieval, and data analysis

applications [28, 22, 34, 2, 3, 30, 18].

1.1

Background

The following section includes brief summaries of relevant areas in the field of approximate computation. This background information sets up the research space and

provides context for the design decisions made.

13

1.1.1

Approximate and Unreliable Hardware Platforms

An approximate component consistently produces a correct or reasonably approximate result, while an unreliable component sporadically produces a sometimes unacceptable result. A component that produces the correct answer all the time is an

exact, or reliable component. Approximate and unreliable hardware platforms occasionally or inconsistently produce subtly or grossly inaccurate results. By reducing

the accuracy of the component, the component may be more cost-effective, energy

efficient or performant. In recent years, hardware researchers have proposed several

kinds of approximate and unreliable components:

* Approximate FPUs: These FPUs conserve energy by reducing the mantissa

width of a floating point number [31]. These components consistently produce

relatively small numerical errors which may compromise numerically unstable

computations.

* Low Voltage SRAMS: These SRAMS are undervolted, and therefore consume

less energy. Undervolting SRAMs decreases the integrity of the data, and leads

to read failures and write upsets, which sporadically produce arbitrarily bad

results [31].

" Low-Refresh DRAMS: These DRAMS passively consume less energy by reducing the refresh rate of the memory banks, which results in data corruptions.

These corruptions occur sporadically may yield arbitrarily bad results[31].

In architectural models that leverage approximate/unreliable hardware, these

components are usually used in conjunction with their reliable/exact counterparts

since some portions of the computation must be exact.

For example, instruction

cache and program counter address calculations should be executed precisely in order

to preserve the integrity of the program. Some proposed architectural models expose

the unreliable/approximate hardware to the programmer via and instruction set extension and a specification of the hardware fault characteristics.

Programming models and optimization systems such as Rely and Chisel leverage this

14

fine grain control when transforming programs into approximate versions of themselves.

Programming Models for Unreliable Hardware

Programming models and optimization systems such as Rely and Chisel (an extension

of Rely) transform programs into approximate versions of themselves by utilizing

fine grain unreliable instructions and data stores. These systems require a hardware

fault specification and hardware energy specification to operate and do not take into

account the cost of switching between unreliable and reliable components.

1.1.2

Robust Computations

Robust applications are particularly good candidates for unreliable hardware.

A

robust computation contains some sort of inbuilt redundancy or ambiguity, or is

tolerant to error. We characterize classes of applications as follows:

* Sensory Applications: graphics, audio processing. These applications produce visual or auditory output that is interpreted by humans. Since human

sensory perception is naturally imprecise, it is forgiving of error.

" Convergence Algorithms: simulated annealing. These applications run until

some sort of error criteria is met. These computations are robust to error since

it will not converge until the cumulative error is reasonable.

" Simulations: physics, geological simulations. Simulations are approximations

of phenomena, so an approximation of a simulation is acceptable if the result

falls within some error accepted by domain specialists.

" Machine Learning: clustering. Many machine learning algorithms are naturally resilient to outliers and can tolerate sporadic error since they are already

operating on a statistical distribution.

15

1.1.3

Map-Reduce Pattern

A map-reduce computation is a pattern of computation comprised of independent,

computationally intensive map tasks and a reduce task. The reduce task combines

the results from the map tasks into global state. This computational pattern is very

common for large workloads.

1.2

Topaz

Topaz seeks to provide a robust programming interface for a class of approximate

hardware existing approximate computation platforms cannot fill. Using Topaz, programmers are able to implement a wide array of robust computations that leverage

approximate hardware while still producing acceptable output. Topaz uses concepts

from many of the topics we discussed previously:

" Target Hardware Platform: Topaz targets approximate hardware platforms

that (1) lack a well defined specification or (2) do not allow for fine grain control

of unreliable operations.

" Target Applications: Topaz targets robust computations - including many

of the application classes listed in the background section.

" Programming Model: Topaz provides a map-reduce C language extension

to implement computationally intensive, approximatible, routines in. Refer to

1.1.3 for details on the map-reduce pattern.

Topaz itself can be decomposed into four key components:

* Computational Model: The computational model describes the interface

between Topaz and the underlying hardware and is corresponds to the bulk of

the Topaz implementation. We assume one main node - which is running on

conventional, reliable hardware and any number of approximate worker nodes.

The main node maintains the application's persistent state and occasionally

distributes tasks to the worker nodes. The worker nodes complete the allotted

16

work and return the results back to the main node, which incorporates the result

into the persistent state of the application.

* Programming Language: The programming language is the interface between the programmer and Topaz. The programmer implements her application

in the C programming language and reworks computationally intensive routines

with a map-reduce pattern to use the Topaz C language extension. The map

operations become Topaz tasks, and the reduction operation is used to integrate

the task output into the program's persistent state. See

" Outlier Detector: The outlier detector is a key contribution that extends the

system to something more than a conventional map-reduce system. The outlier

detector determines if the task output returned by the approximate node is

acceptable - if it is, Topaz accepts the task and integrates the task output.

Otherwise, Topaz rejects the task and the task is re-executed on the main node.

See

* Experimental Evaluation: We evaluate the efficacy of Topaz using an approximate hardware emulator on a set of standard benchmarks (SciMark, Parsec) from the imaging, machine learning and simulation application domains.

These components will be discussed in more depth later in the thesis.

1.3

Contributions

This paper makes the following contributions:

* Topaz: It presents Topaz, a task-based language for approximate computation.

Topaz supports a reliable main computation and approximate tasks that can

execute on energy-efficient approximate computing platforms. Each Topaz task

takes parameters from the main computation, executes its approximate computation, and returns the results to the main computation. If the results are

17

acceptably accurate, the main computation integrates the results into the final

results that it produces.

The Topaz language design exposes a key computational pattern (small, selfcontained, correctable tasks) that enables the successful utilization of emerging

approximate computing platforms.

* Outlier Detection: Approximate computations must deliver acceptably accurate results.

To enable Topaz tasks to execute on approximate platforms

that may produce arbitrarily inaccurate results, the Topaz implementation uses

an outlier detector to ensure that unacceptably inaccurate results are not integrated into the main computation.

The outlier detector enables Topaz to work with approximate computation platforms that may occasionally produce arbitrarily inaccurate results. The goal

is to give the hardware designer maximum flexibility when designing hardware

that trades accuracy for energy.

" Intelligent Outlier Task Reexecution: Instead of simply discarding outlier

tasks or indiscriminately re-executing all tasks, Topaz reliably reexecutes the

tasks that produced outliers and integrates the generated results into the computation. Since the task is re-executed on the reliable platform that is used to

perform the main computation - the resulting output is correct. This intelligent

re-execution maintains relatively low re-execution rate while rectifying results

that disproportionately affect the output.

" Experimental Evaluation: We evaluate Topaz with a set of benchmark Topaz

programs executing on an approximate computing platform. This platform features a reliable processor and an approximate processor. The approximate processor uses an unreliable floating-point cache to execute floating-point intensive

tasks more energy-efficiently than the reliable processor. It also contains DRAM

that eliminates the energy required to perform the DRAM refresh.

Our results show that Topaz enables our benchmark computations to effectively

18

exploit the approximate computing platform, consume less energy, and produce

acceptably accurate results.

1.4

Thesis Organization

In this thesis, I will first describe the overall design of the system, then discuss

the technical details of each component of the design. I will then walk through an

illustrative example that outlines how to implement a body tracking application in

Topaz. Finally, I will discuss the experimental results of a larger set of applications.

19

20

Chapter 2

Topaz Programming Language and

Runtime

2.1

Overview

The Topaz implementation consists of (1) a compiler (2) the basic runtime and (3) an

outlier detector. The compiler compiles Topaz's taskset language extension into a

Topaz API call enriched C program. The Topaz runtime executes the compiled Topaz

application and intelligently re-executes tasks with bad outputs, which it identifies

using the outlier detector.

2.2

Language

We have implemented Topaz as an extension to C. Topaz adds a single statement, the

taskset construct. When a taskset construct executes, it creates a set of approximate

tasks that execute to produce results that are eventually combined back into the main

computation. Figure 2-1 presents the general form of this construct.

Each taskset construct creates an indexed set of tasks. Referring to Figure 21, the tasks are indexed by i, which ranges from 1 to u-1. Each task uses an in

clause to specify a set of in parameters x1 through xn, each declared in corresponding

declarations di through dn. Topaz supports scalar declarations of the form double x,

21

taskset name(int i = 1; i < u; i++) {

compute in

(d1

out (01,

...

el,

=

...

, dn = en)

oj) {

,

<task body>

}

transform out(vl,

...

,

vk) {

<output abstraction>

}

combine { <combine body> }

}

Figure 2-1: Topaz Taskset Construct

f loat x, and int x, as well as array declarations of the form double x [N], f loat x [N],

and int x [N], where N is a compile-time constant. The value of each in parameter

xi is given by the corresponding expression ei from the in clause. To specify that

input parameters do not change throughout the lifetime of a taskset, we prepend

the const identifier to the input type.

Each task also uses an out clause to specify a set of out parameters yl through

ym, each declared in corresponding declarations ol through om. As for in parameters,

out parameters can be scalar or array variables.

Topaz imposes the requirement that the in and out parameters are disjoint and

distinct from the variables in the scope surrounding the taskset construct. Task

bodies have no externally visible side effects -

they write all of their results into the

out parameters.

The transform body computes over the in parameters dl,

rameters ol, .

. .

... ,

dn and out pa-

, oj to produce a numerical abstract output vector (AOV) vi,

. . . ,

The AOV identifies, combines, appropriately normalizes, and appropriately selects

and weights the important components of the task output. As appropriate, it also

removes any input dependences. The outlier detector operates on the AOV.

The combine body integrates the task output into the main body of the com22

vk.

putation.

All of the task bodies for a given taskset are independent and can, in

-

theory (although not in our current Topaz implementation) execute in parallel

only the combine bodies may have dependences. More importantly for our current

implementation, the absence of externally visible side effects enables the transparent

Topaz task reexecution mechanism (which increases the accuracy of the computation

by transparently reexecuting outlier tasks to obtain guaranteed correct results).

2.2.1

Topaz Compiler

As is standard in implementations of task-based languages that are designed to run on

distributed computing platforms [29], the Topaz implementation contains a compiler

that translates the Topaz taskset construct into API calls to the Topaz runtime. The

Topaz front-end was written in OCaml. The front-end generates task dispatch calls

that pass in the input parameters, defined y the in construct, the task index i, and

the identifier of the task to execute. The front end generates the code required to send

the task results, defined as out parameters to the processor running the computation.

The front end reports the taskset specification to Topaz and properly extracts the

transformation and task operations into functions.

The Topaz runtime provides a data marshaling API and coordinates the movement of tasks and results between the precise processor running the main Topaz

implementation and the approximate processor that runs the Topaz tasks.

23

2.3

Topaz Runtime

The Topaz implementation is designed to run on an approximate computing platform

with one precise processor and one approximate processor. The precise processor executes the main Topaz computation, which maps the Topaz tasks onto the approximate

processors for execution. Topaz is comprised of four components: (1) a front end that

translates the taskset construct into C-level Topaz API calls (2) a message-passing

runtime for dispatching tasks and collecting results. The runtime consists (1) an outlier detector that prevents unacceptably inaccurate task results from corrupting the

final result (2) a fault handler for detecting failed tasks.

The Topaz implementation works with a distributed model of computation with

separate processes running on the precise and approximate processors. Topaz includes a standard task management component that coordinates the transfer of tasks

and data between the precise and approximate processors. The current Topaz implementation uses MPI [12] as the communication substrate that enables these separate

processes to interact. The application runtime is executed using the topaz runtime

environment.

2.3.1

Application Stack

0

0

0

Worker Nods

M.in Rod*

Example Hardware Configurations:

Application

Machine

A

Topaz

B

Machin-

C

I

1

MaCh-o. 2

t..iatoz

(b) Topaz Hardware Configurations.

(a) Topaz Software Stack.

Figure 2-2: Topaz Application Stack

Once compiled, the Topaz applications may be executed using the Topaz runtime

framework. The framework is written in MPI and models MPI processes as virtual

nodes. the nodes are labeled main and worker nodes in the system configuration file,

which is passed into the Topaz runtime. The mapping between the nodes and hard24

ware is up to the programmer and not visible to the Topaz runtime. The programmer

may run virtual nodes on different machines on the network (B, Figure 2-2a), on different hardware components in the same machine (A, Figure 2-2b), or in an emulator

(C, Figure 2-2b).

Task Lifetime

2.3.2

The Topaz application executes on the main node until a taskset is encountered. The

typical taskset lifetime is as follows:

Application

I~~~t

task

taskset Icombine

accept & combine

Outlier

Topaz

Application

task

tske

'-

eTopa

Detector

-----------------

task outputs

--------------------------------

_____________________________task

4_-----------

in~puts

Hardware

Worker Node

Main Node

Figure 2-3:

Overview of Implementation

1. Taskset Creation: The taskset is created.

2. For each task in the taskset:

(a) Task Creation: The Topaz runtime marshals the task input in a taskset

and sends the task to a worker node through the MPI interface (orange

line, Figure 2-3).

(b) Task Execution: The worker node runs the task construct on the received inputs then marshals and sends the outputs back to the main node

(green line, Figure 2-3).

(c) Task Response: The worker node sends a message containing the task

results back to the main node running the Topaz main computation.

25

(d) Outlier Detect: The main node transforms the task output into an abstract output vector (AOV). The main node then analyzes the AOV and

determines whether to accept or reject the task.

" On Accept: If the task is accepted, the output is passed onto the

next step. (green line, Figure 2-3).

" On Reject: If the task is rejected, it is re-executed on the main node,

and the correct output is passed on to the next step (red line, Figure

2-3).

(e) Outlier Detector Update: Topaz uses the correct result to update the

outlier detector (see Section 2.4.1).

(f) Task Combination: The task output is incorporated into the main computation by executing the combine block

2.3.3

Persistent Data

To amortize communication overhead, Topaz divides task data into two types: persistent

data and transient data. Persistent data does not vary over the lifetime of the

taskset and does not need to be sent over with every task. Transient data is specific

to each task and needs to be sent over with every task. Persistent data is sent over to

the approximate machine with the first task and reused, while transient data is sent

over with each task.

To execute a taskset, we modify the taskset lifetime above as follows:

1. Taskset Creation: The persistent data is sent to the worker node.

2. For each task in the taskset:

(a) Task Creation: The main node sends the transient data for the task to

the .worker node.

(b) Task Execution, Task Response, Outlier Detection, Outlier Detector Update and Task Combination: Topaz proceeds as it would in

the basic case.

26

(c) Persistent Data Resend: If the task was rejected, Topaz resends the

persistent data to the approximate processor. The rationale is that generating an outlier may indicate that the persistent data has become corrupted. To avoid corrupting subsequent tasks, Topaz resends the persistent

data to ensure that the approximate processor has a fresh correct copy of

the persistent data.

2.3.4

Abstract Output Vectors

The programmer-defined AOV has a significant impact on the behavior of the outlier

detector. The defined AOV may be brittle or flexible. A brittle AOV strongly enforces

an invariant on the output and leaves little choice to the outlier detector. A brittle

AOV is beneficial if the programmer really wants to enforce an invariant on the

output, but is not as adaptive to error. A flexible AOV provides a weak interface,

such as a score, to the outlier detector and leaves it up to the system to determine

what the cutoffs are. Flexible AOVs are more amenable to different types of unreliable

hardware, but provide weaker guarantees.

A task computation may provide complete or incomplete information. If we have

complete information, the output may easily be normalized so that it is no longer

dependent on the input. If we have incomplete information, the output is difficult

to transform into an input independent representation, or it is to computationally

inefficient to do so.

The distinction between these four categories may be highlighted with the following example: Consider an application whose task takes in a stock price, s, and a

change variable, r and produces s', the predicted price.

27

With Complete Information:

The predicted price is generated from the normal distribution N(p

=

s, o

=

r). We

propose the following four AOVs:

Brittle AOV (Weak Invariant): We know all prices must be greater than 0. The

resulting price must be greater than zero, regardless of the input. This AOV enforces

the invariant s' > 0.

AOV = s'> 0

Brittle AOV (Strong Invariant): We could enforce a stronger invariant - that all

accepted values fall within one standard deviation of the mean and the price is nonnegative.

AOV

< 1 As'>0

r

Flexible A OV: Since we know the computation is a normal distribution, we determine

the number of standard deviations the predicted price is from the mean. The outlier

detector adaptively determines how many standard deviations is acceptable before a

price is considered an outlier. Consider this - if we had a runtime log of the cutoff

of the standard deviations over time, we could experimentally determine how many

standard deviations of the mean the erroneous values fall in.

AOV =

s

r

With Incomplete Information:

Now consider a computation where the predicted price is generated in a heavily inputdependent way, and it is unclear to the programmer how to construct a strong checker

(brittle AOV), or a scoring method (flexible AOV) for the output. Let's assume the

programmer does know the change in price and the rate are positively correlated.

Brittle AOV: We know the relationship between 6s and the rate, r are positively

correlated. We know, regardless of input, the price should go up if the rate is positive

28

(and vice versa). Even with incomplete knowledge, we found an invariant to enforce.

AOV = (s' - s > 0 A r > 0) V (s'

-

s

=

0 A r = 0) V (s' - s <0 A r < 0)

Flexible A OV: The relationship between the 6s and rate, r, is unknown - but correlated. We use the two dimensional vector (6s, r) as the AOV and rely on the outlier

detector to learn which regions in R2 are acceptable. Notice that even with a runtime log of the regions accepted, it is difficult to state anything concrete about the

erroneous values since the computation is heavily input dependent.

AOV = [s' - s,r]

29

2.3.5

Outlier Detection

Topaz performs outlier detection on a user-defined input-output abstraction which

takes the form of a numerical vector, which we call the abstract output vector (AOV).

The user-defined transform block is executed to transform an input-output pair into

an AOV. For each taskset, Topaz builds an outlier detector designed to separate

erroneous AOVs from correct AOVs.

Topaz performs outlier detection when a task is received but prior to result integration. First the inputs and outputs are transformed into an AOV. Then the validity

of the AOV is tested using the outlier detector. If the AOV is rejected, Topaz reexecutes the task on the precise processor to obtain correct results and re-sends the

persistent task data. The transformation of the correct output is used to train the

outlier detector.

The outlier detector maintains a list of regions ri G R, R is the set of regions the

outlier detector uses. Each region is a n dimensional block, where n is the dimension

of the AOV. These regions represent blocks in R" x R n and are defined as ri

{Vmin, i

max},

=

which define the lower (i'min) and upper bounds (ilmax) of a particular

block. We bound the number of regions in R such that JRI < rmax. This bound limits

the complexity of the outlier detector.

The outlier detector performs two functions after each task execution. First, it

tests an AOV and reports whether the task output is correct (accept) or erroneous

(reject). Second, if the outlier detector rejects the AOV, it re-executes the task to

obtain the correct AOV. Then the outlier detector updates its knowledge of the system using the correct AOV.

Testing

Consider V', an AOV generated from results of the executing task. To test for acceptance of V', the outlier detector determines if V' resides in any of its regions and accepts

if there exists an ri that contains T:

30

1 for vector i':

2

for each riER:ri={fminmax}

3

if i E biock(Vmin,Vmax)

vEri-+ accept

4

end

5

end

6

7

reject

Figure 2-4: AOV Testing Algorithm

Training

Figure 2-5 presents the algorithm for training the outlier detector. Given a rejected

AOV X, we re-execute the task to get output', and transform the output into some

guaranteed-correct AOV Vkey. If the correct output

Vkey

is not in any ri E R, create

a new region rk that contains only that vector and add it to R. If JRI is now the

maximum allowable size, rmax, find the two most alike regions and merge them. Two

regions are considered alike if they are overlapping or close together.

1

2

3

for rejected vector Vut:

reexecute task -+taskot

apply abstraction -+Vkey

4

5

if

vkey

some riER:

{Vkey, Vkey}

R= RU {rk}

6

7

8

rk

=

9

if

10

11

JRI == rmax:

bs=0 , best._pair=nil

f or each (ri,r3 ) E R x R:

12

currscore=score(ri,r,)

13

14

15

if bs < currscore:

bs = currscore

bestpair = (ri,r)

16

17

merge (bestpair)

Figure 2-5:

Algorithm

Outlier Detector Training

Figure 2-6 presents the procedure for merging two regions. The algorithm constructs a new region that encapsulates the two old regions. This region is defined by

31

the computed Vmi, and vma, vectors.

1 for regions ri={vin,

2

rk = {Vinlmaxi

3

4

in, Vmnax

:

for each x in IVkn:

vin[x] = min(v4

inV iv

Viax [X] = max(v ax , Vax)

)

5

vax}, r ={v

6

Figure 2-6: Outlier Detector Merge Construct

2.3.6

Failed Task Detection

Topaz also handles cases where the task fails to complete execution on the approximate processor.

In the event a task fails, the approximpate processor notifies the

main processor. Topaz re-executes the task on the main processor and refreshes the

persistent data on the approximate machine.

32

Chapter 3

Example

We next present an example that illustrates the use of Topaz in expressing an approximate computation and how Topaz programs execute on approximate hardware

platforms.

3.1

Example Topaz Program

Our example is the bodytrack benchmark from the Parsec benchmark suite [5]. Bodytrack is a machine vision application that, in each step, computes the estimated position of the human in a frame. The main part of the computation computes weights

for each of the candidate body poses.

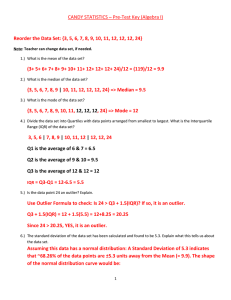

Figure 3-1 presents the bodytrack calcweights computation, which, given a

tracking model, scores the fit of each pose in a set of poses against an image. This

computation is implemented as a set of Topaz tasks defined by the Topaz taskset

construct. When it executes, the taskset generates a set of tasks, each of which executes three main components: the task compute block (which defines the approximate

computation of the task), transform block (which defines the task's AOV), and a

combine block (which integrates the computed result into the main computation).

Compute block:

The compute block defines the inputs (with section in), outputs

(with section out) and computation (following code block) associated with an ap33

// compute the weights for each valid pose.

taskset calcweights(i=0; i < particles.size();

compute

in

float

const

const

const

const

const

const

tpart[PSIZE] =

(float*) particles[i],

float tmodel[MSIZE] = (float*) mdl-prim,

char timg[I-SIZE] = (char *) img-prim,

int nCams = mModel->NCameraso,

int nBits = mModel->getBytesPerPixel(),

int width = mModel->getWidtho,

int height =mModel->getHeight()

14

out

15

{

(

float tweight

)

)

13

tweight = CalcWeight(tpart,

tmodel, timg, nCams, width,

16

17

height,

}

18

19

20

transform

21

out

22

{

23

24

25

26

(bweight, bpl,

bp2, bp3)

bweight =

tweight;

bpl=tpart[3];

bp2=tpart[4];

bp3=tpart [5];

}

27

28

29

30

31

32

33

i+=1){

(

1

2

3

4

5

6

7

8

9

10

11

12

combine

{

mWeights[i] = tweight;

}

}

Figure 3-1: Example Topaz Program

34

nBits);

proximate task.

In our example, the compute block computes the weight for a

particular pose by invoking the CalcWeight function, which is a standard C function. The taskset executes a compute task for each pose.

In total, it executes

particles. size ( tasks.

Each task takes as input two kinds of data: fixed data, which has the same value for

all tasks in the taskset (fixed data is identified with the const keyword) and dynamic

data (which has different values for each task in the taskset). In our example each task

has one dynamic input, tpart, the ith pose to score. It additionally takes several fixed

inputs that remain unchanged throughout the lifetime of the taskset: (1) tmodel, the

model information (2) timg, the frame to process, (3) nCams, the number of cameras

(4) nBits, the bit depth of the image. The task computation produces one output:

tweight, the score of the pose on the frame.

Transform block:

The transform construct defines an abstract output vector.

Topaz performs outlier detection on this abstraction when the task finishes execution.

In this example we use the the pose position from the task input (bpl,bp2, bp3) and

pose weight from the task output (bweight) as an abstraction. The rationale behind

this abstraction is the location of the pose and the scoring are heavily correlated.

We cannot remove the input dependence from the output since the pose weight is

dependent on the image. This abstraction is a flexible abstraction for a task that

exposes incomplete information.

Combine block:

When the task completes, its combine block executes to incorporate

the results from the out parameters into the main Topaz computation (provided the

outlier detector accepts the task). In our example, the combine blocks commit the

weight to the mWeights global program variable and update the number of included

particles.

35

3.1.1

Approximate Execution

Topaz's computational model consists of a precise processor that executes all critical regions of code and an approximate processor that executes tasks defined by

the compute construct. Critical portions of code include non-taskset code and the

transform and combine blocks of executed tasks. Since the taskset construct is

executed approximately, the computed results may be inaccurate. In our example

the output, tweight (the computed pose weight) may be inaccurate. That is, the

specified pose may be assigned an inappropriately high or low weight for the provided

frame.

If integrated into the computation, significantly inaccurate tasks can unacceptably

corrupt the end-to-end result that the program produces. In the combine block of our

example, the computed weights are written to a persistent weight array mWeights.

The bodytrack application uses this weight array to select the highest weighted pose

for each frame.

Therefore, if we incorporate an incorrect rate, we may select an

incorrect pose as the best pose for the frame.

3.1.2

Outlier Detection

Topaz therefore deploys an outlier detector that is designed to detect tasks that produce unacceptably inaccurate results.

The outlier detector uses the user defined

transform block to convert the task inputs and outputs into a numerical vector,

which we call the abstract output vector (AOV). This vector captures the key features

of the task output. In our example's transform block, we define a four dimensional

AOV <bweight,bpl,bp2,bp3> comprised of the weight and pose position. We use

this abstraction because we expect poses in the same location to be scored similarly.

The outlier detector uses this numerical vector to perform outlier detection online

(see Section 2.4.1 for more details). The outlier detector accepts correct AOVs and

rejects AOVs that are outliers. In our example, the outlier detector rejects tasks

that produce high weights for bad poses - poses that are positioned in parts of the

frame without a person. Similarly, the outlier detector rejects tasks that produce low

36

weights for good poses - poses near the person.

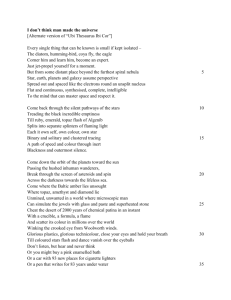

3.1.3

Precise Reexecution

(a) Precise Execution

(b) No Outlier Detection

(c) Outlier Detection

Figure 3-2: Output Quality for Bodytrack

When the detector rejects a task, Topaz precisely reexecutes the task on the precise

main machine to obtain the correct task result, which is then integrated into the main

computation. The numerical vector attained by transforming the task result is used

to further refine the outlier detector. In order to minimize any error-related skew, we

only use correct outputs attained via reexecution to train the outlier detector. In our

example, the tasks that assign abnormally high weights to bad poses or low weights

to good poses. After all tasks have been combined and the taskset has completed

execution, the highest weight in the persistent weight array belongs to a pose close to

the correct one. This technique maximizes the accuracy of the overall computation.

37

Figure 3-2a presents the correct pose for a particular video frame when the computation has no error. Figure 3-2b presents the selected pose for the same frame run

on approximate hardware with no outlier detection. Note that the selected pose is unacceptably inaccurate and does not even loosely cover the figure. Figure 3-2c presents

the selected pose for the same frame on the same approximate hardware with outlier detection. Observe the chosen pose is visually indistinguishable from the correct

pose (Figure 3-2a). The numerical error for the computation with outlier detection

is 0.28% (compared to 73.65% error for the computation with no outlier detection).

The outlier detector reexecutes 1.2% of the tasks, causing a 0.2% energy degradation

when compared to the computation without outlier detection. The overall savings for

executing the program approximately are 15.68%.

38

Chapter 4

Experimental Setup and Results

4.1

Benchmarks

We present experimental results for Topaz implementations of our set of five benchmark computations:

" BlackScholes: A financial analysis application from the Parsec benchmark

suite that solves a partial differential equation to compute the price of a portfolio

of European options [5].

* Water: A computation from the SPLASH2 benchmark suite that simulates

liquid water [39, 28].

" Barnes-Hut: A computation from the SPLASH2 benchmark suite that simulates a system of N interacting bodies (such as molecules, stars, or galaxies) [39].

At each step of the simulation, the computation computes the forces acting on

each body, then uses these forces to update the positions, velocities, and accelerations of the bodies [4].

" Bodytrack: A computation from the Parsec benchmark suite that tracks the

pose of the subject, given a series of frames [5].

o Streamcluster: A computation from the Parsec benchmark suite that performs hierarchical k-means clustering [5].

39

4.2

Hardware Model

We perform our experiments on a computational platform with one precise processor,

which runs the main computation, and one approximate processor, which runs the

Topaz tasks. These two processes are spawned by MPI and communicate via MPI's

message passing interface as discussed in Section 2.3.

4.2.1

Conceptual Hardware Model:

To evaluate Topaz, we simulate an approximate hardware architecture which contains

an approximate SRAM cache for floating point numbers and no-refresh DRAMs for

holding task data. Table 4.1 presents the fault and energy characteristics of these

two models. We use the SRAM failure rates and energy savings from Enerj's medium

model and a hybridized medium-aggressive model, which we obtain by interpolating

between the two models [31]. We call this the heavy model.

We store all fixed and transient data associated with task execution in no-refresh

DRAMs. We assume 75 percent of the DRAM memory on the approximate machine

is no-refresh. The remaining DIMMs are used to store critical information, including

Topaz state, MPI state, critical control flow information, and program code. We

construct the per-millisecond bit flip probability model by modeling the regression of

Flikker's DRAM error rates and use the energy consumption associated with DRAM

refresh to compute the savings [18].

4.2.2

Hardware Emulator

To simulate the approximate hardware we implemented an approximate hardware

emulator using the Pin [191 binary instrumentor. We based this emulator on on iAct,

Intel's approximate hardware tool [231. Our tool instruments floating point memory

reads so the value is probabilistically corrupted.

40

Property

SRAM read upset probability

SRAM write upset probability

Supply Power Saved

Medium

Model

i0-7

i0-4.94

80%

Heavy

Model

10-5

10-4

85%

Dram per-word error

probability over time

Dram Energy Saved

3 - 10- 7 . t2 .6908

33%

3 - 10-7 . t2.6908

33%

Table 4.1: Medium and Heavy Hardware Model Descriptions

The no-refresh DRAMs are conservatively simulated by instrumenting all noncontrol flow memory reads with probabilistic bit flips. Every 106 instructions, or 1

millisecond (for a 1GHZ machine), we increase the probability of a DRAM error occurring in accordance with the model. We have included a specialized API for setting

the hardware model, enabling and disabling injection. We conservatively assume corruptions occur in active memory and instrument all non control-flow memory reads.

Note that an excess of reads are instrumented, since in our conceptual hardware

model, only task data is stored in the no-refresh Drams.

4.2.3

Energy Model

We next present the energy model that we use to estimate the amount of energy

that we save through the use of our approximate computing platform. We model the

total energy consumed by the computation as the energy spent executing the main

computation and any precise reexecutions on the precise processor plus the energy

spent executing tasks on the approximate processor.

For the approximate and precise processors, we experimentally measure (1) ttask,

the time spent in tasks (2) tt*Pa,, the time spent in topaz (3) tnain, the time spent

in the main computation (4) tab,, the time spent abstracting the output (5) tdet, the

time spent using the outlier detector. We denote time measurements of a certain type

(topaz,main,det,abs,task) that occur on the precise processor with an m subscript (ex:

tCtyre>), measurements that occur on the approximate processor with a w subscript

(ex: t<tyPe>), and use the shorthand tt2pe> to express t*type> + t<tyPe>.

41

Using the experimental measurements, we compute the fraction of time spent

on the approximate machine vs the precise machine without reexecution,

fnoreexec,

excluding the time devoted to abstracting the output, re-executing the tasks and

performing outlier detection:

ttask + tmain + ttopaz

=task

tnoreexec+ t4 faz +

tmain

7

6

noreexec = fnoreexec * 6 urei

In the re-execution energy approximation, we include output abstraction, detection

and re-execution time.

ttsk

reexec

,

-

6

+

+ tmain + topaz

+ tmain + tabs + tdet

t~m

reexec = freexec * 6 urei

We compute the percent energy saved on the approximate machine as follows:

DRAMs consume 45% of the total system energy and the CPU, which includes

SRAMS,FPUs and ALUs consumes 55% of the total system energy [31].

6urei = 0.45 S 6 dram + 0.55 -6 cpu

We specified in our hardware model that 75% of our DRAMs are no refresh -

DRAM

refresh consumes 33% of the energy allotted to the DRAM [18].

6

dram = 0.75 . or,

= 0.75 - 0.33

SRAMs consume 35% of the energy allotted to the CPU [31]. In our hardware model,

we specified 50% of the SRAMS are unreliable. The percent savings unreliable SRAMs

incur, 6,rel depends on the model used - we use 6 SRAM in the equation (see Table

4.1).

6epu = 0.35 - 6sram = 0.35 - 0.50 - 6,rel

42

The final energy consumption formula for the approximate machine:

6

total =

6

0.45 - 0.75 -0.33 + 0.35 - 0.50 - 6

total =

0.111375 + 0.175 - 6 SRAM

Refer to Table 4.4 for the per-hardware-model maximum energy savings.

4.2.4

Benchmark Executions

We present experimental results that characterize the accuracy and energy consumption of Topaz benchmarks under a variety of conditions:

" Precise: We execute the entire computation, Topaz tasks included, on the

precise processor. This execution provides the fully accurate results that we use

as a baseline for evaluating the accuracy and energy savings of the approximate

executions (in which the Topaz tasks execute on the approximate processor).

" No Topaz: We attempt to execute the full computation, main Topaz computation included, on the approximate processor. For all the benchmarks, this

computation terminates with a segmentation violation.

" No Outlier Detection: We execute the Topaz main computation on the

precise processor and the Topaz tasks on the approximate processor with no

outlier detection. Constant data is only resent on task failure. We integrate all

of the results from approximate tasks into the main computation.

* Outlier Detection: We execute the Topaz main computation on the precise

processor and the Topaz tasks on the approximate processor with outlier detection and reexecution as described in Section 2.3.

4.2.5

Task and Output Abstraction

We work with the following output abstractions. These output abstractions are designed to identify/normalize the most important components of the task results.

43

"

barnes: Each task is the force calculation computation for a particular body.

We use the amplitude of the velocity and acceleration vectors as our output

abstraction.

" bodytrack: Each task is the weight calculation of a particular pose in a given

frame on the approximate machine.

The output abstraction is the position

vector and weight.

" streamcluster: Each task is a subclustering operation in the bi-level clustering scheme. The output abstraction is the gain of opening/closing the chosen

centers.

* water Two computations execute on the approximate machine: (1) the intermolecular force calculation, which determines the motion of the water molecules

(interf) (2) the potential energy estimation, which determines the potential energy of each molecule (poteng).

- interf: The output abstraction is the cumulative force exerted on the

H,O,H atoms in the x,y and z directions.

- poteng: The output abstraction is the potential energy for each molecule.

" blackscholes: Each task is a price prediction on the approximate machine. We

use the price difference between the original and predicted future price as our

output abstraction.

44

4.3

Outlier Detector Analysis

Benchmark

barnes

bodytrack

water-interf

water-poteng

streamcluster

blackscholes

barnes

bodytrack

water-interf

water-poteng

streamcluster

blackscholes

Hardware

Model

medium

medium

medium

medium

medium

medium

heavy

heavy

heavy

heavy

heavy

heavy

% Accepted

Correct

96.460 %

98.422 %

99.844 %

99.897 %

96.610 %

99.979 %

73.338 %

91.062 %

98.674 %

96.493 %

82.678 %

99.818 %

% Accepted

Error

2.496 %

0.848 %

0.047 %

0.032 %

0.781 %

0.008 %

18.842 %

7.676 %

0.617 %

3.060 %

5.330 %

0.083 %

% Rejected

Error

1.011 %

0.726 %

0.109 %

0.071 %

2.583 %

0.012 %

6.340 %

1.194 %

0.706 %

0.445 %

11.371 %

0.098 %

% Rejected

Correct

0.025 %

0.004 %

0.000 %

0.000 %

0.025 %

0.000 %

1.434 %

0.086 %

0.004 %

0.002 %

0.621 %

0.000 %

% Rejected

1.036%

0.730%

0.109%

0.071%

2.608%

0.012%

7.775%

1.280%

0.709%

0.447%

11.992%

0.098%

Table 4.2: Overall Outlier Detector Effectiveness

Table 4.2 presents true and false positive and negative outlier detector rates.

These numbers indicate that the outlier detector is (1) able to adequately capture

outliers (2) falsely accepts only a small proportion of low-impact errors in the task.

Each benchmark and hardware model (columns 1,2) has a row that contains the

associated percentage of correct output that was correctly accepted and correctly

rejected (columns 3,5) and the percentage of erroneous output that was incorrectly

accepted and correctly rejected (columns 4,6). We report the total percentage of

rejected tasks (columns 7).

These numbers highlight the overall effectiveness of the outlier detector. The vast

majority of tasks integrated into the main computation are correct; there are few

reexecutions and few incorrect tasks. The only source of error affecting the main

computation is captured in the accepted erroneous tasks (column 3).

45

I ....

......................

.."- ,. ..

........

..............

'-------, 11

...............

...... _

'_--. _._' ............

.......

_'_-

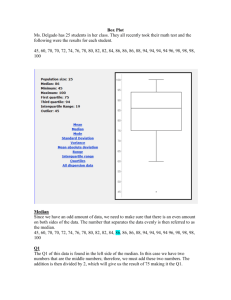

4.4

Output Quality Analysis

Benchmark

barnes

bodytrack

streamcluster

water

blackscholes

barnes

bodytrack

streamcluster

water

blackscholes

Hardware

Model

medium

medium

medium

medium

medium

heavy

heavy

heavy

heavy

heavy

No

Topaz

crash

crash

crash

crash

crash

crash

crash

crash

crash

crash

No Outlier

Detection

inf/nan

9.09%

0.344

inf/nan

3.37E+37%

inf/nan

73.65%

0.31

inf/nan

inf

Outlier

Detection

0.095%

0.2287%

0.502

0.00035%

0.0082%

0.394%

0.279%

0.31

0.0004%

0.086%

Table 4.3: End-to-end Application Accuracy Measurements.

Table 4.3 presents the end to end output quality metrics for the (1) All Computation Approximate (2) No Outlier Detector, and (3) Outlier Detector cases.

These results show that it is infeasible to run the entire application the approximate hardware. All applications contain critical sections that must execute exactly

if not, all applications crash. With one exception (streamcluster), no outlier detection

produces unacceptably inaccurate results:

4.4.1

Barnes

520

3

122-

(a) Correct

152

0

(b) No Outlier Detection

(c) Outlier Detection

Figure 4-1: Visual Representation of Output Quality in Barnes.

The end to end output quality metric is the percent positional error (ppe) of each

body. The ppe with outlier detection is a fraction of a percent while the ppe without

46

outlier detection is large. With outlier detection, the difference in output quality

between the exact and approximate executions is visually indistinguishable in the

output. Refer to Figure 4-1c and 6-13b for a visual comparison.

4.4.2

BodyTrack

(a) Precise Execution

(b) No Outlier Detection

(c) Outlier Detection

Figure 4-2: Output Quality for Bodytrack.

The metric is the weighted percent error in the selected pose for each frame. In the

heavy hardware model, we observe mildly (9%, medium model) to wildly incorrect

pose choices (almost 75%, heavy model). With outlier detection, the selected poses

are visually good matches for the figure. Refer to Figure 4-2c and 4-2a for a visual

comparison.

4.4.3

2

StreamCluster

00

0,2

0.4

06

0.8

(a) Correct

10

17

-=

2

010

a2

0.4

0.6

0.0

110

12

(b) No Outlier Detection

-.

2

Q.D

0.2

0.4

0.6

0.9

10

1.

(c) Outlier Detection

Figure 4-3: Visual Representation of Output Quality in Streamcluster.

The metric is the weighted silhouette score as a measure of cluster quality - the

closer to one, the better the clusters. Visually, the clusters with and without outlier

47

. .............. .....

......................

-_..

detection look acceptable. We attribute this result to the fact that the computation

itself is inherently non-deterministic and robust to error. Moreover, the precise toplevel clustering operation as implemented in the application itself is robust in the

presence of extreme results. Even without Topaz outlier detection, the final result

will be adequate as long as erroneous tasks do not contribute a substantial proportion

of the top level points. Refer to Figure 4-3c and 4-3a for a visual comparison.

4.4.4

Water

a

(a) Correct

10

(b) No Outlier Detection

(c) Outlier Detection

Figure 4-4: Visual Representation of Output Quality in Water.

The metric is the percent positional error (ppe) of each molecule. The ppe with

outlier detection is a fraction of a percent while the ppe without outlier detection

is very large. With outlier detection, the difference in output quality between the

exact and approximate executions is visually indistinguishable in the output. Refer

to Figure 4-4c and 4-4a for a visual comparison.

4.4.5

Blackscholes

The metric is the cumulative error of the predicted stock prices with respect to the

total portfolio value. Without outlier detection, the percent portfolio error is many

times more than the value of the portfolio. With outlier detection, the percent portfolio error is a fraction of a percent.

48

..

.........

....

.............

....

........

_ _ ".

4.5

Energy Savings

Benchmark

barnes

bodytrack

water

streamcluster

blackscholes

barnes

bodytrack

water

streamcluster

blackscholes

Hardware

Model

medium

medium

medium

medium

medium

heavy

heavy

heavy

heavy

heavy

No

Topaz

19.18%

19.18%

19.18%

19.18%

19.18%

19.66%

19.66%

19.66%

19.66%

19.66%

No Outlier

Detection

18.78

15.88 %

12.52 %

16.00 %

10.23 %

17.92%

15.90%

12.83%

11.98%

11.46%

Outlier

Detection

16.89%

15.68%

9.49%

15.70%

8.37%

17.42%

15.62%

9.44%

11.39%

9.09%

Table 4.4: Energy Consumption Table for medium and heavy energy models

We evaluate the energy savings for the benchmarks in Table 4.4. Each benchmark

and hardware model combination occupies a row where the maximum savings (column

3), the savings with no re-execution (column 4) and the savings with reexecution

(column 5) are listed. Overall, we observe that the task computations make up a

significant portion of the entire computation - eclipsing any Topaz overhead - since

each benchmark attains more than half of the potential energy savings without reexecution. Adding in outlier detection subtly degrades energy savings - reducing the

savings anywhere from 0.2% to 3%. We discuss the benchmark-specific energy trends

below.

4.5.1

Barnes

Barnes attains a large fraction of the possible energy savings mostly because the force

calculation step is very large compared to the rest of the computation. Furthermore,

adding in outlier detection does not drastically impact the energy savings. This is

attributed to the simplicity of the output abstraction and the complexity of the task

when compared to the outlier detection overhead.

49

4.5.2

Bodytrack

We observe bodytrack attains most of,but not all of, the energy savings.

This is

because there are a few steps (annealing, pose setup) that are not done on the Approximate machine but are a non-trivial part of the computation. We do not see

a significant degradation in energy savings after applying outlier detection because

a very small subset of the tasks are re-executed and the abstraction is very simple

compared to the kernel.

4.5.3

Streamcluster

We see a significant difference between the medium and heavy energy savings because

the precise top-level clustering operation takes longer on the heavy error model - this

is likely because the subclustering operations did not provide adequately good output,

causing the top level clustering operation to take longer to converge. There isn't a

significant dip in the energy savings when we use outlier detection - the abstraction

is simple and a relatively small number of tasks are re-executed.

4.5.4

Water

Since the kernel computation of water is relatively small, we see a larger dip in energy

savings when we apply outlier detection. We also see a smaller proportion of maximum

savings achieved since other components of the computation overshadow the water

computation..

4.5.5

Blackscholes

Since the kernel computation for the blackscholes benchmark is relatively small, We

see a larger dip in energy savings when we apply outlier detection. We also see a

smaller proportion of the maximum savings achieved since the price predictions do

not overpower other components of the computation.

50

4.6

Implications of No DRAM Refresh

Benchmark

Hardware

Model

barnes

bodytrack

water-interf

water-poteng

streamcluster

blackscholes

barnes

bodytrack

water-interf

water-poteng

streamcluster

blackscholes

medium

medium

medium

medium

medium

medium

heavy

heavy

heavy

heavy

heavy

heavy

DRAM

Refresh

Rate

(ref/msec)

0.0156

0.0156

0.0156

0.0156

0.0156

0.0156

0.0156

0.0156

0.0156

0.0156

0.0156

0.0156

Task

Failure

Rate

(fail/msec)

16.9186

1.9430

24.0756

15.8858

2.0362

5.5080

121.4956

10.9450

204.1373

540.8827

10.1080

48.9866

Outlier

Rejection

Rate

(rej/msec)

4.9998

0.9008

16.8519

10.8981

1.5786

3.3248

37.5098

1.5790

109.4444

69.0448

7.2581

26.5767

Table 4.5: DRAM Refresh Rate

We analyzed the number of observed DRAM errors in all of our applications (recall

that our approximate hardware does not refresh the DRAM that stores the task data)

and found there is only one DRAM error in the entire benchmark set. We attribute

this phenomenon to the fact that 1) Topaz rewrites the task data after every failed

or reexecuted task, 2) all of the tasks make significant use of the SRAM floating

point cache, and 3) SRAM floating point read errors are significantly more likely that

DRAM read errors. Table 4.5 presents the per-millisecond refresh rate of the task

data (column 6) compared to the minimum per-millisecond refresh rate of DRAM

as specified by the JEDEC standards commission (column 3) [35]. We observe other

errors cause tasks to generate incorrect results frequently enough so that the task in

the no-refresh DRAM is almost always fresh enough to incur a negligible chance of

encountering a DRAM corruption.

51

4.7

Related Work

We discuss related work in software approximate computing, approximate memories,

and approximate arithmetic operations for energy-efficient hardware.

4.7.1

Approximate Hardware Platforms

Researchers have previously proposed multiple hardware architecture designs that improve the performance or energy consumption of processors by reducing precision or

increasing the incidence of error [26, 24, 17, 16, 31, 10, 37, 14, 38, 36, 9]. Researchers

have also explored various designs for approximate DRAM and SRAM memories to

save energy. To save energy of DRAM memories, researchers proposed downgrading the refresh rate [18, 10] or using different underlying substrate [32], For SRAM

memories, researchers proposed decreasing operating voltage [10, 33]. Topaz targets

a wide variety of approximate hardware, and has been tested on a subset of these

models.

Researchers have designed approximate hardware systems with a single precise

core and many approximate cores. Researchers have adopted the many approximate

core-single precise core computational model into hardware [17, 25].

Not all approximate hardware is easy to model. Researchers have done experiments on systems with hard to quantify fault characteristics - such as undervolted

and overclocked hardware - and found them hard to model or quantify [15, 17, 20]. In

industry, hardware component manufacturers sacrifice some performance, die space

and energy into error correction hardware to reduce the effects of manufacturing

defects [131. These difficult to describe pieces of hardware are good targets for Topaz.

4.7.2

Programming Models for Approximate Hardware

Researches have previously investigated using task-checker structures to utilize approximate hardware. Relax is a task-based programming language and ISA extension

that supports manually developed checker computations [8]. If a checker computation fails, Relax allows a developer to specify a custom recovery mechanism. Though

52

Topaz is also task-based, it operates entirely in software, and does not require ISA

extensions to work. In contrast to Relax, Topaz uses online outlier detector as an automatic checking mechanism and automatically re-executes tasks as a default recovery

mechanism.

Researchers have also investigated language features that allow developers to annotate approximate data and operations. Flikker provides a C API, which developers

can use to specify data to store in unreliable memory. Enerj provides a type system

to specify data that may be placed in unreliable memories or computed using approximate computations. Rely provides a specification language that developers can

use to specify quantitative reliability requirements and an analysis that verifies that

Rely programs satisfy these requirements when run on approximate hardware [6].

Unlike these systems, Topaz does not require the developer to provide such fine grain

annotations.

Recently, researchers have sought to lessen programmer burden by automatically

determining the placement of approximate structures and annotations. Chisel allows a

developer to express accuracy and reliability constraints and automates the placement

of unreliable operations for a Rely program by framing the provided energy model

and program as an integer programming problem [21]. Like Chisel, ExpAX searches

for approximations that minimize energy usage subject to accuracy and reliability

constraints using genetic programming [27].

Both Chisel and ExpAX remove the

need for the programmer to specify approximate operations. But, unlike Topaz, they

still require the programmer provide software and hardware specifications.

53

54

Chapter 5

Conclusion

As energy consumption becomes an increasingly critical issue, practitioners will increasingly look to approximate computing as a general approach that can reduce the

energy required to execute their computations while still enabling their computations

to produce acceptably accurate results.

Topaz enables developers to cleanly express the approximate tasks present in their

approximate computations. The Topaz implementation then maps the tasks appropriately onto the underlying approximate computing platform and manages the resulting

distributed approximate execution. The Topaz execution model gives approximate

hardware designers the freedom and flexibility they need to produce maximally efficient approximate hardware -

the Topaz outlier detection and reexecution algo-

rithms enable Topaz computations to work with approximate computing platforms

even if the platform may occasionally produce arbitrarily inaccurate results. Topaz

therefore supports the emerging and future approximate computing platforms that

promise to provide an effective, energy-efficient computing substrate for existing and

future approximate computations.

55

56

Chapter 6

Appendices

The following appendices contain supplementary figures.

6.1

Outlier Detector Bounds over Time

The following series of plots outlines the evolution of the outlier detector regions over

time. The y axis is the execution time, the x axis is the range of values. The shaded

boxes are the hypercubes the outlier detector keeps track of. The green crosses are

correct data points. The red triangles are erroneous data points. Each plot is one

element of the AOV for a particular taskset.

57