ON CENTRAL LIMIT THEOREMS FOR VECTOR RANDOM MEASURES AND MEASURE-VALUED PROCESSES

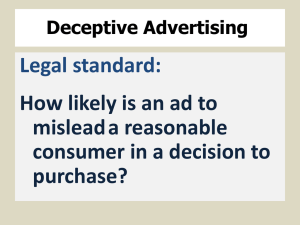

advertisement

THEORY PROBAB. APPL.

Vol. 46, No. 3

ON CENTRAL LIMIT THEOREMS FOR VECTOR RANDOM MEASURES AND MEASURE-VALUED PROCESSES∗

Z. G. SU†

Abstract. Let $B$ be a separable Banach space. Suppose that $(F, F_i, i\ge 1)$ is a sequence of independent identically distributed (i.i.d.) and symmetric independently scattered (s.i.s.) $B$-valued random measures. We first establish the central limit theorem for $Y_n=\frac{1}{\sqrt n}\sum_{i=1}^nF_i$ by taking the viewpoint of random linear functionals on Schwartz distribution spaces. Then, let $(X, X_i, i\ge 1)$ be a sequence of i.i.d. symmetric $B$-valued random vectors and $(B, B_i, i\ge 1)$ a sequence of independent standard Brownian motions on $[0,1]$ independent of $(X, X_i, i\ge 1)$. The central limit theorem for the measure-valued processes $Z_n(t)=\frac{1}{\sqrt n}\sum_{i=1}^nX_i\,\delta_{B_i(t)}$, $t\in[0,1]$, will be investigated in the same framework. Our main results concerning $Y_n$ differ from those of D. H. Thang [Probab. Theory Related Fields, 88 (1991), pp. 1–16] in that we treat $F$ as a whole, while the results related to $Z_n$ extend those of I. Mitoma [Ann. Probab., 11 (1983), pp. 989–999] to random weighted masses.

Key words. central limit theorems, Gaussian processes, random vector measures, Schwartz spaces

PII. S0040585X97979111

1. Introduction. The concept of measure-valued processes originates in the study of the evolution of a population over time. Consider a population each of whose individuals is represented by its state $x\in\mathbb R$. Assume that the state of the population is completely described by the states of the individuals $\{x_i, i\in I(t)\}$, where $I(t)$ is the set of individuals alive at time $t$. A well-established representation of such a population is obtained by setting

$$X(t)=\sum_{i\in I(t)}\varepsilon\,\delta_{x_i}, \tag{1.1}$$

where $\delta_x$ denotes the Dirac measure at the point $x$, and $\varepsilon$ is a normalizing factor, which may be one or may represent the mass of each particle if the individuals are particles.

In many situations one is led to consider sequences $X_n(\cdot)$ of processes of this type and to study the limit of their laws; see [5], [7], and [10]. Let $\{B_k(t), t\in[0,1]\}$, $k=1,2,\dots$, be a sequence of independent one-dimensional Brownian motions with $B_k(0)=0$ for each $k\ge1$. Define a sequence of measure-valued processes $X_n(t,\cdot)$ as follows. For a Borel subset $A\in\mathcal B(\mathbb R)$, let

$$N_n(t,A)=\sum_{k=1}^n\delta_{B_k(t)}(A)$$

∗ Received by the editors September 16, 1997. This work was supported by the Foundations of National Natural Science of China and Zhejiang Province.

http://www.siam.org/journals/tvp/46-3/97911.html

† Department of Mathematics, Hangzhou University, 310028, China (zgsu@mail.hz.zj.cn).


and

$$X_n(t,A)=\frac{1}{\sqrt n}\big(N_n(t,A)-EN_n(t,A)\big)=\frac{1}{\sqrt n}\sum_{k=1}^n\Big(\delta_{B_k(t)}(A)-P\big(B_k(t)\in A\big)\Big). \tag{1.2}$$

Since $X_n(t,A)$ becomes extremely irregular as a measure in $A$ when $n$ becomes large, one cannot expect the limit process $X(t)$ to be measure-valued. However, letting $S$ be the Schwartz space of rapidly decreasing functions and $S'$ its dual, we can regard $X_n(t,\cdot)$ as a distribution-valued stochastic process $X_n(t)$ by setting

$$X_n(t)(\varphi)=\int_{\mathbb R}\varphi(x)\,X_n(t,dx)=\frac{1}{\sqrt n}\sum_{k=1}^n\Big(\varphi\big(B_k(t)\big)-E\varphi\big(B_k(t)\big)\Big),\qquad\varphi\in S. \tag{1.3}$$

Both Itô [5] and Mitoma [7] show that there exists a distribution-valued stochastic process $X$ whose sample paths are elements of $C([0,1],S')$ such that $X_n$ converges weakly to $X$.
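As a minimal numerical sketch (not taken from the paper), the following Python code draws samples of $X_n(t)(\varphi)$ in (1.3) for the illustrative test function $\varphi(x)=e^{-x^2/2}$. Because $B_k(t)\sim N(0,t)$, the centering constant has the closed form $E\varphi(B_k(t))=(1+t)^{-1/2}$, and every $X_n(t)(\varphi)$ has variance $\operatorname{Var}\varphi(B(t))=(1+2t)^{-1/2}-(1+t)^{-1}$. All function names are hypothetical.

```python
import math
import random

def phi(x: float) -> float:
    """Illustrative test function phi(x) = exp(-x^2/2), an element of S."""
    return math.exp(-0.5 * x * x)

def mean_phi(t: float) -> float:
    """E phi(B(t)) for B(t) ~ N(0, t); equals (1 + t)^(-1/2)."""
    return 1.0 / math.sqrt(1.0 + t)

def limit_variance(t: float) -> float:
    """Var phi(B(t)) = E exp(-B(t)^2) - (E phi(B(t)))^2 = (1 + 2t)^(-1/2) - (1 + t)^(-1)."""
    return 1.0 / math.sqrt(1.0 + 2.0 * t) - 1.0 / (1.0 + t)

def sample_x_n(t: float, n: int, rng: random.Random) -> float:
    """One draw of X_n(t)(phi) from (1.3), with independent B_k(t) ~ N(0, t)."""
    centre = mean_phi(t)
    total = sum(phi(rng.gauss(0.0, math.sqrt(t))) - centre for _ in range(n))
    return total / math.sqrt(n)
```

For instance, the sample variance of a few thousand draws of `sample_x_n(0.5, 50, rng)` should be close to `limit_variance(0.5)`, in line with the Gaussian limit described above.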

One principal purpose of this paper is to extend the above result by assigning a random mass to each individual. Let $B$ be a separable Banach space, and let $(X_i, i\ge1)$ be a sequence of independent identically distributed (i.i.d.) symmetric $B$-valued random variables, independent of all the Brownian motions. Define

$$Z_n(t)=\frac{1}{\sqrt n}\sum_{i=1}^nX_i\,\delta_{B_i(t)},\qquad t\in[0,1]. \tag{1.4}$$

Theorem 4.3 gives the limit law of the corresponding processes $Z_n$, $n\ge1$.

For a fixed point $t_0\in[0,1]$ we have

$$Z_n(t_0)=\frac{1}{\sqrt n}\sum_{i=1}^nX_i\,\delta_{B_i(t_0)},\qquad n\ge1.$$

This is a sequence of normalized sums of vector random measures, a special case of the following more general form. Let $(F_i, i\ge1)$ be a sequence of i.i.d. and symmetric independently scattered (s.i.s.) $B$-valued random measures defined on $(\mathbb R,\mathcal R)$. Let

$$X_n=\frac{1}{\sqrt n}\sum_{i=1}^nF_i. \tag{1.5}$$

In section 3 we study the limit law of $X_n$. Our basic idea differs from Thang's [8] in that we regard $X_n$ as a random linear functional defined on the Schwartz space $S$.

Section 2 introduces notation for vector random measures and Schwartz distribution spaces. Lemma 2.1, Proposition 2.1, and Proposition 4.1 are of fundamental importance for weak convergence of distribution-valued random variables, while Proposition 2.2 shows how to realize random linear functionals.

Throughout the paper, unless specifically stated otherwise, c will denote a positive

constant, which may be different from line to line.


2. Basic concepts and preliminary statements. Let $B$ be a separable Banach space and let $(\mathbb R,\mathcal R,\mu)$ be a Lebesgue measure space. A set function $F:\mathcal R\to L^0(\Omega,\mathcal F,P;B)$ is called a $B$-valued s.i.s. random measure on $\mathcal R$ if

(i) for every sequence $(E_n)$ of disjoint sets from $\mathcal R$, the random variables $F(E_1),F(E_2),\dots$ are symmetric and independent;

(ii) for every sequence $(E_n)$ of disjoint sets from $\mathcal R$ we have

$$F\Big(\bigcup_{n=1}^\infty E_n\Big)=\sum_{n=1}^\infty F(E_n) \tag{2.1}$$

a.s. in the norm topology of $B$.

The Lebesgue measure $\mu$ on $\mathcal R$ is called a control measure for $F$ if $F(E)=0$ a.s. whenever $\mu(E)=0$.

We can define random integrals of real-valued functions with respect to vector-valued s.i.s. random measures as usual. Suppose that $F$ is a $B$-valued s.i.s. random measure with control measure $\mu$. If $f=\sum_{i=1}^na_iI_{A_i}$ is a real-valued simple function on $\mathbb R$, where the $A_i$ are disjoint sets, then its integral with respect to $F$ is defined by $\int_{\mathbb R}f\,dF=\sum_{i=1}^na_iF(A_i)$. Moreover, a function $f$ on $\mathbb R$ is said to be $F$-integrable if there exists a sequence of simple functions $f_n$ such that

(i) $f_n(t)\to f(t)$, $\mu$-a.e.;

(ii) the sequence $\int_{\mathbb R}f_n\,dF$ converges in probability.

If $f$ is $F$-integrable, then we put $\int_{\mathbb R}f\,dF=P\text{-}\lim_{n\to\infty}\int_{\mathbb R}f_n\,dF$.

By saying that $\{F_n, n\ge1\}$ are i.i.d. random measures, we mean that $\{F_n(E), E\in\mathcal R\}$, $n\ge1$, are i.i.d. as a sequence of random processes on $(\Omega,\mathcal F,P)$ indexed by the σ-field $\mathcal R$. We refer to Theorem 4.3 in [8] for comparison. The statement that $\{F_n, n\ge1\}$ are independent copies of $F$ only means that, for each $E$, the random vectors $\{F_n(E), n\ge1\}$ are independent and have the same distribution as $F(E)$. Suppose that $\{F,F_n,n\ge1\}$ is a sequence of $B$-valued s.i.s. random measures on $\mathcal R$. Note that for any finite sequence $(E_1,E_2,\dots,E_k)$ in $\mathcal R$ there exists a finite family $\mathcal A_p=(A_1,A_2,\dots,A_p)$ of disjoint sets such that each $E_i$ is the union of some members of $\mathcal A_p$. Hence each $F_n(E_i)$ can be expressed as a linear combination of $\{F_n(A_j), 1\le j\le p\}$,

$$F_n(E_i)=\sum_{j=1}^pb_{ij}F_n(A_j),$$

where each $b_{ij}$ equals 0 or 1 and does not depend on $n$. Consequently, for all $n\ge1$,

$$\begin{pmatrix}F_n(E_1)\\ \vdots\\ F_n(E_k)\end{pmatrix}=\mathbf B\begin{pmatrix}F_n(A_1)\\ \vdots\\ F_n(A_p)\end{pmatrix}, \tag{2.2}$$

where $\mathbf B=(b_{ij})$. Since the $A_j$ are disjoint, the variables $F_n(A_1),\dots,F_n(A_p)$ are independent by (i), so their joint law is determined by their marginal laws. From this we see that "for each $E\in\mathcal R$, $F_n(E)$ has the same distribution as $F(E)$" is equivalent to saying that $\{F_n(E), E\in\mathcal R\}$ has the same distribution as $\{F(E), E\in\mathcal R\}$.
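The combinatorial step behind (2.2), refining finitely many sets into disjoint atoms and reading off the 0/1 coefficients $b_{ij}$, can be sketched in Python. Finite sets of integers stand in for the Borel sets $E_i$ (an assumption made only for illustration), and `atoms_and_matrix` is a hypothetical helper name. The atoms are the classes of points sharing the same membership pattern; they cover only $\bigcup_iE_i$, which is all that (2.2) needs.

```python
from typing import Dict, FrozenSet, List, Sequence, Set, Tuple

def atoms_and_matrix(
    sets: Sequence[FrozenSet[int]],
) -> Tuple[List[FrozenSet[int]], List[List[int]]]:
    """Refine E_1, ..., E_k into disjoint atoms A_1, ..., A_p covering their union and
    return (atoms, b) with E_i equal to the union of the A_j for which b[i][j] == 1."""
    universe: Set[int] = set().union(*sets)
    groups: Dict[Tuple[bool, ...], Set[int]] = {}
    for point in sorted(universe):
        # Points with the same membership pattern (in E_1?, ..., in E_k?) share an atom.
        signature = tuple(point in e for e in sets)
        groups.setdefault(signature, set()).add(point)
    signatures = sorted(groups)  # fixed order of the atoms
    atoms = [frozenset(groups[sig]) for sig in signatures]
    # b_ij = 1 exactly when atom A_j lies inside E_i; the entries are 0 or 1.
    b = [[1 if sig[i] else 0 for sig in signatures] for i in range(len(sets))]
    return atoms, b
```

The matrix `b` depends only on the sets, not on any random measure, which mirrors the remark that the $b_{ij}$ do not depend on $n$.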

Now assume that we are given an s.i.s. random measure $F$ and a sequence $\{F_n, n\ge1\}$ of independent copies of $F$. Define $X_n=\frac{1}{\sqrt n}\sum_{i=1}^nF_i$, $n\ge1$. As usual, we would like to regard $F$ as a random vector in some topological vector space. Denoting by $\mathcal F(\mathcal R;B)$ the set of all countably additive set functions from $\mathcal R$ into $B$, we see at a glance that such a topological vector space should be $\mathcal F(\mathcal R;B)$ equipped with


the weak topology (i.e., $F_n$ converges weakly to $F$ if for each bounded continuous function $f$, $\lim_{n\to\infty}\int_{\mathbb R}f\,dF_n=\int_{\mathbb R}f\,dF$ in the norm of $B$; see [1] for the weak topology). Unfortunately, the preceding definition of random measure does not guarantee $F(\omega)\in\mathcal F(\mathcal R;B)$ for all or almost all $\omega\in\Omega$. In addition, the weak topology on $\mathcal F(\mathcal R;B)$ is itself difficult to handle. To avoid these difficulties we shall instead study the sequence of random linear mappings induced by $X_n$, as Itô [5] and Mitoma [7] did.

Next we recall some known facts on Schwartz distributions. $D$ and $D'$ denote the space of $C^\infty$-functions of compact support and the space of Schwartz distributions, respectively. $H_n$ is the Hermite polynomial of degree $n$ and $h_n$ is the corresponding Hermite function, i.e., $h_n(x)=c_nH_n(x)e^{-x^2/2}$, $n=0,1,2,\dots$, where $c_n=(\pi^{1/2}2^nn!)^{-1/2}$. The Hermite functions form an orthonormal basis (ONB) in $L^2(\mathbb R)$. The $p$-norm $\|\cdot\|_p$ on $L^2(\mathbb R)$ and the $(-p)$-norm $\|\cdot\|_{-p}$ on $D'$ are defined as follows:

$$\|\varphi\|_p^2=\sum_{n=0}^\infty(\varphi,h_n)^2(2n+1)^p,\qquad\|f\|_{-p}^2=\sum_{n=0}^\infty f(h_n)^2(2n+1)^{-p}, \tag{2.3}$$

where $p=0,1,2,\dots$ and $f(\varphi)$ denotes the value of $f$ at $\varphi\in D$.
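The norms in (2.3) can be explored numerically. The sketch below (an illustration, not part of the paper) computes Hermite functions by the standard three-term recurrence, which agrees with $h_n=c_nH_ne^{-x^2/2}$ under the normalization above, approximates $(\varphi,h_n)$ by the trapezoidal rule on a truncated interval, and truncates the series at `n_max`; the grid, interval, and truncation are assumptions. For $\varphi(x)=e^{-x^2/2}=\pi^{1/4}h_0(x)$ every $\|\varphi\|_p$ equals $\pi^{1/4}$, which gives a convenient check.

```python
import math
from typing import Callable, List

def hermite_functions(n_max: int, x: float) -> List[float]:
    """[h_0(x), ..., h_{n_max}(x)] via h_0 = pi^(-1/4) e^{-x^2/2}, h_1 = sqrt(2) x h_0 and
    h_{n+1} = sqrt(2/(n+1)) x h_n - sqrt(n/(n+1)) h_{n-1}."""
    h = [math.pi ** -0.25 * math.exp(-0.5 * x * x)]
    if n_max >= 1:
        h.append(math.sqrt(2.0) * x * h[0])
    for n in range(1, n_max):
        h.append(math.sqrt(2.0 / (n + 1)) * x * h[n] - math.sqrt(n / (n + 1)) * h[n - 1])
    return h

def hermite_coefficients(phi: Callable[[float], float], n_max: int,
                         half_width: float = 12.0, steps: int = 4000) -> List[float]:
    """Trapezoidal-rule approximation of (phi, h_n), n = 0..n_max, on [-half_width, half_width]."""
    dx = 2.0 * half_width / steps
    coeffs = [0.0] * (n_max + 1)
    for i in range(steps + 1):
        x = -half_width + i * dx
        weight = 0.5 if i in (0, steps) else 1.0
        fx = phi(x)
        for n, hn in enumerate(hermite_functions(n_max, x)):
            coeffs[n] += weight * fx * hn * dx
    return coeffs

def p_norm(phi: Callable[[float], float], p: int, n_max: int = 30) -> float:
    """Truncated ||phi||_p from (2.3): sqrt(sum_{n <= n_max} (phi, h_n)^2 (2n+1)^p)."""
    coeffs = hermite_coefficients(phi, n_max)
    return math.sqrt(sum(c * c * (2 * n + 1) ** p for n, c in enumerate(coeffs)))
```

For a function with slowly decaying Hermite coefficients, increasing `p` makes the truncated norm grow, which is the numerical face of the decreasing chain $S_0\supset S_1\supset S_2\supset\cdots$.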

It is clear that

$$\|f\|_{-p}=\sup\{|f(\varphi)|:\varphi\in D,\ \|\varphi\|_p\le1\},$$

which implies

$$|f(\varphi)|\le\|f\|_{-p}\|\varphi\|_p,\qquad f\in D',\ \varphi\in D.$$

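Define $S_p$, $(\varphi,\psi)_p$, $S_p'$, and $(f,g)_{-p}$ as follows:

$$\begin{aligned}S_p&=\{\varphi\in L^2(\mathbb R):\|\varphi\|_p<\infty\}, & S_p'&=\{f\in D':\|f\|_{-p}<\infty\},\\ (\varphi,\psi)_p&=\sum_{n=0}^\infty(\varphi,h_n)(\psi,h_n)(2n+1)^p, & (f,g)_{-p}&=\sum_{n=0}^\infty f(h_n)\,g(h_n)(2n+1)^{-p}.\end{aligned} \tag{2.4}$$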

Then $(S_p,(\cdot,\cdot)_p)$ and $(S_p',(\cdot,\cdot)_{-p})$ are Hilbert spaces with the norms $\|\cdot\|_p$ and $\|\cdot\|_{-p}$, respectively. As $p$ increases, $\|\cdot\|_p$ increases and $\|\cdot\|_{-p}$ decreases, so $S_p$ decreases and $S_p'$ increases. The intersection $\bigcap_pS_p$ coincides with the space $S$ of rapidly decreasing functions and the union $\bigcup_pS_p'$ with the space $S'$ of tempered distributions. It follows from the definition that $S_0=L^2(\mathbb R)$, $\|\cdot\|_0=\|\cdot\|$, and $(\cdot,\cdot)_0=(\cdot,\cdot)$. By identifying $\psi\in L^2(\mathbb R)$ with $[\psi]\in D'$, where $[\psi](\varphi):=(\psi,\varphi)$, we have $L^2=S_0'$, $\|\cdot\|=\|\cdot\|_{-0}$, and $(\cdot,\cdot)=(\cdot,\cdot)_{-0}$. Hence

$$D\subset S\subset\cdots\subset S_2\subset S_1\subset S_0=L^2(\mathbb R)=S_0'\subset S_1'\subset\cdots\subset S'\subset D'. \tag{2.5}$$

Moreover, $S$ is a nuclear Fréchet space, and its strong dual $S'$ is also nuclear.


Let $L(S,B)$ be the set of all continuous linear mappings from $S$ into $B$. We equip $L(S,B)$ with the strong topology, i.e., the topology of uniform convergence on all bounded subsets of $S$, and denote the resulting space by $L_b(S,B)$. This is a completely regular topological vector space. Analogously, $L_b(S_p,B)$, the space of bounded linear operators from $S_p$ into $B$ with the operator norm, is a Banach space.

The following lemma, due to Le Cam (see [10, Thm. 6.7]), is an extension of Prokhorov's theorem.

Lemma 2.1. Let $E$ be a completely regular topological space such that all compact sets are metrizable. If $\{P_n, n\ge1\}$ is a uniformly tight sequence of probability measures on $E$, then there exist a subsequence $(n_k)$ and a probability measure $Q$ such that $P_{n_k}\Rightarrow Q$.

To apply Lemma 2.1 to Lb (S, B) we need the following proposition.

Proposition 2.1. If K is compact in Lb (S, B), then there exists a natural

number p such that K is compact in Lb (Sp , B).

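Proof. For each $\varphi\in S$ define $\pi_\varphi:L_b(S,B)\to B$ by $\pi_\varphi T=T\varphi$, $T\in L_b(S,B)$. If $T_n\to T$ in the strong topology, then $\|\pi_\varphi T_n-\pi_\varphi T\|=\|T_n\varphi-T\varphi\|\to0$. So $\pi_\varphi$ is a continuous linear mapping. Thus $\{\pi_\varphi T, T\in K\}$ is compact in $B$ whenever $K$ is compact in $L_b(S,B)$. This implies

$$\sup_{T\in K}\|T\varphi\|<\infty\qquad\text{for every }\varphi\in S. \tag{2.6}$$

By the Banach–Steinhaus theorem (see [9, Thm. 33.1]), $K$ is equicontinuous in $L_b(S,B)$; i.e., for any neighborhood $V$ of zero in $B$ there is a neighborhood $U$ of zero in $S$ such that $T\varphi\in V$ for all $T\in K$ and all $\varphi\in U$. In particular, taking $V=\{x:\|x\|\le1\}$, $U$ contains a basic neighborhood of the form $\{\varphi:\|\varphi\|_q<\varepsilon\}$, so that $\|T\varphi\|\le1$ whenever $\|\varphi\|_q<\varepsilon$ and $T\in K$. Thus, by homogeneity,

$$\sup_{T\in K}\|T\varphi\|\le\frac{c\,\|\varphi\|_q}{\varepsilon}\qquad\text{for all }\varphi\in S. \tag{2.7}$$

For such a $q$ there exists a natural number $p>q$ such that the natural embedding $i:S_p\to S_q$ is nuclear, and hence compact. The closure $\mathcal S$ (in $S_q$) of $\{\varphi:\|\varphi\|_p\le1\}$ is therefore compact in $S_q$, and so is the closure of $\{T\varphi: T\in K,\ \varphi\in\mathcal S\}$ in $B$.

On the other hand, $\sup_{T\in K}\|T\varphi-T\psi\|\le c\|\varphi-\psi\|_q$ by (2.7), so $K$ is equicontinuous on $\mathcal S$. If we consider $K$ as a subset of $C(\mathcal S,B)$, the space of continuous mappings from $\mathcal S$ into $B$ with the uniform topology, the required result follows from the Arzelà–Ascoli theorem.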

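Let us turn back to the existence of the random integral $\int_{\mathbb R}\varphi\,dF$ for a given $\varphi\in S$ and an s.i.s. random measure $F$ on $(\mathbb R,\mathcal R)$. Suppose that $B$ is a space of type 2 and that $E\|F(E)\|^2\le c\mu(E)$ for all $E\in\mathcal R$. Then, for any simple function $\varphi_n(t)=\sum_{i=1}^na_iI_{E_i}(t)$ with disjoint $E_i$,

$$E\Big\|\int_{\mathbb R}\varphi_n(t)\,dF\Big\|^2=E\Big\|\sum_{i=1}^na_iF(E_i)\Big\|^2\le c\sum_{i=1}^na_i^2E\|F(E_i)\|^2\le c\sum_{i=1}^na_i^2\mu(E_i)=c\int_{\mathbb R}\varphi_n^2(t)\,d\mu(t), \tag{2.8}$$

where the first inequality is the type 2 inequality applied to the independent symmetric vectors $a_iF(E_i)$. Applying (2.8) to differences $\varphi_n-\varphi_m$ of simple functions converging to $\varphi$ in $L^2(\mu)$ and $\mu$-a.e. shows that $\int_{\mathbb R}\varphi_n\,dF$ is Cauchy in $L^2(\Omega,\mathcal F,P;B)$, hence convergent in probability. Thus $\varphi$ is integrable with respect to the random measure $F$, and

$$E\Big\|\int_{\mathbb R}\varphi(t)\,dF\Big\|^2\le c\int_{\mathbb R}\varphi^2(t)\,d\mu(t)=c\|\varphi\|_0^2.$$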
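In a Hilbert space the type 2 inequality used in (2.8) holds with equality for independent mean-zero summands, so (2.8) can be checked by simulation. The sketch below assumes $B=\mathbb R^3$ with the Euclidean norm and a Gaussian white-noise random measure, $F(E)\sim N(0,\mu(E)I_3)$ independently over disjoint sets; this is one concrete s.i.s. random measure chosen for illustration, for which $E\|F(E)\|^2=3\mu(E)$, so the constant is $c=3$. The function names are hypothetical.

```python
import math
import random
from typing import List, Sequence

def integral_sample(coeffs: Sequence[float], masses: Sequence[float], dim: int,
                    rng: random.Random) -> List[float]:
    """One draw of int phi_n dF = sum_i a_i F(E_i) for Gaussian white noise in R^dim:
    F(E_i) ~ N(0, mu(E_i) I), independent over the disjoint sets E_i."""
    total = [0.0] * dim
    for a, mass in zip(coeffs, masses):
        for k in range(dim):
            total[k] += a * rng.gauss(0.0, math.sqrt(mass))
    return total

def second_moment(coeffs: Sequence[float], masses: Sequence[float], dim: int = 3,
                  samples: int = 20000, seed: int = 1) -> float:
    """Monte Carlo estimate of E || int phi_n dF ||^2 (Euclidean norm)."""
    rng = random.Random(seed)
    acc = 0.0
    for _ in range(samples):
        acc += sum(v * v for v in integral_sample(coeffs, masses, dim, rng))
    return acc / samples

def right_side(coeffs: Sequence[float], masses: Sequence[float], dim: int = 3) -> float:
    """c * int phi_n^2 d mu with c = dim, i.e. dim * sum_i a_i^2 mu(E_i)."""
    return dim * sum(a * a * m for a, m in zip(coeffs, masses))
```

Here the two sides agree up to Monte Carlo error; in a general type 2 space only the inequality in (2.8) is available.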


Whenever the random integral $\int_{\mathbb R}\varphi\,dF$ exists for each $\varphi\in S$, we can define a random linear mapping $T_F$ from $S$ into $B$ by $T_F(\varphi)=\int_{\mathbb R}\varphi\,dF$.

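Proposition 2.2. Let $Y=\{Y(\varphi):\varphi\in S\}$ be a family of $B$-valued random variables such that $Y(\cdot)$ is

(L) almost linear:
$$Y(C_1\varphi_1+C_2\varphi_2)=C_1Y(\varphi_1)+C_2Y(\varphi_2)\quad\text{a.s.},$$
where the exceptional $\omega$-set may depend on the choice of $(C_1,\varphi_1;C_2,\varphi_2)$;

(B) $p$-bounded:
$$E\|Y(\varphi)\|^2\le c\|\varphi\|_p^2.$$

Then $Y$ has an $L_b(S_{p+2},B)$ regularization $X$ (i.e., an $L_b(S_{p+2},B)$-valued random variable with $X(\varphi)=Y(\varphi)$ a.s. for each $\varphi\in S$) satisfying

$$E\|X\|_{-p-2}^2\le\frac{\pi^2c}{8}.$$

Here and in what follows we denote by $\|X\|_{-p-2}$ the operator norm of $X$ in $L_b(S_{p+2},B)$.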

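Proof. Conditions (L) and (B) imply that the map $Y:\varphi\mapsto Y(\varphi)$ is a bounded linear operator from the pre-Hilbert space $(S,\|\cdot\|_p)$ into $L^2(\Omega,\mathcal F,P;B)$. Since $S$ is dense in $S_p$, this map extends to a unique linear operator from $S_p$ into $L^2(\Omega,\mathcal F,P;B)$, still denoted by $Y$. Let $h_n$ be the Hermite functions; then $\varepsilon_n=(2n+1)^{-(p+2)/2}h_n\in S$, $n=0,1,2,\dots$, form an ONB in $S_{p+2}$. The ONB in $S_{p+2}'$ dual to $\{\varepsilon_n\}$ is denoted by $\{e_n\}$, so that $e_m(\varepsilon_n)=\delta_{mn}$. Since $\|\varepsilon_n\|_p^2=(2n+1)^{-2}$, it is clear that

$$E\sum_{n=0}^\infty\|Y(\varepsilon_n)\|^2\le c\sum_{n=0}^\infty\|\varepsilon_n\|_p^2=c\sum_{n=0}^\infty\frac{1}{(2n+1)^2}=\frac{\pi^2c}{8}<\infty. \tag{2.9}$$

This implies that $P(\Omega_1)=1$, where $\Omega_1=\{\omega\in\Omega:\sum_{n=0}^\infty\|Y(\varepsilon_n)(\omega)\|^2<\infty\}$. Define

$$X(\omega)(\varphi)=\begin{cases}\sum_{n=0}^\infty e_n(\varphi)\,Y(\varepsilon_n)(\omega) & \text{on }\Omega_1,\\ 0 & \text{otherwise}.\end{cases} \tag{2.10}$$

Then $X(\omega)\in L_b(S_{p+2},B)$ for every $\omega$, and $X(\omega)$ is measurable in $\omega$. Hence $X$ is an $L_b(S_{p+2},B)$-valued random variable and, by the Cauchy–Schwarz inequality and $\sum_ne_n(\varphi)^2=\|\varphi\|_{p+2}^2$,

$$E\|X\|_{-p-2}^2=E\sup_{\|\varphi\|_{p+2}\le1}\|X(\omega)(\varphi)\|_B^2=E\sup_{\|\varphi\|_{p+2}\le1}\sup_{f\in B_1'}\Big|\sum_{n=0}^\infty f\big(Y(\varepsilon_n)(\omega)\big)e_n(\varphi)\Big|^2\le\sum_{n=0}^\infty E\|Y(\varepsilon_n)\|_B^2\le\frac{\pi^2c}{8}, \tag{2.11}$$

where $B_1'$ denotes the unit ball of the dual space $B'$. Since $e_m(\varepsilon_n)=\delta_{mn}$ and $P(\Omega_1)=1$, it follows that $X(\varphi)=Y(\varphi)$ a.s. for $\varphi=\varepsilon_n$, $n=0,1,2,\dots$, and hence, by (L), for every finite linear combination of $\{\varepsilon_n\}$. For a general $\varphi\in S_{p+2}\subset S_p$ we have

$$\|\varphi-\varphi_n\|_{p+2}\to0\quad(n\to\infty),\qquad\varphi_n:=\sum_{k=0}^n(\varphi,\varepsilon_k)_{p+2}\,\varepsilon_k.$$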


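Since $X\in L_b(S_{p+2},B)$, it follows that

$$\|X(\varphi)-X(\varphi_n)\|\le\|X\|_{-p-2}\|\varphi-\varphi_n\|_{p+2}\to0$$

for every $\omega$. Also, we can use (B) to check that

$$E\|Y(\varphi)-Y(\varphi_n)\|^2\le c\|\varphi-\varphi_n\|_p^2\le c\|\varphi-\varphi_n\|_{p+2}^2\to0. \tag{2.12}$$

Thus $X(\varphi)=Y(\varphi)$ a.s. for every $\varphi\in S_{p+2}$.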
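The constant $\pi^2c/8$ in Proposition 2.2 is $c\sum_{n\ge0}\|\varepsilon_n\|_p^2=c\sum_{n\ge0}(2n+1)^{-2}$. The short Python check below (an illustration, not from the paper) computes partial sums of this series and verifies them against the telescoping tail bounds $\frac{1}{2(2N+1)}\le\sum_{n\ge N}(2n+1)^{-2}\le\frac{1}{2(2N-1)}$, which follow from $\frac{1}{(2n+1)(2n+3)}\le\frac{1}{(2n+1)^2}\le\frac{1}{(2n-1)(2n+1)}$.

```python
import math
from typing import Tuple

def odd_square_partial_sum(n_terms: int) -> float:
    """sum_{n=0}^{n_terms-1} (2n+1)^(-2), i.e. the partial sums of sum_n ||eps_n||_p^2."""
    return sum(1.0 / (2 * n + 1) ** 2 for n in range(n_terms))

def tail_bounds(n_terms: int) -> Tuple[float, float]:
    """Telescoping lower and upper bounds for sum_{n >= N} (2n+1)^(-2), N = n_terms >= 1."""
    return 1.0 / (2 * (2 * n_terms + 1)), 1.0 / (2 * (2 * n_terms - 1))
```

The gap between $\pi^2/8$ and the $N$-term partial sum is of order $1/(4N)$, so the series converges slowly, but only its finiteness matters in (2.9).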

3. The CLT for vector random measures. After these preparations, we can regard $X_n=\frac{1}{\sqrt n}\sum_{i=1}^nF_i$ as an $L_b(S,B)$-valued random vector by setting $X_n(\varphi)=\frac{1}{\sqrt n}\sum_{i=1}^n\int_{\mathbb R}\varphi\,dF_i$, $\varphi\in S$. Our study of weak convergence for random measures is based on this viewpoint.

Now we shall state and prove our main results.

Theorem 3.1. Suppose that $B$ is a space of type 2 and that $\{F,F_n,n\ge1\}$ is a sequence of i.i.d. s.i.s. random measures on $(\mathbb R,\mathcal R,\mu)$. If $E\|F(E)\|^4\le c\mu^2(E)$ for all $E\in\mathcal R$, and if $\{F(E)/\mu^{1/2}(E): E\in\mathcal R,\ \mu(E)>0\}$ is uniformly tight in $B$, then there exists an $L_b(S,B)$-valued Gaussian vector $G$ such that $\{X_n(\varphi),\varphi\in S\}$ converges weakly to $G$.

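Proof. By Lemma 2.1 (which applies to $L_b(S,B)$ because, by Proposition 2.1, every compact subset of $L_b(S,B)$ is compact in the Banach space $L_b(S_p,B)$ for some $p$, hence metrizable), it suffices to verify the following two statements:

(i) $X_n$ is uniformly tight in $L_b(S,B)$; i.e., for any $\varepsilon>0$ there is a compact set $K\subset L_b(S,B)$ such that

$$\sup_nP\{\omega:X_n(\omega)\notin K\}<\varepsilon; \tag{3.1}$$

(ii) for each $f\in L_b(S,B)'$, $f(T_F)$ satisfies the classical CLT.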

Let us first prove (i). If K is compact in Lb (Sq , B) for some q, then K is also

compact in Lb (S, B) since the injection of Lb (Sq , B) into Lb (S, B) is continuous.

In the scalar case (i.e., $B=\mathbb R$), for every $p\in\mathbb N$ there is a $q>p$, $q\in\mathbb N$, such that the natural embedding $i:S_q\to S_p$ is nuclear and so compact; thus its adjoint $i^*:S_p'\to S_q'$ is also compact (see [2, Thm. VI.5.2]). Equivalently, any bounded subset of $S_p'$ is relatively compact in $S_q'$.

In the general case (i.e., where $B$ is a separable Banach space), however, a bounded subset of $L_b(S_p,B)$ need not be relatively compact in $L_b(S_q,B)$. So we modify the argument as follows.

For each $p\in\mathbb N$, $k>0$, and compact subset $K_B$ of $B$, define

$$K=\{T:\|T\|_{-p}\le k\}\cap\{T:T\varphi\in K_B\text{ whenever }\|\varphi\|_q\le1\}, \tag{3.2}$$

where $q$ is such that $i:S_q\to S_p$ is nuclear.

We claim that $K$ is relatively compact in $L_b(S_q,B)$.

Indeed, let $\mathcal S$ be the closure of $\{\varphi:\|\varphi\|_q\le1\}$ in $S_p$; then $\mathcal S$ is compact in $S_p$. Since $\|T\varphi-T\psi\|\le k\|\varphi-\psi\|_p$ for $T\in K$, the set $K$ is equicontinuous on $\mathcal S$. In addition, $\{T\varphi: T\in K,\ \varphi\in\mathcal S\}\subset K_B$. Thus, considering $K$ as a subset of $C(\mathcal S,B)$, the space of continuous mappings from $\mathcal S$ into $B$, we deduce from the Arzelà–Ascoli theorem that $K$ is relatively compact in $C(\mathcal S,B)$ and thus in $L_b(S_q,B)$.

Thus in order to verify (i) it is enough to show that


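(i′) for each $\varepsilon>0$ there exist $p,q\in\mathbb N$ ($q>p$), $k>0$, and a compact subset $K_B\subset B$ such that

$$\sup_nP\{\omega:\|X_n\|_{-p}>k\}<\varepsilon, \tag{3.3}$$

$$\sup_nP\{\omega:X_n(\varphi)\notin K_B\text{ for some }\varphi\text{ with }\|\varphi\|_q\le1\}<\varepsilon. \tag{3.4}$$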

By the well-known fact (see [6, Lem. 2.2]) that a subset $K_B$ of $B$ is relatively compact if and only if it is bounded and, for each $\varepsilon>0$, there is a finite-dimensional closed subspace $F$ of $B$ such that $\sup_{x\in K_B}q_F(x)<\varepsilon$, where $q_F(x)=\inf_{y\in F}\|x-y\|$ is the quotient norm, we need only check the following statement.

For each $\varepsilon>0$ there exist $p,q\in\mathbb N$ ($q>p$), $k>0$, and a finite-dimensional closed subspace $F$ of $B$ such that

$$\sup_nP\{\omega:\|X_n\|_{-p}>k\}<\varepsilon, \tag{3.5}$$

$$\sup_nP\Big\{\omega:\sup_{\|\varphi\|_q\le1}q_F\big(X_n(\varphi)\big)>\varepsilon\Big\}<\varepsilon. \tag{3.6}$$
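The quotient norm $q_F(x)=\inf_{y\in F}\|x-y\|$ in (3.6) is easy to compute when $B$ is a Euclidean space: it is the length of the component of $x$ orthogonal to $F$. The sketch below assumes $B=\mathbb R^d$ with the Euclidean norm; in a general Banach space $q_F$ is a best-approximation problem and orthogonal projection does not apply. `quotient_norm` and `gram_schmidt` are hypothetical helper names.

```python
import math
from typing import List, Sequence

def gram_schmidt(vectors: Sequence[Sequence[float]], tol: float = 1e-12) -> List[List[float]]:
    """Orthonormal basis of span(vectors) in R^d with the Euclidean inner product."""
    basis: List[List[float]] = []
    for v in vectors:
        w = list(v)
        for b in basis:
            proj = sum(wi * bi for wi, bi in zip(w, b))
            w = [wi - proj * bi for wi, bi in zip(w, b)]
        norm = math.sqrt(sum(wi * wi for wi in w))
        if norm > tol:  # skip vectors that are (numerically) linearly dependent
            basis.append([wi / norm for wi in w])
    return basis

def quotient_norm(x: Sequence[float], spanning: Sequence[Sequence[float]]) -> float:
    """q_F(x) = inf_{y in F} ||x - y|| for F = span(spanning) in Euclidean R^d,
    computed as the length of the component of x orthogonal to F."""
    residual = list(x)
    for b in gram_schmidt(spanning):
        proj = sum(ri * bi for ri, bi in zip(residual, b))
        residual = [ri - proj * bi for ri, bi in zip(residual, b)]
    return math.sqrt(sum(ri * ri for ri in residual))
```

In this finite-dimensional toy setting, the compactness criterion from [6, Lem. 2.2] amounts to finding a subspace $F$ for which `quotient_norm(x, F)` is uniformly small over the set in question.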

This will in turn be done in two steps.

Step 1. For each $\varepsilon>0$ there exist $p\in\mathbb N$ and $k>0$ such that

$$\sup_nP\Big\{\omega:\sup_{\|\varphi\|_p\le1}\|X_n(\varphi)\|>k\Big\}<\varepsilon. \tag{3.7}$$

The idea behind the proof of (3.7) is similar to that of Theorem 6.12 in [10].

(1) For any $\varepsilon>0$ there exist $m$ and $\delta>0$ such that

$$\|\varphi\|_m<\delta\quad\text{implies}\quad\sup_nE\big(\|X_n(\varphi)\|\wedge1\big)<\varepsilon. \tag{3.8}$$

To see this, consider the function $F(\varphi)=\sup_nE(\|X_n(\varphi)\|\wedge1)$, $\varphi\in S$. Then

(1.i) $F(0)=0$;

(1.ii) $F(\varphi)\ge0$ and $F(\varphi)=F(-\varphi)$;

(1.iii) $F(a\varphi)\le F(b\varphi)$ if $|a|\le|b|$;

(1.iv) $F$ is lower-semicontinuous on $S$.

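Indeed, if $\varphi_j\to\varphi$ in $S$, then $X_n(\varphi_j)\to X_n(\varphi)$ in $L^0(\Omega,\mathcal F,P;B)$, and hence $\|X_n(\varphi_j)\|\wedge1\to\|X_n(\varphi)\|\wedge1$ in probability. Then

$$F(\varphi)=\sup_nE\big(\|X_n(\varphi)\|\wedge1\big)\le\sup_n\liminf_jE\big(\|X_n(\varphi_j)\|\wedge1\big)\le\liminf_j\sup_nE\big(\|X_n(\varphi_j)\|\wedge1\big)=\liminf_jF(\varphi_j).$$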

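(1.v) $\lim_nF(\varphi/n)=0$.

For this, note that for any given $\varepsilon>0$ and $\varphi\in S$ there exists a $k>0$ such that, by Chebyshev's inequality, the type 2 property of $B$, and the bound following (2.8),

$$\sup_nP\{\omega:\|X_n(\varphi)\|>k\}\le\sup_n\frac{E\|X_n(\varphi)\|^2}{k^2}\le\frac{c\,E\|\int_{\mathbb R}\varphi\,dF\|^2}{k^2}\le\frac{c\|\varphi\|_0^2}{k^2}<\frac\varepsilon2.$$

Choose $M$ large enough that $k/M<\varepsilon/2$; then

$$F\Big(\frac\varphi M\Big)=\sup_nE\Big(\frac{\|X_n(\varphi)\|}{M}\wedge1\Big)\le\sup_nP\{\omega:\|X_n(\varphi)\|>k\}+\frac kM<\varepsilon,$$

and by (1.iii) the same bound holds for every larger $M$.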
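The estimate in (1.v) rests on the pointwise inequality $\frac yM\wedge1\le I(y>k)+\frac kM$ for $y\ge0$: on $\{y\le k\}$ the left side is at most $k/M$, and otherwise it is at most 1. The small Python check below averages both sides over an arbitrary nonnegative sample (exponential draws, chosen only for illustration, standing in for $\|X_n(\varphi)\|$); the helper names are hypothetical.

```python
import random
from typing import List, Sequence

def truncated_mean(values: Sequence[float], m: float) -> float:
    """Empirical version of E(Y/M wedge 1)."""
    return sum(min(v / m, 1.0) for v in values) / len(values)

def tail_plus_ratio(values: Sequence[float], k: float, m: float) -> float:
    """Empirical version of P(Y > k) + k/M, the bound used for F(phi/M)."""
    return sum(1 for v in values if v > k) / len(values) + k / m

def exponential_sample(n: int, seed: int = 2) -> List[float]:
    """Arbitrary nonnegative sample standing in for ||X_n(phi)||."""
    rng = random.Random(seed)
    return [rng.expovariate(0.5) for _ in range(n)]
```

Because the inequality holds pointwise, the averaged form holds for every sample, whatever its distribution.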


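Let $V=\{\varphi:F(\varphi)\le\varepsilon\}$. By (1.ii), (1.iv), and (1.v), $V$ is closed, symmetric, and absorbing. We claim that $V$ is a neighborhood of 0. Indeed, $S=\bigcup_nnV$, so by the Baire category theorem (recall that $S$ is a Fréchet space), one, and hence all, of the sets $nV$ must have a nonempty interior. The same argument applies to $V_{1/2}=\{\varphi:F(\varphi)\le\varepsilon/2\}$. Since $(a+b)\wedge1\le a\wedge1+b\wedge1$ for $a,b\ge0$, we have $F(\varphi-\psi)\le F(\varphi)+F(\psi)$, so $V\supset V_{1/2}-V_{1/2}$, and the latter set contains a neighborhood of 0. This neighborhood in turn contains a basic neighborhood, say $\{\varphi:\|\varphi\|_m<\delta\}$.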

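(2) For all $n\ge1$,

$$E\sup_{f\in B_1'}\mathrm{Re}\big(1-e^{i\langle f,X_n(\varphi)\rangle}\big)\le2\varepsilon\Big(1+\frac{\|\varphi\|_m^2}{\delta^2}\Big),\qquad\varphi\in S, \tag{3.9}$$

where $B_1'$ is the unit ball of $B'$. In fact, in view of (1) we have

$$\mathrm{Re}\big(1-e^{i\langle f,X_n(\varphi)\rangle}\big)=1-\cos\langle f,X_n(\varphi)\rangle\le\frac12\big(|\langle f,X_n(\varphi)\rangle|\wedge2\big)^2.$$

If $\|\varphi\|_m<\delta$, then, since $\sup_{f\in B_1'}|\langle f,x\rangle|=\|x\|$,

$$E\sup_{f\in B_1'}\mathrm{Re}\big(1-e^{i\langle f,X_n(\varphi)\rangle}\big)\le\frac12E\sup_{f\in B_1'}\big(|\langle f,X_n(\varphi)\rangle|\wedge2\big)^2\le2E\big(\|X_n(\varphi)\|\wedge1\big)<2\varepsilon.$$

On the other hand, if $\|\varphi\|_m\ge\delta$, we replace $\varphi$ by $\psi=\delta\varphi/\|\varphi\|_m$ (by (1.iv), $F\le\varepsilon$ also on $\{\|\psi\|_m=\delta\}$) and, using $\lambda y\wedge2\le\lambda(y\wedge2)$ for $\lambda\ge1$, obtain

$$E\sup_{f\in B_1'}\mathrm{Re}\big(1-e^{i\langle f,X_n(\varphi)\rangle}\big)\le\frac{\|\varphi\|_m^2}{\delta^2}\cdot\frac12E\sup_{f\in B_1'}\big(|\langle f,X_n(\psi)\rangle|\wedge2\big)^2\le2\varepsilon\frac{\|\varphi\|_m^2}{\delta^2}.$$

The required inequality (3.9) is proved.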
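The elementary inequalities behind (3.9) are $1-\cos x\le\frac12(|x|\wedge2)^2\le2(|x|\wedge1)$ and, for $\lambda\ge1$, $\lambda y\wedge2\le\lambda(y\wedge2)$. The grid check below is only a numerical sanity test of these facts, not a proof; the grid size and range are arbitrary choices.

```python
import math
from typing import List

def chain_holds(x: float) -> bool:
    """Check 1 - cos x <= min(|x|, 2)^2 / 2 <= 2 min(|x|, 1)."""
    left = 1.0 - math.cos(x)
    middle = 0.5 * min(abs(x), 2.0) ** 2
    right = 2.0 * min(abs(x), 1.0)
    return left <= middle + 1e-12 and middle <= right + 1e-12

def scaling_holds(lam: float, y: float) -> bool:
    """Check min(lam * y, 2) <= lam * min(y, 2) for lam >= 1 and y >= 0."""
    return min(lam * y, 2.0) <= lam * min(y, 2.0) + 1e-12

def grid(lo: float, hi: float, steps: int) -> List[float]:
    """steps evenly spaced points from lo to hi inclusive."""
    return [lo + (hi - lo) * i / (steps - 1) for i in range(steps)]
```

The first chain handles the case $\|\varphi\|_m<\delta$; the scaling inequality is what produces the factor $\|\varphi\|_m^2/\delta^2$ in the second case.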

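(3) There are $p>m$ and $M$ such that for all $n$ and $k>0$,

$$E\Big(1-e^{-k^{-1}\sup_{\|\varphi\|_p\le1}\|X_n(\varphi)\|^2}\Big)\le2\varepsilon\Big(1+\frac{M}{k\delta^2}\Big). \tag{3.10}$$

Indeed, there exists a $p>m$ such that the natural embedding $i:S_p\to S_m$ is a Hilbert–Schmidt operator. Let $(e_j)$ be a complete orthonormal system (CONS) in $S$ relative to $\|\cdot\|_p$. Let $Y_1,Y_2,\dots$ be i.i.d. $N(0,2/k)$ random variables, independent of the $X_n$, and put $\Phi_N=\sum_{j=1}^NY_je_j$. Then by (2),

$$E\sup_{f\in B_1'}\mathrm{Re}\big(1-e^{i\langle f,X_n(\Phi_N)\rangle}\big)=E_YE_X\sup_{f\in B_1'}\mathrm{Re}\big(1-e^{i\langle f,X_n(\Phi_N)\rangle}\big)\le E_Y\,2\varepsilon\Big(1+\frac{\|\Phi_N\|_m^2}{\delta^2}\Big)\le2\varepsilon\Big(1+\frac{2}{k\delta^2}\sum_{j=1}^\infty\|e_j\|_m^2\Big),$$

since $E_Y\|\Phi_N\|_m^2=\frac2k\sum_{j=1}^N\|e_j\|_m^2$, and $\sum_j\|e_j\|_m^2<\infty$ because $i$ is Hilbert–Schmidt. On the other hand,

$$E\sup_{f\in B_1'}\mathrm{Re}\big(1-e^{i\langle f,X_n(\Phi_N)\rangle}\big)=E\sup_{f\in B_1'}\mathrm{Re}\Big(1-e^{i\sum_{j=1}^N\langle f,X_n(e_j)\rangle Y_j}\Big)\ge E_X\sup_{f\in B_1'}E_Y\,\mathrm{Re}\Big(1-e^{i\sum_{j=1}^N\langle f,X_n(e_j)\rangle Y_j}\Big).$$

However, given $X$, the conditional distribution of $\sum_{j=1}^N\langle f,X_n(e_j)\rangle Y_j$ is

$$N\Big(0,\ \frac2k\sum_{j=1}^N\langle f,X_n(e_j)\rangle^2\Big).$$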
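The randomization step uses the Gaussian identity $E\cos\big(\sum_ja_jY_j\big)=\exp\big(-k^{-1}\sum_ja_j^2\big)$ for i.i.d. $Y_j\sim N(0,2/k)$, where $a_j$ plays the role of $\langle f,X_n(e_j)\rangle$ for fixed $X$ and $f$. The Monte Carlo sketch below (illustrative only; the sample size and seed are arbitrary) compares a simulated value of $E_Y\,\mathrm{Re}(1-e^{i\sum_ja_jY_j})$ with the closed form $1-\exp(-k^{-1}\sum_ja_j^2)$.

```python
import math
import random
from typing import Sequence

def randomized_expectation(a: Sequence[float], k: float, samples: int = 100000,
                           seed: int = 3) -> float:
    """Monte Carlo estimate of E_Y(1 - cos(sum_j a_j Y_j)) with Y_j i.i.d. N(0, 2/k);
    a_j stands for <f, X_n(e_j)> with X and f held fixed."""
    rng = random.Random(seed)
    sd = math.sqrt(2.0 / k)
    acc = 0.0
    for _ in range(samples):
        s = sum(aj * rng.gauss(0.0, sd) for aj in a)
        acc += 1.0 - math.cos(s)
    return acc / samples

def closed_form(a: Sequence[float], k: float) -> float:
    """1 - exp(-k^{-1} sum_j a_j^2): sum_j a_j Y_j ~ N(0, (2/k) sum_j a_j^2) and
    E cos(N(0, v)) = exp(-v/2)."""
    return 1.0 - math.exp(-sum(aj * aj for aj in a) / k)
```

This identity is what turns the characteristic-function bound (3.9) into the exponential moment bound (3.10).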


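So the right-hand side of the last inequality is

$$E\sup_{f\in B_1'}\Big(1-e^{-k^{-1}\sum_{j=1}^N\langle f,X_n(e_j)\rangle^2}\Big).$$

Since, writing $\varphi=\sum_ja_je_j$ with $\sum_ja_j^2\le1$ and using the Cauchy–Schwarz inequality,

$$\sup_{\|\varphi\|_p\le1}\|X_n(\varphi)\|=\sup_{\|\varphi\|_p\le1}\sup_{f\in B_1'}|\langle f,X_n(\varphi)\rangle|=\sup_{f\in B_1'}\sup_{\sum_ja_j^2\le1}\Big|\sum_{j=1}^\infty a_j\langle f,X_n(e_j)\rangle\Big|=\sup_{f\in B_1'}\Big(\sum_{j=1}^\infty\langle f,X_n(e_j)\rangle^2\Big)^{1/2},$$

then setting $M:=2\sum_{j=1}^\infty\|e_j\|_m^2$, we easily obtain (3.10) as follows:

$$\begin{aligned}E\Big(1-e^{-k^{-1}\sup_{\|\varphi\|_p\le1}\|X_n(\varphi)\|^2}\Big)&=E\Big(1-e^{-k^{-1}\sup_{f\in B_1'}\sum_{j=1}^\infty\langle f,X_n(e_j)\rangle^2}\Big)\\&=E\sup_{f\in B_1'}\Big(1-e^{-k^{-1}\sum_{j=1}^\infty\langle f,X_n(e_j)\rangle^2}\Big)\\&=E\sup_{f\in B_1'}\lim_{N\to\infty}\Big(1-e^{-k^{-1}\sum_{j=1}^N\langle f,X_n(e_j)\rangle^2}\Big)\\&\le\lim_{N\to\infty}E\sup_{f\in B_1'}\Big(1-e^{-k^{-1}\sum_{j=1}^N\langle f,X_n(e_j)\rangle^2}\Big)\le2\varepsilon\Big(1+\frac{M}{k\delta^2}\Big).\end{aligned} \tag{3.11}$$

(4) Markov's inequality (with $Z=\sup_{\|\varphi\|_p\le1}\|X_n(\varphi)\|^2$; note that $1-e^{-Z/k}\ge1-e^{-1}$ on $\{Z>k\}$) gives

$$P\Big\{\omega:\sup_{\|\varphi\|_p\le1}\|X_n(\varphi)\|^2>k\Big\}\le\frac{e}{e-1}E\Big(1-e^{-k^{-1}\sup_{\|\varphi\|_p\le1}\|X_n(\varphi)\|^2}\Big)\le\frac{2e\varepsilon}{e-1}\Big(1+\frac{M}{k\delta^2}\Big).$$

Choosing $k\ge M/\delta^2$, the right-hand side is at most $4e\varepsilon/(e-1)$ for all $n$; since $\varepsilon$ is arbitrary, (3.7) follows (with $k$ replaced by $k^{1/2}$). The proof of (3.7) is complete.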
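Step (4) uses the pointwise bound $I(z>k)\le\frac{e}{e-1}\big(1-e^{-z/k}\big)$ for $z\ge0$, $k>0$, since $1-e^{-z/k}\ge1-e^{-1}$ when $z>k$. The following Python sketch (not from the paper) checks this bound and its averaged form on an arbitrary simulated sample standing in for $Z=\sup_{\|\varphi\|_p\le1}\|X_n(\varphi)\|^2$; the sampling distribution and helper names are assumptions made for illustration.

```python
import math
import random
from typing import List, Sequence, Tuple

FACTOR = math.e / (math.e - 1.0)

def pointwise_bound_holds(z: float, k: float) -> bool:
    """Check I(z > k) <= e/(e-1) * (1 - exp(-z/k)) for z >= 0, k > 0."""
    indicator = 1.0 if z > k else 0.0
    return indicator <= FACTOR * (1.0 - math.exp(-z / k)) + 1e-12

def tail_and_bound(zs: Sequence[float], k: float) -> Tuple[float, float]:
    """Empirical P(Z > k) and e/(e-1) * E(1 - exp(-Z/k)) for a sample zs."""
    tail = sum(1 for z in zs if z > k) / len(zs)
    bound = FACTOR * sum(1.0 - math.exp(-z / k) for z in zs) / len(zs)
    return tail, bound

def sample_z(n: int, seed: int = 4) -> List[float]:
    """Arbitrary nonnegative sample standing in for Z (squares of exponential draws)."""
    rng = random.Random(seed)
    return [rng.expovariate(1.0) ** 2 for _ in range(n)]
```

The same device reappears in (3.18), with $k$ replaced by $\varepsilon$ and the norm replaced by the quotient norm.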

Step 2. For any $\varepsilon>0$ there exists a finite-dimensional closed subspace $F$ of $B$ such that

$$\sup_nP\Big\{\omega:\sup_{\|\varphi\|_q\le1}q_F\big(X_n(\varphi)\big)>\varepsilon\Big\}<\varepsilon, \tag{3.12}$$

where $q$ is determined by the $p$ of Step 1.

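The proof of (3.12) uses the same idea as that of (3.7) but requires the stronger assumptions of Theorem 3.1. Given $\varepsilon>0$, let $p$ be chosen as in Step 1. There exists $q>p$ such that if $(e_j)$ is a CONS in $S_q\subset S_p$, then $C^2:=\sum_{j=1}^\infty\|e_j\|_p^2<\infty$. Letting $Y_1,Y_2,\dots$ be a sequence of i.i.d. normal random variables $N(0,2/\varepsilon)$, we have, by Chebyshev's inequality,

$$P\Big\{\omega:\Big\|\sum_{j=1}^\infty Y_je_j\Big\|_p>\frac{4C}{\varepsilon}\Big\}\le\Big(\frac{\varepsilon}{4C}\Big)^2E\Big\|\sum_{j=1}^\infty Y_je_j\Big\|_p^2=\Big(\frac{\varepsilon}{4C}\Big)^2\sum_{j=1}^\infty EY_j^2\|e_j\|_p^2=\frac\varepsilon8. \tag{3.13}$$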
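To make (3.13) concrete, assume (for illustration only) the Hermite-based choice $e_j=(2j+1)^{-q/2}h_j$, which is a CONS in $S_q$ and is orthogonal in $S_p$ with $\|e_j\|_p^2=(2j+1)^{p-q}$. Then $\|\sum_jY_je_j\|_p^2=\sum_jY_j^2(2j+1)^{p-q}$, and with $q=p+2$ one gets $C^2=\pi^2/8$. The Monte Carlo sketch below truncates the series at `terms` summands (with $C$ truncated accordingly, so Chebyshev's bound still applies exactly) and compares the estimated tail probability with the bound $\varepsilon/8$.

```python
import math
import random
from typing import Tuple

def chebyshev_check(eps: float, q_minus_p: int = 2, terms: int = 50,
                    samples: int = 5000, seed: int = 5) -> Tuple[float, float]:
    """Estimate P(||sum_j Y_j e_j||_p > 4C/eps), Y_j i.i.d. N(0, 2/eps), for the assumed
    Hermite-based CONS e_j = (2j+1)^(-q/2) h_j of S_q, where ||e_j||_p^2 = (2j+1)^(p-q).
    Returns (Monte Carlo estimate, Chebyshev bound eps/8)."""
    weights = [(2 * j + 1) ** (-q_minus_p) for j in range(terms)]  # ||e_j||_p^2
    c = math.sqrt(sum(weights))                                     # truncated C
    threshold_sq = (4.0 * c / eps) ** 2
    sd = math.sqrt(2.0 / eps)
    rng = random.Random(seed)
    exceed = 0
    for _ in range(samples):
        # The e_j are orthogonal in S_p, so the squared p-norm is a weighted sum of squares.
        norm_sq = sum(w * rng.gauss(0.0, sd) ** 2 for w in weights)
        if norm_sq > threshold_sq:
            exceed += 1
    return exceed / samples, eps / 8.0
```

For small $\varepsilon$ the estimated probability is essentially zero, which shows that Chebyshev's bound in (3.13) is far from sharp; only its uniformity matters in (3.19).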


We continue to prove that there exists a finite-dimensional closed subspace $F$ of $B$ such that

$$\sup_{\|\varphi\|_p\le4C/\varepsilon}\ \sup_nP\Big\{\omega:q_F\big(X_n(\varphi)\big)>\frac\varepsilon8\Big\}<\frac\varepsilon8. \tag{3.14}$$

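Since $B$ is a space of type 2, for any closed subspace $F$ of $B$ the quotient space $B/F$ is also of type 2. So

$$P\Big\{\omega:q_F\big(X_n(\varphi)\big)>\frac\varepsilon8\Big\}\le\frac{64}{\varepsilon^2}Eq_F^2\big(X_n(\varphi)\big)\le\frac{64c}{\varepsilon^2}Eq_F^2\Big(\int_{\mathbb R}\varphi\,dF\Big). \tag{3.15}$$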

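Now the key to our problem is to estimate $Eq_F^2(\int_{\mathbb R}\varphi\,dF)$. If we are able to prove that, for any $\eta>0$, there is a finite-dimensional closed subspace $F$ of $B$ such that for any $E\in\mathcal R$

$$Eq_F^2\big(F(E)\big)\le c\,\eta\,\mu(E), \tag{3.16}$$

then, arguing as in (2.8) in the type 2 space $B/F$, it is not hard to obtain

$$Eq_F^2\Big(\int_{\mathbb R}\varphi\,dF\Big)\le c\,\eta\int_{\mathbb R}\varphi^2\,d\mu=c\,\eta\|\varphi\|_0^2. \tag{3.17}$$

Since $\|\varphi\|_0\le\|\varphi\|_p\le4C/\varepsilon$ for the $\varphi$ in (3.14), combining (3.15) and (3.17) gives (3.14) once $\eta$ is chosen small enough, i.e., once a suitable finite-dimensional closed subspace $F$ is taken.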

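Let us return to the proof of (3.16). Since $\{F(E)/\mu^{1/2}(E), E\in\mathcal R\}$ is uniformly tight, for any $\eta>0$ there exists a finite-dimensional closed subspace $F$ such that

$$\sup_EP\{\omega:q_F^2(F(E))>\eta\mu(E)\}<\eta^2.$$

Moreover, by the Cauchy–Schwarz inequality and $q_F(x)\le\|x\|$,

$$\begin{aligned}Eq_F^2\big(F(E)\big)&\le\eta\mu(E)+\int_{\{\omega:q_F^2(F(E))>\eta\mu(E)\}}q_F^2\big(F(E)\big)\,dP\\&\le\eta\mu(E)+\big(E\|F(E)\|^4\big)^{1/2}P\{\omega:q_F^2(F(E))>\eta\mu(E)\}^{1/2}\\&\le\eta\mu(E)+c\mu(E)\eta\le(1+c)\eta\mu(E).\end{aligned}$$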

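With the previous argument in hand, we can now prove (3.12). In fact, in a similar way to the proof of (3.10), and with $F_1^\perp$ denoting the unit ball of the annihilator $F^\perp$ of $F$ in $B'$, we have

$$\begin{aligned}P\Big\{\omega:\sup_{\|\varphi\|_q\le1}q_F^2\big(X_n(\varphi)\big)>\varepsilon\Big\}&\le\frac{e}{e-1}E\Big(1-e^{-\varepsilon^{-1}\sup_{\|\varphi\|_q\le1}q_F^2(X_n(\varphi))}\Big)\\&\le\frac{e}{e-1}\lim_{N\to\infty}E\sup_{f\in F_1^\perp}\mathrm{Re}\big(1-e^{i\langle f,X_n(\Phi_N)\rangle}\big),\end{aligned} \tag{3.18}$$

where

$$\Phi_N=\sum_{j=1}^NY_je_j,\qquad\{Y_j,j\ge1\}$$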


is a sequence of i.i.d. $N(0,2/\varepsilon)$ random variables, $\{e_j\}$ is a CONS in $S$ relative to $\|\cdot\|_q$, and $F^\perp$ is the annihilator of $F$.

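The limit term in (3.18) can be estimated as follows, using $q_F(x)=\sup_{f\in F_1^\perp}|\langle f,x\rangle|$, the bound $1-\cos x\le2(|x|\wedge1)$, Lévy's inequality for the symmetric series $\sum_jY_je_j$, and finally (3.13) and (3.14):

$$\begin{aligned}E\sup_{f\in F_1^\perp}\mathrm{Re}\big(1-e^{i\langle f,X_n(\Phi_N)\rangle}\big)&=E\sup_{f\in F_1^\perp}\mathrm{Re}\big(1-e^{i\langle f,X_n(\Phi_N)\rangle}\big)I(\|\Phi_N\|_p\le4C/\varepsilon)\\&\quad+E\sup_{f\in F_1^\perp}\mathrm{Re}\big(1-e^{i\langle f,X_n(\Phi_N)\rangle}\big)I(\|\Phi_N\|_p>4C/\varepsilon)\\&\le2E\big(q_F(X_n(\Phi_N))\wedge1\big)I(\|\Phi_N\|_p\le4C/\varepsilon)+2P\{\omega:\|\Phi_N\|_p>4C/\varepsilon\}\\&\le2\sup_{\|\varphi\|_p\le4C/\varepsilon}E\big(q_F(X_n(\varphi))\wedge1\big)+4P\Big\{\omega:\Big\|\sum_{j=1}^\infty Y_je_j\Big\|_p>\frac{4C}{\varepsilon}\Big\}\\&\le2\Big(\frac\varepsilon8+\sup_{\|\varphi\|_p\le4C/\varepsilon}P\Big\{\omega:q_F(X_n(\varphi))>\frac\varepsilon8\Big\}\Big)+4P\Big\{\omega:\Big\|\sum_{j=1}^\infty Y_je_j\Big\|_p>\frac{4C}{\varepsilon}\Big\}<\varepsilon.\end{aligned} \tag{3.19}$$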

This completes the proof of the key statement (i), i.e., $X_n$ is uniformly tight in $L_b(S,B)$. Next we prove (ii).

For this, let us recall some preliminary results concerning the integral representation of the dual $L_b(S,B)'$ of $L_b(S,B)$ (see [9] for details). Let $\mathcal B(S',B)$ denote the space of continuous bilinear forms on $S'\times B$, carrying the topology of uniform convergence on products of bounded sets, and let $S'\hat\otimes B$ denote the projective tensor product of $S'$ and $B$. Since $S$ and $S'$ are nuclear spaces, we have the canonical isomorphisms

$$S'\hat\otimes B\cong L_b(S,B),\qquad(S'\hat\otimes B)'\cong\mathcal B(S',B), \tag{3.20}$$

where the dual $(S'\hat\otimes B)'$ carries the strong dual topology. The following lemma is Proposition 49.1 in [9].

Lemma 3.1. A bilinear form $u$ on $S'\times B$ is continuous if and only if there exist a weakly closed equicontinuous subset $A$ (respectively, $M$) of $S$ (respectively, $B'$) and a positive Radon measure $\nu$ on the compact set $A\times M$, with total mass 1, such that for all $\varphi'\in S'$, $x\in B$,

$$u(\varphi',x)=\int_{A\times M}\langle\varphi',\varphi\rangle\langle x',x\rangle\,d\nu(\varphi,x'). \tag{3.21}$$

For any given $f\in L_b(S,B)'$ and $T_F\in L_b(S,B)$ there exist $\varphi_F\in S'$ and $x_F\in B$ such that $T_F$ is the image of $\varphi_F\otimes x_F$ under the canonical isomorphism, and further there is a $\psi_f\in\mathcal B(S',B)$ such that $\psi_f(\varphi_F,x_F)=f(T_F)$. From this and Lemma 3.1 we derive that there exist a weakly closed equicontinuous subset $A$ (respectively, $M$) of $S$ (respectively, $B'$) and a positive Radon measure $\nu$ on the compact set $A\times M$ with total mass 1 such that

$$f(T_F)=\psi_f(\varphi_F,x_F)=\int_{A\times M}\langle\varphi_F,\varphi\rangle\langle x',x_F\rangle\,d\nu(\varphi,x')=\int_{A\times M}\langle x',T_F(\varphi)\rangle\,d\nu(\varphi,x'). \tag{3.22}$$


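Thus, by Jensen's inequality ($\nu$ has total mass 1),

$$Ef^2(T_F)=E\Big(\int_{A\times M}\langle x',T_F(\varphi)\rangle\,d\nu(\varphi,x')\Big)^2\le\int_{A\times M}E\langle x',T_F(\varphi)\rangle^2\,d\nu(\varphi,x')\le\int_{A\times M}\|x'\|^2E\|T_F(\varphi)\|^2\,d\nu(\varphi,x')\le c\int_{A\times M}\|x'\|^2\|\varphi\|_0^2\,d\nu(\varphi,x'). \tag{3.23}$$

In addition, since $A$ and $M$ are weakly compact in $S$ and $B'$, respectively, they are weakly bounded; hence $A$ is bounded with respect to the seminorms $\|\cdot\|_p$, $0\le p<\infty$, and $M$ is bounded with respect to the dual norm. This, together with (3.23), implies that $Ef^2(T_F)<\infty$. Since $f(X_n)=n^{-1/2}\sum_{i=1}^nf(T_{F_i})$ is a normalized sum of i.i.d. symmetric real random variables with finite variance, (ii) is a direct consequence of the classical CLT.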

The proof of Theorem 3.1 is now complete.

4. The CLT for measure-valued processes arising from Brownian motions. Suppose that $\{X,X_n,n\ge1\}$ is a sequence of i.i.d. symmetric $B$-valued random vectors, and $\{B(t),B_i(t),i\ge1,0\le t\le1\}$ is a sequence of i.i.d. standard Brownian motions. Assume furthermore that the two sequences are independent of each other. Set

$$Z_n(t)=\frac{1}{\sqrt n}\sum_{i=1}^nX_i\,\delta_{B_i(t)},\qquad0\le t\le1. \tag{4.1}$$

This is a measure-valued stochastic process. As in section 3, we shall regard $Z_n$ as a sequence of $L_b(S,B)$-valued continuous random processes on $[0,1]$. For the case without the weights $X_i$, the reader is referred to Itô [5] and Mitoma [7]. To make our problem clearer, we first look at two special cases.

Theorem 4.1. Suppose that $B$ is a space of type 2 and that $\{X,X_i,i\ge1\}$ and $\{B(t),B_i(t),i\ge1,0\le t\le1\}$ are as above with $E\|X\|^2<\infty$. Define

$$Z_n(t_0)=\frac{1}{\sqrt n}\sum_{i=1}^nX_i\,\delta_{B_i(t_0)} \tag{4.2}$$

for some $t_0\in[0,1]$. Then $\{Z_n(t_0)(\varphi),\varphi\in S\}$ converges weakly to a Gaussian process $G=\{G(\varphi),\varphi\in S\}$.

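Proof. This is a direct consequence of the proof of Theorem 3.1. Observe that in the proof of Theorem 3.1 the hypotheses that $E\|F(E)\|^4\le c\mu^2(E)$ for some constant $c>0$ and that $\{F(E)/\mu^{1/2}(E),E\in\mathcal R\}$ is uniformly tight in $B$ are used only in (3.16).

However, if we let $F(E)=X\delta_{B(t_0)}(E)$, then, by independence,

$$Eq_F^2\big(F(E)\big)=Eq_F^2(X)\,E\delta_{B(t_0)}(E)=Eq_F^2(X)\,P\{\omega:B(t_0,\omega)\in E\}.$$

Clearly, since $E\|X\|^2<\infty$, for any $\varepsilon>0$ there exists a finite-dimensional closed subspace $F$ of $B$ such that $Eq_F^2(X)<\varepsilon$. In addition, for $t_0>0$ the density of $B(t_0)$ is bounded by $(2\pi t_0)^{-1/2}$, so $P\{\omega:B(t_0,\omega)\in E\}\le c\mu(E)$ with $c=(2\pi t_0)^{-1/2}$ (for $t_0=0$, $Z_n(0)=n^{-1/2}\sum_iX_i\,\delta_0$ and the assertion reduces to the CLT for $X$ in the type 2 space $B$). These facts imply (3.16) and hence complete the proof of Theorem 4.1.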
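The bound $P\{B(t_0)\in E\}\le(2\pi t_0)^{-1/2}\mu(E)$ for $t_0>0$ can be checked on intervals with the error function, since $B(t_0)\sim N(0,t_0)$. The helper names below are hypothetical, and the check covers only intervals $E=[a,b]$.

```python
import math

def prob_bm_in_interval(t0: float, a: float, b: float) -> float:
    """P(a <= B(t0) <= b) for standard Brownian motion, where B(t0) ~ N(0, t0), t0 > 0."""
    s = math.sqrt(2.0 * t0)
    return 0.5 * (math.erf(b / s) - math.erf(a / s))

def density_bound(t0: float, a: float, b: float) -> float:
    """(2 pi t0)^(-1/2) * mu([a, b]); (2 pi t0)^(-1/2) is the maximum of the N(0, t0) density."""
    return (b - a) / math.sqrt(2.0 * math.pi * t0)
```

The constant blows up as $t_0\downarrow0$, which is why the case $t_0=0$ has to be handled separately.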

Theorem 4.2. Suppose that $B$ is a space of type 2 and that $\{X,X_i,i\ge1\}$ and $\{B(t),B_i(t),i\ge1,0\le t\le1\}$ are as above with $E\|X\|^2<\infty$. Define

$$Z_n(\varphi)(t)=\frac{1}{\sqrt n}\sum_{i=1}^nX_i\,\varphi\big(B_i(t)\big),\qquad0\le t\le1, \tag{4.3}$$

for some $\varphi\in S$.


Then $\{Z_n(\varphi)(t),0\le t\le1\}$ converges weakly to a Gaussian process $G_\varphi=\{G_\varphi(t),0\le t\le1\}$ in $C([0,1],B)$, the space of all continuous mappings from $[0,1]$ into $B$ with the uniform norm topology.
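Although the paper does not write it out, the covariance of the limit can be read off from (4.3): the summands are i.i.d. and centered (each $X_i$ is symmetric and independent of $B_i$), so in the real case $B=\mathbb R$ one has $E\,Z_n(\varphi)(s)Z_n(\varphi)(t)=EX^2\,E\varphi(B(s))\varphi(B(t))$ for every $n$, and this is also the covariance of $G_\varphi$. For the illustrative choice $\varphi(x)=e^{-x^2/2}$ and $s\le t$, a Gaussian integral gives $E\varphi(B(s))\varphi(B(t))=((1+s)(1+t)-s^2)^{-1/2}$. The sketch below assumes $B=\mathbb R$ and Rademacher weights $X$ (so $EX^2=1$) and compares this formula with a Monte Carlo estimate; the function names are hypothetical.

```python
import math
import random

def phi(x: float) -> float:
    """Illustrative test function phi(x) = exp(-x^2/2)."""
    return math.exp(-0.5 * x * x)

def exact_covariance(s: float, t: float) -> float:
    """E phi(B(s)) phi(B(t)) for 0 <= s <= t: det(I + Sigma)^(-1/2), Sigma = [[s, s], [s, t]]."""
    return ((1.0 + s) * (1.0 + t) - s * s) ** -0.5

def mc_covariance(s: float, t: float, samples: int = 100000, seed: int = 6) -> float:
    """Monte Carlo estimate of E[X phi(B(s)) * X phi(B(t))] with a Rademacher weight X,
    the covariance of one summand of Z_n(phi)(s) and Z_n(phi)(t) when B = R."""
    rng = random.Random(seed)
    acc = 0.0
    for _ in range(samples):
        x = rng.choice((-1.0, 1.0))                     # symmetric weight, E X^2 = 1
        b_s = rng.gauss(0.0, math.sqrt(s))              # B(s) ~ N(0, s)
        b_t = b_s + rng.gauss(0.0, math.sqrt(t - s))    # independent increment B(t) - B(s)
        acc += (x * phi(b_s)) * (x * phi(b_t))
    return acc / samples
```

Since the covariance does not depend on $n$, a single summand suffices for the simulation.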

Proof. It is clear that for any two finite sequences $(t_1,\dots,t_m)\subset[0,1]$ and $(f_1,\dots,f_m)\subset B'$ the $m$-dimensional random vector $(f_1(X)\varphi(B(t_1)),\dots,f_m(X)\varphi(B(t_m)))$ satisfies the classical CLT. Also, it is easily seen that the class of all cylindrical sets of the form

$$\big\{x\in C([0,1],B):\big(\langle f_1,x(t_1)\rangle,\dots,\langle f_m,x(t_m)\rangle\big)\in A\big\},\qquad A\in\mathcal B(\mathbb R^m),$$

generates the Borel σ-field $\mathcal B(C([0,1],B))$. Thus we need only turn our attention to the relative compactness of $\{Z_n,n\ge1\}$ in $C([0,1],B)$. By Theorem 4.4 in [4] and Lemma 2.2 in [6], this will be shown if

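(a) $\{\langle f,Z_n(\varphi)(t)\rangle,t\in[0,1]\}$ is relatively compact in $C([0,1])$ for each $f\in B'$;

(b) for any $\varepsilon>0$ there exist a positive constant $M$ and a finite-dimensional closed subspace $F$ of $B$ such that

$$\sup_nP\Big\{\omega:\sup_{0\le t\le1}\|Z_n(\varphi)(t)\|>M\Big\}<\varepsilon, \tag{4.4}$$

$$\sup_nP\Big\{\omega:\sup_{0\le t\le1}q_F\big(Z_n(\varphi)(t)\big)>\varepsilon\Big\}<\varepsilon. \tag{4.5}$$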

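For (a) it is enough to verify that for any $\varepsilon>0$ there are $\delta>0$ and $M>0$ such that

$$\sup_nP\Big\{\omega:\sup_{|t-s|<\delta}\Big|\frac{1}{\sqrt n}\sum_{i=1}^nf(X_i)\big(\varphi(B_i(t))-\varphi(B_i(s))\big)\Big|>\varepsilon\Big\}<\varepsilon, \tag{4.6}$$

$$\sup_nP\Big\{\omega:\sup_{0\le t\le1}\Big|\frac{1}{\sqrt n}\sum_{i=1}^nf(X_i)\varphi\big(B_i(t)\big)\Big|>M\Big\}<\varepsilon. \tag{4.7}$$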

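Let us prove (4.6) and (4.7) by means of metric entropy techniques. If we denote

$$D_1=\Big\{\omega:\sum_{i=1}^nf^2(X_i)\le a^2n\Big\},$$

we have

$$P\Big\{\omega:\sup_{|t-s|<\delta}\Big|\frac{1}{\sqrt n}\sum_{i=1}^nf(X_i)\big(\varphi(B_i(t))-\varphi(B_i(s))\big)\Big|>\varepsilon\Big\}\le P\Big\{\omega:\sup_{|t-s|<\delta}\Big|\frac{1}{\sqrt n}\sum_{i=1}^nf(X_i)I_{D_1}\big(\varphi(B_i(t))-\varphi(B_i(s))\big)\Big|>\varepsilon\Big\}+P(D_1^c) \tag{4.8}$$

and

$$P\Big\{\omega:\sup_{0\le t\le1}\Big|\frac{1}{\sqrt n}\sum_{i=1}^nf(X_i)\varphi\big(B_i(t)\big)\Big|>M\Big\}\le P\Big\{\omega:\sup_{0\le t\le1}\Big|\frac{1}{\sqrt n}\sum_{i=1}^nf(X_i)I_{D_1}\varphi\big(B_i(t)\big)\Big|>M\Big\}+P(D_1^c), \tag{4.9}$$

where $a$, $\delta$, and $M$ are to be specified later.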


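Taking $a^2\ge2Ef^2(X)/\varepsilon$, we have, by Markov's inequality,

$$\sup_nP(D_1^c)=\sup_nP\Big\{\omega:\sum_{i=1}^nf^2(X_i)>a^2n\Big\}\le\frac{Ef^2(X)}{a^2}\le\frac\varepsilon2.$$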

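On the other hand, set $\beta_1(\varphi)=\sup_{x\in\mathbb R}|d\varphi(x)/dx|<\infty$; then

$$\big|\varphi(B_i(t))-\varphi(B_i(s))\big|\le\beta_1(\varphi)\big|B_i(t)-B_i(s)\big|,$$

and hence, letting $(\varepsilon_i)$ be a Rademacher sequence independent of everything else and using the symmetry of the $X_i$ and the contraction principle, we obtain

$$\begin{aligned}&P\Big\{\omega:\Big|\frac{1}{\sqrt n}\sum_{i=1}^nf(X_i)I_{D_1}\big(\varphi(B_i(t))-\varphi(B_i(s))\big)\Big|>a\beta_1(\varphi)|t-s|^{1/2}u\Big\}\\&\quad=P\Big\{\Big|\frac{1}{\sqrt n}\sum_{i=1}^n\varepsilon_if(X_i)I_{D_1}\big(\varphi(B_i(t))-\varphi(B_i(s))\big)\Big|>a\beta_1(\varphi)|t-s|^{1/2}u\Big\}\\&\quad\le2E_XP\Big\{\omega:\Big|\frac{1}{\sqrt n}\sum_{i=1}^n\varepsilon_if(X_i)I_{D_1}\frac{B_i(t)-B_i(s)}{|t-s|^{1/2}}\Big|>au\Big\}\\&\quad\le4E_X\exp\Big(-\frac{u^2a^2}{(2/n)\sum_{i=1}^nf^2(X_i)I_{D_1}}\Big)\le4\exp\Big(-\frac{u^2}{2}\Big).\end{aligned}$$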

In this way, it is easy to see that

$$\Big\{\frac{1}{a\beta_1(\varphi)\sqrt n}\sum_{i=1}^nf(X_i)I_{D_1}\varphi\big(B_i(t)\big),\ t\in[0,1]\Big\}$$

is a sub-Gaussian process with respect to the metric $d(s,t)=|t-s|^{1/2}$. By Theorem 11.6 in [6] we know that

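$$\sup_nE\sup_{|t-s|<\delta}\Big|\frac{1}{\sqrt n}\sum_{i=1}^nf(X_i)I_{D_1}\big(\varphi(B_i(t))-\varphi(B_i(s))\big)\Big|\le ka\beta_1(\varphi)\int_0^\delta\big(\log N([0,1],d,\varepsilon)\big)^{1/2}d\varepsilon, \tag{4.10}$$

$$\sup_nE\sup_{0\le t\le1}\Big|\frac{1}{\sqrt n}\sum_{i=1}^nf(X_i)I_{D_1}\varphi\big(B_i(t)\big)\Big|\le ka\beta_1(\varphi)\int_0^1\big(\log N([0,1],d,\varepsilon)\big)^{1/2}d\varepsilon<\infty, \tag{4.11}$$

where $k$ is an absolute constant and $N([0,1],d,\varepsilon)$ is the smallest number of balls of radius $\varepsilon$ in the metric $d$ that cover $[0,1]$.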
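For $d(s,t)=|t-s|^{1/2}$ the covering numbers are explicit: a closed $d$-ball of radius $\varepsilon$ is an interval of length $2\varepsilon^2$, so $N([0,1],d,\varepsilon)=\lceil1/(2\varepsilon^2)\rceil$ (and 1 once $\varepsilon^2\ge1/2$). The sketch below computes the entropy integral appearing in (4.10) and (4.11) by the midpoint rule; the step count is an arbitrary choice, and the point is only that the integral is finite and that its value on $[0,\delta]$ shrinks with $\delta$, which is what allows $\delta$ to be chosen in (4.6).

```python
import math

def covering_number(eps: float) -> int:
    """N([0,1], d, eps) for d(s,t) = |t-s|^(1/2): closed d-balls of radius eps are intervals
    of length 2 eps^2, so ceil(1 / (2 eps^2)) of them are needed (at least one)."""
    return max(1, math.ceil(1.0 / (2.0 * eps * eps)))

def entropy_integral(upper: float, steps: int = 100000) -> float:
    """Midpoint-rule approximation of int_0^upper sqrt(log N([0,1], d, eps)) d eps."""
    h = upper / steps
    return h * sum(math.sqrt(math.log(covering_number((i + 0.5) * h))) for i in range(steps))
```

The integrand grows only like $(2\log(1/\varepsilon))^{1/2}$ near 0, so the integral converges even though $N([0,1],d,\varepsilon)$ blows up.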

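Now, by Markov's inequality applied to (4.10) and (4.11), together with (4.8), (4.9), and the bound on $P(D_1^c)$, we can choose $\delta$ so small that (4.6) holds and $M$ so large that (4.7) holds. This concludes the proof of (a).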

It remains to prove (b). The idea of its proof is similar to that used above; i.e., we can again apply metric entropy techniques to suitable vector-valued sub-Gaussian processes.

Set $\psi_2(x)=\exp(x^2)-1$, and let $\|\cdot\|_{\psi_2(dP)}$ denote the corresponding Orlicz norm with respect to the probability space $(\Omega,\mathcal F,P)$. We only prove (4.5), since the proof of (4.4) is similar and simpler.


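By the well-known contraction principle we have

$$E\exp\Big(\frac{1}{C^2}q_F^2\Big(\frac{1}{\sqrt n}\sum_{i=1}^nX_iI_{D_2}\big(\varphi(B_i(t))-\varphi(B_i(s))\big)\Big)\Big)\le E\exp\Big(\frac{\beta_1^2(\varphi)}{C^2}q_F^2\Big(\frac{1}{\sqrt n}\sum_{i=1}^nX_iI_{D_2}\big(B_i(t)-B_i(s)\big)\Big)\Big), \tag{4.12}$$

where $C>0$ is arbitrary, $a$ and $F$ are to be specified below, and

$$D_2=\Big\{\omega:\sum_{i=1}^nq_F^2(X_i)\le a^2n\Big\}.$$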

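Then

$$\Big\|q_F\Big(\frac{1}{\sqrt n}\sum_{i=1}^nX_iI_{D_2}\big(\varphi(B_i(t))-\varphi(B_i(s))\big)\Big)\Big\|_{\psi_2(dP)}\le\beta_1(\varphi)\Big\|q_F\Big(\frac{1}{\sqrt n}\sum_{i=1}^nX_iI_{D_2}\big(B_i(t)-B_i(s)\big)\Big)\Big\|_{\psi_2(dP)}\le c\,\beta_1(\varphi)\,a\,|t-s|^{1/2}. \tag{4.13}$$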

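From the above, and noting Remark 11.5 in [6], we deduce

$$E\sup_{0\le s,t\le1}q_F\Big(\frac{1}{\sqrt n}\sum_{i=1}^nX_iI_{D_2}\big(\varphi(B_i(t))-\varphi(B_i(s))\big)\Big)\le c\,\beta_1(\varphi)\,a\int_0^1\big(\log N([0,1],d,\varepsilon)\big)^{1/2}d\varepsilon. \tag{4.14}$$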

After taking $a>0$ so small that
$$c\,\beta_1(\varphi)\,a\int_0^1\big(\log N([0,1],d,\varepsilon)\big)^{1/2}d\varepsilon<\frac{\varepsilon}{2},$$
we can choose a finite-dimensional closed subspace $F$ in $B$ such that $E\,q_F^2(X)/a^2<\varepsilon/2$. Moreover, we have

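$$P\Big\{\omega:\sup_{0\le t\le1}q_F\Big(\frac{1}{\sqrt n}\sum_{i=1}^n X_i\varphi\big(B_i(t)\big)\Big)>\varepsilon\Big\}\le P\Big\{\omega:\sup_{0\le t\le1}q_F\Big(\frac{1}{\sqrt n}\sum_{i=1}^n X_iI_{D_2}\varphi\big(B_i(t)\big)\Big)>\varepsilon\Big\}+P(D_2^c)<\varepsilon. \tag{4.15}$$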
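The bound on $P(D_2^c)$ used in (4.15) combines Chebyshev's (Markov's) inequality with the choice of $F$. Here is a sketch, reading $D_2$ as the event $\{\sum_{i=1}^n q_F^2(X_i)\le a^2n\}$:

```latex
P(D_2^c)=P\Big\{\sum_{i=1}^n q_F^2(X_i)>a^2n\Big\}
\le\frac{E\sum_{i=1}^n q_F^2(X_i)}{a^2n}
=\frac{E\,q_F^2(X)}{a^2}<\frac{\varepsilon}{2}.
```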

This proves (b), and hence Theorem 4.2.

We conclude the paper by proving the following theorem, which motivated the present work.

Theorem 4.3. Suppose that $B$ is a space of type 2 and that $\{X, X_i,\ i\ge1\}$ and $\{B(t), B_i(t),\ i\ge1,\ 0\le t\le1\}$ are as above with $E\|X\|^2<\infty$. Define
$$Z_n(\varphi)(t)=\frac{1}{\sqrt n}\sum_{i=1}^n X_i\varphi\big(B_i(t)\big),\qquad 0\le t\le1,\ \varphi\in S. \tag{4.16}$$
Then $Z_n$ converges weakly to a Gaussian process $G$ in $C([0,1],L_b(S,B))$, equipped with the locally convex topology generated by a family of seminorms.
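Here is a quick numerical sanity check of Theorem 4.3 in the simplest case. The choices are hypothetical: $B=\mathbb R$ (trivially of type 2), $X$ a Rademacher variable, and $\varphi(x)=e^{-x^2}\in S$. For fixed $t$, $Z_n(\varphi)(t)$ is a normalized sum of i.i.d. centered terms with variance $EX^2\,E\varphi(B(t))^2=E\,e^{-2B(t)^2}=(1+4t)^{-1/2}$. Its law should therefore be close to $N(0,(1+4t)^{-1/2})$ for large $n$. This checks only a one-dimensional marginal, not the functional convergence asserted by the theorem.

```python
import math
import random


def phi(x: float) -> float:
    """Test function phi(x) = exp(-x^2), a hypothetical element of S."""
    return math.exp(-x * x)


def draw_zn(n: int, t: float, rng: random.Random) -> float:
    """One draw of Z_n(phi)(t) = n^{-1/2} sum_i X_i phi(B_i(t)) with Rademacher X_i."""
    total = 0.0
    for _ in range(n):
        x = 1.0 if rng.random() < 0.5 else -1.0
        b_t = rng.gauss(0.0, math.sqrt(t))  # B_i(t) ~ N(0, t)
        total += x * phi(b_t)
    return total / math.sqrt(n)


def check_marginal(n: int = 100, reps: int = 3000, t: float = 0.5, seed: int = 2024):
    """Return (sample variance, predicted variance, empirical P(Z <= sigma), Phi(1))."""
    rng = random.Random(seed)
    draws = [draw_zn(n, t, rng) for _ in range(reps)]
    mean = sum(draws) / reps
    var = sum((z - mean) ** 2 for z in draws) / (reps - 1)
    predicted = 1.0 / math.sqrt(1.0 + 4.0 * t)  # E X^2 * E exp(-2 B(t)^2)
    sigma = math.sqrt(predicted)
    frac = sum(1 for z in draws if z <= sigma) / reps
    normal_cdf_at_one = 0.5 * (1.0 + math.erf(1.0 / math.sqrt(2.0)))
    return var, predicted, frac, normal_cdf_at_one


if __name__ == "__main__":
    print(check_marginal())
```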

The proof of Theorem 4.3 basically follows the lines of [7] and [10], with some necessary changes based on Theorem 3.1. For the reader's convenience, we give the key points below.

Proposition 4.1. If $K$ is compact in $C([0,1],L_b(S,B))$, then there exists $p\in\mathbb N$ such that $K$ is also compact in $C([0,1],L_b(S_p,B))$.

Proof. For each $\varphi$ in $S$ the set $\{x(\varphi),\ x\in K\}$ is compact in $C([0,1],B)$. Hence $\{x(\varphi)(t),\ 0\le t\le1,\ x\in K\}$ is relatively compact in $B$ and
$$\lim_{\delta\to0}\sup_{x\in K}\sup_{|t-s|<\delta}\|x(\varphi)(t)-x(\varphi)(s)\|=0. \tag{4.17}$$
Since $\sup_{x\in K}\sup_{0\le t\le1}\|x(\varphi)(t)\|<\infty$ for every $\varphi\in S$, the Banach–Steinhaus theorem shows that there exist $q\in\mathbb N$ and $L>0$ such that
$$\sup_{x\in K}\sup_{0\le t\le1}\|x(\varphi)(t)\|\le L\|\varphi\|_q. \tag{4.18}$$

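Since $S$ is nuclear, there exist a natural number $r>q$ and a CONS $(e_j)$ relative to $S_r$ such that $\sum_{j=1}^\infty\|e_j\|_q^2<\infty$, so that
$$
\begin{aligned}
\sup_{x\in K}\sup_{0\le t\le1}\sup_{\|\varphi\|_r\le1}\|x(\varphi)(t)\|^2
&=\sup_{x\in K}\sup_{0\le t\le1}\sup_{\|\varphi\|_r\le1}\sup_{f\in B_1}\langle f,x(\varphi)(t)\rangle^2\\
&\le\sup_{x\in K}\sup_{0\le t\le1}\sup_{f\in B_1}\sum_{j=1}^\infty\langle f,x(e_j)(t)\rangle^2\\
&\le\sup_{x\in K}\sup_{0\le t\le1}\sup_{f\in B_1}\sum_{j=1}^\infty\|f\|^2\|x(e_j)(t)\|^2<\infty.
\end{aligned}\tag{4.19}
$$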
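The first inequality in (4.19) is the Cauchy–Schwarz inequality applied after expanding $\varphi$ in the CONS. Here it is spelled out for $\|\varphi\|_r\le1$ and $f\in B_1$. The expansion passes through $x(\cdot)(t)$ because, by (4.18), $x(\cdot)(t)$ is continuous for $\|\cdot\|_q$, and this norm is dominated by $\|\cdot\|_r$ (the norms are assumed increasing in the index, as usual).

```latex
\begin{align*}
\varphi&=\sum_{j\ge1}\langle\varphi,e_j\rangle_r\,e_j\ \text{ in }S_r,\qquad
\sum_{j\ge1}\langle\varphi,e_j\rangle_r^2=\|\varphi\|_r^2\le1,\\
\langle f,x(\varphi)(t)\rangle^2
&=\Big(\sum_{j\ge1}\langle\varphi,e_j\rangle_r\,\langle f,x(e_j)(t)\rangle\Big)^2
\le\sum_{j\ge1}\langle f,x(e_j)(t)\rangle^2,\\
\sum_{j\ge1}\|x(e_j)(t)\|^2&\le L^2\sum_{j\ge1}\|e_j\|_q^2<\infty\qquad\text{by (4.18)}.
\end{align*}
```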

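Since $\sup_{x\in K}\sup_{|t-s|<\delta}\|x(e_j)(t)-x(e_j)(s)\|^2\le4L^2\|e_j\|_q^2$ and $\sum_j\|e_j\|_q^2<\infty$, the Lebesgue dominated convergence theorem (applied to the series) and (4.17) give
$$
\begin{aligned}
\lim_{\delta\to0}\sup_{x\in K}\sup_{|t-s|<\delta}\sup_{\|\varphi\|_r\le1}\|x(\varphi)(t)-x(\varphi)(s)\|
&=\lim_{\delta\to0}\sup_{x\in K}\sup_{|t-s|<\delta}\sup_{f\in B_1}\sup_{\|\varphi\|_r\le1}\big|\langle f,x(\varphi)(t)-x(\varphi)(s)\rangle\big|\\
&\le\lim_{\delta\to0}\sup_{x\in K}\sup_{|t-s|<\delta}\sup_{f\in B_1}\Big(\sum_{j=1}^\infty\langle f,x(e_j)(t)-x(e_j)(s)\rangle^2\Big)^{1/2}\\
&\le\Big(\sum_{j=1}^\infty\lim_{\delta\to0}\sup_{x\in K}\sup_{|t-s|<\delta}\|x(e_j)(t)-x(e_j)(s)\|^2\Big)^{1/2}=0.
\end{aligned}\tag{4.20}
$$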

On the other hand, since $S$ is nuclear, there exist a natural number $p>r$ and a CONS $(e_j)$ relative to $S_p$ such that $\sum_{j=1}^\infty\|e_j\|_r^2<\infty$.
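The nuclearity condition can be made concrete under a standard choice of norms, which is assumed here rather than taken from the paper: $\|\varphi\|_p^2=\sum_{k\ge0}(2k+1)^{2p}\langle\varphi,h_k\rangle^2$ with Hermite functions $h_k$. Then $e_k=(2k+1)^{-r}h_k$ is a CONS of $S_r$ and $\|e_k\|_q=(2k+1)^{q-r}$. Hence $\sum_k\|e_k\|_q^2<\infty$ exactly when $r-q>1/2$. For instance, $r=q+1$ gives $\sum_k(2k+1)^{-2}=\pi^2/8$. The sketch checks this numerically; `embedding_sum` is a hypothetical helper.

```python
import math


def embedding_sum(q: float, r: float, terms: int) -> float:
    """Partial sum over k < terms of ||e_k||_q^2 = (2k+1)^(2(q-r)),
    for the (assumed) Hermite CONS e_k = (2k+1)^(-r) h_k of S_r."""
    return sum((2 * k + 1) ** (2.0 * (q - r)) for k in range(terms))


if __name__ == "__main__":
    # r = q + 1: the series converges, to pi^2 / 8
    print(embedding_sum(0, 1, 10**6), math.pi ** 2 / 8)
    # r = q + 1/2: sum of 1/(2k+1), partial sums grow like (1/2) log(terms)
    print(embedding_sum(0, 0.5, 10**6))
```

Only the gap $r-q$ matters, which is why a larger index can always be chosen to make the embedding Hilbert–Schmidt.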


Then it follows from (4.19) that
$$\lim_{N\to\infty}\sum_{j=N}^\infty\sup_{x\in K}\sup_{0\le t\le1}\big\|\langle x(t),e_j\rangle\big\|^2=0,$$
so that $\{x(t),\ x\in K\}$ is relatively compact in $L_b(S_p,B)$ for each $0\le t\le1$.

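Since $\|\cdot\|_{-r}\ge\|\cdot\|_{-p}$ (equivalently, $\{\|\varphi\|_p\le1\}\subset\{\|\varphi\|_r\le1\}$ because $p>r$), by (4.20) we have
$$\lim_{\delta\to0}\sup_{x\in K}\sup_{|t-s|<\delta}\sup_{\|\varphi\|_p\le1}\|x(\varphi)(t)-x(\varphi)(s)\|=0.$$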

Thus $K$ has compact closure in $C([0,1],L_b(S_p,B))$, and $K$ is automatically closed in $C([0,1],L_b(S_p,B))$ by the definition of the topology on $C([0,1],L_b(S_p,B))$. This completes the proof of Proposition 4.1.

Proposition 4.1 will enable us to use Lemma 2.1. Now, for each $\varepsilon>0$, we prove the following two claims.

Claim 1. There exist $p\in\mathbb N$ and $M>0$ such that
$$\sup_nP\Big\{\omega:\sup_{0\le t\le1}\sup_{\|\varphi\|_p\le1}\|Z_n(\varphi)(t)\|>M\Big\}<\frac{\varepsilon}{4}. \tag{4.21}$$

Claim 2. There exists a finite-dimensional closed subspace $F$ in $B$ such that
$$\sup_nP\Big\{\omega:\sup_{0\le t\le1}\sup_{\|\varphi\|_q\le1}q_F\big(Z_n(\varphi)(t)\big)>\varepsilon\Big\}<\frac{\varepsilon}{4}, \tag{4.22}$$
where $q$ is such that the natural embedding $i:S_q\to S_p$ is nuclear.

Claim 1 can be proved in a completely similar way to (3.5). Before giving the proof of Claim 2 in detail, let us see how the two claims yield the relative compactness of $\{Z_n,\ n\ge1\}$ in $C([0,1],L_b(S,B))$. Given any $\varepsilon>0$, let $p$ and $q$ be as in the two claims. Let $(e_j)$ be a CONS in $S_q$, and for each $e_j$ choose $K_j\subset C([0,1],L_b(S,B))$ such that
$$\inf_nP\{\omega:Z_n\in K_j\}>1-\frac{\varepsilon}{2^{j+1}},\qquad\lim_{\delta\to0}\sup_{x\in K_j}\sup_{|t-s|<\delta}\|x(e_j)(t)-x(e_j)(s)\|=0.$$

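Now define
$$K=\Big\{x:\sup_{0\le t\le1}\sup_{\|\varphi\|_p\le1}\|x(\varphi)(t)\|\le M\Big\}\cap\Big\{x:\{x(\varphi)(t),\ 0\le t\le1,\ \|\varphi\|_q\le1\}\text{ is relatively compact in }B\Big\}\cap\bigcap_{j=1}^\infty K_j.$$
Thus $\sup_nP\{\omega:Z_n\notin K\}<\varepsilon$, and $K$ has compact closure in $C([0,1],L_b(S_p,B))$ for some $p>r$.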
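The bound $\sup_nP\{\omega:Z_n\notin K\}<\varepsilon$ is a union bound over the sets defining $K$. The sketch assumes that, uniformly in $n$, each $P\{Z_n\notin K_j\}<\varepsilon/2^{j+1}$, and that the relative-compactness condition fails with probability less than $\varepsilon/4$ (this is the role of Claim 2). Under these assumptions the probability budget adds up as follows:

```latex
P\{Z_n\notin K\}
\le P\Big\{\sup_{t}\sup_{\|\varphi\|_p\le1}\|Z_n(\varphi)(t)\|>M\Big\}
+P\{\text{compactness condition fails}\}
+\sum_{j=1}^\infty P\{Z_n\notin K_j\}
<\frac{\varepsilon}{4}+\frac{\varepsilon}{4}+\sum_{j=1}^\infty\frac{\varepsilon}{2^{j+1}}
=\frac{\varepsilon}{2}+\frac{\varepsilon}{2}=\varepsilon .
```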

Since the injection of $C([0,1],L_b(S_p,B))$ into $C([0,1],L_b(S,B))$ is continuous, the closure of $K$ in $C([0,1],L_b(S_p,B))$ is compact in $C([0,1],L_b(S,B))$. All these arguments show that $\{Z_n,\ n\ge1\}$ is relatively compact in $C([0,1],L_b(S,B))$.


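We now return to the proof of Claim 2. As in Step 2 of the proof of Theorem 3.1, it is enough to show that for any $\varepsilon>0$ and $0<C<\infty$ there exist a positive constant $M$ and a finite-dimensional closed subspace $F$ in $B$ such that
$$\sup_nP\Big\{\omega:\sup_{0\le t\le1}\|Z_n(\varphi)(t)\|>M\Big\}<\varepsilon\quad\text{for all }\|\varphi\|_p\le C<\infty, \tag{4.23}$$
$$\sup_nP\Big\{\omega:\sup_{0\le t\le1}q_F\big(Z_n(\varphi)(t)\big)>\varepsilon\Big\}<\varepsilon\quad\text{for all }\|\varphi\|_p\le C<\infty. \tag{4.24}$$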

We will only prove (4.24). This statement is slightly different from (4.5); the interested reader may compare the two proofs to better understand the point of the argument. Set
$$d_0(s,t)=|t-s|^{1/2},\qquad d(s,t)=\int_0^{d_0(s,t)}\big(\log N([0,1],d_0,\varepsilon)\big)^{1/2}d\varepsilon\approx\Big(|t-s|\,\big|\log|t-s|\big|\Big)^{1/2}.$$
Then, for
$$D_3=\Big\{\omega:\sum_{i=1}^n q_F^2(X_i)\sup_{t\ne s}\frac{|B_i(t)-B_i(s)|}{d(s,t)}\le a^2n\Big\},$$
we have
$$P\Big\{\omega:\sup_{0\le t\le1}q_F\big(Z_n(\varphi)(t)\big)>\varepsilon\Big\}\le P\Big\{\omega:\sup_{0\le t\le1}q_F\Big(\frac{1}{\sqrt n}\sum_{i=1}^n\varepsilon_iX_i\varphi\big(B_i(t)\big)I_{D_3}\Big)>\varepsilon\Big\}+P(D_3^c)=:I_1+I_2, \tag{4.25}$$
where $a>0$ and $F\subset B$ will be specified later.
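The asymptotic relation $d(s,t)\approx(|t-s|\,|\log|t-s||)^{1/2}$ can be checked numerically from the definition of $d$. The sketch assumes closed balls, so that $N([0,1],d_0,\varepsilon)=\lceil1/(2\varepsilon^2)\rceil$. It evaluates the integral by the midpoint rule and prints the ratio of $d$ to the asymptotic expression; the ratio drifts slowly toward $1$ as $|t-s|\to0$. All names are hypothetical.

```python
import math


def covering_number_d0(eps: float) -> int:
    """N([0,1], d0, eps) for d0(s,t) = |t-s|^{1/2}: closed d0-balls are intervals of length 2*eps^2."""
    return max(1, math.ceil(1.0 / (2.0 * eps * eps)))


def d_of_gap(h: float, steps: int = 100_000) -> float:
    """d(s,t) for |t-s| = h, via the midpoint rule on [0, d0] with d0 = sqrt(h)."""
    upper = math.sqrt(h)
    w = upper / steps
    return w * sum(math.sqrt(math.log(covering_number_d0((k + 0.5) * w))) for k in range(steps))


def ratio_to_asymptotic(h: float) -> float:
    """d(s,t) divided by (|t-s| |log|t-s||)^{1/2}."""
    return d_of_gap(h) / math.sqrt(h * abs(math.log(h)))


if __name__ == "__main__":
    for h in (1e-2, 1e-4, 1e-6, 1e-8):
        print(f"|t-s| = {h:.0e}: ratio = {ratio_to_asymptotic(h):.4f}")
```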

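To estimate $I_1$, observe that
$$
\begin{aligned}
E_\varepsilon q_F^2\Big(\frac{1}{\sqrt n}\sum_{i=1}^n\varepsilon_iX_iI_{D_3}\big(\varphi(B_i(t))-\varphi(B_i(s))\big)\Big)
&\le\frac{c}{n}\sum_{i=1}^n q_F^2(X_i)\big(\varphi(B_i(t))-\varphi(B_i(s))\big)^2I_{D_3}\\
&=\frac{c}{n}\sum_{i=1}^n q_F^2(X_i)\Big(\int_{B_i(s)}^{B_i(t)}\varphi'(x)\,dx\Big)^2I_{D_3}\\
&\le\frac{c}{n}\sum_{i=1}^n q_F^2(X_i)\Big[\int_{\mathbb R}|\varphi'(x)|^2dx\Big]\,|B_i(t)-B_i(s)|\,I_{D_3}\\
&\le c\,a^2d(s,t)\,\|\varphi'\|_0^2\le c\,a^2d(s,t),
\end{aligned}\tag{4.26}
$$
where $c$ denotes a constant that may change from line to line, and we have used $\|\varphi'\|_0\le\|\varphi\|_1$ and $\|\varphi\|_p\le C$.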
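The last two inequalities in (4.26) combine the Cauchy–Schwarz inequality with the definition of $D_3$. Here is a sketch, taking $\|\cdot\|_0$ to be the $L^2(\mathbb R)$ norm (an assumption about the earlier definition of the norms):

```latex
\begin{align*}
\Big(\int_{B_i(s)}^{B_i(t)}\varphi'(x)\,dx\Big)^2
&\le|B_i(t)-B_i(s)|\int_{\mathbb R}|\varphi'(x)|^2\,dx
=|B_i(t)-B_i(s)|\,\|\varphi'\|_0^2,\\
\text{on }D_3:\quad
\frac1n\sum_{i=1}^n q_F^2(X_i)\,|B_i(t)-B_i(s)|
&\le\frac{d(s,t)}{n}\sum_{i=1}^n q_F^2(X_i)\sup_{t'\ne s'}\frac{|B_i(t')-B_i(s')|}{d(s',t')}
\le a^2\,d(s,t).
\end{align*}
```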

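Thus it is not hard to get
$$\Big\|q_F\Big(\frac{1}{\sqrt n}\sum_{i=1}^n X_iI_{D_3}\big(\varphi(B_i(t))-\varphi(B_i(s))\big)\Big)\Big\|_{\psi_2(dP)}\le c\,a\,d(s,t).$$
Since $\int_0^1(\log N([0,1],d,\varepsilon))^{1/2}d\varepsilon<\infty$, by taking $a$ (depending on $C$ and $\varepsilon>0$) small enough, we have
$$E\sup_{0\le t\le1}q_F\Big(\frac{1}{\sqrt n}\sum_{i=1}^n X_iI_{D_3}\varphi\big(B_i(t)\big)\Big)\le c\,a\int_0^1\big(\log N([0,1],d,\varepsilon)\big)^{1/2}d\varepsilon<\frac{\varepsilon^2}{2}. \tag{4.27}$$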

This implies I1 < ε/2.
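The step from (4.27) to $I_1<\varepsilon/2$ is Markov's inequality. $X$ is symmetric and independent of $(B_i)$ and $(\varepsilon_i)$, and $D_3$ depends on $X_i$ only through $q_F(X_i)$. Hence the process inside $I_1$ has the same law as the one in (4.27), and

```latex
I_1\le\frac1\varepsilon\,E\sup_{0\le t\le1}q_F\Big(\frac{1}{\sqrt n}\sum_{i=1}^n X_iI_{D_3}\varphi\big(B_i(t)\big)\Big)
<\frac1\varepsilon\cdot\frac{\varepsilon^2}{2}=\frac{\varepsilon}{2}.
```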

To estimate $I_2$, we need the following result on Brownian motion.

Lemma 4.1. $E\sup_{t\ne s,\ 0\le t,s\le1}\big[|B(t)-B(s)|/d(s,t)\big]<\infty$.

Proof. Let $\psi_2(x)=\exp(x^2)-1$. Let $\|\cdot\|_{\psi_2(dP)}$ denote the Orlicz norm of a random variable with respect to the probability space $(\Omega,\mathcal F,P)$, and let $\|\cdot\|_{\psi_2(d\mu\times d\mu)}$ denote the Orlicz norm of a measurable function with respect to the Lebesgue measure space $([0,1]\times[0,1],\mathcal B([0,1])\times\mathcal B([0,1]),\mu\times\mu)$. According to the main results in [3], we have
$$\sup_{t\ne s}\frac{|B(t)-B(s)|}{d(s,t)}\le c\,Y(\omega),\qquad\text{where}\quad Y(\omega)=\Big\|I_{(d_0(s,t)\ne0)}\frac{B(t)-B(s)}{d_0(s,t)}\Big\|_{\psi_2(d\mu\times d\mu)}.$$

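So it is enough to show that $E\,Y(\omega)<\infty$. Indeed, set
$$M=\sup_{t\ne s}\Big\|\frac{B(t)-B(s)}{d_0(s,t)}\Big\|_{\psi_2(dP)}<\infty;$$
then, for $u\ge M$,
$$
\begin{aligned}
P\{\omega:Y(\omega)>u\}
&\le P\Big\{\omega:\iint_{[0,1]\times[0,1]}\exp\Big(\frac{|B(t)-B(s)|^2}{u^2d_0^2(s,t)}I_{(d_0(s,t)\ne0)}\Big)d\mu(t)\,d\mu(s)>2\Big\}\\
&\le P\Big\{\omega:\iint_{[0,1]\times[0,1]}\exp\Big(\frac{|B(t)-B(s)|^2}{M^2d_0^2(s,t)}I_{(d_0(s,t)\ne0)}\Big)d\mu(t)\,d\mu(s)>2^{u^2/M^2}\Big\}\\
&\le 2\cdot2^{-u^2/M^2},
\end{aligned}
$$
from which the required result follows. Furthermore, $Y(\omega)$ is exponentially square integrable, although we will not use this fact.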
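In the tail bound for $Y$, the middle inequality is Jensen's inequality and the last one is Markov's inequality combined with Fubini's theorem. Here is a sketch, writing $g(s,t)=I_{(d_0(s,t)\ne0)}|B(t)-B(s)|/d_0(s,t)$ and taking $u\ge M$:

```latex
% Jensen: x \mapsto x^{M^2/u^2} is concave on [0,\infty) because M^2/u^2 \le 1
\begin{align*}
\iint e^{g^2/u^2}\,d\mu\,d\mu
&=\iint\big(e^{g^2/M^2}\big)^{M^2/u^2}d\mu\,d\mu
\le\Big(\iint e^{g^2/M^2}\,d\mu\,d\mu\Big)^{M^2/u^2},\\
% Markov + Fubini, using E e^{g(s,t)^2/M^2} \le 2 for every (s,t) (definition of M)
P\Big\{\iint e^{g^2/M^2}\,d\mu\,d\mu>2^{u^2/M^2}\Big\}
&\le 2^{-u^2/M^2}\iint E\,e^{g^2/M^2}\,d\mu\,d\mu\le 2\cdot2^{-u^2/M^2},\\
% hence
E\,Y=\int_0^\infty P\{Y>u\}\,du
&\le M+\int_M^\infty 2^{1-u^2/M^2}\,du<\infty .
\end{align*}
```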

Now $I_2$ can be estimated as follows:
$$I_2\le\frac{E\sum_{i=1}^n q_F^2(X_i)\sup_{s\ne t}\big(|B_i(t)-B_i(s)|/d(s,t)\big)}{a^2n}=\frac{1}{a^2}\,E\,q_F^2(X)\,E\sup_{s\ne t}\frac{|B(t)-B(s)|}{d(s,t)}. \tag{4.28}$$

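Since $E\|X\|^2<\infty$, the quantity $E\,q_F^2(X)$ can be made arbitrarily small by a suitable choice of $F$; by Lemma 4.1, $E\sup_{s\ne t}(|B(t)-B(s)|/d(s,t))<\infty$. Hence, for any $\varepsilon>0$, once $a$ has been determined so that $I_1<\varepsilon/2$, we are able to choose a finite-dimensional closed subspace $F$ in $B$ such that $I_2<\varepsilon/2$.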
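Lemma 4.1, which feeds into (4.28), can be illustrated (though of course not proved) by simulation. The sketch approximates $\sup_{s\ne t}|B(t)-B(s)|/d(s,t)$ by a maximum over the grid points $k/m$. It evaluates $d$ from its definition with $N([0,1],d_0,\varepsilon)=\lceil1/(2\varepsilon^2)\rceil$ (closed balls, an assumption) and averages over independent paths. The grid maximum can only underestimate the true supremum, so by the lemma its average should level off as $m$ grows. All names are hypothetical.

```python
import math
import random


def covering_number_d0(eps: float) -> int:
    """N([0,1], d0, eps) for d0(s,t) = |t-s|^{1/2}, closed balls (assumed)."""
    return max(1, math.ceil(1.0 / (2.0 * eps * eps)))


def d_of_gap(h: float, steps: int = 20_000) -> float:
    """d(s,t) for |t-s| = h: midpoint rule for int_0^{sqrt(h)} sqrt(log N([0,1], d0, eps)) d eps."""
    upper = math.sqrt(h)
    w = upper / steps
    return w * sum(math.sqrt(math.log(covering_number_d0((k + 0.5) * w))) for k in range(steps))


def grid_sup_ratio(m: int, d_table: list, rng: random.Random) -> float:
    """max over 0 <= i < j <= m of |B(j/m) - B(i/m)| / d(i/m, j/m) for one simulated path."""
    path = [0.0]
    step_sd = math.sqrt(1.0 / m)
    for _ in range(m):
        path.append(path[-1] + rng.gauss(0.0, step_sd))  # independent N(0, 1/m) increments
    best = 0.0
    for lag in range(1, m + 1):
        d_lag = d_table[lag]  # d depends on (s, t) only through |t - s| = lag / m
        for i in range(m + 1 - lag):
            best = max(best, abs(path[i + lag] - path[i]) / d_lag)
    return best


def estimate_expected_sup(m: int = 64, paths: int = 200, seed: int = 7) -> float:
    """Monte Carlo average of the grid supremum over independent Brownian paths."""
    rng = random.Random(seed)
    d_table = [0.0] + [d_of_gap(lag / m) for lag in range(1, m + 1)]
    return sum(grid_sup_ratio(m, d_table, rng) for _ in range(paths)) / paths


if __name__ == "__main__":
    for m in (16, 32, 64):
        print(m, estimate_expected_sup(m=m))
```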


Summarizing the preceding arguments, we have shown that $\{Z_n,\ n\ge1\}$ is relatively compact in $C([0,1],L_b(S,B))$.

Finally, as in Theorems 3.1 and 4.2, one verifies that for each $f$ in the dual space of $C([0,1],L_b(S,B))$, $\langle f,Z_n\rangle$ converges weakly to a normal random variable. This completes the proof of Theorem 4.3.

Acknowledgments. This paper is part of the author's Ph.D. dissertation submitted to Fudan University in Shanghai. The author would like to express his gratitude to Professors Z. Y. Lin and C. R. Lu for their guidance and encouragement. The author also thanks Professor X. Fernique, who kindly sent him preprints. Many thanks are due to the anonymous referee for a careful reading and valuable suggestions.

REFERENCES

[1] A. de Araujo and E. Giné, The Central Limit Theorem for Real and Banach Valued Random Variables, Wiley, New York, 1980.

[2] N. Dunford and J. T. Schwartz, Linear Operators. I: General Theory, Wiley, New York, 1988.

[3] X. Fernique, Régularité des trajectoires des fonctions aléatoires gaussiennes, in École d'Été de Probabilités de Saint-Flour, IV-1974, Lecture Notes in Math. 480, Springer-Verlag, Berlin, 1975, pp. 1–96.

[4] X. Fernique, Convergence en loi de fonctions aléatoires continues ou càdlàg, propriétés de compacité des lois, in Séminaire de Probabilités, Lecture Notes in Math. 1485, Springer-Verlag, Berlin, 1991, pp. 178–195.

[5] K. Itô, Distribution-valued processes arising from independent Brownian motions, Math. Z., 182 (1983), pp. 17–33.

[6] M. Ledoux and M. Talagrand, Probability in Banach Spaces, Springer-Verlag, Berlin, Heidelberg, 1991.

[7] I. Mitoma, Tightness of probabilities on C([0, 1], S′) and D([0, 1], S′), Ann. Probab., 11 (1983), pp. 989–999.

[8] D. H. Thang, On the convergence of vector random measures, Probab. Theory Related Fields, 88 (1991), pp. 1–16.

[9] F. Treves, Topological Vector Spaces, Distributions and Kernels, Academic Press, New York, London, 1967.

[10] J. B. Walsh, An introduction to stochastic partial differential equations, in École d'Été de Probabilités de Saint-Flour, XIV-1984, Lecture Notes in Math. 1180, Springer-Verlag, Berlin, 1986, pp. 265–437.