Modeling Mistakes
2008 Palisade Risk and Decision Analysis Conference
Terry Reilly, Babson College

advertisement

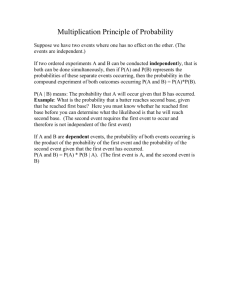

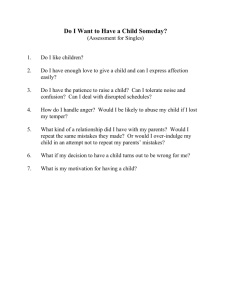

reilly@babson.edu

History
- Advanced Decision Making
  - Over 250 students
  - Project based
  - Decision analysis
- Financial Modeling
  - Over 500 students
  - Project based
  - Simulation

Student project topics: job decisions, eye surgery, marriage, children, location, sports valuations, Social Security, distillery, housing.

Getting Started
- Overwhelming
  - Scope of project
  - Scope of tools
- Drive for perfection
  - Limits ‘playing around’
  - Modeling by numbers
  - No mistakes

Frozen Modeling

All Models are Wrong!
You can never replicate the real system exactly.

Modeling is not linear
- Start simple
- Add complexity/realism as you cycle through
- Ignore dependency relations at first
- If difficult to model, then don’t!
- Purposefully make mistakes / demented what-if analysis
- Play, play, play

If Wrong, Why Model?
- Models do not solve the question/problem.
- Models provide insights into the problem:
  - Expected values
  - Distribution of payoffs/costs
  - Nuanced risk analysis
  - What-if analysis
  - Sensitivity analysis

Know Objectives
Thoroughly understand what the model is to be used for and by whom.
Purpose
- What questions are to be answered?
- What measures are to be used?
- What inputs will be available?
- Avoid Type III errors (solving the wrong problem).
Narrow vs. broad framing:
- Stock option model: keep stock or sell? (narrow) vs. balanced portfolio (broad)
- Housing model: keep rental or sell? (narrow) vs. balanced portfolio (broad)

Know your Audience
One of the most difficult concepts for quant heads. Who will be using the model or reading its results?
- What is their sophistication?
- What do they want to know first?
- What measures do they understand?
- Do they hate or embrace uncertainty?
- Do they even understand uncertainty measures?
This affects not only the final product but also how the model is constructed, e.g., distribution choice and output templates.

Communicate Effectively
One of the most important aspects of modeling, if not the most important.
- Gulf between modeler and end user
  - Knowledge of system vs. model
  - Expected/desired output vs. actual
- Use tools, but it is easy to overwhelm
  - Graphs
  - @RISK templates
  - Sliders

People Abhor Uncertainty
- NIMBY
- Uncertainty ≠ randomness
- Need to develop probabilistic thinking
- Compare to worst-case/best-case analysis
  - More nuanced, e.g., leverage variability
Analysts need not only to understand probabilistic thinking but also to know how to communicate the results of the analysis to the end user in a meaningful way.

Distribution Choice
- Step away from the normal
  - Infinite tails?
  - What happens if the output distribution is unusual?
- Taking the choice too seriously
  - Fitting procedures tell us the best fits.
  - Changing the choice is easy (sensitivity analysis on distribution choice).

Bidding Example
[Figure: @RISK histogram titled "Distribution for Profit"; profit from about -15 to 20 on the horizontal axis, vertical axis scaled 0 to 0.20, with a 90% interval marked.]

How We Think
What is the next number in the following sequence? 2, 4, 6, ?
"Pot smokers are unmotivated and likely to commit crimes."

                Upstanding    Degenerate
  Pot Smoker                      √
  No Toker

Disconfirming Evidence
When working on a project, we look for confirming evidence and give heavy weight to anything that supports our ideas. We tend to underweight disconfirming evidence, to the point of ignoring it. Contrary information is rarely sought out at all.

Presumed Associations
When assessing probabilities, we tend to think back to similar events, and the easier they are to recall, the higher the probability we assess (the availability heuristic).
- This works well, except that it can lead us to overestimate the likelihood of vivid or recent events and underestimate the likelihood of more common, bland events.
- When assessing whether two events are associated, we tend to recall instances where they occurred together. We forget that there are always at least three other combinations to think through. This fact is universally ignored.

Overconfidence
Others fail, but I won’t.
Novices are boldly confident. When assessing a probability distribution, we tend to assess too narrow a range. With experience comes a more nuanced and complete understanding of what could go wrong and of the range of possible outcomes.

Limitations
- Understand your model’s limitations.
- Communicate the limitations.
- Remember, all models are wrong!

Conclusion
- The better you know the decision maker’s objectives, and what questions the model is to answer and why, the more focused the model and the easier it is to communicate the results. Avoid Type III errors.
- Knowing your audience allows you to choose the appropriate output to report and to decide how to interpret that output for the end user.
- Knowing the heuristics people tend to use helps you guide probability assessments around pitfalls and mistakes common to all of us.
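The Overconfidence slide notes that assessed ranges tend to be too narrow, and the last conclusion point is about steering probability assessments around such pitfalls. One common check is calibration: over many assessments, a stated 90% interval should contain the true value about 90% of the time. The sketch below is a minimal illustration under stated assumptions: outcomes are normally distributed, the assessor centers each interval on the true mean and misjudges only the spread, and the helper names `interval_hit_rate` and `simulate_assessor` are hypothetical (they are not part of @RISK or any library).

```python
import random
from statistics import NormalDist


def interval_hit_rate(intervals, truths):
    """Fraction of true values that fall inside their assessed (low, high) interval."""
    if len(intervals) != len(truths):
        raise ValueError("need one interval per true value")
    hits = sum(low <= t <= high for (low, high), t in zip(intervals, truths))
    return hits / len(truths)


def simulate_assessor(believed_sd, true_sd=1.0, n=10_000, level=0.90, seed=1):
    """Hypothetical assessor: states a central `level` interval around the true mean
    assuming spread `believed_sd`, while outcomes actually vary with `true_sd`."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.645 for a 90% interval
    mean = 0.0
    intervals = [(mean - z * believed_sd, mean + z * believed_sd)] * n
    truths = [rng.gauss(mean, true_sd) for _ in range(n)]
    return interval_hit_rate(intervals, truths)


calibrated = simulate_assessor(believed_sd=1.0)     # expected to be close to 0.90
overconfident = simulate_assessor(believed_sd=0.5)  # expected to be close to 0.59
print(f"calibrated: {calibrated:.2f}, overconfident: {overconfident:.2f}")
```

Under these assumptions, halving the believed spread drops the hit rate from about 90% to roughly 59%, which is the "too narrow a range" effect expressed as a number. With real assessments, `interval_hit_rate` could be applied directly to the recorded intervals and the later-observed outcomes.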