An Orthogonal Subspace Minimization Method for

Finding Multiple Solutions to the Defocusing Nonlinear

Schrödinger Equation with Symmetry

Changchun Wang∗ and Jianxin Zhou∗

January 11, 2013

Abstract: An orthogonal subspace minimization method is developed for

finding multiple (eigen) solutions to the defocusing nonlinear Schrödinger equation with symmetry. Since such solutions are unstable, gradient search algorithms are very sensitive to numerical errors, will easily break symmetry, and

will lead to unwanted solutions. Instead of enforcing a symmetry by the Haar

projection, the authors use the knowledge of previously found solutions to

build a support for the minimization search. With this support, numerical

errors can be partitioned into two components, sensitive vs insensitive to the

negative gradient search. Only the sensitive part is removed by an orthogonal

projection. Analysis and numerical examples are presented to illustrate the

method. Numerical solutions with some interesting phenomena are captured

and visualized by their solution profile and contour plots.

Keywords. Defocusing nonlinear Schrödinger equation, multiple (eigen) solutions, symmetry invariance, sensitive/insensitive error

1 Introduction

As a canonical model in physics, the nonlinear Schrödinger equation (NLS) is of the form

$$ i\,\frac{\partial w(x,t)}{\partial t} = -\Delta w(x,t) + v(x)\,w(x,t) + \kappa f(x,|w(x,t)|)\,w(x,t), \qquad \frac{d}{dt}\int_{\mathbb{R}^n} |w(x,t)|^2\,dx = 0, \tag{1.1} $$

where v(x) is a potential function, κ is a physical constant and f(x, u) is a nonlinear function satisfying certain growth and regularity conditions, e.g., f(x, |w|)w is super-linear in w. The second equation in (1.1) is a conservation condition under which the NLS is derived; with it, solutions are physically meaningful [4] and the localized property is satisfied. Equation (1.1) is called focusing when κ < 0 and defocusing when κ > 0, such as the well-known Gross-Pitaevskii equation in the Bose-Einstein condensate [2,3,4,11,12,14,19]. The two cases are very different, both physically and mathematically. To study solution patterns, stability and other properties, solitary wave solutions of the form $w(x,t) = u(x)e^{-i\lambda t}$ are investigated, where λ is a wave frequency and u(x) is a wave amplitude function. The conservation condition in (1.1) is then automatically satisfied, and u(x) satisfies the following semi-linear elliptic PDE

$$ \lambda u(x) = -\Delta u(x) + v(x)u(x) + \kappa f(x,|u(x)|)u(x). \tag{1.2} $$

If u(x) satisfies the localized property¹, (1.2) can be solved numerically on an open bounded domain Ω ⊂ Rⁿ with a zero Dirichlet boundary condition. This problem also arises in other applications, such as nonlinear optics [1].

∗ Department of Mathematics, Texas A&M University, College Station, TX 77843. ccwang81@gmail.com and jzhou@math.tamu.edu. Supported in part by NSF DMS-0713872/0820372/1115384.

There are two types of multiple solution problems associated to (1.2), (a) one views λ as a

given parameter and solves (1.2) for multiple solutions u; (b) one views λ as an eigenvalue, u as

a corresponding eigen-function and solves (1.2) for multiple eigen-solutions (λ, u). First we let

v(x) = 0 and λ be a parameter. Then (1.2) becomes

$$ -\Delta u(x) - \lambda u(x) + \kappa f(x,|u(x)|)u(x) = 0,\ x \in \Omega, \qquad u(x) = 0,\ x \in \partial\Omega. \tag{1.3} $$

Denote $F_w(x, w) = f(x, |w|)w$. The variational energy at each $u \in H = H_0^1(\Omega)$ is given by

$$ J(u) = \int_\Omega \Big[ \frac{1}{2}|\nabla u(x)|^2 - \frac{\lambda}{2}u^2(x) + \kappa F(x, u(x)) \Big]\,dx. \tag{1.4} $$

Then solutions to (1.3) correspond to critical points u∗ of J, i.e., J′(u∗) = 0, in H. Let H = H⁻ ⊕ H⁰ ⊕ H⁺ be the spectral decomposition of J″(u∗), where H⁻, H⁰, H⁺ are respectively the maximum negative, null and maximum positive subspaces of the linear operator J″(u∗) with dim(H⁰) < +∞. The quantity dim(H⁻) is called the Morse index (MI) of u∗ and is denoted by MI(u∗). A critical point u∗ with MI(u∗) = k ≥ 1 is called an order-k saddle. u∗ is non-degenerate if H⁰ = {0} and degenerate otherwise. So H⁻ (H⁺) contains descent (ascent) directions of J at u∗. For a non-degenerate solution u∗, if MI(u∗) = 0, it is a local minimum of J and a stable solution; if MI(u∗) ≥ 1, it is a saddle, and in any neighborhood N(u∗) of u∗ there are w, v ∈ N(u∗) with J(w) > J(u∗) > J(v), so u∗ is unstable. The Morse index is used in the literature to measure the instability of a critical point and provides important information for system design and control. When F(x, u) is super-quadratic in u, J″(0) = −∆ − λI. If we denote by µ1 < µ2 < ... the eigenvalues of J″(0), by λ1 < λ2 < ... the eigenvalues of −∆ and by v1, v2, ... their corresponding eigenfunctions, then λi − λ = µi. It is clear that 0 is a critical point of J with MI(0) = k if λk < λ < λk+1.

¹ By the localized property we mean |u(x)| → 0 rapidly as |x| → ∞. For numerical visualization, it implies that a solution's peaks concentrate in a bounded region when the domain is large and stay in the region when the domain is larger.
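The count MI(0) = k can be checked directly when the eigenvalues of −∆ are known in closed form. The sketch below assumes the square domain Ω = (−0.5, 0.5)² with zero Dirichlet boundary condition (the domain used later in Example 1), where the eigenvalues are π²(m² + n²) for integers m, n ≥ 1, and counts them with multiplicity. The function name and the `max_mode` cutoff are illustrative choices, not part of the paper.

```python
import math


def morse_index_of_zero(lam, side=1.0, max_mode=200):
    """Count Dirichlet eigenvalues of -Laplacian on a square that lie below lam.

    Assumes a square of the given side length, whose eigenvalues are
    (pi / side)^2 * (m^2 + n^2) for m, n >= 1, counted with multiplicity.
    """
    count = 0
    for m in range(1, max_mode + 1):
        for n in range(1, max_mode + 1):
            if (math.pi / side) ** 2 * (m * m + n * n) < lam:
                count += 1
    return count


# lam = 200 is the parameter value used in Example 1.
print(morse_index_of_zero(200.0))  # 13
```

With λ = 200 the thirteen mode pairs with m² + n² ≤ 20 lie below λ, so under these assumptions the trivial solution is already a saddle of order 13.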

The purpose of this paper is to develop a new numerical method for finding multiple solutions

of the defocusing NLS (1.2) when λ is (a) a fixed parameter and (b) an eigenvalue. Such a

numerical method is not yet available in the literature.

We note that most numerical methods in the literature are designed for finding a single

solution, not multiple solutions. Numerical methods for finding one solution and for finding

multiple solutions are very different in functionality and complexity. One of the most significant

differences between those two types of methods is that the former does not use the knowledge of

a previously found solution to find a NEW solution. There are mainly four types of numerical

algorithms in the literature for finding solitary wave solutions to the NLS, namely:

(1) One level minimization methods that use the variational structure of the problem to find a

local minimum point of the variational energy functional J, e.g., [2, 3, 5, 10, 13]. These methods

are usually stable, have less dependence on an initial guess and can treat degeneracy. However,

they cannot find saddle type solutions unless the knowledge of previously found solutions is used

and some constraints are deliberately designed accordingly and enforced, such as the two-level

minimax method described in (4);

(2) Monotone iteration schemes that work directly on the equation. These methods are

simple to implement, but usually can find only the ground state. When the ground state is zero,

with certain normalization skill, they can also find a 1-saddle solution, e.g., [8, 9], but not other

types of saddle solutions;

(3) Linearization methods, such as various Newton (homotopy continuation) methods and

conjugate gradient methods, etc., [7, 15, 16, 28, 29], which also work directly on the equation

but not on its variational function. As a consequence, variational structure is not a concern.

The methods do not need to differentiate the defocusing case from the focusing case in the NLS

setting. These methods can usually solve more general non-variational equations, provide very

fast local convergence and find any predictable solution by selecting a good nearby initial guess.

The weaknesses of these methods in solving multiple solution problems are the following:

(a) they heavily depend on an initial guess. When a PDE is highly nonlinear, its solutions

are hard to predict. Then among many solutions, how can a good nearby initial guess to an

unknown solution be selected? It is difficult to do so. Even for a Newton homotopy continuation

method (NHCM), an initial guess has to be selected in a local basin containing the unknown

target solution. These methods do not use the knowledge of previously found solutions. Thus

one cannot guarantee that the next solution to be found will always be a new one;

(b) since these methods do not use the variational structure of a problem when it is available,

they are blind to the (instability/saddle) order of solutions defined by the variational structure.

Because of this, one only has physical understanding to tell whether a solution found by these


methods is a ground state or saddle type, but not the order of saddles.

(c) once one understands the variational structure of a problem and the Morse index, it

is clear that degeneracy always exists in a multiple solution problem, even if all solutions are

non-degenerate. So when an initial guess selected is not sufficiently close to an unknown target

solution, the methods may still encounter difficulties in dealing with the degeneracy.

(4) When κ < 0 (the focusing case), J has a typical mountain pass structure, i.e., J(tu) → −∞ as t → +∞ for each u ≠ 0; each solution is of finite Morse index and for each u ∈ [v1, ..., vk]⊥, u ≠ 0, there is $t_u > 0$ such that $t_u = \arg\max_{t>0} J(tu)$ (M-shaped). So a mountain

pass/linking approach can be used to prove the existence of multiple solutions [20, 22, 27]. A

local minimax method (LMM) can numerically find multiple solutions following the order of their

J-values (critical levels) or Morse index. Let us briefly introduce LMM [17, 18, 31, 32] below.

1.1 The Local Minimax Method (LMM)

Let H be a Hilbert space and J ∈ C¹(H, R). For a given closed subspace L ⊂ H, denote H = L ⊕ L⊥ and $S_{L^\perp} = \{v \in L^\perp : \|v\| = 1\}$. For each $v \in S_{L^\perp}$, denote [L, v] = span{L, v}.

Definition 1. The peak mapping $P : S_{L^\perp} \to 2^H$ is defined by

P(v) = the set of all local maxima of J on [L, v], $\forall v \in S_{L^\perp}$.

A peak selection is a single-valued mapping $p : S_{L^\perp} \to H$ s.t. p(v) ∈ P(v), $\forall v \in S_{L^\perp}$. If p is locally defined, then p is called a local peak selection.

J is said to satisfy the Palais-Smale (PS) condition in H if any sequence {un} ⊂ H s.t. {J(un)} is bounded and J′(un) → 0 has a convergent subsequence.

The following theorems provided a mathematical justification for LMM and also established an estimate for the Morse index of a solution found by LMM.

Theorem 1. If p is a local peak selection of J near $v_0 \in S_{L^\perp}$ s.t. p is locally Lipschitz continuous at v0 with p(v0) ∉ L and $v_0 = \arg\,\text{local-}\min_{v \in S_{L^\perp}} J(p(v))$, then u0 = p(v0) is a saddle point of J. If p is also differentiable at v0 and H⁰ denotes the null subspace of J″(u0), then

dim(L) + 1 = MI(u0) + dim(H⁰ ∩ [L, v0]).

Theorem 2. Let J be C¹ and satisfy the PS condition. If p is a peak selection of J w.r.t. L s.t. (a) p is locally Lipschitz continuous, (b) $\inf_{v \in S_{L^\perp}} d(p(v), L) > 0$ and (c) $\inf_{v \in S_{L^\perp}} J(p(v)) > -\infty$, then there is $v_0 \in S_{L^\perp}$ s.t. J′(p(v0)) = 0 and $p(v_0) = \arg\min_{v \in S_{L^\perp}} J(p(v))$.

Let $M = \{p(v) : v \in S_{L^\perp}\}$. Theorem 1 states that $\text{local-}\min_{u \in M} J(u)$ yields a critical point u∗, which is unstable in H but stable on M and can be numerically approximated by, e.g., a steepest descent method. Then it leads to LMM. For L = {0}, M is called the Nehari manifold in the literature, i.e., N = {u ∈ H : u ≠ 0, ⟨J′(u), u⟩ = 0} = M = {p(v) : v ∈ H, ∥v∥ = 1}.

1.2 The Numerical Algorithm and Its Convergence

Let w1, ..., wn−1 be n−1 previously found critical points and L = [w1, ..., wn−1]. Let ε > 0 and ℓ > 0 be given, and let $v^0 \in S_{L^\perp}$ be an ascent-descent direction at wn−1, i.e., $J(w_{n-1} + t v^0)$ is increasing in t for small t and decreasing in t for large t.

Step 1: Let $t_0^0 = 1$, $v_L^0 = 0$ and set k = 0;

Step 2: Using the initial guess $w = t_0^k v^k + v_L^k$, solve for

$$ w^k \equiv p(v^k) = \arg\max_{u \in [L, v^k]} J(u) $$

and denote $t_0^k v^k + v_L^k = w^k \equiv p(v^k)$;

Step 3: Compute the steepest descent vector $d^k := -\nabla J(w^k)$;

Step 4: If $\|d^k\| \le \varepsilon$ then output $w_n = w^k$, stop; else go to Step 5;

Step 5: Set $v^k(s) := \dfrac{v^k + s d^k}{\|v^k + s d^k\|} \in S_{L^\perp}$ and find

$$ s^k := \max_{m \in \mathbb{N}} \Big\{ \frac{\ell}{2^m} : 2^m > \|d^k\|,\ J\big(p(v^k(\tfrac{\ell}{2^m}))\big) - J(w^k) \le -\frac{t_0^k\,\ell}{2^{m+1}}\,\|d^k\|^2 \Big\}. $$

The initial guess $u = t_0^k v^k(\tfrac{\ell}{2^m}) + v_L^k$ is used to find $p(v^k(\tfrac{\ell}{2^m}))$, where $t_0^k$ and $v_L^k$ are found in Step 2 (track a peak selection).

Step 6: Set $v^{k+1} = v^k(s^k)$, $w^{k+1} = p(v^{k+1})$, k = k + 1, then go to Step 3.

Remark 1. ℓ > 0 controls the maximum stepsize of each search. The condition $v^0 \in S_{L^\perp}$

does not have to be exact and can actually be relaxed. To find an unknown target solution,

the subspace L, called a support, is spanned by previously found/known solutions in a solution

branch with smaller J-values (lower critical levels) or smaller MI. So a local maximization on L

as in the inner loop of LMM prevents a minimizer search in the outer loop from descending to

an old solution in L. Consequently LMM finds a new solution with higher J-value (critical level)

or higher MI. If MI(0) = 0, LMM first starts with n = 0, L = {0} to find a solution w1 . Then

LMM starts with n = 1, L = span{w1 } to find another solution w2 . LMM continues in this way

with L being gradually expanded by previously found solutions in a solution branch.

Theorem 3. ([36]) If J is C¹, satisfies the PS condition, (a) p is locally Lipschitz continuous, (b) $d(L, p(v^k)) > \alpha > 0$ and (c) $\inf_{v \in S_{L^\perp}} J(p(v)) > -\infty$, then $v^k \to v^* \notin L$ with $\nabla J(p(v^*)) = 0$.

It is clear that in LMM, the outer loop is a minimization process that inherits the properties

of a minimization method as stated in (1) of the Introduction. The inner loop is designed to

use the knowledge of some previously found solutions to build a finite-dimensional support L in

order for the outer loop to find a new solution with a higher J-value.

However, when κ > 0 (the defocusing case), the super-quadratic term F brings a very different variational structure to J. Indeed, neither J nor −J has a mountain pass structure. Let us check −J first. Although −J(tu) → −∞ as t → +∞ for each u ≠ 0, each solution is of infinite Morse index, e.g., −J″(0) = ∆ + λI has infinitely many negative eigenvalues, i.e., MI(0) = +∞. So the problem becomes infinitely unstable. Consequently an infinite-dimensional support L has to be used in LMM, which causes serious implementation difficulty. So we stay with J and observe that if λk < λ < λk+1, then J(tu) → +∞ as t → +∞ for each u ∈ [u1, ..., uk]⊥, u ≠ 0 (W-shaped). So it is clear that LMM cannot be applied.

In conclusion, the above mentioned four types of numerical methods cannot solve the defocusing NLS (1.2) for multiple solutions in an order. However LMM provides us a hint of using the

knowledge of previously found solutions to build a support L in finding new additional solutions.

We will develop a new numerical method to accomplish this. We first note that J is bounded

from below, always attains its global minimum and any critical point of J is of finite Morse

index, e.g., MI(0) = k if λk < λ < λk+1 since J ′′ (0) = −∆ − λI. When the super-quadratic term

is positive, for each u ∈ H, in order for J(tu) to have a critical point along t at certain tu > 0,

we must have

$$ \int_\Omega \big[ |\nabla u(x)|^2 - \lambda u^2(x) \big]\,dx < 0 \quad \text{and} \quad J(t_u u) < 0. \tag{1.5} $$
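To make (1.5) concrete, the following worked computation assumes the cubic nonlinearity f(x, |u|)u = u³ of Example 1, so that F(x, u) = u⁴/4 in (1.4). The shorthand A(u) and B(u) is introduced here only for illustration.

```latex
% Assumption: F(x,u) = u^4/4 (cubic nonlinearity), kappa > 0.
% Shorthand: A(u) = \int_\Omega (|\nabla u|^2 - \lambda u^2)\,dx,  B(u) = \int_\Omega u^4\,dx > 0.
\begin{align*}
J(tu) &= \frac{t^2}{2}A(u) + \frac{\kappa t^4}{4}B(u), \\
\frac{d}{dt}J(tu) &= tA(u) + \kappa t^3 B(u) = 0
  \quad\Longrightarrow\quad t_u^2 = -\frac{A(u)}{\kappa B(u)}, \\
J(t_u u) &= -\frac{A(u)^2}{2\kappa B(u)} + \frac{A(u)^2}{4\kappa B(u)}
  = -\frac{A(u)^2}{4\kappa B(u)} < 0 .
\end{align*}
```

A positive root $t_u$ exists only when A(u) < 0, which is the first inequality in (1.5), and the value along the ray at $t_u$ is then strictly negative, which is the second.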

So any nonzero critical point has a J-value less than J(0) = 0. The above observation leads to

a local max-min principle to characterize solutions:

$$ \max_{S_k \subset H}\ \min_{u \in S_k} J(u) \tag{1.6} $$

where Sk is a subspace of H with co-dimension k = 0, 1, 2, .... Since a local minimum exists in

any subspace and its value is bounded from above by 0, the max-min problem (1.6) is always

theoretically and locally solvable. But a difficulty occurs in its numerical implementation, since

one cannot cover all subspaces Sk of co-dimension k. In this paper we show that, when our

nonlinear problem possesses certain symmetry, the above two-level max-min method can be

simplified to become a simple one-level orthogonal subspace minimization method (OSMM) for

finding multiple solutions. The basic idea is to use the property that when the problem has

certain symmetry, many different symmetric functional subspaces are orthogonal to each other

in L2 and H01 inner products. On the other hand, the Rayleigh-Ritz method (RRM) [33], a

simple orthogonal subspace minimization method

$$ u_k = \arg\min_{u \in [u_1, \dots, u_{k-1}]^\perp} R(u), \qquad R(u) = \frac{F(u)}{G(u)}, \qquad k = 1, 2, \dots, \tag{1.7} $$

is well-accepted in solving an eigen-solution problem of the form F′(u) = λG′(u) where F′, G′ are self-adjoint linear operators [31]. Note that [u1, ..., uk−1]⊥ is an orthogonal subspace to [u1, ..., uk−1] with co-dimension k − 1. So RRM simplifies the max-min characterization (1.6). But it will, in general, not work for nonlinear problems since ∇R(uk) ⊥ [u1, ..., uk−1]⊥ alone is not enough for uk to be a critical point of R.
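In the linear case, the scheme (1.7) can be tried out in a few lines. The sketch below is only an illustration under stated assumptions: a finite-dimensional setting with F(u) = uᵀAu and G(u) = uᵀu for a symmetric positive definite matrix A, the Euclidean inner product, and a plain projected gradient descent as the minimizer. The function name `rayleigh_ritz`, the fixed step size and the iteration count are choices made here, not taken from the paper.

```python
import numpy as np


def rayleigh_ritz(A, n_pairs, n_iter=3000, seed=0):
    """Minimize R(u) = u^T A u / u^T u on the orthogonal complement of the
    previously found minimizers, one eigenpair at a time.

    Assumes A is symmetric positive definite; the step 1/||A||_2 is a
    conservative choice made for this sketch.
    """
    rng = np.random.default_rng(seed)
    n = A.shape[0]
    step = 1.0 / np.linalg.norm(A, 2)
    U = np.zeros((n, 0))                 # columns: previously found u_1, ..., u_{k-1}
    values = []
    for _ in range(n_pairs):
        u = rng.standard_normal(n)
        u -= U @ (U.T @ u)               # start in [u_1, ..., u_{k-1}]^perp
        u /= np.linalg.norm(u)
        for _ in range(n_iter):
            r = u @ A @ u                # Rayleigh quotient of the unit vector u
            g = 2.0 * (A @ u - r * u)    # gradient of R at a unit vector
            g -= U @ (U.T @ g)           # keep the search inside the complement
            u = u - step * g
            u -= U @ (U.T @ u)           # remove rounding drift toward old solutions
            u /= np.linalg.norm(u)
        values.append(u @ A @ u)
        U = np.column_stack([U, u])
    return np.array(values), U


# Small test matrix: the 1D second-difference matrix of size 8.
A = 2.0 * np.eye(8) - np.eye(8, k=1) - np.eye(8, k=-1)
values, vectors = rayleigh_ritz(A, n_pairs=3)
print(values)
print(np.linalg.eigvalsh(A)[:3])
```

For a linear self-adjoint problem the gradient of R at a minimizer in the complement automatically has no component along the earlier eigenvectors, which is why this works here and why the same recipe needs extra structure (symmetry) in the nonlinear case.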

In this paper, we develop an OSMM through modifying RRM by introducing a support

spanned by previously found solutions for finding multiple solutions to the defocusing problem

(1.3). We first provide some mathematical background and description of our new method

in Section 2.1 and then in Section 2.2, we describe how we resolve an important numerical

implementation issue, i.e., how we remove the part of numerical errors sensitive to our algorithm

search by a projection to an infinite dimensional subspace. This is vitally important since we

are searching for unstable solutions. In Section 3, we carry out some numerical experiments on

some typical examples and display our numerical results by their profile and contour plots for

visualization, from which some observation on solution properties are presented.

2 An Orthogonal Subspace Minimization Method

2.1 Some Mathematical Background

Let L ⊂ H be a closed subspace and u′ be a local minimum point of J restricted to L⊥. So ∇J(u′) ⊥ L⊥, i.e., ∇J(u′) ∈ L. But there is no guarantee that ∇J(u′) = 0, so u′ need not be a critical point of J in H. In order for RRM or our OSMM to work, we need to use the symmetry of the

problem, a very common property in many application problems. To show the mathematical

background of our new approach, let us introduce two simple theorems.

Theorem 4. Let H be a Hilbert space, J ∈ C 1 (H, R) and S ⊂ H be a closed subspace s.t.

∇J : S → S. If u∗ is a critical point of J restricted to S, then u∗ is a critical point of J in H.

Proof. If u∗ ∈ S is a critical point of J restricted to S, then ∇J(u∗) ⊥ S. Since ∇J(u∗) ∈ S, we conclude ∇J(u∗) = 0.

How to choose a subspace S ⊂ H s.t. ∇J : S → S? From [24,25], let G be a compact group

of actions (linear isometrically) on H and SG be the symmetry invariant subspace defined by

G, i.e., u ∈ SG if and only if gu = u ∀g ∈ G. Then J is G-invariant, i.e., J(gu) = J(u) for

all u ∈ H, g ∈ G, implies that ∇J(u) ∈ SG , ∀u ∈ SG . By Theorem 4, we have the well-known

(Principle of Symmetric Criticality, Palais, 1979) [24]:

Theorem 5. Let H be a Hilbert space and G be a compact group of actions on H and J ∈

C 1 (H, R) be G-invariant. If u∗ is a critical point of J restricted to SG , then u∗ is a critical point

of J in H.
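The invariance property behind Theorem 5 is easy to observe on a discretized model. The sketch below assumes a 1D finite-difference analogue of (1.4) on (0, 1) with κ = 1 and F(x, u) = u⁴/4, and the two-element group G = {identity, g} with (gu)(x) = −u(1 − x), so that S_G consists of functions odd about x = 1/2. The grid size, the value of λ and all function names are hypothetical; the gradient is taken in the Euclidean inner product for simplicity.

```python
import numpy as np

n, lam = 31, 50.0                      # hypothetical grid size and parameter
h = 1.0 / (n + 1)
# Finite-difference matrix of -d^2/dx^2 with zero Dirichlet boundary values.
A = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2


def J(u):
    # Discrete energy: h * sum( |u'|^2 / 2 - lam u^2 / 2 + u^4 / 4 ).
    return h * np.sum(0.5 * u * (A @ u) - 0.5 * lam * u**2 + 0.25 * u**4)


def grad_J(u):
    # Gradient of J with respect to the Euclidean inner product.
    return h * (A @ u - lam * u + u**3)


def act(u):
    # Group element g: (g u)(x) = -u(1 - x).
    return -u[::-1]


def haar_projection(u):
    # Average over the two-element group G, which maps u into S_G.
    return 0.5 * (u + act(u))


rng = np.random.default_rng(0)
v = rng.standard_normal(n)
u_sym = haar_projection(v)             # an element of S_G
g_sym = grad_J(u_sym)

print(np.isclose(J(act(v)), J(v)))     # J is G-invariant
print(np.allclose(act(g_sym), g_sym))  # the gradient at u in S_G stays in S_G
```

For this small group the Haar projection is just an average of two terms; the difficulty discussed later in this section arises when the group is large, unknown, or not respected by the mesh.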

So theoretically a critical point can be found by a gradient method as a local minimum point

of J restricted to SG in four steps:


Step 1: Given ε > 0, initial guess u0 ∈ SG . Set k = 0;

Step 2: Compute dk = ∇J(uk ). If ∥dk ∥ < ε, then output uk and stop; else go to Step 3;

Step 3: Set uk+1 = uk − sk dk ∈ SG where sk > 0 satisfies the Armijo’s step-size rule:

$$ J(u_{k+1}) - J(u_k) < -\frac{1}{2}\|d_k\|^2; \tag{2.1} $$

Step 4: Set k = k + 1 and go to Step 2.

Theoretically the above algorithm is symmetry invariant and its convergence, with the Armijo’s

step-size rule, can be easily established if we do not consider numerical error. So a critical point

u∗ ∈ SG can be obtained. Motivated by LMM and RRM, we can also use the knowledge of

previously found solutions to introduce a support L for finding multiple critical points in an

order as described in the following flow chart:

Step 0: Given ε > 0. Set w0 = 0, n = 1;

Step 1: Set L = [w1 , ..., wn−1 ] where w1 , ..., wn−1 are previously found solutions. Choose a maximum symmetry invariant subspace (MSIS) SG ⊂ L⊥ = [w1 , ..., wn−1 ]⊥ and select an initial

guess u0 ∈ SG . Set k = 0;

Step 2: Compute dk = ∇J(uk ). If ∥dk ∥ < ε, then output wn = uk , n = n + 1 go to Step 1 else go

to Step 3;

Step 3: Set uk+1 = uk − sk dk ∈ SG where sk > 0 satisfies the Armijo’s step-size rule (2.1);

Step 4: Set k = k + 1 and go to Step 2.

So if the algorithm is successful, a new solution wn ∈ SG ⊂ L⊥ is found and we have J(wk ) ≤

J(wn ), k < n. The algorithm seems very simple, but actually contains a serious difficulty in its

implementation due to numerical errors in finding an unstable saddle point or a local minimum

point in a subspace not in H, since the algorithm does not enforce symmetry.

To address this issue, let ek denote the numerical error in approximating ∇J(uk) ∈ SG where uk ∈ SG and dk = ∇J(uk) + ek. In general, ek is not symmetric, i.e., ek ∉ SG, even if ek is small in norm. Without enforcing symmetry, it leads to the search direction dk ∉ SG and then uk+1 ∉ SG. Once the symmetry is broken, the symmetry invariance of the algorithm collapses and the algorithm is no longer self-contained in the subspace SG. If we look for a local minimum point in H, then there is no difficulty with such error since ek ∈ H anyway. Even if the symmetry is broken, the minimizer search still leads to a local minimum point in H. But if we look for a saddle point which is a local minimum of J in a subspace SG not in H, once the symmetry invariance of the algorithm is broken, the minimizer search may sense a descent direction outside

SG and follow it to a point outside SG with a smaller J-value. So this part of error will be

significantly increased and eventually lead to a local minimum point outside SG , either not a

solution or an unwanted solution. The Haar projection of dk onto SG can be used to enforce the

symmetry on dk [24]. But when a symmetry is very complex or unknown, the Haar projection

becomes much more complex or even impossible. In particular, if a finite element mesh used in

a computation does not match the symmetry, then it is impossible to do the Haar projection.

So far the literature does not provide any alternative. Next we propose a new method to handle

such numerical error.

2.2

Removal of Sensitive Error in Numerical Implementation

The basic idea of the new method consists of two parts:

(1) When a minimizer search is concerned, the space H can be divided into two portions: its "lower portion", which contains critical points with smaller J-values, denoted by L and also called a support, and its complement L⊥, called the "upper portion", which contains critical points with larger J-values. When descent directions into the "lower portion" are blocked, the minimizer search leads to a local minimum point w∗ in the "upper portion" L⊥. But in general this local minimum point w∗ in the "upper portion" need not be a critical point, since PL⊥(∇J(w∗)) = 0 but not necessarily PL(∇J(w∗)) = 0, where PA is the linear projection onto a subspace A;

(2) Select an MSIS SG ⊂ L⊥ and an initial guess u0 ∈ SG. Before the minimizer search, we partition the numerical error ek into $e_k = e_k^L + e_k^\perp$ where $e_k^L \in L$, $e_k^\perp \in L^\perp$. Since the minimizer search is attracted only by $e_k^L$ in the "lower portion" and may lead to much smaller J-values, but is not attracted by $e_k^\perp$ in the "upper portion", we get rid of $e_k^L$ and modify $\bar d_k = \nabla J(u_k)$ by its orthogonal projection dk onto the infinite-dimensional subspace L⊥, i.e., we do

$$ d_k = \bar d_k - P_L(\bar d_k). $$

Note that the remaining part $e_k^\perp \in L^\perp$ of the numerical error is not attractive to the minimizer search and is dominated by ∇J(uk) ∈ SG ⊂ L⊥, thus $e_k^\perp$ will not be enlarged and the approximation sequence {uk} stays close to SG. Consequently, the minimizer search finds an approximation of a local minimum point w∗ ∈ SG ⊂ L⊥ of J restricted to L⊥. So ∇J(w∗) ⊥ SG. On the other hand, since w∗ ∈ SG implies ∇J(w∗) ∈ SG, we conclude ∇J(w∗) = 0.
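The projection step above needs only a small linear solve, because L is spanned by finitely many previously found solutions. The sketch below assumes a discretized setting in which the inner product is given by a symmetric positive definite matrix M (for example a stiffness matrix for the H₀¹ inner product) and the support vectors are stored as the columns of W; the names `remove_support_component`, `W` and `M` are hypothetical. The support vectors need not be mutually orthogonal, which is handled by the Gram matrix.

```python
import numpy as np


def remove_support_component(d_bar, W, M):
    """Return d = d_bar - P_L(d_bar) for L = span(columns of W).

    P_L is the orthogonal projection in the inner product <u, v> = u^T M v.
    """
    if W.shape[1] == 0:                   # empty support: nothing to remove
        return d_bar.copy()
    gram = W.T @ M @ W                    # Gram matrix of the support vectors
    coeffs = np.linalg.solve(gram, W.T @ (M @ d_bar))
    return d_bar - W @ coeffs


# Illustrative data: a second-difference matrix as M and a random 2D support.
n = 20
M = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
rng = np.random.default_rng(1)
W = rng.standard_normal((n, 2))
d_bar = rng.standard_normal(n)
d = remove_support_component(d_bar, W, M)

print(np.abs(W.T @ M @ d).max())          # close to zero: d is M-orthogonal to L
```

Only the component of the computed direction in L is touched; whatever error lies in L⊥ is deliberately left in place, as described above.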

It leads to the following orthogonal subspace minimization method (OSMM)

Step 0: Given ε > 0. Set w0 = 0, n = 1;

Step 1: Set a support L = [w1, ..., wn−1] where w1, ..., wn−1 are previously found solutions. Identify an MSIS SG ⊂ L⊥ = [w1, ..., wn−1]⊥ and select an initial guess u0 ∈ SG. Set k = 0;

Step 2: Compute dk = ∇J(uk). If n > 1, set dk = dk − PL(dk). If ∥dk∥ < ε, then output wn = uk, set n = n + 1 and go to Step 1; else go to Step 3;

Step 3: Set uk+1 = uk − sk dk ∈ SG where sk > 0 satisfies the Armijo’s step-size rule (2.1);

Step 4: Set k = k + 1 and go to Step 2.
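The steps above can be sketched on a small model problem. The code below is a minimal illustration, not the authors' implementation: it assumes a 1D finite-difference analogue of (1.3) on (0, 1) with κ = 1, f(x, |u|)u = u³ and λ = 50 (so that π² < 4π² < λ < 9π²), uses the discrete H₀¹ inner product, and replaces rule (2.1) by the standard Armijo sufficient-decrease test with backtracking, in which the required decrease is scaled by the step size. All names are hypothetical. The symmetry is selected only through the initial guesses: sin(πx) for the first solution, and sin(2πx), which is odd about x = 1/2, for the second.

```python
import numpy as np

LAM = 50.0                      # assumed parameter value for this sketch
N = 63                          # interior grid points, symmetric about x = 1/2
H_STEP = 1.0 / (N + 1)
X = np.linspace(H_STEP, 1.0 - H_STEP, N)
# Finite-difference matrix of -d^2/dx^2 with zero Dirichlet boundary values.
A = (2.0 * np.eye(N) - np.eye(N, k=1) - np.eye(N, k=-1)) / H_STEP**2


def inner(u, v):
    """Discrete H_0^1 inner product, approximating the integral of u'v'."""
    return H_STEP * (u @ (A @ v))


def energy(u):
    """Discrete version of (1.4) with kappa = 1 and F(x, u) = u^4 / 4."""
    return H_STEP * np.sum(0.5 * u * (A @ u) - 0.5 * LAM * u**2 + 0.25 * u**4)


def gradient(u):
    """H_0^1 gradient d of the energy: solves A d = A u - LAM u + u^3."""
    return u - np.linalg.solve(A, LAM * u - u**3)


def osmm_search(u, support, eps=1e-4, max_iter=5000):
    """Steps 2 to 4: descent with the component in L = span(support) removed."""
    for _ in range(max_iter):
        d = gradient(u)
        for w in support:       # assumes the support vectors are H-orthogonal
            d = d - inner(d, w) / inner(w, w) * w
        norm_sq = inner(d, d)
        if np.sqrt(norm_sq) < eps:
            break
        s = 1.0
        # Backtrack until the standard Armijo sufficient-decrease test holds.
        while energy(u - s * d) - energy(u) > -0.5 * s * norm_sq and s > 1e-12:
            s *= 0.5
        u = u - s * d
    return u


# n = 1: empty support, S_G = H. n = 2: support [w1], odd initial guess.
w1 = osmm_search(np.sin(np.pi * X), support=[])
w2 = osmm_search(np.sin(2.0 * np.pi * X), support=[w1])
print(energy(w1), energy(w2))
```

In this sketch the oddness of the second solution is never enforced; only the component of the search direction along w1 is removed in each iteration, and the full (unprojected) gradient at the output can be inspected afterwards to confirm that a critical point, and not merely a constrained minimum, has been reached.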

Remark 2. (1) To identify an MSIS SG , in case it is not unique, one should choose an initial

guess in SG accordingly. Consequently it may lead to multiple solution branches;

(2) One branch may bifurcate to two or more branches. On the other hand, two or more

branches may merge to one branch. This is the complexity one has to face with multiple solution

problems. When multiple solution branches exist, one should choose those previously found

solutions accordingly to form L;

(3) Since we do not enforce symmetry, an MSIS is invisible and selected only by the symmetry of an initial guess. Even if an initial guess is selected in a smaller symmetry invariant subspace SG′ ⊂ SG, the algorithm will still lead to a critical point in an MSIS SG. To see this, we further partition $e_k^\perp = e_k^G + e_k'$ where $e_k' \in L^\perp \setminus S_G$ is dominated by ∇J(uk) and $e_k^G \in S_G$ is kept in the algorithm. Thus $e_k^G$ attracts the minimizer search and helps it to find a local minimum point w∗ in SG \ SG′. Note that in this case, there will also be a local minimum point in SG′ which is at an energy level above that of w∗. It means that the subspace L is not a maximum "lower portion" of the minimizer search in SG′, so it is not enough to support the minimizer search to find a local minimum point in SG′;

(4) There is only one difficulty left, that is, what should we do if $e_k^\perp$ is not dominated by ∇J(uk)? There are two possibilities:

(a) The numerical error $e_k^\perp$ is small and ∇J(uk) is smaller. That means uk is already a good approximation of a critical point. We may simply stop the iteration and output uk, or switch to a Newton method to accelerate its local convergence;

(b) The numerical error $e_k^\perp$ is not small but ∇J(uk) is small. Then we can reduce $e_k^\perp$ by using a finer mesh or a more symmetric mesh, or switch to a Newton method to speed up its local convergence, since a Newton method is insensitive to such numerical error [25].

A more detailed example will be presented in the last section to show how to identify an

MSIS SG ⊂ L⊥ in numerical computation.

(5) For MI(wn), since in OSMM the minimizer search is in an MSIS SG in the subspace L⊥ = [w1, ..., wn−1]⊥ but we do not enforce symmetry and allow $e_k^\perp \in L^\perp$ to exist, we cannot obtain a precise Morse index estimate as in Theorem 1. However, if codim(SG) = k ≥ n − 1 and {w1, ..., wn−1} are in the same solution branch, then we should have n − 1 ≤ MI(wn) ≤ k and MI(wi) ≤ MI(wn), J(wi) < J(wn), i = 1, ..., n − 1. So solutions are found in this order. But this order is not complete if w1, ..., wn are not in the same solution branch. For example, with L = [w1], by using two different MSIS SG and two different initial guesses in SG, we may find two different solutions $w_2^1, w_2^2$ with $J(w_1) < J(w_2^i)$ and $\mathrm{MI}(w_2^i) = 2$, i = 1, 2. But we cannot compare $J(w_2^1)$ with $J(w_2^2)$.

One may ask why we cannot use a Newton method from the very beginning. In addition to

the reasons mentioned in the Introduction, there is another one, i.e., the invariance of a Newton

method to symmetries is insensitive to numerical error [25]. So a Newton method may be easily

trapped in a symmetry invariant subspace defined by an initial guess whose symmetry is different

from that of a target solution. Note that u = 0 is a critical point in any symmetry invariant

subspace. This means that even when the symmetry of an initial guess is selected correctly, it

still needs to be scaled properly for a Newton method to stay away from the local basin of u = 0.

Without using a variational structure, it is hard to determine this scale.

3 Numerical Examples

In this section, by applying the OSMM developed in Section 2, we carry out numerical experiments on solving (1.3) and (1.2) for multiple solutions in the order of their energy values where

λ is (a) a fixed parameter and (b) an eigen-value. We find that the algorithm works well and is

efficient enough for us to carry out more numerical investigations on several solution properties

that are of interest.

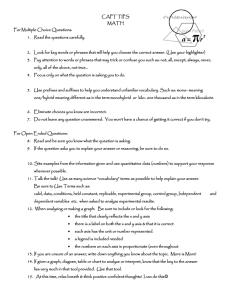

We use a symmetric finite element mesh as shown in Fig. 1 (a) or its locally refined mesh

such as the one shown in Fig. 2 (a) if necessary, so that the equation and the energy functional

J satisfy all symmetry invariant properties. Since NLS (1.1) is defined in the entire space while

equation (1.3) is defined in a bounded open domain, due to the conservation condition, the second

equation in (1.1), for (1.3) to be valid, its solutions must satisfy the localized property. So we will

closely check this property through solution contour plots with several different external trapping

potentials v(x). In addition, we note that numerical multiple solutions to focusing NLS, their

shape (peak profile), symmetry and other properties are available in the literature and some of

the solution properties are even mathematically verified; but multiple solutions to defocusing

NLS (1.2) and (1.3) are numerically captured and visualized for the first time. So we carried

out a large amount of numerical investigations on solutions’ localization property, peak profile,

pattern order and possible symmetry breaking phenomenon. We have selected a few typical cases

and present them here.

In order to clearly plot a solution profile and its contours in one figure we have shifted the

profile vertically in the figure. Since the problem is variational and u∗ is a solution if and only if J′(u∗) = 0, we use $\|d_k\|_{H^1} < \varepsilon$ to control the error in our numerical computation. The algorithm can always be followed, after $\|d_k\|_{H^1} < 10^{-2}$ (numerically it implies that the approximate solution is in a local basin of the exact target solution), by a Newton method to speed up local convergence. Since we want to see how accurate OSMM can go and OSMM turns out to be very

efficient in our numerical computation, we did not use a Newton method in this paper.

Example 1. (An autonomous defocusing case) In (1.3), we choose f(x, |u(x)|)u(x) = u³(x), κ = 1, λ = 200, Ω = (−0.5, 0.5)² and ε = 10⁻⁴ in the algorithm. For each fixed λ, there are only a finite number of solutions. The algorithm can then be described in detail as below:

1. The algorithm starts from L = {0}, SG = H, with u0 ∈ H any nonzero function. Denote the solution by w1, which is a positive function, even symmetric about the lines x = 0, y = 0, y = x, y = −x;

2. Then we set L = [w1] and let the MSIS SG be the set of all functions that are odd about one of the lines x = 0, y = 0, y = x, y = −x. It is clear that SG ⊂ L⊥. So choose u0 ∈ SG. Denote the solution by w2, which is odd symmetric about one of the four lines. Note that there are multiple solutions $w_2^1, ..., w_2^m$ here, since different initial guesses may be odd symmetric about different lines among the four. $w_2^1, ..., w_2^m$ are not necessarily orthogonal, but their Morse indices are the same;

3. Next we set L = [w1 , w2 ], denote MSIS SG to be the subspace of functions which are odd

symmetric about two lines, e.g. x = 0 and y = 0 or y = x and y = −x. It is clear that

SG ⊂ L⊥ . Choose u0 ∈ SG . Denote the solution by w3 that is odd symmetric about the two

lines of symmetry. Note that there may be multiple solutions due to different initial guess,

which may lead to multiple solution branches. But their Morse indices are the same;

4. Continue in this way. In order to find a solution with higher Morse index, we need to put

more previously found solutions from a branch in the support L;

5. The above algorithm finds a local minimum of J in MSIS SG ⊂ L⊥ . But SG is only an invisible

bridge not enforced. When an initial guess u0 is chosen in a smaller symmetry invariant

subspace (means more symmetric), due to computational error ek , the above algorithm still

leads to a solution in an MSIS in L⊥ .
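The steps above can be illustrated on a much smaller problem. The following is only a minimal sketch, not the authors' implementation: it assumes a one-dimensional finite-difference analogue on $(-0.5, 0.5)$ with energy $J(u) = \int \{\frac12 |u'|^2 - \frac12 \lambda u^2 + \frac14 u^4\}dx$ (the form of Example 3 with $v = 0$), a smaller value $\lambda = 60$, a fixed step size, and the discrete inner product $\langle u, v\rangle = h\,u^T(A + \lambda I)v$. The names `make_problem`, `project_out`, `orthonormalize` and `osmm` are hypothetical.

```python
import numpy as np

def make_problem(n=63, lam=60.0):
    """1D finite-difference analogue on (-0.5, 0.5) with zero Dirichlet data."""
    h = 1.0 / (n + 1)
    x = -0.5 + h * np.arange(1, n + 1)
    A = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2  # -d^2/dx^2
    M = A + lam * np.eye(n)  # assumed inner product: <u, v> = h * u^T M v
    return x, h, A, M

def energy(u, h, A, lam):
    """Discrete J(u) = sum of 1/2|u'|^2 - 1/2 lam u^2 + 1/4 u^4 (assumed form)."""
    return h * (0.5 * u @ (A @ u) - 0.5 * lam * (u @ u) + 0.25 * np.sum(u**4))

def inner(u, v, h, M):
    return h * (u @ (M @ v))

def project_out(d, basis, h, M):
    """Remove from d its components along an orthonormal basis of the support L."""
    for w in basis:
        d = d - inner(d, w, h, M) * w
    return d

def orthonormalize(vectors, h, M):
    """Gram-Schmidt in the assumed inner product."""
    basis = []
    for v in vectors:
        v = project_out(v, basis, h, M)
        basis.append(v / np.sqrt(inner(v, v, h, M)))
    return basis

def osmm(u0, support, h, A, M, lam, step=0.5, tol=1e-9, max_iter=2000):
    """Gradient descent on J restricted to the orthogonal complement of the support."""
    basis = orthonormalize(support, h, M)
    u = project_out(u0.copy(), basis, h, M)
    for _ in range(max_iter):
        residual = A @ u - lam * u + u**3      # discrete J'(u)
        d = np.linalg.solve(M, residual)       # gradient in the assumed inner product
        d = project_out(d, basis, h, M)        # drop the component along L
        if np.sqrt(inner(d, d, h, M)) < tol:
            break
        u = u - step * d
    return u

lam = 60.0
x, h, A, M = make_problem(lam=lam)
w1 = osmm(np.cos(np.pi * x), [], h, A, M, lam)            # step 1: L = {0}
w2 = osmm(np.sin(2 * np.pi * x), [w1], h, A, M, lam)      # step 2: L = [w1], odd guess
print(energy(w1, h, A, lam), energy(w2, h, A, lam))
```

In this sketch the odd symmetry of `w2` is never enforced; the odd initial guess is already orthogonal to the even `w1`, and the projection only removes the error component along `w1`.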

At each point $u_k$, $d_k = \nabla J(u_k)$ is computed by solving a linear elliptic PDE of the form

$$-\Delta d_k(x) + \lambda d_k(x) = -\Delta u_k(x) + \lambda u_k(x) - \kappa F(x, u_k(x))$$

subject to the zero Dirichlet boundary condition. Many numerical methods are available in the literature for solving such a problem, and this is where numerical error is generated. We use a finite element method by calling the Matlab subroutine ASSEMPDE. A symmetric finite element mesh is generated by the Matlab mesh generators INITMESH and REFINEMESH and is shown in Fig. 1 (a).
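As a rough illustration of this linear solve, the sketch below replaces the finite element discretization by a 5-point finite-difference Laplacian on a small uniform grid; this substitution is an assumption made only to keep the example short. The nonlinear term $F$ is passed in as a callable because its definition lies outside this section, and the names `laplacian_2d` and `gradient_direction` are hypothetical.

```python
import numpy as np

def laplacian_2d(n, h):
    """Dense 5-point discretization of -Laplace on an n-by-n interior grid
    with zero Dirichlet boundary values."""
    T = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
    I = np.eye(n)
    return np.kron(T, I) + np.kron(I, T)

def gradient_direction(u, X, Y, lam, kappa, F, A):
    """Solve (A + lam*I) d = (A + lam*I) u - kappa * F(x, u) for d,
    the discrete counterpart of the linear elliptic problem in the text."""
    M = A + lam * np.eye(A.shape[0])
    rhs = M @ u - kappa * F(X, Y, u)
    return np.linalg.solve(M, rhs)

# Small grid on (-0.5, 0.5)^2; all values below are illustrative choices.
n = 15
h = 1.0 / (n + 1)
pts = -0.5 + h * np.arange(1, n + 1)
X, Y = (g.ravel() for g in np.meshgrid(pts, pts, indexing="ij"))
A = laplacian_2d(n, h)
u = np.cos(np.pi * X) * np.cos(np.pi * Y)

d = gradient_direction(u, X, Y, lam=200.0, kappa=1.0,
                       F=lambda X, Y, U: U**3, A=A)
d_linear = gradient_direction(u, X, Y, lam=200.0, kappa=1.0,
                              F=lambda X, Y, U: 0.0 * U, A=A)
```

When the nonlinear term vanishes, the solve simply returns `u` itself, which gives a quick sanity check of the discretization.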

The first 7 numerical solutions $u_1, \ldots, u_7$ are shown in Fig. 1 (b)∼(h). Their energy values are $J(u_i)$ = −5,290.6; −3,262.3; −3,262.3; −2,886.3; −2,886.3; −1,853.9; −1,153.0; their norms are $\|u_i\|$ = 63.7635, 73.6002, 73.6002, 71.4513, 71.4513, 74.1284, 69.2141; and their supports are $L = [0], [u_1], [u_1], [u_1], [u_1], [u_1, u_2, u_3], [u_1, u_4, u_5]$. We have also solved the same problem with larger domains and different $\lambda$ values. Since the external trapping potential $v = 0$, the solution contours spread out over the whole domain and remain dense near the boundary, which indicates that the solutions' peak(s) will not concentrate in this domain if the domain becomes larger. Thus the solutions do not show the localized property.

In our numerical computation, we have tried using: (1) a more symmetric initial guess in $L^\perp$, which leads to the same solution in $L^\perp$ with less symmetry; this can be explained by Remark 2 (3); (2) asymmetric initial guesses in $L^\perp$; remarkably, as long as the support $L$ is sufficient, they still lead to those solutions. However, we have not so far been able to establish a mathematical justification for this case.

Figure 1: A typical symmetric finite element mesh (a). The first 7 numerical solutions $u_1, \ldots, u_7$ in (b)∼(h) to Example 1. The contours do not show the localized property.

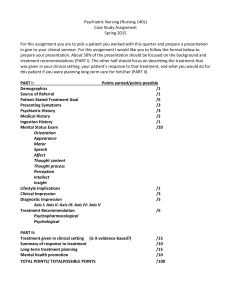

Example 2. (A non-autonomous defocusing case) In (1.3), we set $f(x, |u(x)|)u(x) = |x|^r u^3(x)$ with $r = 3$, $\kappa = 1$, $\lambda = 100$, $\Omega = (-1, 1)^2$ and $\varepsilon = 10^{-4}$ in the algorithm.

The first 6 numerical solutions $u_1, \ldots, u_6$ are shown in Fig. 2 (b)∼(g). Their energy values are $J(u_i)$ = −130,550; −32,737; −32,737; −32,286; −32,286; −13,206; their solution norms are $\|u_i\|_2$ = 389.4356, 224.2219, 224.2219, 225.5204, 225.5201, 173.1593; and their supports are $L = [0], [u_1], [u_1], [u_1], [u_1], [u_1, u_2, u_3], [u_1, u_4, u_5]$. We note that the solution peaks are sharp, narrow and very close to each other. When computing a sign-changing solution, a uniformly meshed finite element grid loses accuracy, so local mesh refinement in a small region near the center (0, 0) has to be used to maintain computational accuracy. A locally refined finite element mesh generated by the Matlab mesh generators INITMESH and REFINEMESH is shown in Fig. 2 (a). Although the peaks are sharp and narrow and the solutions look localized, they actually are not, since their contours still spread out over the whole domain and are not sparse enough near the boundary. We also note that no symmetry breaking takes place, in contrast to the focusing counterpart, such as the Hénon equation, where solution peaks are apart and symmetry breaking may take place.

Figure 2: A typical locally refined finite element mesh (a). The first 6 numerical solutions $u_1, \ldots, u_6$ in (b)∼(g) to Example 2 (profiles plotted over the x- and y-axes, with u on the vertical axis).

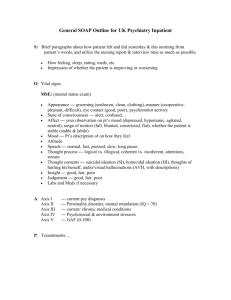

Example 3. (A defocusing case with a symmetric external trapping potential) In (1.2) we choose a nonzero symmetric external trapping potential $v(x) = r|x|^2$, $r = 200$, $\kappa = 1$, $\lambda = 100$, $f(x, |u(x)|)u(x) = u^3(x)$, $\Omega = (-1, 1)^2$ and $\varepsilon = 10^{-4}$. The variational energy of $u$ is

$$J(u) = \int_\Omega \Big\{ \frac{1}{2}[v(x) - \lambda]u^2(x) + \frac{1}{2}|\nabla u(x)|^2 + \frac{1}{4}u^4(x) \Big\}\,dx.$$

So for $u \in H$ to be a solution, we must have

$$\int_\Omega [v(x) - \lambda]u^2(x)\,dx < 0, \qquad (3.2)$$

which will force the peaks of $u$ to concentrate on an area where $v(x)$ is small. We note that when $r$ is larger or $\lambda$ is smaller, inequality (3.2) will force the peaks of $u$ to concentrate on a smaller area where $v(x)$ is smaller. So the solution peaks are localized in that area. For each $\lambda$, there are only a finite number of solutions. The first 7 numerical solutions $u_1, \ldots, u_7$ are shown in Fig. 3. Their energy values are $J(u_i)$ = −1,046.0; −412.0963; −412.0963; −410.896; −410.896; −57.8304; −56.439; their solution norms are $\|u_i\|$ = 20.695, 28.2988, 28.2988, 28.363, 28.363, 23.2704, 23.3154; and their supports are $L = [0], [u_1], [u_1], [u_1], [u_1], [u_1, u_2, u_3], [u_1, u_4, u_5]$. The solution contours do not appear near the boundary and thus clearly show the localized property.
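Inequality (3.2) can be recovered in one step from the energy above. The short derivation below is a sketch that assumes $u \neq 0$ is a critical point of $J$ in $H$ (so that $J'(u)u = 0$) and uses no other assumptions.

```latex
\[
0 = J'(u)u
  = \int_\Omega \Big\{ [v(x)-\lambda]u^2(x) + |\nabla u(x)|^2 + u^4(x) \Big\}\,dx
\quad\Longrightarrow\quad
\int_\Omega [v(x)-\lambda]u^2(x)\,dx
  = -\int_\Omega \Big\{ |\nabla u(x)|^2 + u^4(x) \Big\}\,dx < 0 .
\]
```

Since the right-hand side is strictly negative, $u^2$ must carry most of its weight where $v(x) < \lambda$, which is the concentration effect described in the text.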

Figure 3: The first 7 numerical solutions $u_1, \ldots, u_7$ to Example 3, panels (a)∼(g) (profiles plotted over the x- and y-axes, with u on the vertical axis). The solutions are localized.

Example 4. (A defocusing case with a less symmetric external trapping potential) To show that our method works for problems with less symmetry, in (1.2) we keep all parameters the same as in Example 3 except for a less symmetric external trapping potential $v(x) = r(\frac{2}{3}x_1^2 + \frac{4}{3}x_2^2)$, $r = 200$. So for $u \in H$ to be a solution, inequality (3.2) will force the peaks of $u$ to concentrate on an elliptical area where $v(x)$ is smaller. Thus the localized property should also be observed. This analysis is confirmed by our numerical computation. The first 4 numerical solutions $u_1, \ldots, u_4$ are shown in Fig. 4. Their energies are $J(u_i)$ = $-1.1283 \times 10^3$, −645.3762, −268.2216, −59.8842; their solution norms are $\|u_i\|$ = 23.2462, 29.4751, 29.2566, 24.0469; and their supports are $L = [0], [u_1], [u_1], [u_1, u_2, u_3]$.

Example 5. As the last example for PDE (1.2), we choose $v(x) = r(\sin^2(2\pi x_1) + \sin^2(2\pi x_2))$ with $r = 100$, all other terms remaining the same as in Example 3.

The first 7 numerical solutions $u_1, \ldots, u_7$ are shown in Fig. 5. Their energy values are $J(u_i)$ = −764.4081, −460.3365, −460.3365, −454.8088, −454.8088, −250.1220, −229.2876; their norms are $\|u_i\|$ = 44.0352, 37.7503, 37.7503, 37.7907, 37.7907, 32.2368, 32.5469; and their supports are $L = [0], [u_1], [u_1], [u_1], [u_1], [u_1, u_2, u_3], [u_1, u_4, u_5]$. The solution profiles show an interesting phenomenon, i.e., solutions of higher order (MI) have fewer peaks, in significant contrast to all previous examples.

Figure 4: The profiles of the first 4 numerical solutions $u_1, \ldots, u_4$ for Example 4, panels (a)∼(d).

Figure 5: The profiles of the first 7 numerical solutions $u_1, \ldots, u_7$ to Example 5, panels (a)∼(g).

Example 6. (A defocusing eigen problem without external trapping potential) Next we let $\lambda$ vary as an eigenvalue and solve the defocusing eigen problem (1.2) for multiple solutions $(\lambda, u)$. We choose $v(x) = 0$, $\kappa = 1$, $f(x, u(x))u(x) = u^3(x)$, $\Omega = (-0.5, 0.5)^2$ and $\varepsilon = 10^{-4}$ in the algorithm, then write its energy $J(\lambda, u) = F(u) - \lambda G(u)$ where

$$F(u) = \int_\Omega \Big[ \frac{1}{2}|\nabla u(x)|^2 + \frac{1}{4}u^4(x) \Big]\,dx \quad\text{and}\quad G(u) = \frac{1}{2}\int_\Omega u^2(x)\,dx.$$

Then $(\lambda, u)$ solves (1.2) if and only if $J_u(\lambda, u) = 0$, or $F'(u) = \lambda G'(u)$. Since the other partial derivative condition $J_\lambda(\lambda, u) = 0$ is not involved, the problem is not variational. A normalization condition $G(u) = \frac{1}{2}\int_\Omega u^2(x)\,dx = c$ is usually used in the literature to make the problem variational. Under this constraint, the eigenvalue $\lambda$ can be viewed as a Lagrange multiplier and a Lagrange functional can be used to solve for its multiple critical points $(\lambda, u)$, see [32]. But since a standing (solitary) wave solution automatically satisfies the conservation condition in (1.1), the normalization condition $\int_\Omega u^2(x)\,dx = c$ is not necessary for NLS, see [4]. So instead of using a Lagrange multiplier method as in [32], we propose to solve the problem on its energy level, i.e., we fix its energy level

$$J(\lambda, u) = F(u) - \lambda G(u) = -C$$

where $C > 0$ in view of the inequalities in (1.5). For each $u$ at that energy level, we have

$$\lambda(u) = \frac{F(u) + C}{G(u)} = \frac{\int_\Omega [\,|\nabla u(x)|^2 + \frac{1}{2}u^4(x)\,]\,dx + 2C}{\|u\|_{L^2}^2}.$$

Then it is clear that $\lambda(u)$ has the same symmetry invariance property as $F(u)$, and

$$\lambda'(u) = \frac{F'(u) - \lambda(u)G'(u)}{G(u)} = 0 \iff J_u(\lambda, u) = F'(u) - \lambda G'(u) = 0,$$

i.e., $(\lambda, u)$ is an eigen-solution to (1.2). So we focus on finding multiple critical points of $\lambda$. Also, by the Poincaré inequality, we see that for each $M > 0$ there is $d > 0$ such that $\lambda(u) > M$ whenever $\|u\| < d$. So a global minimum of $\lambda$ in $H$ exists. Thus a local minimum of $\lambda$ in each subspace exists, and we can use our method to find multiple critical points of $\lambda$ for each $C \geq 0$ in the order of their $\lambda$-values. Since this approach is new, we choose $C = 900$ and $C = 10$ to see how the shapes of the eigen functions differ at different energy levels.
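To make the functional $\lambda(u)$ concrete, here is a small sketch that evaluates it on a uniform grid for $v = 0$. It is an illustration under stated assumptions, not the authors' code: forward differences and plain grid sums stand in for the finite element quadrature, the test function $u = \cos(\pi x)\cos(\pi y)$ is an arbitrary choice that vanishes on the boundary, and the name `lambda_of_u` is hypothetical.

```python
import numpy as np

def lambda_of_u(U, h, C):
    """Evaluate lambda(u) = (F(u) + C) / G(u) for grid values U that vanish
    on the boundary, with F and G as defined in Example 6 (v = 0)."""
    ux = np.diff(U, axis=0) / h          # forward differences in x
    uy = np.diff(U, axis=1) / h          # forward differences in y
    dirichlet = h**2 * (np.sum(ux**2) + np.sum(uy**2))
    F = 0.5 * dirichlet + 0.25 * h**2 * np.sum(U**4)
    G = 0.5 * h**2 * np.sum(U**2)
    return (F + C) / G, F, G

N = 65                                   # grid points per direction, boundary included
pts = np.linspace(-0.5, 0.5, N)
h = pts[1] - pts[0]
X, Y = np.meshgrid(pts, pts, indexing="ij")
U = np.cos(np.pi * X) * np.cos(np.pi * Y)

C = 10.0
lam, F, G = lambda_of_u(U, h, C)
lam_small, _, _ = lambda_of_u(0.01 * U, h, C)

# By construction F - lam * G equals -C, i.e. u sits on the fixed energy level.
print(lam, F - lam * G, lam_small)
```

Scaling `U` toward zero makes the term $2C/\|u\|_{L^2}^2$ dominate, so `lam_small` is far larger than `lam`; this is the blow-up near $u = 0$ that the text uses to argue that a minimum of $\lambda$ exists.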

Figure 6: The profiles of the first 8 numerical eigen functions $u_1, \ldots, u_8$ for Example 6 with $v(x) = 0$ at the energy level $-C = -900$, panels (a)∼(h). The contours do not show the localized property.

The first 8 numerical eigen functions $u_1, \ldots, u_8$ at the energy level $-C = -900$ are shown in Fig. 6 with their eigenvalues $\lambda_i$ = 98.8414, 131.6574, 131.6574, 137.3578, 137.3578, 164.7840, 190.5393, 292.7556, norms $\|u_i\|$ = 35.0447, 49.9499, 49.9499, 49.3652, 49.3652, 60.1673, 65.3372, 88.2909 and supports $L = [0], [u_1], [u_1], [u_1], [u_1], [u_1, u_2, u_3], [u_1, u_4, u_5], [u_1, u_4, u_5, u_6, u_7]$.

The first 8 numerical eigen functions $u_1, \ldots, u_8$ at the energy level $-C = -10$ are shown in Fig. 7 with their eigenvalues $\lambda_i$ = 28.9900, 58.7792, 58.7792, 59.5321, 59.5321, 88.6519, 109.1362, 208.8727, norms $\|u_i\|$ = 9.3864, 14.6748, 14.6748, 14.1167, 14.1167, 18.4019, 19.8152, 27.4468 and supports $L = [0], [u_1], [u_1], [u_1], [u_1], [u_1, u_2, u_3], [u_1, u_4, u_5], [u_1, u_4, u_5, u_6, u_7]$.

Comparing those two cases, we see that the tops of the solution peaks are flatter when $C$ is larger. From their contour plots we see that such eigen functions do not show the localized property, which agrees with the fact that the external trapping potential $v(x) = 0$.

Figure 7: The first 8 numerical eigen functions $u_1, \ldots, u_8$ for Example 6 with $v(x) = 0$ at the energy level $-C = -10$, panels (a)∼(h) (profiles plotted over the x- and y-axes, with u on the vertical axis). The contours do not show the localized property.

Example 7. (A defocusing eigen problem with external trapping potential) We choose $v(x) = r(x_1^2 + x_2^2)$ with $r = 200$ at the energy level $-C = -900$. All other parameters remain the same as in Example 6. So the potential function is symmetric. We have

$$\lambda(u) = \frac{F(u) + C}{G(u)} = \frac{\int_\Omega [\,|\nabla u(x)|^2 + v(x)u^2(x) + \frac{1}{2}u^4(x)\,]\,dx + 2C}{\|u\|_{L^2}^2}.$$

It has the same symmetry property as that of $\lambda$ in Example 6 with $v(x) = 0$. So our method can be applied to find its multiple eigen-solutions. The first 8 numerical eigen functions are shown in Fig. 8 with their eigenvalues $\lambda_i$ = 96.6997, 118.4102, 118.4102, 118.4792, 118.4792, 142.9253, 143.2324, 195.1539, norms $\|u_i\|$ = 19.9896, 34.9773, 34.9773, 35.1204, 35.1204, 47.3457, 47.9260, 68.4491 and supports $L = [0], [u_1], [u_1], [u_1], [u_1], [u_1, u_2, u_3], [u_1, u_4, u_5], [u_1, u_4, u_5, u_6, u_7]$. Their contour plots show a clear localized property.

Figure 8: The first 8 numerical eigen functions $u_1, \ldots, u_8$ for Example 7 with $v(x) = 200(x_1^2 + x_2^2)$ at the energy level $-C = -900$, panels (a)∼(h) (profiles plotted over the x- and y-axes, with u on the vertical axis). The contours clearly show the localized property.

According to our numerical experience in the above examples, when the domain is substantially larger, we find that (1) if solutions do not have the "localized property", then the magnitudes of the solutions become too large for us to carry out numerical computation; (2) if solutions do have the "localized property", the solutions' peaks remain in a relatively small region, where their profile and contour plots become too dense for one to visualize the differences among those multiple solutions' patterns, symmetry and other properties, even though those properties are of interest for understanding multiple solution problems. We also note that some multiple solutions in a bounded domain are created by the geometry of the boundary of the domain, such as a square or rectangular domain. The number of multiple solutions will be reduced if a circular domain is used. As long as the domain is not too small, the size of the domain does not play a crucial role.

Conclusion Remark. In this paper, by using the knowledge of previously found solutions, a simple and efficient numerical orthogonal subspace minimization method has been developed for finding multiple (eigen) solutions to the defocusing nonlinear Schrödinger equation with symmetry. Its mathematical justification is provided. Many numerical multiple (eigen) solutions are computed in the order of their variational energy levels and visualized for the first time. Some interesting differences in solution behavior, such as solution profiles and the symmetry breaking phenomenon, can be observed by comparing the current defocusing cases with their focusing counterparts, such as the Lane-Emden-Fowler and Hénon equations and their corresponding eigen solution problems. From the numerical solutions, we also observe that the external trapping potential $v(x)$ plays an important role in determining the localized property, the shape and the sequential order of patterns of solutions. Since those solutions are numerically computed and visualized for the first time, many of their properties are still unknown and remain to be verified. As for algorithm development, it is interesting to compare OSMM with the projected gradient methods [5] for constrained minimization problems in the literature, where the gradient is projected so that the approximate solution at each iteration always satisfies the constraints of the problem, and the methods focus on finding one solution. In OSMM, by contrast, the gradient is projected onto the subspace spanned by previously found solutions so that the sensitive part of numerical errors can be removed. Although the purposes are different, OSMM can be viewed as one of the projected gradient methods in a broad sense. So the interested reader may refer to [5] for the convergence properties of projected gradient methods. We are also working on designing multi-level methods for finding multiple solutions, in order, to defocusing type problems without using symmetry. In our experience, when the problem has symmetries, OSMM is clearly much simpler and more efficient.

Acknowledgement. The authors thank two reviewers for their comments and also Profs.

M. Pilant and J. Whiteman for their suggestions to improve the paper writing.

References

[1] G. P. Agrawal, Nonlinear Fiber Optics, fifth edition, Academic Press 2012.

[2] W. Bao and Q. Du, Computing the ground state solution of Bose-Einstein condensates by

normalized gradient flow, SIAM J. Sci. Comp., 25(2004) 1674-1697.

[3] W. Bao, H. Wang and P. A. Markowich, Ground, symmetric and central vortex states in

rotating Bose-Einstein condensates, Comm. Math. Sci. 3(2005) 57-88.

[4] T. Bodurov, Derivation of the nonlinear Schrödinger equation from first principles, Annales

de la Fondation Louis de Broglie, 30(2005) 1-9.

[5] P. Calamai and J. Moré, Projected gradient methods for linearly constrained problems, Math. Program., 39(1987) 93-116.

[6] T.F.C. Chan and H.B. Keller, Arc-length continuation and multi-grid techniques for nonlinear elliptic eigenvalue problems, SIAM J. Sci. Stat. Comput., 3(1982) 173-194.

[7] S.-L. Chang and C.-S. Chien, Computing multiple peak solutions for Bose-Einstein condensates in optical lattices, Comp. Phy. Comm., 180 (2009) 926-947.

[8] G. Chen, B. G. Englert and J. Zhou, Convergence analysis of an optimal scaling algorithm

for semilinear elliptic boundary value problems, Contemporary Math., 357(2004) American

Mathematical Society, 69-84.

[9] G. Chen, W.M. Ni and J. Zhou, Algorithms and visualization for solutions of nonlinear

elliptic equation, Part I: Dirichlet Problem, Int. J. Bifur. Chaos, 7(2000) 1565-1612.

[10] Garcia-Ripoll and Perez-Garcia, Optimizing Schrödinger Functionals using Sobolev gradients: applications to quantum mechanics and nonlinear optics, SIAM J. Sci. Comp., 23

(2001) 1315-1333.

[11] J.J. Garcia-Ripoll, V.V. Konotop, B.M. Malomed and V.M. Perez-Garcia, A quasi-local Gross-Pitaevskii equation for attractive Bose-Einstein condensate, Math. Comp. in Simulation, 62(2003) 21-30.

[12] E. P. Gross, Structure of a quantized vortex in boson systems, Nuovo Cimento, 20(1961)

454-477.

[13] P. Kazemi, M. Eckart, Minimizing the Gross-Pitaevskii energy functional with the Sobolev

gradient - analytical and numerical results, Int. J. Comp. Methods, 7 (2010) 453-475.

[14] S. Komineas and N. Papanicolaou, Static solitary waves in axisymmetric Bose-Einstein

condensates, Laser Physics, 14(2004) 571-574.

[15] T.I. Lakoba and J. Yang, A generalized Petviashvili iteration method for scalar and vector

Hamiltonian equations with arbitrary form of nonlinearity, J. Comp. Phys., 226 (2007)

1668-1692.

[16] T.I. Lakoba and J. Yang, A mode elimination technique to improve convergence of iteration

methods for finding solitary waves, J. Comp. Phys., 226 (2007) 1693-1709.

[17] Y. Li and J. Zhou, A minimax method for finding multiple critical points and its applications

to semilinear elliptic PDEs, SIAM J. Sci. Comp., 23(2001), 840-865.

[18] Y. Li and J. Zhou, Convergence results of a local minimax method for finding multiple

critical points, SIAM J. Sci. Comp., 24(2002), 865-885.

[19] L.P. Pitaevskii, Vortex lines in an imperfect Bose gas, Soviet Phys. JETP, 13(1961) 451-454.

[20] P. Rabinowitz, Minimax Method in Critical Point Theory with Applications to Differential

Equations, CBMS Regional Conf. Series in Math., No. 65, AMS, Providence, 1986.

[21] M. Schechter, Linking Methods in Critical Point Theory, Birkhauser, Boston, 1999.

[22] M. Struwe, Variational Methods, Springer, 1996.

[23] E. W. C. Van Groesen and T. Nijmegen, Continuation of solutions of constrained extremum

problems and nonlinear eigenvalue problems, Math. Model., 1(1980) 255-270.

[24] Z.Q. Wang and J. Zhou, An efficient and stable method for computing multiple saddle points

with symmetries, SIAM J. Num. Anal., 43(2005), 891-907.

[25] Z.Q. Wang and J. Zhou, A local minimax-Newton method for finding critical points with

symmetries, SIAM J. Num. Anal., 42(2004), 1745-1759.

[26] T. Watanabe, K. Nishikawa, Y. Kishimoto and H. Hojo, Numerical method for nonlinear

eigenvalue problems, Physica Scripta., T2/1 (1982) 142-146.

[27] M. Willem, Minimax Theorems, Birkhauser, Boston, 1996.

[28] J. Yang, Newton-conjugate-gradient methods for solitary wave computations, J. Comp.

Phys., 228 (2009) 7007-7024.

[29] J. Yang and T.I. Lakoba, Accelerated imaginary-time evolution methods for the computation

of solitary waves, Stud. Appl. Math., 120 (2008) 265-292.

[30] X. Yao and J. Zhou, A minimax method for finding multiple critical points in Banach spaces

and its application to quasilinear elliptic PDE, SIAM J. Sci. Comp., 26(2005), 1796-1809.

[31] X. Yao and J. Zhou, Numerical methods for computing nonlinear eigenpairs. Part I. iso-homogenous cases, SIAM J. Sci. Comp., 29 (2007) 1355-1374.

[32] X. Yao and J. Zhou, Numerical methods for computing nonlinear eigenpairs. Part II. non

iso-homogenous cases, SIAM J. Sci. Comp., 30(2008) 937-956.

[33] E. Zeidler, Ljusternik-Schnirelman theory on general level sets, Math. Nachr., 129 (1986)

238-259.

[34] J. Zhou, A local min-orthogonal method for finding multiple saddle points, J. Math. Anal.

Appl., 291(2004), 66-81.

[35] J. Zhou, Instability analysis of saddle points by a local minimax method, Math. Comp.,

74(2005), 1391-1411.

[36] J. Zhou, Global sequence convergence of a local minimax method for finding multiple

solutions in Banach spaces, Num. Func. Anal. Optim., 32(2011) 1365-1380.
