Lecture 4: Auditory Perception

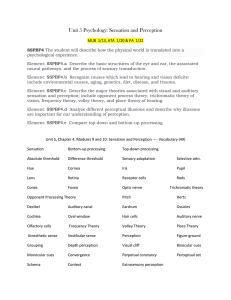

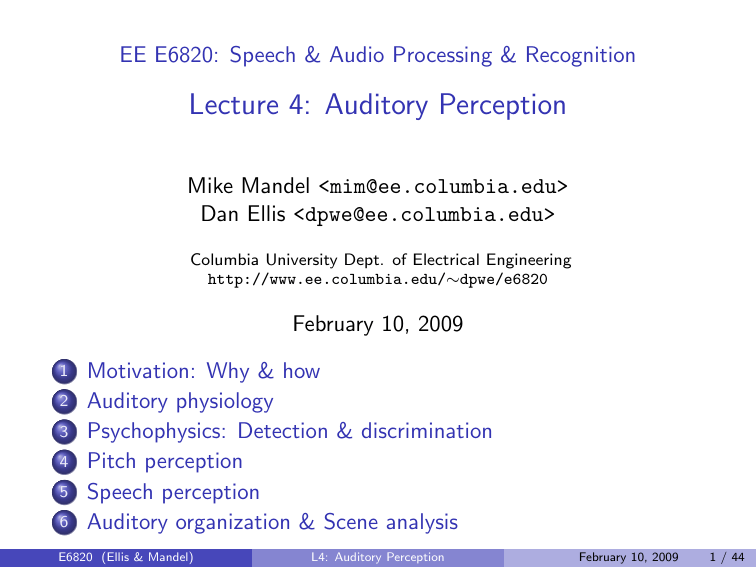

EE E6820: Speech & Audio Processing & Recognition
Lecture 4: Auditory Perception
Mike Mandel <mim@ee.columbia.edu>, Dan Ellis <dpwe@ee.columbia.edu>
Columbia University Dept. of Electrical Engineering
http://www.ee.columbia.edu/~dpwe/e6820
February 10, 2009

Outline
1. Motivation: Why & how
2. Auditory physiology
3. Psychophysics: Detection & discrimination
4. Pitch perception
5. Speech perception
6. Auditory organization & Scene analysis

Why study perception?
Perception is messy: can we avoid it? No!
- Audition provides the ‘ground truth’ in audio
  - what is relevant and irrelevant
  - subjective importance of distortion (coding etc.)
  - (there could be other information in sound...)
- Some sounds are ‘designed’ for audition
  - co-evolution of speech and hearing
- The auditory system is very successful
  - we would do extremely well to duplicate it
- We are now able to model complex systems
  - faster computers, bigger memories

How to study perception?
Three different approaches:
- Analyze the example: physiology
  - dissection & nerve recordings
- Black box input/output: psychophysics
  - fit simple models of simple functions
- Information processing models
  - investigate and model complex functions, e.g.
    scene analysis, speech perception

Section 2: Auditory physiology

Physiology
Processing chain from air to brain:
Outer ear → Middle ear → Inner ear (cochlea) → Auditory nerve → Midbrain → Cortex
- Study via:
  - anatomy
  - nerve recordings
- Signals flow in both directions

Outer & middle ear
[Figure: the ear, labeled Pinna, Ear canal, Eardrum (tympanum), Middle ear bones, Cochlea (inner ear)]
- Pinna ‘horn’
  - complex reflections give spatial (elevation) cues
- Ear canal
  - acoustic tube
- Middle ear
  - bones provide impedance matching

Inner ear: Cochlea
[Figure: traveling wave on the Basilar Membrane (BM), driven at the oval window (from ME bones); axes: resonant frequency (16 kHz to 50 Hz) vs. position (0 to 35 mm)]
- Mechanical input from middle ear starts traveling wave moving down Basilar membrane
- Varying stiffness and mass of BM results in continuous variation of resonant frequency
- At resonance, traveling wave energy is dissipated in BM vibration
  - Frequency (Fourier) analysis

Cochlea hair cells
- Ear converts sound to BM motion
  - each point on BM corresponds to a frequency
[Figure: cochlea cross-section, labeled Tectorial membrane, Basilar membrane, Auditory nerve, Inner Hair Cell (IHC), Outer Hair Cell (OHC)]
- Hair cells on BM convert motion into nerve impulses (firings)
- Inner Hair Cells detect motion
- Outer Hair Cells? Variable damping?
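
A minimal sketch of the place-to-frequency map from the cochlea slide, assuming the resonant frequency falls log-linearly from 16 kHz at position 0 to 50 Hz at 35 mm (the endpoints shown in the figure). The interpolation law and the names `resonant_frequency_hz` and `position_mm` are illustrative assumptions, not part of the lecture; measured cochlear maps are not exactly log-linear.

```python
import math

# Endpoints read off the slide's figure. The log-linear interpolation
# between them is an assumption made for illustration only.
F_BASE_HZ = 16000.0    # resonant frequency at position 0 (oval-window end)
F_APEX_HZ = 50.0       # resonant frequency at the far end of the BM
BM_LENGTH_MM = 35.0    # length of the basilar membrane on the slide


def resonant_frequency_hz(pos_mm: float) -> float:
    """Map a position along the BM (mm) to an assumed resonant frequency (Hz)."""
    if not 0.0 <= pos_mm <= BM_LENGTH_MM:
        raise ValueError("position must lie between 0 and 35 mm")
    fraction = pos_mm / BM_LENGTH_MM
    return F_BASE_HZ * (F_APEX_HZ / F_BASE_HZ) ** fraction


def position_mm(frequency_hz: float) -> float:
    """Inverse map: frequency (Hz) to assumed position along the BM (mm)."""
    if not F_APEX_HZ <= frequency_hz <= F_BASE_HZ:
        raise ValueError("frequency must lie between 50 Hz and 16 kHz")
    return BM_LENGTH_MM * math.log(frequency_hz / F_BASE_HZ) / math.log(F_APEX_HZ / F_BASE_HZ)


if __name__ == "__main__":
    for pos in (0.0, 10.0, 20.0, 35.0):
        print(f"{pos:5.1f} mm -> {resonant_frequency_hz(pos):8.1f} Hz")
```

Under this assumption equal distances along the membrane correspond to equal frequency ratios, which is one way to read the later remark that the auditory nerve response is "not unlike a (constant-Q) spectrogram".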

Inner Hair Cells
- IHCs convert BM vibration into nerve firings
- Human ear has ~3500 IHCs
  - each IHC has ~7 connections to Auditory Nerve
- Each nerve fires (sometimes) near peak displacement
[Figure: local BM displacement and typical nerve signal (mV) vs. time / ms; histogram of firing count vs. cycle angle to get firing probability]

Auditory nerve (AN) signals
Single nerve measurements
[Figure: tone burst histogram; spike count vs. time for a 100 ms tone burst]
[Figure: frequency threshold; dB SPL vs. (log) frequency, 100 Hz to 10 kHz]
[Figure: rate vs intensity; spikes/sec vs. intensity / dB SPL]
- One fiber: ~25 dB dynamic range
- Hearing dynamic range > 100 dB
- Hard to measure: probe living ANs?

AN population response
- All the information the brain has about sound
  - average rate & spike timings on 30,000 fibers
- Not unlike a (constant-Q) spectrogram
[Figure: PatSla rectsmoo on bbctmp2 (2001-02-18); freq / 8ve re 100 Hz vs. time / ms]

Beyond the auditory nerve
- Ascending and descending
- Tonotopic × ?
  - modulation, position, source??

Periphery models
[Figure: block diagram; Sound → Outer/middle ear filtering → Cochlea filterbank → IHC (one per channel). Example output: SlaneyPatterson 12 chans/oct from 180 Hz, BBC1tmp (20010218)]
- Modeled aspects
  - outer / middle ear
  - hair cell transduction
  - cochlea filtering
  - efferent feedback?
[Figure axes: channel vs. time / s]
- Results: ‘neurogram’ / ‘cochleagram’

Section 3: Psychophysics: Detection & discrimination

Psychophysics
- Physiology looks at the implementation
- Psychology looks at the function/behavior
- Analyze audition as signal detection: p(θ | x)
  - psychological tests reflect internal decisions
  - assume optimal decision process
  - infer nature of internal representations, noise, ... → lower bounds on more complex functions
- Different aspects to measure
  - time, frequency, intensity
  - tones, complexes, noise
  - binaural
  - pitch, detuning

Basic psychophysics
- Relate physical and perceptual variables, e.g.
  - intensity → loudness
  - frequency → pitch
- Methodology: subject tests
  - just noticeable difference (JND)
  - magnitude scaling, e.g.
    “adjust to twice as loud”
- Results for Intensity vs Loudness:
  Weber’s law: $\Delta I \propto I \Rightarrow \log(L) = k \log(I)$
[Figure: Hartmann (1993) classroom loudness scaling data; log(loudness rating) vs. sound level / dB. Textbook figure: $L \propto I^{0.3}$; power law fit: $L \propto I^{0.22}$]
  $\log_2 L = 0.3 \log_2 I = 0.3\,\frac{\log_{10} I}{\log_{10} 2} = \frac{0.3}{\log_{10} 2}\cdot\frac{\mathrm{dB}}{10} \approx \frac{\mathrm{dB}}{10}$
  (with dB = $10 \log_{10} I$, so loudness roughly doubles for every 10 dB)

Loudness as a function of frequency
[Figure: Fletcher-Munson equal-loudness curves; intensity / dB SPL vs. freq / Hz, 100 to 10,000]
[Figure: equivalent loudness @ 1 kHz vs. intensity / dB, one panel for 100 Hz (rapid loudness growth) and one for 1 kHz]

Loudness as a function of bandwidth
- Same total energy, different distribution
  - e.g. 2 channels at −6 dB (not −10 dB)
[Figure: two spectra (mag vs. freq) with intensities I0, I1 and bandwidths B0, B1; same total energy I·B ... but wider perceived as louder]
[Figure: loudness vs. bandwidth B, with the ‘critical’ bandwidth marked]
- Critical bands: independent frequency channels
  - ~25 total (4-6 / octave)

Simultaneous masking
- A louder tone can ‘mask’ the perception of a second tone nearby in frequency:
[Figure: intensity / dB vs. log freq, showing absolute threshold, masking tone, masked threshold]
- Suggests an ‘internal noise’ model:
[Figure: distributions p(x | I) and p(x | I+∆I) over the decision variable x, spread by internal noise σn]

Sequential masking
- Backward/forward in time:
[Figure: intensity / dB vs. time, showing masker envelope and masked threshold; simultaneous masking ~10 dB, backward masking ~5 ms, forward masking ~100 ms]
- → Time-frequency masking ‘skirt’:
[Figure: intensity over freq and time, showing masking tone and masked threshold]

What we do and don’t hear
- A “two-interval forced-choice”: X = A or B?
[Figure: the test intervals laid out along a time axis]
- Timing: 2 ms attack resolution, 20 ms discrimination
  - but: spectral splatter
- Tuning: ~1% discrimination
  - but: beats
- Spectrum: profile changes, formants
  - variable time-frequency resolution
- Harmonic phase?
- Noisy signals & texture
- (Trace vs categorical memory)

Section 4: Pitch perception

Pitch perception: a classic argument in psychophysics
- Harmonic complexes are a pattern on AN
[Figure: AN response; freq. chan. vs. time/s]
  - but give a fused percept (ecological)
- What determines the pitch percept?
  - not the fundamental
- How is it computed?
Two competing models: place and time

Place model of pitch
- AN excitation pattern shows individual peaks
- ‘Pattern matching’ method to find pitch
[Figure: AN excitation vs. frequency channel; resolved harmonics at low frequencies, broader HF channels cannot resolve harmonics]
- Correlate with harmonic ‘sieve’:
[Figure: pitch strength vs. frequency channel]
- Support: Low harmonics are very important
- But: Flat-spectrum noise can carry pitch

Time model of pitch
- Timing information is preserved in AN down to ~1 ms scale
- Extract periodicity by e.g. autocorrelation and combine across frequency channels
[Figure: per-channel autocorrelation (freq vs. time) and summary autocorrelation vs. lag / ms (0 to 30), with a peak at the common period (pitch)]
- But: HF gives weak pitch (in practice)

Alternate & competing cues
- Pitch perception could rely on various cues
  - average excitation pattern
  - summary autocorrelation
  - more complex pattern matching
- Relying on just one cue is brittle
  - e.g.
    missing fundamental
- → Perceptual system appears to use a flexible, opportunistic combination
- Optimal detector justification?
  $\arg\max_\theta p(\theta \mid x) = \arg\max_\theta p(x \mid \theta)\,p(\theta) = \arg\max_\theta p(x_1 \mid \theta)\,p(x_2 \mid \theta)\,p(\theta)$
  - if $x_1$ and $x_2$ are conditionally independent

Section 5: Speech perception

Speech perception
- Highly specialized function
  - subsequent to source organization? ... but also can interact
- Kinds of speech sounds
[Figure: spectrogram (freq / Hz vs. time/s, level/dB) of “has a watch thin as a dime”, annotated with glide, vowel, nasal, fricative, stop burst]

Cues to phoneme perception
- Linguists describe speech with phonemes
[Figure: phoneme transcription aligned with the words “has a watch thin as a dime”]
- Acoustic-phoneticians describe phonemes by
  - formants & transitions
  - bursts & onset times
[Figure: schematic (freq vs. time) showing stop burst, voicing onset time, transition, vowel formants]

Categorical perception
- (Some) speech sounds perceived categorically rather than analogically
  - e.g. stop-burst and timing:
[Figure: schematic (freq vs. time) of a stop burst at frequency fb followed by vowel formants; regions perceived as P, T, K plotted over burst freq fb / Hz and following vowel (i, e, ε, a, ɔ, o, u)]
  - tokens within category are hard to distinguish
  - category boundaries are very sharp
- Categories are learned for native tongue
  - “merry” / “Mary” / “marry”

Where is the information in speech?
- ‘Articulation’ of high/low-pass filtered speech:
[Figure: articulation / % vs. freq / Hz, one curve for high-pass and one for low-pass]
  - sums to more than 1...
- Speech message is highly redundant
  - e.g.
    constraints of language, context
- → listeners can understand with very few cues

Top-down influences: Phonemic restoration (Warren, 1970)
- What if a noise burst obscures speech?
[Figure: spectrogram, freq / Hz vs. time / s]
- auditory system ‘restores’ the missing phoneme
  - ... based on semantic content
  - ... even in retrospect
- Subjects are typically unaware of which sounds are restored

A predisposition for speech: Sinewave replicas
- Replace each formant with a single sinusoid (Remez et al., 1981)
[Figure: two spectrograms (freq / Hz vs. time / s): Speech and Sines]
- speech is (somewhat) intelligible
- people hear both whistles and speech (“duplex”)
- processed as speech despite un-speech-like
- What does it take to be speech?

Simultaneous vowels
- Mix synthetic vowels with different f0s
[Figure: spectra (dB vs. freq); /iy/ @ 100 Hz + /ah/ @ 125 Hz = mixture]
- Pitch difference helps (though not necessarily)
[Figure: DV identification vs. ∆f0 (200 ms) (Culling & Darwin 1993); % both vowels correct vs. ∆f0 (semitones), 1/4 to 4]

Computational models of speech perception
- Various theoretical-practical models of speech comprehension, e.g.
[Figure: block diagram; Speech → Phoneme recognition → Lexical access → Words, alongside Grammar constraints]
- Open questions:
  - mechanism of phoneme classification
  - mechanism of lexical recall
  - mechanism of grammar constraints
- ASR is a practical implementation (?)

Section 6: Auditory organization & Scene analysis

Auditory organization
- Detection model is huge simplification
- The real role of hearing is much more general: Recover useful information from the outside world
  - → Sound organization into events and sources
[Figure: spectrogram (frq/Hz vs. time/s) with labeled events: Voice, Stab, Rumble]
- Research questions:
  - what determines perception of sources?
  - how do humans separate mixtures?
  - how much can we tell about a source?

Auditory scene analysis: simultaneous fusion
- Harmonics are distinct on AN, but perceived as one sound (“fused”)
[Figure: harmonics, freq vs. time]
  - depends on common onset
  - depends on harmonicity (common period)
- Methodologies:
  - ask subject how many ‘objects’
  - match attributes e.g. object pitch
  - manipulate high level e.g. vowel identity

Sequential grouping: streaming
- Pattern / rhythm: property of a set of objects
  - subsequent to fusion ∵ employs fused events?
[Figure: alternating tones, frequency vs. time; TRT: 60-150 ms, 1 kHz, ∆f: –2 octaves]
- Measure by relative timing judgments
  - cannot compare between streams
- Separate ‘coherence’ and ‘fusion’ boundaries
- Can interact and compete with fusion

Continuity and restoration
- Tone is interrupted by noise burst: what happened?
[Figure: freq vs. time schematic; tone + noise burst + tone, with the tone during the burst marked “?”]
  - masking makes tone undetectable during noise
- Need to infer most probable real-world events
  - observation equally likely for either explanation
  - prior on continuous tone much higher ⇒ choose
- Top-down influence on perceived events...
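
The inference on the continuity slide can be sketched as a maximum a posteriori choice between two explanations. The probabilities below are hypothetical values chosen only to mirror the slide's argument (equal likelihoods, a much higher prior for the continuous tone), and `map_explanation` is an illustrative helper, not a model from the lecture.

```python
from typing import Dict, Tuple


def map_explanation(
    likelihoods: Dict[str, float],
    priors: Dict[str, float],
) -> Tuple[str, Dict[str, float]]:
    """Return the explanation with the highest posterior, plus all posteriors."""
    # Unnormalized posterior: p(observation | explanation) * p(explanation)
    unnormalized = {h: likelihoods[h] * priors[h] for h in likelihoods}
    total = sum(unnormalized.values())
    posterior = {h: value / total for h, value in unnormalized.items()}
    best = max(posterior, key=posterior.get)
    return best, posterior


if __name__ == "__main__":
    # Masking makes both explanations fit the observation equally well
    # (hypothetical numbers).
    likelihoods = {"continuous tone": 0.5, "tone stops and restarts": 0.5}
    # The prior strongly favors a tone that simply continues (hypothetical numbers).
    priors = {"continuous tone": 0.9, "tone stops and restarts": 0.1}
    best, posterior = map_explanation(likelihoods, priors)
    print(best, posterior)
```

With equal likelihoods the decision is driven entirely by the prior, which is the sense in which the percept is a top-down influence.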

Models of auditory organization
- Psychological accounts suggest bottom-up
[Figure: Brown and Cooke (1994) block diagram; input mixture → Front end → signal features (maps labeled freq, onset, time, period, frq.mod) → Object formation → discrete objects → Grouping rules → Source groups]
- Complicated in practice
  - formation of separate elements
  - contradictory cues
  - influence of top-down constraints (context, expectations, ...)

Summary
- Auditory perception provides the ‘ground truth’ underlying audio processing
- Physiology specifies information available
- Psychophysics measures basic sensitivities
- Sound sources require further organization
- Strong contextual effects in speech perception
[Figure: Sound → Transduce → Multiple represent'ns → Scene analysis → High-level recognition]
Parting thought: Is pitch central to communication? Why?

References
- Richard M. Warren. Perceptual restoration of missing speech sounds. Science, 167(3917):392–393, January 1970.
- R. E. Remez, P. E. Rubin, D. B. Pisoni, and T. D. Carrell. Speech perception without traditional speech cues. Science, 212(4497):947–949, May 1981.
- G. J. Brown and M. Cooke. Computational auditory scene analysis. Computer Speech & Language, 8(4):297–336, 1994.
- Brian C. J. Moore. An Introduction to the Psychology of Hearing. Academic Press, fifth edition, April 2003. ISBN 0125056281.
- James O. Pickles. An Introduction to the Physiology of Hearing. Academic Press, second edition, January 1988. ISBN 0125547544.