Software Reliability as a Function of User Execution Patterns

advertisement

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

Software Reliability as a Function

of User Execution Patterns

John C. Munson, Sebastian Elbaum

Computer Science Department

University of Idaho

Moscow, Idaho 83844-1010

{jmunson,elbaum}@cs.uidaho.edu

Abstract

Assessing the reliability of a software system

has always been an elusive target. A program may

work very well for a number of years and this same

program may suddenly become quite unreliable if

its mission is changed by the user. This has led to

the conclusion that the failure of a software system

is dependent only on what the software is currently

doing. If a program is always executing a set of

fault free modules, it will certainly execute

indefinitely without any likelihood of failure. A

program may execute a sequence of fault prone

modules and still not fail. In this particular case,

the faults may lie in a region of the code that is not

likely to be expressed during the execution of that

module. A failure event can only occur when the

software system executes a module that contains

faults. If an execution pattern that drives the

program into a module that contains faults is

never selected, then the program will never fail.

Alternatively, a program may execute successfully

a module that contains faults just as long as the

faults are in code subsets that are not executed.

The reliability of the system then, can only be

determined with respect to what the software is

currently doing. Future reliability predictions will

be bound in their precision by the degree of

understanding of future execution patterns. In this

paper we investigate a model that represents the

program sequential execution of modules as a

stochastic process. By analyzing the transitions

between modules and their failure counts, we may

learn exactly where the system is fragile and under

which execution patterns a certain level of

reliability can be guaranteed.

1. Introduction

The subject of this paper is measurement,

specifically, the measurement of those software

attributes that are associated with software reliability.

Existing approaches to the understanding of software

reliability patently assume that software failure events are

observable. The truth is that the overwhelming majority

of software failures go unnoticed when they occur. Only

when these unobserved failures disrupt the system by

second, third or fourth order effects do they provide

enough disruption for outside observation. Consequently,

only those dramatic events that lead to the immediate

collapse of a system can been seen directly by an

observer when they occur. The more insidious failures

will lurk in the code for a long interval before their

effects are observed. Failure events go unnoticed and

undetected because the software has not been

instrumented so that these failure events may be detected.

In that software failures go largely unnoticed, it is

presumptuous to attempt to model the reliability of

software based on observed failure events. If we cannot

observe failure events directly, then we must seek another

metaphor that will permit us to model and understand

reliability in a context that we can measure.

Computer programs do not break. They do not fail

monolithically. Programs are designed to perform a set

of mutually exclusive tasks or functions. Some of these

functions work quite well while others may not work well

at all. When a program is executing a particular function,

it executes a well defined subset of its code. Some of

these subsets are flawed and some are not. Users tend to

execute subsets of the total program functionality. Two

users of the same software may have totally different

perceptions as to the reliability of the same system. One

user may use the system on a daily basis and never

experience a problem. Another user may have continual

problems in trying to execute exactly the same program.

A new metaphor for software systems would focus on

the functionality that the code is executing and not the

software as a monolithic system. In computer software

systems, it is the functionality that fails. Some functions

may be virtually failure free while other functions will

collapse with certainty whenever they are executed. The

focus of this paper is on the notion that it is possible to

measure the activities of a system as it executes its

0-7695-0001-3/99 $10.00 (c) 1999 IEEE

1

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

various functions and characterize the reliability of

the system in terms of these functionalities.

Each program function may be thought of as

having an associated reliability estimate. We may

chose to think of the reliability of a system in these

functional terms. Users of the software system,

however, have a very different view of the system.

What is important to the user is not that a particular

function is fragile or reliable, but rather whether

the system will operate to perform those actions

that the user will want the system to perform

correctly. From a user’s perspective, it matters not,

then, that certain functions are very unreliable. It

only matters that the functions associated with the

user’s actions or operations are reliable. The

classical example of this idea was expressed by the

authors of the early UNIX utility programs. In the

last paragraph of the documentation for each of

these utilities was a list of known bugs for that

program. In general, these bugs were not a

problem. Most involved aspects of functionality

that the typical user would never exploit.

As a program is exercising any one of its many

functionalities in the normal course of operation of

the program, it will apportion its time across this

set of functionalities [8]. The proportion of time

that a program spends in each of its functionalities

is the functional profile of the program. Further,

within the functionality, it will apportion its

activities across one to many program modules.

This distribution of processing activity is

represented by the concept of the execution profile.

In other words, if we have a program structured

into n distinct modules, the execution profile for a

given functionality will be the proportion of

program activity for each program module while

the function was being expressed.

As the discussion herein unfolds, we will see

that the key to understanding program failure

events is the direct association of these failures to

execution events with a given functionality. A

stochastic process will be used to describe the

transition of program modules from one to another

as a program expresses a functionality. From these

observations, it will become fairly obvious just

what data will be needed to describe accurately the

reliability of the system. In essence, the system

will be able to appraise us of its own health. The

reliability modeling process is no longer something

that will be performed ex post facto. It may be

accomplished dynamically while the program is

executing. It is the goal of this paper to develop a

methodology that will permit the modeling of the

reliability of program functionality.

This

methodology will then be used to develop notions

of design robustness in the face of departures from design

functional profiles.

2. A Formal Description Of Program

Operation

To assist in the subsequent discussion of program

functionality, it will be useful to make this description

somewhat more precise by introducing some notation

conveniences. Assume that a software system S was

designed to implement a specific set of mutually

exclusive functionalities F. Thus, if the system is

executing a function f ∈ F then it cannot be expressing

elements of any other functionality in F. Each of these

functions in F was designed to implement a set of

software specifications based on a user’s requirements.

From a user’s perspective, this software system will

implement a specific set of operations, O. This mapping

from the set of user perceived operations, O, to a set of

specific program functionalities, F, is one of the major

tasks in the software specification process.

To facilitate this discussion, it would be appropriate to

describe the operations and the functionality of the

Simple Database Management System (SDMS) that we

have instrumented for test purposes. Though the majority

of the systems that we have investigated and

instrumented are very large (>500 KLOC) we would like

demonstrate the use of this model in the context of a

relatively small database application. The target

application has 20 modules and approximately 1600

LOC. The methodology scales down quite well.

In order to implement the model, the first step was to

re-discover the set A of user operations through the

analysis of the program documentation.

These

operations, again specified by a set of functional

requirements, will be mapped into a set, B, of elementary

program functionalities. Then, the second step was the

extraction of the system functionalities. The

functionalities were extracted with a tool that analyzes

the transition patterns as the program executes and

generates a list of functionalities and the mapping of

these functionalities to modules [3]. The tool was used

because the requirements and design documentation

wasn’t complete enough to specify the operations,

functionalities, modules and their mapping. The lack of

complete and unambiguous development information is a

common situation in the software industry which forces

this type of re-engineering approaches.

Each operation that a system may perform for a user

may be thought of as having been implemented in a set of

functional specifications. There may be a one-to-one

mapping between the user’s notion of an operation and a

program function. In most cases, however, there may be

several discrete functions that must be executed to

express the user’s concept of an operation. For each

0-7695-0001-3/99 $10.00 (c) 1999 IEEE

2

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

operation, o, that the system may perform, the

range of functionalities, f, must be well known.

Within each operation one or more of the system’s

functionalities will be expressed. For a given

operation, o, these expressed functionalities are

those with the property

F (o ) = { f : F | ∀ IMPLEMENTS(o, f )}

It is possible, then, to define a relation

O×F

such

that

IMPLEMENTS

over

IMPLEMENTS(o, f) is true if functionality f is

used in the specification of an operation, o.

As

an example of the IMPLEMENTS relation for a

user operation within the framework of our

demonstration example of a database application

let us consider a typical user operation, the

retrieval of a specific record.

In order to

implement this operation ( oi ) the system will

present the menu system to the user ( f1 ), open the

selected database ( f 2 ), perform the necessary

query ( f 3 ) and then display the desired record

( f 4 ).

The software design process is basically a

matter of assigning functionalities in F to specific

program modules m ∈ M , the set of program

modules. The design process may be thought of as

the process of defining a set of relations, ASSIGNS

over F × M such that ASSIGNS(f, m) is true if

functionality f is expressed in module m. Table 1

shows an example of the ASSIGNS relation for

four of the functions that IMPLEMENT the

retrieval operation. In this example we can see the

function f 4 has been implemented in the program

modules {m1 , m3 , m15 , m20 }. One of these modules,

m1 , will be invoked regardless of the functionality.

It is the main program module and thus is common

to all functions. Other program modules, such as

m7 , are distinctly associated with a single function.

F×M

f1

f2

f3

f4

m1

m3

T

T

T

m7

m15 m16 m20 m17

T

T

T

T

T

T

T

T

T

T

T

T

Table 1. Example of the ASSIGNS relation

There is a relationship between program

functionalities and the software modules that they will

cause to be executed. For the SDMS system, the set

M = {m1 , m2 , m3 ,K, m20 } denotes the set of all program

modules that constitute the whole system. For each

function f ∈ F , there is a relation p over F × M such

that p( f , m) is the proportion of execution events of

module m when the system is executing functionality f .

If p( f , m) < 1 this means that a module m may for may

not execute when functionality f is expressed. Thus,

program modules may be assigned to one of three distinct

sets of modules that, in turn, are subsets of M. Some

modules may execute under all of the functionalities of

SDMS. This will be the set of common modules. The

main program is an example of such a module that is

common to all operations of the software system.

Essentially, program modules will be members of one of

two mutually exclusive sets. There is the set of program

modules M c of common modules and the set of modules

M F that are invoked only in response to the execution of

a particular function. The set of common modules,

M c ⊂ M is defined as those modules that have the

property.

M c = {m : M | ∀f ∈ F • ASSIGNS(f, m)} .

All of these modules will execute regardless of the

specific functionality being executed by the software

system.

Yet another set of software modules may or may not

execute when the system is running a particular function.

These modules are said to be potentially involved

modules. The set of potentially involved modules is.

M (p f ) = {

m : M F | ∃ f ∈ F • ASSIGNS( f , m) ∧

0 < p( f , m) < 1}

In other program modules, there is extremely tight

binding between a particular functionality and a set of

program modules. That is, every time a particular

function, f, is executed, a distinct set of software modules

will always be invoked. These modules are said to be

indispensably involved with the functionality f. This set

of indispensably involved modules for a particular

functionality, f, is the set of those modules that have the

property that

M i( f ) = {m : M F | ∀f ∈ F • ASSIGNS( f , m) ⇒

.

p( f , m) = 1}

As a direct result of the design of the program, there

will be a well defined set of program modules , M f , that

might be used to express all aspects of a given

functionality, f. These are the modules that have the

(f)

(f)

property that m ∈ M f = M p ∪ M i .

From the standpoint of software design, the real

problems in understanding the dynamic behavior of a

0-7695-0001-3/99 $10.00 (c) 1999 IEEE

3

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

system are not necessarily attributable to the set of

modules, M i , that are tightly bound to a

functionality or to the set of common modules,

M c , that will be invoked for all executing

processes.

The real problem is the set of

potentially invoked modules, M p . The greater the

cardinality of this set of modules, the less certain

we may be about the behavior of a system

performing that function. For any one instance of

execution of this functionality, a varying number of

the modules in M p may execute.

3. The Profiles of Software Dynamics

When a program is executing a functionality it

will apportion its activities among a set of

modules. As such it will transition from one

module to the next on a call (or return) sequence.

Each module called in this call sequence will have

an associated call frequency. When the software is

subjected to a series of unique and distinct

functional expressions, there will be a different

behavior for each of the user’s operations in that

each will implement a different set of functions

that will, in turn, invoke possibly different sets of

program modules.

When the process of software requirements

specification is complete we will have specified a

system consisting of a set of a mutually exclusive

operations. It is a characteristic of each user of the

new system that this user will cause each operation

to be performed at a potentially different rate than

another user. Each user, then, will induce a

probability distribution on the set O of mutually

exclusive operations. This probability function is a

multinomial distribution.

It constitutes the

operational profile for that user.

The operation profile of the software system is

the set of unconditional probabilities of each of the

operations in O being executed by the user. Then,

Pr

[X = k], k =1,2,K, O is the probability that

the user is executing program operation k as

specified in the functional requirements of the

program and O is the cardinality of the set of

functions [12]. A program executing on a serial

machine can only be executing one operation at a

time. The distribution of the operational profile,

then, is multinomial for programs designed to

fulfill more than two distinct operations. The prior

knowledge of this distribution of functions should

guide the software design process [7].

As a user performs the various operations on a

system, he/she, will cause each operation to occur

in a series of steps or transitions. The transition from one

operation to another may be described as a stochastic

process. In which case we may define an indexed

collection of random variables { X t } , where the index t

runs through a set of non-negative integers, t = 0,1,2,K

representing the individual transitions or intervals of the

process. At any particular interval the user is found to be

expressing exactly one of the system’s a operations. The

fact of the execution occurring in a particular operation is

a state of the user. During any interval the user is found

performing exactly one of a finite number of mutually

exclusive and exhaustive states that may be

labeled 0,1,2,K, a . In this representation of the system,

there is a stochastic process { X t } , where the random

variables are observed at intervals t = 0,1,2,K and where

each random variable may take on any one of the (a + 1)

integers, from the state space O = {0,1,2,K, a} .

Each user may potentially bring his/her own distinct

behavior to the system. Thus, each user will have his/her

own characteristic operational profile.

It is a

characteristic, then, of each user to induce a probability

function pi = Pr[ X = i ] on the set of operations, O. In

that these operations are mutually exclusive, the induced

probability function is a multinomial distribution.

As the system progresses through the steps in the

software lifecycle, the user requirements specifications,

the set O, must be mapped on a specific set of

functionalities, F, by systems designers. This set F is in

fact the design specifications for the system. As per our

earlier discussion, each operation is implemented by one

for more functionality.

Now let us examine the behavior of the system within

each operation. Each operation is implemented by one

or more functionalities.

The transition from one

functionality to another may be also be described as a

stochastic process. In which case we may define a new

indexed collection of random variables {Yt } , as before

representing the individual transitions events among

particular functionalities. At any particular interval a

given operation is found to be expressing exactly one of

the system’s b functionalities. During any interval the

user is found performing exactly one of a finite number

of mutually exclusive and exhaustive states that may be

labeled 0,1,2,K, b . In this representation of the system,

there is a stochastic process {Yt } , where the random

variables are observed at intervals t = 0,1,2,K and where

each random variable may take on any one of the (b + 1)

integers, from the state space F = {0,1,2,K, b} , where b

represents the cardinality of F , the set of functionalities.

When a program is executing a given operation, say

ok , it will distribute its activity across the set of

0-7695-0001-3/99 $10.00 (c) 1999 IEEE

4

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

functionalities, F ( o ) . At any arbitrary interval, n,

during the expression of ok the program will be

k

executing a functionality

fi ∈ F (ok )

and the way that the activity of the system will be

distributed across the modules in the software will

depend on the user.

with a

probability, Pr[Yn = i | X = k ] .

From this

condition probability distribution for all operations

we may derive the functional profile for the design

specifications as a function of a user operational

profile to wit:

Pr[Y = i ] =

Pr[ X = j ] Pr[Y = i | X = j ] .

j

∑

Alternatively,

wi =

∑

j

p j Pr[Y = i | X = j ]

The next logical step is to study the behavior of

a software system at the module level. Each of the

functionalities is implemented in one or more

program modules. The transition from one module

to another may be also be described as a stochastic

process. In which case we may define a third

indexed collection of random variables {Z t } , as

before representing the individual transitions

events among the set of program modules. At any

particular interval a given functionality is found to

be executing exactly one of the system’s c

modules. The fact of the execution occurring in a

particular module is a state of the system. During

any interval the system is found executing exactly

one of a finite number of mutually exclusive and

exhaustive states (program modules)that may be

labeled 0,1,2,K, c . In this representation of the

system, there is a stochastic process {Z t } , where

the random variables are observed at epochs

t = 0,1,2,K and where each random variable may

take on any one of the (c + 1) integers, from the

state space M = {0,1,2,K, c} , where c represents

the cardinality of M , the set of modules.

Each functionality j has a distinct set of modules

M f j that it may cause to execute. At any arbitrary

interval, n, during the expression of

f j the

program will be executing a module mi ∈ M f j

with a probability, Pr[ Z n = i | Y = j ] . From this

condition probability distribution for all

functionalities we may derive the module profile

for the system as a function of a the system

functional profile as follows:

Pr[ Z = i ] =

Pr[Y = j ] Pr[ Z = i | Y = j ] .

j

∑

Again,

ri =

∑

j

w j Pr[ Z = i | Y = j ] .

The module profile ultimately depends on the

operational profile. Each user’s view of the system

F

Figure 1. Program Call Graph

Interestingly enough, for all software systems there is

a distinguished module, the main program module that

will always receive execution control from the operating

system. If we denote this main program as module 0

then, Pr[ Z 0 = 0] = 1 and Pr[ Z 0 = i ] = 0 for i = 1,2,K , c .

Further, for epoch 1, Pr[ Z1 = 0] = 0 , in that control will

have been transferred from the main program module to

another function module.

An interesting variation on this stochastic process is

one that has an absorbing state. In which case, pii( 0) = 1

for at least one i = 1,2,K, c . An example of such a

system is a call graph that has a module that always exits

to the operating system. Once this state has been entered,

no other state is reachable from this absorbing state. We

will use this notion of an absorbing state to model the

failure of a system. In this case, we will consider the

failure of a program module to be the transition from that

module to the absorbing failure state. This modeling

approach was initially explored by Littlewood [4].

When a program module fails, we can imagine that the

module has made a transition to a distinguished program

module, a failure state. Thus, every program may be

thought to have a virtual module representing the failed

state of that program. This virtual program module is

shown pictorially in program call graph of Figure 1.

When the virtual module receives control, it will not

relinquish it, there are no returns from it. The transition

matrix for this new model is augmented by an additional

row and a new column. For a program with c modules,

0-7695-0001-3/99 $10.00 (c) 1999 IEEE

5

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

let the error state be represented by a new state,

e = c + 1 . For this new state,

= 0 for all j = 1,2,K, c n = 0,1,2,L

,

.

pej( n )

= 1 for j = e

This represents the augmented row of the new

transition matrix. Each row in the transition matrix

will be augmented by a new column entry pie(n ) for

i = 1,2,K, c , where pie(n ) represents the probability

th

th

of the failure of the i module in the n epoch.

When a program dies, it is the result of a fault in

one or more of its modules. Not all modules are

equally likely to lead to the failure event. The fault

proneness of the module is distinctly related to

measurable software attributes [6]. When program

modules are executed that are fault prone, they are

much more likely to fail than those that are not

fault prone. We seek a forecasting or prediction

mechanism that will capitalize on this

understanding.

The granularity of the term epoch is now of

interest. An epoch begins with the onset of

execution in a particular module and ends when

control is passed to another module.

The

measurable event for modeling purposes is this

transition among the program modules. We will

count the number of calls from a module and the

number of returns to that module. Each of these

transitions to a different program module from the

one currently executing will represent an

incremental change in the epoch number.

Computer programs executing in their normal

mode will make state transitions between program

modules rather rapidly. In terms of real clock

time, many epochs may elapse in a relatively short

period.

In reality, few if any systems are understood at

the functional or operation level.

We are

continually confronted with systems whose

functionality is not completely understood. While

we have developed methodologies to recapture the

essential functionalities [cf. 3], the majority of the

time we will not know the precise behavior of the

system that we are working with. To this end we

will develop a more relaxed form of profile called

the execution profile of a system.

When a user is exercising a system, the software

will be driven through a sequence of functionalities

S = {f a , f b , f c ,K}. Depending on the particular

sequence of functionalities that are executed

different sets of modules may or may not execute.

From an empirical perspective, it makes a great

deal of sense to model the behavior of a system in

terms of an execution profile. This execution

profile will be the conditional probability of

execution a particular program module based on a

sequence, S as follows: pi = Pr[ Z = i | S ] . It is clear that

lim Pr[ Z = i | S ] = ri .

S →∞

That is, the execution profile

tends to the module profile for large execution sequences.

Necessarily, if the program is maintaining the

transition matrix, it will not be able to update the vector

for the virtual module that represents the failure state of

the program. When the program transitions to this

hypothetical state, it will be dead and unable to keep track

of its own failures. In addition, in order to validate the

model, consistent failure data was needed but not

available for the application. To solve those problems,

the application process was encapsulated to allow the

trapping and simulation of failures. The capsule was

designed to receive different types of process signals

from the operating system, one of the signals provided a

means to simulate a failure at any module at any time.

When the process receives a failure signal from the

operating system, it updates the vector for the virtual

module representing the failure state simulating a failure.

Other signals allow to observe the transition matrix at any

time, to modify its contents, to estimate the current

reliability of the system, etc. The purpose of this simple

simulation scheme is to provide an inexpensive procedure

to demonstrate the conceptual framework. The simulated

failure assumes perfect fault coverage and a modular failstop behavior.

4. Estimates for Transition Probabilities and

Profiles

The focus will now shift to the problem of

understanding the nature of the distribution of the

probabilities for various profiles. We have so far come to

recognize these profiles in terms of their multinomial

nature. The multinomial distribution is useful for

representing the outcome of an experiment involving a

set of mutually exclusive events. Let Z =

c

UZ

i

where

i =1

Z i is one of c mutually exclusive sets of events. Each of

these events would correspond to a program executing a

particular module in the total set of program modules.

From the definition of module profile Pr( Z i ) = ri and

re = 1 − (r1 + r2 + L + rc ) , under the condition that

e = c + 1 , as defined earlier. In which case ri is the

probability that the outcome of a random experiment is an

element of the set Z i (the program is executing module

i). If this experiment is conducted over a period of n

trials then the random variable X i will represent the

frequency of Z i outcomes. In this case, the value, n,

represents the number of transitions from one program

0-7695-0001-3/99 $10.00 (c) 1999 IEEE

6

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

module

to

the

next.

Note

that

X e = n − X 1 − X 2 −L − X e .

This particular distribution will be useful in the

modeling of a program with a set of c modules.

During a set of n epochs, each of the modules may

be executed. These, of course, are mutually

exclusive events. If module i is executing then

module j cannot be executing. This principle of

mutual exclusion is a requirement for the

multinomial distribution.

The multinomial

distribution function with parameters n

and

r = (r1 , r2 ,K, rc ) is given by

n! x1 x 2

xc

c −1 r1 r2 L rc , ( x1 , x2 ,L, xc ) ∈ X

f (x | n, r ) =

xi !

i =1

0 elsewhere

∏

where xi represents the frequency of execution of

th

the i program module. The expected values for

the xi are given by E ( xi ) = xi = nri , for

i = 1,2,K, c .

We would like to come to understand, for

example, the multinomial distribution of a

program’s execution profile while it is executing a

particular functionality. The problem, here, is that

every time a program is run we will observe that

there is some variation in the profile from one

execution sample to the next. It will be difficult to

estimate the parameters r = (r1 , r2 ,K, re ) for the

multinomial distribution of the execution profile.

Rather than estimating these parameters statically,

it would be far more useful to us to get estimates of

these parameters dynamically as the program is

actually in operation, hence the utility of the

Bayesian approach [c.f. 5].

To aid in the process of characterizing the

nature of the true underlying multinomial

distribution, let us observe that the family of

Dirichlet distributions is a conjugate family for

observations that have a multinomial distribution

[14]. The p.d.f. for a Dirichlet distribution,

D ( ,α e ) ,

with

a

parametric

vector

= (α1 ,α 2 ,K,α c ) where (α i > 0; i = 1,2,K, c) is

f (r | ) =

Γ(α1 + α 2 + L + α c )

c

∏ Γ(α )

r1α1 −1r2α 2 −1 L rcα c −1

i

i =1

where (ri > 0; i = 1,2,K, c) and

c

∑r =1 .

i

i =1

expected values of the

wi are given by

The

E (ri ) = µi =

where α 0 =

∑

e

αi

α0

α i . In this context, α 0 represents the

i =1

total epochs.

Within the set of expected values µi ,i = 1,2,K, e , not

all of the values are of equal interest. We are interested,

in particular, in the value of µ e . This will represent the

probability of a transition to the terminal failure state

from a particular program module. So that we might use

this value for our succeeding reliability prediction

activities, it will be useful to know how good this

estimate is. To this end, we would like to set 100(1-α)%

confidence limits on the estimate. For the Dirichlet

distribution, this is not clean. To simplify the process of

setting these confidence limits, let us observe that if

r = (r1 , r2 ,K, rc ) is a random vector having the c-variate

Dirichlet distribution, D ( ,α e ) , then the sum

z = r1 + L + r has the beta distribution,

f β ( z | γ ,α e ) =

Γ(γ + α e ) γ

z (1 − z )α

Γ(γ )Γ(α e )

e

or alternately

Γ(γ + α e )

(1 − re )γ (re )α ,

Γ(γ )Γ(α e )

where γ = α1 + α 2 + L + α c .

Thus, we may obtain 100(1-α)% confidence limits for

µe − a ≤ µe ≤ µ e + b

from

µ −a

α

Fβ ( µ e − a | γ ,α e ) =

f β (re | γ ,α e )dr =

0

2

and

µ +b

α

Fβ ( µ e + b | γ ,α e ) =

f β (re | γ ,α e )dr = 1 − . (1)

0

2

The value of the use of the Dirichlet conjugate family

for modeling purposes is twofold. First, it permits us to

estimate the probabilities of the module transitions

directly from the observed transitions. Secondly, we are

able to obtain revised estimates for these probabilities as

the observation process progresses. Let us now suppose

that we wish to model the behavior of a software system

whose execution profile has a multinomial distribution

with parameters n and R = (r1 , r2 , K , re ) where n is the

total number of observed module transitions and the

f β (re | γ ,α e ) =

e

∫

∫

e

e

values of the w1 are unknown. Let us assume that the

prior distribution of R is a Dirichlet distribution with a

parametric

vector

= (α1 , α 2 , K , α e )

where

(α i > 0; i = 1,2,K, e) . Then the posterior distribution of

R for the total module execution frequency counts

X = ( x1 , x2 ,K, xe )

is a Dirichlet distribution with

0-7695-0001-3/99 $10.00 (c) 1999 IEEE

7

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

parametric vector ∗ = (α1 + x1 ,α 2 + x2 ,K,α e + xe )

[c.f. 2]. As an example, suppose that we now wish

to model the behavior of a large software system

with such a parametric vector . As the system

makes sequential transitions from one module to

another, the posterior distribution of R at each

transition will be a Dirichlet distribution. Further,

th

the i

component of the

for i = 1,2,K, e

augmented parametric vector α will be increased

by 1 unit each time module mi is executed.

Further, every time the system fails, the failure

count, xe , will also increase my one.

5. Reliability Estimation

The modeling of reliability of systems will be

implemented through the use of an absorbing state

in the stochastic process. In this application, we

will postulate the existence of a virtual program

module representing the failure of the system.

Should control ever be transferred to this module,

it will never be returned. Each program module

has a non-zero probability of transferring control to

this virtual failure module. This probability is

directly related to the fault proneness of the

module. We may, in fact, use the functional

relationship between software complexity and

software faults to derive our prior probabilities for

the transition between each program module and

the virtual failed state module [c.f. 10].

What is important to understand is that each

program module is distinctly related to one or more

functions. If a function is expressed by a set of

modules that are failure prone, then the function

will appear to be failure prone. If, on the other

hand, a function is expressed by a set of modules in

a call tree that are fault free, this function will

never fail. The key point is that it is a

functionality that fails. Not all functions will be

executed by a user with the same likelihood. If a

user executes unreliable functions consistently,

then he will perceive the system to be unreliable.

Conversely, if another user were to use the same

system but exercise functionalities that were not so

likely to fail, then his perceptions of the same

system would be very different.

In order to model the reliability of a software

function, we will augment the basic stochastic

model to include an absorbing state that represents

a virtual program module called the failure state.

Each program module may have a non-zero

transition probability to this virtual module. If a

module is fault free, then its transition probability

will be zero.

To this point we have created a mechanism for

modeling the transition of each program module to the

failure state. In this sense the reliability of each module

mi may be directly determined by the elements, pie0 , of

P0 .

With the Bayesian approach, we have also

established a mechanism for refining our estimates of

these reliabilities and establishing a measure of

confidence in each of these estimates. This information

will now be used to establish the reliability of functions

that employ each of the modules in varying degrees. The

0

successive powers of P will show the failure likelihood

for each of the modules. This will permit us to postulate

on the probability of a failure at some future epoch n

based on the current estimates of failure probability.

A central thrust of this investigation is to establish a

framework for establishing the reliability of program

modules, the basic building blocks of a computer

program. Each program module has an associated

reliability. The total system is a composite of all of the

program modules. As a direct result of design decision

made in the implementation of program functionality, a

module profile emerged for the design. The module

profile qi is the unconditional probability that a module

will be in execution at any epoch. The expected value for

the system unreliability U S at epoch n is simply

c

U S = ∑ rj p (jen ) .

(2)

j =1

An upper bound on this value may be obtained from

c

U Su = ∑ r j ( p (jen ) + b)

j =1

.

where the value b is derived from the upper α 2

confidence limit for each of the transition failure

estimates from equation (1).

Over a number of epochs of successful program

execution the unreliability of a system will diminish

rather rapidly. Hence it only makes sense to talk about

the reliability of a system on an exponential scale. The

reliability of the system RS will be derived from its

unreliability as follows:

RS = − log10 U S .

A lower

bound on this reliability estimate is RSl = − log10 U Su .

The system reliability is ultimately a function of the

user’s operational profile. Should this profile differ from

one use to another, then so too will the system reliability.

Different users running under differing operational

profiles will have differing perceptions of the system’s

reliability.

0-7695-0001-3/99 $10.00 (c) 1999 IEEE

8

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

the element tij of T will increase by one. Every time that

It seems pointless to engage in the academic

exercise of software reliability modeling without

making at least the slightest attempt to discuss the

process of measuring the independent variable(s) in

these models. The basic premise of this paper is

that we really cannot measure temporal aspects of

program failure. The state of the art in measuring

these temporal aspects is best summarized by

Brocklehurst [1]. There are, however, certain

aspects of program behavior that we can measure

and also measure with accuracy such as transitions

into and out of program modules [9].

Let us now turn to the measurement scenario for

the modeling process described above. For this

exercise we have instrumented the SDMS system

to track the transfer of control among the program

modules. We have also designed an experimental

scenario to introduce failures caused by specific

faults in individual program modules. Three users

then exercised the system performing different

activities. User 1 performed information retrieval

activities, displayed the retrieved records, and

generated queries. User 2 performed the role of a

database administrator, creating databases,

modifying their structure and maintaining them

User 3 entered records into a database much as a

clerk would do.

There is a need to record the dynamic behavior

of the total system as it transitions from one

program module to another. We adapted and

extended a software transition profiler called CLIC

[11]. CLIC works in three phases. Phase one

consist in the code instrumentation where "hooks"

are added to each module of the target application

in order to observe the transitions. The second

phase is the profiling itself, where the target

application is run and the software transitions are

recorded as they occurred. The third phase is the

post-processing and analysis of the collected

information which was discarded under the

proposed model because it is done dynamically as

the data is gathered.

The target application was instrumented with

CLIC following the standard procedures. The

second phase of CLIC was modified to fit the

model. Every time a transition was made, the

information was sent to an additional function

called RELEST (RELiability ESTimation) in

charge of keeping track of and analyzing the

system behavior. If there are a total of 20 modules,

then we will need an e × e (21 × 21) matrix T to

record these transitions. Whenever the program

transfers control from module mi to module m j

a failure is caused by a fault in module mi then tie of T

will increase by one representing a transition from that

module to the failure state. The results of program

execution by the three users performing their three

distinct roles is shown in Table 2. The rows of this table,

for each user, contain the row marginal totals for the

matrix T for each user. As the software executed, 9

simulated failures were created during the execution of

the system by all three users.

Total Calls

Module User 1

User 2

User 3 Failures

1

278

33

12

2

3

36

25

10

4

2

1

5

4

3

2

6

3

7

5

4

3

8

11

4

2

1

9

3

0

6

1

10

2

3

11

19

1

12

3

13

2

14

2

2

15

312

2

1

16

15

2

17

5

18

1

19

20

10

1

Totals

681

107

35

9

Table 2. Transition Frequencies

3

2.9

2.8

2.7

R

6. An Empirical Study

2.6

2.5

2.4

2.3

2.2

0

200

400

600

800

1000

1200

1400

Cumulative Epochs

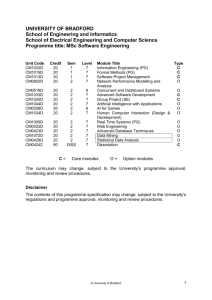

Figure 2. Reliability Growth for User 3

0-7695-0001-3/99 $10.00 (c) 1999 IEEE

9

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

From just the simple frequency counts of

program activity represented in Table 2, we can see

substantial differences in the way the three users of

this system exercise this system. It is clear, for

example, that neither User 1 nor User 3 would have

seen the 2 failure events in module 14 nor would

they have seen the failure events in modules 4 or

19. They didn’t cause either of these three

modules to execute. Their view of the system is

rather like the three blind men who are studying

the nature of the elephant by feel. Each user has a

different piece of the animal.

From the distribution of activity shown in Table

2, we can now compute the execution profiles for

Exec.

Prof.

Module

1

Fail.

Prob.

execution profiles for each user are shown in Table 3.

For each module under each user profile, the failure

probability of each module was then calculated. The is in

essence the probability of the transition to the failure state

for each module. Finally, the System Reliability (Q) is

calculated for each user. This value is obtained from

formula (2) above and is shown in the penultimate row of

Table 3. From the System reliability for each user, we

may

then

calculate

the

System

Reliability

(logarithmically scaled) for each user. These data are

shown in the last row of Table 3.

The three users are using the same software. They are

doing different things with it. User 1’s perception of the

system is very different than that of User 3. It is an order

Perceived Exec. Prof.

Unrel.

User 1

Fail.

Prob.

Perceived

Unrel.

Exec.

Prof.

User 2

Fail.

Prob.

Perceived

Unrel.

User 3

0.408

0.308

0.342

0.052

0.233

0.285

2

3

4

5

0.001

0.018

0.005

0.009

1.7e-04

0.028

6

0.030

0.057

0.028

7

0.007

8

0.016

0.001

2.3e-05

9

0.004

0.001

6.4e-06

10

0.002

11

0.037

0.037

0.009

3.4e-04

0.009

0.057

0.030

1.6e-03

0.171

0.030

4.8e-03

0.028

0.001

0.177

0.009

1.6e-03

0.030

0.018

0.019

3.4e-04

0.060

0.018

0.009

1.7e-04

0.030

12

0.028

13

0.018

14

0.085

0.003

15

0.458

0.001

6.7e-04

16

0.022

0.003

6.4e-05

17

0.007

18

0.019

0.060

0.009

19

20

0.014

System Unreliability (Q)

System Reliability ( − log10 Q )

0.009

7.6e-04

2.7e-03

6.5e-03

3.11

2.56

2.18

Table 3. Reliability Calculations

each user. Estimates for these profiles may be

obtained by dividing the total number of epochs for

each user into the individual module counts. These

of magnitude more reliable for activities performed by

User 1 than for User 3. Both User 2 and User 3 will think

0-7695-0001-3/99 $10.00 (c) 1999 IEEE

10

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

that their software is considerably less reliable than

User 1’s perception of his system.

We would now like to represent the reliability

growth of a system after it has been placed back in

service after a fix. To this end we will continue to

monitor the activity of User 3 through an additional

1170 epochs. At intervals, the transition matrix

was dumped and the reliability calculated for the

elapsed number of epochs. The results of this

computation is shown in Figure 2. Here we can

see a fairly rapid rise in the reliability of the system

in its early stages will some attenuation in this

growth over time.

7. Summary

The failure of a software system is dependent

only on what the software is currently doing: its

functionality. If a program is currently executing a

functionality that is expressed in terms of a set of

fault free modules, this functionality will certainly

execute indefinitely without any likelihood of

failure. A program may execute a sequence of

fault prone modules and still not fail. In this

particular case, the faults may lie in a region of the

code that is not likely to be expressed during the

execution of a function. A failure event can only

occur when the software system executes a module

that contains faults. If a functionality is never

selected that drives the program into a module that

contains faults, then the program will never fail.

Alternatively, a program may well execute

successfully in a module that contains faults just as

long as the faults are in code subsets that are not

executed.

The very nature of the milieu in which

programs operate dictates that they will modify the

behavior of the individuals that are using them.

The result of this is that the user’s initial use of the

system as characterized by the operational profile

will not necessarily reflect his/her future use of the

software. There may be a dramatic shift in the

operational profile of the software user based

directly on the impact of the software or due to the

fact the users’ needs have changed over time. A

design that has be established to be robust under

one operational profile may become less than

satisfactory under new profiles. We must come to

understand that some systems may become less

reliable as they mature due to circumstances

external to the program environment.

The continuing evaluation of the execution,

function, and module profiles over the life of a

system can provide substantial information as to

the changing nature of the program’s execution

environment. This, in turn, will foster the notion that

software reliability assessment is as dynamic as the

operating environment of the program. That a system has

functioned reliably in its past is not a clear indication

that it will function reliably in the future.

Some of the problems that have arisen in past attempts

at software reliability determination all relate to the fact

that their perspective has been distorted. Programs do not

wear out over time. If they are not modified, they will

certainly not improve over time. Nor will they get less

reliable over time. The only thing that really impacts the

reliability of a software system is its functionality. A

program may work very well for a number of years based

on the functions that it is asked to execute. This same

program may suddenly become quite unreliable if its

mission is changed by the user.

By keeping track of the state transitions from module

to module and function to function we may learn exactly

where a system is fragile. This information coupled with

the functional profile will tell us just how reliable the

system will be when we use it as specified. Programs

make transitions from module to module as they execute.

These transitions may be observed. Transitions to

program modules that are fault laden will result in an

increased probability of failure. We can model these

transitions as a stochastic process. Ultimately, by

developing a mathematical description for the behavior of

the software as it transitions from one module to another

driven by the functionalities that it is performing, we can

describe the reliability of the functionality. The software

system is the sum of its functionalities. If we can know

the reliability of the functionalities and how the system

apportions its time among these functionalities, we can

then know the reliability of the system.

8. Acknowledgments

This work was supported in part by a research grant

from the National Science Foundation and the Storage

Technology Corporation of Louisville, Colorado.

7. References

[1] S. Brocklehurst and B. Littlewood, “New ways to get

accurate reliability measures”, IEEE Software, July 1997, pp.

34-42.

[2] M. H. DeGroot, Optimal Statistical Decisions, McGrawHill Book Company, New York, 1970.

[3] G. A. Hall, Usage Patterns:

Extracting System

Functionality from Observed Profiles, unpublished dissertation.

University of Idaho, 1997. SETL TR #97-030.

[4] B. Littlewood, “Software reliability model for modular

program structure”, IEEE Transactions on Reliability, Vol. R28, No. 3, 1979, pp. 241-246.

0-7695-0001-3/99 $10.00 (c) 1999 IEEE

11

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

[5] T. A. Mazzuchi , R. Soyer, “A Bayes method for

assessing product-reliability during development

testing”, IEEE Transactions on Reliability, vol. 42, no.

3, 1993, pp. 503-510.

[6] J. C. Munson, T. M. Khoshgoftaar, “The detection of

fault-prone programs”, IEEE Transactions on Software

Engineering, SE-18, No. 5, 1992, pp. 423-433.

[7] J. C. Munson, R. H. Ravenel, “Designing reliable

software”, Proceedings of the 1993 IEEE International

Symposium of Software Reliability Engineering, IEEE

Computer Society Press, Los Alamitos, CA, November,

1993, pp. 45-54.

[8] J. C. Munson, “Software measurement: problems

and practice”, Annals of Software Engineering, vol. 1,

no. 1, 1995, pp. 255-285.

[9] J. C. Munson, “A Software Blackbox Recorder,”

Proceedings of the 1996 IEEE Aerospace Applications

Conference, IEEE Computer Society Press, pp. 309320.

[10] J. C. Munson, "A Functional Approach to Software

Reliability Modeling," in Boisvert, ed., Quality of

Numerical Software, Assessment and Enhancement,

Chapman & Hall, London, 1997. ISBN 0-412-80530-8.

[11] J. C. Munson, S. G. Elbaum, R. M. Karcich, and J.

P. Wilcox,

“Software Risk Assessment Through

Software Measurement and Modeling”, Proceedings of

the 1998 IEEE Aerospace Conference, IEEE Computer

Society Press.

[12] J. D. Musa, “The operational profile in software

reliability engineering: an overview”, In Proceedings of

the IEEE International Symposium on Software

Reliability Engineering, IEEE Computer Society Press,

Los Alamitos, CA, November, 1992. pp. 140-154.

[13] H. Raiffa, R. Schlaifer, Applied Statistical Decision

Theory, Harvard University Press, Cambridge, 1961.

[14] S.S. Wilks, Mathematical Statistics, John Wiley and

Sons, Inc., New York, 1962.

0-7695-0001-3/99 $10.00 (c) 1999 IEEE

12