Costing Issues in the Production of Biopharmaceuticals


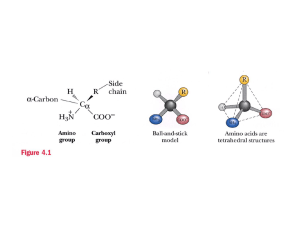

February 1, 2004

By Anurag S. Rathore, PhD, Peter Latham, Oliver Kaltenbrunner, John Curling, Howard Levine

Manufacturing costs are crucial to overall profit margins.

Developing a new drug is a satisfying scientific achievement. Bringing that new drug to market and making a profit is essential to the continued existence of a company, and manufacturing costs are crucial to overall profit margins. Operational planning and early cost analyses are key to generating optimal, robust, and economical commercial processes. Companies can use models of their processes to understand and minimize production costs and optimize their manufacturing operations. This leads to efficient processes and better scheduling and consumables control. The ultimate result is a successful, and profitable, product.

This article, the third in the "Elements of Biopharmaceutical Production" series, will discuss various tools available to perform the modeling required to understand, develop, and optimize manufacturing processes, facilities, and operations. Anurag S. Rathore discusses incentives and steps during process development. Then four industry experts present their viewpoints, insights, and experience. Peter Latham and Howard Levine describe modeling tools; John Curling discusses problems of early and late scale-up; and Oliver Kaltenbrunner discusses how to adapt classical chemical engineering costing to the realities of biopharmaceuticals.

Anurag S. Rathore: Incentives

The average cost to bring a new drug to market is currently about $802 million.1 Consequently, drug developers are searching for tools and methods to contain R&D costs without compromising clinical test data.
Competition is so intense that FDA is reviewing more than 100 pharmaceutical and biotechnology products that are in phase 3 clinical trials or beyond, the largest number of products so close to approval at one time.1

Production costs loom large in management planning. The optimal time to bring them under control is early in process development, while the processes are being designed and various options are still under consideration. Intense product competition, patent expirations, the introduction of more second-generation therapeutics, and pricing constraints are forcing close scrutiny of the economics of bringing drugs to market.

The sales price ($/g) of a drug product generally decreases with increasing production volume. Biogen's interferon, a small-volume drug, sells at $2,940/g, whereas large-volume drugs such as intravenous immune globulin (IVIG) typically sell at $40 to $60/g.2 The split between upstream and downstream processing costs also reportedly depends on the source organism: upstream costs are lower (14 to 19% of the total) for a product derived from recombinant E. coli or Streptomyces and significantly higher (40% of the total) for a mammalian cell culture product.3

Figure 1: Modeling tools vary at each stage of product development.

Many pharmaceutical and biotechnology companies are using and developing a variety of sophisticated techniques for evaluating and minimizing the costs of each new therapy, with the goal of generating optimal, robust, and economical commercial processes. Performing cost analysis during process development can be extremely helpful for several reasons:

1. When the process for a new therapeutic product is defined during initial process development, use an early cost of goods sold (COGS) analysis to identify manufacturing cost drivers (for example, Protein A and virus removal steps).
   Then focus your efforts on evaluating new technologies and process options, such as increasing the lifetimes of the chosen resins or membranes.

2. Take a detailed look at the cycle times for different unit operations; the process design can then be modified to increase productivity. For example, use large-bead resins for faster chromatography, and size equipment and utilities appropriately. A model could also be used to evaluate the cost difference between a single large bioreactor and multiple small bioreactors.

3. Cost models of existing products can generate useful data for analyses of future products. Even for companies that outsource, models can help predict the production cost and can serve as a starting point for negotiations with contract manufacturers.

   Several development teams have recently written about their approaches to designing more economical processes, and interested readers can consult the references for details. Remember that their applicability depends on the similarity to your product and on constraints specific to your facility. Recent cases include the following:

   - Using ion-exchange (IEX) chromatography as an alternative to Protein A chromatography.2,4 IEX is cheaper (media cost per gram processed is $0.30 for High S Macroprep vs. $16.25 for rProtein A Sepharose) and is also free of contaminating immunoglobulins.2 However, using IEX may require additional purification steps and additional process development time.
   - Using membrane chromatography (MC) as an alternative to conventional resin column chromatography.5 MC requires higher media and equipment costs, but lower buffer, labor, and validation costs might make it the more economical option.
   - Using fully disposable, pre-sterilized, and pre-validated components in the bioprocessing plant instead of conventional stainless steel equipment.2,6 A comparison of two cases showed that the disposable option substantially reduced capital investment (to 60% of that for the conventional plant).
     The running costs for the disposable option were 70% higher than for the conventional plant; however, the disposable option reduced time-to-market by nine months.

4. Optimize the facility to minimize downtime and improve the efficiency of changeover and maintenance procedures. Facility changes that meet these objectives include adding or upgrading manufacturing areas or equipment; upgrading utility systems; modernizing control systems; and coupling or decoupling process steps to improve operational flexibility and maximize equipment utilization.

5. Focus process development efforts on increasing the titer of fermentations and cell cultures.7 A fivefold improvement in titer combined with a 40% improvement in downstream recovery resulted in a sevenfold reduction in processing volume, plus a fourfold reduction in COGS and associated capital requirements.

Peter Latham and Howard Levine: Software Models for Analyzing Manufacturing Costs

There are many tools for performing COGS analysis of biotherapeutic products, and not all of them are appropriate at all times. The tool used to evaluate costs often has a major impact on the quality, reliability, and cost of the estimates obtained. The success of any modeling exercise depends on the questions asked and on the accuracy and sensitivity required of the answers.

Figure 2: The structure of a calculation-based model is simple.

The tools described here are calculation-based models, Monte Carlo simulations, and discrete event simulations. Figure 1 summarizes the best time to use each of these tools during product development.

Calculation-Based Modeling

Calculation-based (CB) models range from simple spreadsheet models developed in-house to sophisticated database- or spreadsheet-based offerings from software vendors or consultants. These CB models estimate the rough cost of goods based on a variety of input parameters.
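
A calculation-based model of the kind described here can be very small. The Python sketch below is a minimal illustration, not any vendor's or the authors' model: it assumes a hypothetical cost structure in which each batch incurs fixed materials and labor costs and the plant carries a fixed annual capital charge, and every parameter name and value is an illustrative placeholder. Because required culture volume scales inversely with titer × recovery, the sketch reproduces the arithmetic in item 5 above: a fivefold titer gain combined with a 40% recovery gain (here 50% to 70%) cuts the required volume by 5 × 1.4 = 7.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ProcessInputs:
    """Hypothetical inputs for a minimal calculation-based COGS model."""
    annual_demand_g: float        # purified product required per year (g)
    titer_g_per_l: float          # expression level in the bioreactor (g/L)
    recovery: float               # overall downstream yield, 0-1
    batch_volume_l: float         # working volume per batch (L)
    materials_per_batch: float    # media, buffers, consumables ($ per batch)
    labor_per_batch: float        # operating and QC labor ($ per batch)
    annual_capital_charge: float  # depreciation of plant and equipment ($ per year)


def required_volume_l(p: ProcessInputs) -> float:
    """Culture volume needed to meet annual demand at this titer and yield."""
    return p.annual_demand_g / (p.titer_g_per_l * p.recovery)


def batches_per_year(p: ProcessInputs) -> float:
    # Fractional batches are kept so that small input changes give smooth output.
    return required_volume_l(p) / p.batch_volume_l


def cogs_per_gram(p: ProcessInputs) -> float:
    """Per-batch materials and labor times batches, plus capital, per gram."""
    variable = batches_per_year(p) * (p.materials_per_batch + p.labor_per_batch)
    return (variable + p.annual_capital_charge) / p.annual_demand_g


# Hypothetical base case and an improved process (5x titer, recovery 50% -> 70%).
base = ProcessInputs(
    annual_demand_g=100_000,
    titer_g_per_l=1.0,
    recovery=0.5,
    batch_volume_l=10_000,
    materials_per_batch=500_000,
    labor_per_batch=150_000,
    annual_capital_charge=20_000_000,
)
improved = replace(base, titer_g_per_l=5.0, recovery=0.7)

volume_ratio = required_volume_l(base) / required_volume_l(improved)
print(f"volume reduction: {volume_ratio:.1f}x")  # prints: volume reduction: 7.0x
print(f"COGS: ${cogs_per_gram(base):.0f}/g -> ${cogs_per_gram(improved):.0f}/g")
```

Because the annual capital charge is held constant in this sketch, the modeled COGS falls by less than the fourfold reduction cited in item 5; in practice a smaller required volume also permits a smaller plant, which a fuller model would capture by scaling capital with installed volume.
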
CB models provide an inexpensive way to estimate the cost of manufacturing a product while allowing flexibility in defining the input parameters and the structure of the model. The model's basic structure can follow any number of formats; a simple and powerful structure is shown in Figure 2. The key to this and every other model structure is the process definition: to ensure proper estimates for each of the critical components of cost (materials, labor, and capital), the process definition must be as accurate and complete as possible. The user interface can be designed either to limit the number and range of variables tested or to allow maximum flexibility and variation in input parameters.

The greatest benefits of CB models are cost, time, and flexibility. Generally, such models can be built to the level of complexity needed to answer specific questions within a short time frame. If the modeler uses an off-the-shelf spreadsheet or database program, no additional software licenses are required to build the model, and it can be as flexible as desired. All inputs and outputs can be configured to identify key parameters and sensitivities. With some simple analysis, the user can understand not only the estimated cost of goods for a specific process but also the cost implications of changes to that process. These advantages make CB models a valuable part of the cost analysis toolkit throughout the life of a project.

CB models are particularly useful in the early stages of a project, when the process is not completely defined and assumptions must be made about input variables such as gene expression level, purity and yield, binding capacity of purification columns, and run time for each unit operation.

In any modeling exercise, it is important to make sure that the power and accuracy of the model are consistent with the data that drive it. CB models can be oversimplified, which can lead to large errors in the outputs.
Each assumption and input variable must be carefully evaluated for relevance and accuracy; so-called "rules of thumb" buried in the software may be a source of error. When complexity overwhelms utility, the CB model must be abandoned in favor of other tools. CB models are also limited in predicting the probability of an outcome, given the variability of the many different inputs. To take the CB model to the next level of sophistication and improve on a simple cost analysis, Monte Carlo (MC) simulations are useful.

Monte Carlo Simulations

An MC simulation uses statistical simulation to model a multitude of outputs and scenarios. Many versions are based on spreadsheets that repeatedly generate random values for uncertain variables to simulate a model. In contrast to conventional numerical methods like the CB model, a statistical simulation calculates multiple scenarios of a model by repeatedly sampling values from the probability distributions of each input variable. It then uses those values to calculate the output for each scenario. The result is a distribution of outputs showing the probability of each output over a range of input variables, rather than the single output of a CB model. This effectively automates the "brute force" approach to running sensitivities for CB models.

Figure 3: Monte Carlo simulations show that risky projects can have negative payoffs.

In an MC simulation, the process is simulated based on the process description and its input variables, which are described by probability density functions (PDFs) rather than discrete values. (Developing a PDF is hard work and beyond the scope of this article. To learn more, see Reference 8.) Once the PDFs are known, the MC simulation proceeds by random sampling from them. The simulation is run many times (anywhere from a single trial to several million), and the results are averaged over the number of trials.
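
The sampling loop just described can be sketched in a few lines of Python using only the standard library. This is a hypothetical illustration rather than any commercial MC package: the titer is drawn from a triangular distribution (0.5 to 2 g/L, most likely 1 g/L) and the downstream recovery from a uniform distribution (40% to 70%), both invented for the example, and each trial feeds the sampled values into a simple CB-style COGS formula. The spread of the samples, and the standard error of their mean (standard deviation divided by √N), is what lets one estimate how many trials a given precision requires.

```python
import math
import random
import statistics


def cogs_per_gram(titer_g_per_l: float, recovery: float,
                  demand_g: float = 100_000, batch_volume_l: float = 10_000,
                  cost_per_batch: float = 650_000,
                  annual_capital: float = 20_000_000) -> float:
    """Simple CB-style COGS: batch costs plus a fixed capital charge, per gram."""
    grams_per_batch = batch_volume_l * titer_g_per_l * recovery
    batches = demand_g / grams_per_batch
    return (batches * cost_per_batch + annual_capital) / demand_g


def simulate(n_trials: int, seed: int = 1) -> list[float]:
    """Draw uncertain inputs from (hypothetical) distributions and record COGS."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    samples = []
    for _ in range(n_trials):
        titer = rng.triangular(0.5, 2.0, 1.0)  # low, high, mode (g/L)
        recovery = rng.uniform(0.4, 0.7)
        samples.append(cogs_per_gram(titer, recovery))
    return samples


def trials_needed(std_dev: float, half_width: float, z: float = 1.96) -> int:
    """Trials so the ~95% confidence half-width of the mean is <= half_width."""
    return math.ceil((z * std_dev / half_width) ** 2)


samples = simulate(10_000)
mean = statistics.fmean(samples)
std_dev = statistics.stdev(samples)
std_error = std_dev / math.sqrt(len(samples))
cuts = statistics.quantiles(samples, n=20)  # 5%, 10%, ..., 95% cut points
p5, p95 = cuts[0], cuts[-1]

print(f"mean COGS ${mean:.0f}/g (standard error {std_error:.2f})")
print(f"90% of outcomes between ${p5:.0f}/g and ${p95:.0f}/g")
print("trials for +/- $1/g precision:", trials_needed(std_dev, 1.0))
```

Rerunning with wider or narrower input distributions shows directly how input uncertainty propagates to the COGS distribution, which a single CB run cannot show.
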
In many practical applications, one can predict the statistical error, or variance, of this averaged result and estimate the number of MC trials needed to achieve a given degree of certainty.

A good application of MC simulation is predicting the range of net present values (NPVs) for a product portfolio based on a variety of input variables, including the overall yield of the manufacturing process, different process options, and the probability of successful commercialization of each product. Each product in the pipeline will have a different probability of success based on its current stage of development and potential clinical indication, as well as different fermentation volume requirements based on the overall process definition, process yield (expression level and purification yield), and expected market demand.

The weaker alternative, a CB model, allows the estimation of the most probable COGS or the required fermentation volume for each product based on discrete inputs. However, a CB model does not allow a complete analysis of each product's potential demand based on a distribution of values for each input variable, nor can it analyze the entire product pipeline simultaneously or estimate the most probable distribution of portfolio NPVs.

Combining a simple CB model with an MC simulation generates a probability distribution of NPV, and different PDF inputs lead to markedly different outputs. In both examples in Figure 3, the dotted red line shows the mean NPV of a company's current portfolio based on discrete inputs (CB alone). Although the mean NPV is the same in both cases, the distribution of possible outcomes is very different. The first example shows the probability distribution for a high-risk product portfolio: there is a significant probability that the NPV will be negative, suggesting that the company may want to re-evaluate the portfolio, including its manufacturing options.
The second example shows the output for a more conservative product pipeline, in which the majority of the predicted NPVs for the portfolio are positive.

A similar MC simulation using the same data can determine whether the company will have too much or too little manufacturing capacity for its product portfolio. With the risky portfolio, where the chance of product failure is high, the company may find that it has too much capacity, tying up valuable capital in "bricks and mortar" and potentially making product development difficult. On the other hand, building too little capacity could result in an inability to meet market demand, as in the recent Enbrel shortage.

Beyond the example in Figure 3, MC analyses can be used to examine all aspects of the COGS for a specific product, including the impact of process variables on cost and the risk of not achieving certain process objectives. The timing of a product's market introduction can affect overall operations and costs, and analyzing project schedules can help characterize their uncertainty.

As with the other models, MC simulations depend on the probability distributions supplied for the variables: the better the input data, the more accurate the model. Nevertheless, by using a distribution of values rather than discrete inputs, and by reviewing the results of the simulation, one can begin to understand the possible implications of errors in the model's assumptions.

Discrete Event Modeling

As a project progresses toward commercialization, discrete event modeling (DEM), also called discrete event simulation, becomes a more powerful and useful modeling tool. With DEM, an entire manufacturing facility can be modeled on a computer, allowing the user to virtually build, run, and optimize a facility before investing any capital. The heart and soul of a DEM is the scheduler, which simulates the running of the plant as the process calls for resources.
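
To make the role of the scheduler concrete, the following Python sketch is a deliberately tiny discrete event simulation, not a model of any real facility or commercial DEM product. It assumes a hypothetical plant in which several bioreactors, started one day apart, feed a single downstream purification train. A priority queue holds future events (harvests and purification completions), and harvested batches wait whenever the train is busy, so an undersized shared resource shows up as accumulated waiting time and lost throughput.

```python
import heapq
from collections import deque


def simulate_plant(n_reactors: int, culture_days: float, turnaround_days: float,
                   purification_days: float, horizon_days: float) -> tuple[int, float]:
    """Toy discrete event simulation of bioreactors sharing one purification train.

    Returns (batches purified within the horizon, total days harvests waited).
    """
    events: list = []  # min-heap of (time, sequence, kind, reactor_id)
    sequence = 0

    def schedule(time: float, kind: str, reactor: int) -> None:
        nonlocal sequence
        # The sequence number breaks ties so equal-time events keep FIFO order.
        heapq.heappush(events, (time, sequence, kind, reactor))
        sequence += 1

    for r in range(n_reactors):  # reactors start one day apart (hypothetical)
        schedule(r + culture_days, "harvest", r)

    waiting: deque = deque()  # harvest times of batches queued for purification
    train_busy = False
    purified = 0
    total_wait = 0.0

    while events:
        now, _, kind, reactor = heapq.heappop(events)  # the scheduler's next event
        if now > horizon_days:
            break
        if kind == "harvest":
            waiting.append(now)
            # Clean, re-inoculate, and start this reactor's next batch.
            schedule(now + turnaround_days + culture_days, "harvest", reactor)
        else:  # "purified": the train has finished a batch and is free again
            train_busy = False
            purified += 1
        if not train_busy and waiting:
            total_wait += now - waiting.popleft()  # delay caused by the busy train
            train_busy = True
            schedule(now + purification_days, "purified", -1)

    return purified, total_wait


for days in (3, 5):
    done, wait = simulate_plant(n_reactors=4, culture_days=14, turnaround_days=2,
                                purification_days=days, horizon_days=365)
    print(f"{days}-day purification: {done} batches purified, {wait:.0f} days of waiting")
```

With four reactors each harvesting every 16 days, the hypothetical 5-day train cannot keep up, so harvests queue and fewer batches are purified within the year. A real DEM would add labor, utilities, cleaning, and hold-time limits as further resources and constraints.
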
Delays become readily apparent with DEM if adequate resources are not available to run the process optimally. This allows the user to identify and understand not only the causes of delays but also their impact. As such, DEM is the most powerful tool available for the design (including support systems and utilities), debottlenecking, scheduling, and optimization of a manufacturing facility. When used in conjunction with a CB model, DEM can provide a complete picture of manufacturing costs, plant throughput, and resource utilization. In addition, DEM is the only tool that can help management both understand the full impact of multi-product facility changes and schedule them.

As with CB models, a DEM is only as good as the process information supplied to it. However, DEM calculates a greater number of parameters, and the implications of potential problems are more readily apparent than with CB models or MC simulations.

Sometimes, the most powerful tool is not the right tool for the job. This is true early in process development, when the assumptions made and the accuracy required do not warrant the time and cost of more sophisticated modeling. Nevertheless, once the basic process is defined, DEM becomes a valuable tool for designing and optimizing a facility, and for this reason many companies begin DEM as early as the preliminary design phase.

Past uses of DEM in the biopharmaceutical industry include the optimization of a facility that was achieving only 50% of its design capacity. By modeling the plant and process on a computer, and verifying the model's output against the actual operating facility, we were able to identify the causes of the relevant delays and explore ways to alleviate them. Through an iterative process of testing multiple changes to the facility and process, we identified the ideal approach to optimizing the facility's output.
Subsequently, these changes were implemented, and the plant's productivity improved dramatically, as predicted by the model. The running facility matched the revised model, thereby validating it.

DEM has also been used successfully to calculate the cost advantages of disposable technologies, and it can closely examine the cost benefits of particular efficiencies in plant support areas, where CB and MC models are less precise. DEM has also helped in scheduling resources in a manufacturing plant, including matching labor utilization to rotating shifts. Whatever the application, if the objective is to address scheduling and the use of limited resources (including equipment, labor, support systems, and utilities), then DEM is the right tool for the job.

John Curling: Early Scale-Up Helps - or Hurts

Our industry is maturing. It has done a good job of responding to the new opportunities provided by recombinant DNA and hybridoma (a hybrid cell resulting from the fusion of a lymphocyte and a tumor cell) technologies. The industry is now actively pursuing cost-effective pharmaceutical processes for defined therapeutic products, with an intense focus on the cost of goods.

Table 1: Discovery and commercial production differences

Management may focus on COGS, cost of goods manufactured (COGM), or cost of sales, but the ultimate cost depends on the expression system used, the expression level in that system, the integration of the process's unit operations, and the process efficiency or yield. According to Myers, the costs of monoclonal antibody production are split one-third to cell culture, one-third to purification, and one-third to support. The overriding cost driver is the bioreactor titer, even though chromatography may account for two-thirds of downstream processing costs.9

The best time to start addressing process costs is in the R&D phase. The dialogue between research and process development is a potential gold mine.
If product development is integrated further back into discovery and drug development, more savings may be possible, although there is a risk of stifling research and constricting the drug pipeline. Frequently, technology choices made during development in both upstream and downstream processing present problems when the product is in the clinic or on the market.

A real challenge is the difference in characterization standards between small-molecule drug substances and biologics. Because of their origin and complexity, biopharmaceutical entities are characterized by the process used to manufacture them, at least in the eyes of regulators. This has an immense cost impact, because processes are frozen at the very early stage of clinical trial lot production; incorporating early-stage, poorly developed methodologies into production will have a major impact later on. Process development is therefore pivotal in transforming the R&D process into a manufacturing process.

According to Karri et al., "There is as yet no framework to link together predictive process models as part of an overall business planning strategy. This is probably a reflection of the historical focus on discovery, rather than manufacture, and of the very significant margins, and hence returns, that have characterized the industry during past decades."10 This is also a statement of the hidden cost of biologics development. Most companies have learned that if process development releases an incomplete process to manufacturing, multiple failures, consequent delays, and major costs will ensue.

Gary Pisano examines the differences between discovery and full commercial-scale production in his book, The Development Factory.11 Table 1, adapted from that book, shows the major differences between the two. The driving forces in research are the identification of the potential drug substance and the demonstration of initial therapeutic effect.
At this stage, little attention is paid to optimizing purity and cost. The initial microbiology and biochemistry lay the foundation for the process; the process development group must then scale up the batch, improve the purity, and cut costs while meeting a schedule. At this stage, scale-up is nonlinear, and new equipment configurations will be used, sometimes with advantages and sometimes without. It is questionable whether we yet have the models and structures to describe and help effect the major changes that need to occur: for example, reducing the number of steps from 25 to 7, increasing the purity to >99.9% (and identifying and quantifying impurities and product variants), and achieving regulatory compliance (see Table 1).

Over the past decade, highly sophisticated software tools and models have been developed to analyze and describe the cost and operational aspects of biological processes ranging from fermentation to formulation. Programs for DEM and COGS, such as SuperPro Designer and the BPS Simulation Process Models, provide excellent support. They force the user to identify every detail that affects the process, and that alone is a critical task of process development.

In microbiological development, R&D may choose the cell line for speed of development rather than for genetic stability, expression level, and adaptability to serum-free or protein-free media. The purification burden is therefore pushed into downstream processing. Temporarily, we get an apparent gain from the early integration of biochemical engineering with biochemistry. However, trusted separation media and operations are integrated before optimization and before newer, more cost-effective technologies that could cut steps from the process have been considered. New technology is not always easy to adopt and must be proven in a pilot plant.
This may apply to the use of general adsorbents (such as ion exchangers) rather than biospecific ligand capture-and-elute technologies; the use of soft gels rather than rigid matrices; and the use of traditional fixed-column beds in preference to expanded-bed adsorption or multiple-column arrangements (such as swing-bed or simulated moving-bed chromatography). Membrane chromatography may finally be coming of age and should have a positive cost impact in the removal of low-concentration impurities and contaminants.

Oliver Kaltenbrunner: Biopharmaceutical Costs are Different

Cost estimation for biopharmaceutical processing has its basis in standard chemical engineering practice. First, a model representing the intended process is created. Second, this model is used to estimate the equipment needed. Third, overall capital cost requirements are projected from the equipment needs and typical multipliers for piping, instrumentation, construction, and installation. In addition to fixed capital investments, variable costs are estimated from the raw material needs of the process and the typical labor, laboratory testing, and utilities costs of the particular unit operations. Labor and utility costs are treated as fixed or variable costs, depending on the particular manufacturing situation. For example, it may be more expensive to shut down and restart utilities at a regulatory-approved manufacturing site than to keep them running during nonproductive times.

Table 2: Frequently used cost terms

The classical estimation method needs refinement to suit biotechnology processes, because it underestimates variable costs when a single metric such as direct materials costs, COGS, or unit manufacturing costs is used. An alternative is to consider several metrics. Table 2, presented as an input-output table, summarizes which expenses to include to generate specific cost estimates; an input item affects a cost only if "YES" appears in its corresponding box.
To avoid complicating comparisons between estimates, input and output terms should not be used interchangeably. Unlike in conventional chemical processing, inventory levels of both work in process and finished goods are held significantly higher for biotech products.

The costing terms in Table 2 work well for estimating the costs of a particular production year and are therefore useful for estimating taxes. However, the results may vary dramatically from year to year. For any multi-year project, the timing of expenses can be critical, and only NPV analysis can capture the true nature of the activities. Capital-intensive production versus high operating costs, or stocking large amounts of expensive raw materials up front versus consuming them over the life of the project, can be compared only when the timing of expenses is considered in more detail.

Another recurrent difficulty is omitting or combining the success rates of individual process steps. Failure rates of cell culture bioreactors can be significant and in many cases should not be neglected. But failing a bioreactor production step does not necessarily fail the downstream process, so the two cannot be lumped into a single overall success rate. The presence of reprocessing or waste disposal units in a process model usually indicates that step failures have been accounted for separately.

The cost of consumables is one factor that considerably complicates the analysis of typical biotechnology processes and makes it very different from standard cost estimating methods. In contrast to classical chemical processing, consumables such as resins and membranes can be a significant portion of the raw material costs in the production of biopharmaceuticals. For example, in a typical monoclonal antibody production process, the resin cost for an affinity-capture column can overwhelm raw material costs.
If a monoclonal antibody were captured directly on a typical Protein A resin from a 10,000-L cell-culture broth at a product titer of 1 g/L, resin costs of $4 to $5 million would arise for one packed column. Common sense recommends reusing resins despite the complications of reuse validation. Cycling columns within one production lot to increase the utilization of expensive consumables also seems advisable. The projected inventory of material on hand will add significantly to the projected process costs as well. To determine the optimum number of reuses and cycles for a particular resin, the cost of production must be calculated for each scenario under consideration.

Another big problem in establishing a reliable production cost estimate arises because consumables typically have both time- and use-dependent expiration criteria. If expiration were defined only by the number of uses, consumables costs would simply behave as variable costs. If expiration of resins and membranes were defined only by an expiration time, they could be depreciated as a fixed asset. However, having criteria for both time and cycles makes their cost contribution sensitive to the annual production demand. An average production capacity cannot adequately describe production costs for products ramping up in demand after launch or during conformance lots; during the early production years, a resin may expire before it ever reaches its maximum number of validated reuses. To make a reliable production cost estimate, the entire projected production campaign must be taken into account, because single-year estimates result in high manufacturing variances. It is therefore not enough to estimate production costs from an average production year: every year of production must be estimated separately while keeping track of consumables' cycles and age.
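
The year-by-year bookkeeping described above can be sketched as follows. This Python example is an illustration under stated assumptions, not the author's tool: the dynamic binding capacity (20 g/L) and resin price ($9,000/L) are hypothetical values chosen only because they land within the $4 to $5 million range quoted above for single-cycle capture of 10 kg (10,000 L at 1 g/L), and the validated lifetime (100 cycles), shelf life (2 years), and ramping lot schedule are likewise invented. Resin age is checked only in whole years.

```python
def column_volume_l(product_per_lot_g: float, binding_capacity_g_per_l: float,
                    cycles_per_lot: int) -> float:
    """Resin volume needed to capture one lot in the given number of cycles."""
    return product_per_lot_g / (binding_capacity_g_per_l * cycles_per_lot)


def annual_resin_costs(lots_per_year: list[int], cycles_per_lot: int,
                       column_cost: float, max_cycles: int,
                       shelf_life_years: int) -> list[float]:
    """Resin spend per year when resin expires on cycles used OR on age.

    A column is packed only when a lot needs one, and it is replaced whenever
    it has reached its shelf life (checked in whole years) or the next lot
    would exceed its validated number of cycles.
    """
    costs = []
    packed_year = None  # year the current column was packed; None = no column
    cycles_used = 0
    for year, lots in enumerate(lots_per_year):
        spend = 0.0
        for _ in range(lots):
            aged_out = (packed_year is not None
                        and year - packed_year >= shelf_life_years)
            used_up = cycles_used + cycles_per_lot > max_cycles
            if packed_year is None or aged_out or used_up:
                spend += column_cost  # discard old resin, pack a fresh column
                packed_year, cycles_used = year, 0
            cycles_used += cycles_per_lot
        costs.append(spend)
    return costs


# 10,000 L at 1 g/L = 10 kg per lot; capacity and price are hypothetical.
PRICE_PER_L = 9_000.0  # $/L of Protein A resin (assumed)
single_cycle = column_volume_l(10_000, 20, 1) * PRICE_PER_L  # 500 L of resin
four_cycles = column_volume_l(10_000, 20, 4) * PRICE_PER_L   # 125 L of resin

ramp = [3, 8, 20, 30, 30]  # hypothetical lots per year after launch
costs = annual_resin_costs(ramp, cycles_per_lot=4, column_cost=four_cycles,
                           max_cycles=100, shelf_life_years=2)
for year, (lots, cost) in enumerate(zip(ramp, costs)):
    print(f"year {year}: {lots:2d} lots, resin ${cost:,.0f} (${cost / lots:,.0f}/lot)")
```

In this hypothetical schedule, the first column ages out after only 44 of its 100 validated cycles, so the resin cost per lot in year 0 ($375,000) is five times that in year 4 ($75,000); an estimate based on an average production year would hide exactly this effect.
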
Year-by-year estimation of this kind is in stark contrast to traditional chemical engineering cost modeling, where the cost of each unit produced is virtually identical and process costs can be reasonably estimated without drawing up detailed production demand scenarios. Estimating has certainly become more difficult now that consumables costs depend on the demand scenario, and cost estimates must be repeated for demand scenarios of varying optimism. The complex behavior of consumables costs must be considered when trying to determine the optimal process scale or the number of consumables reuses and cycles within lots. Unfortunately, commercial software does not allow this level of complexity to be considered. At present, there is no way around tedious aggregation of model scenario outputs or tedious development of custom tools. Eventually, commercial vendors will acknowledge this shortfall in their products and implement solutions tailored to the unique circumstances of cost estimation in biotechnology.

Elements of Biopharmaceutical Production

This article is the third in a series in BioPharm International coordinated by Anurag S. Rathore. The series presents the opinions and viewpoints of many industry experts on issues routinely faced in the process development and manufacturing of biopharmaceutical products. Earlier articles were "Process Validation - How Much to Do and When to Do It" (October 2002) and "Qualification of a Chromatographic Column - Why and How to Do It" (March 2003).

References

1. Agres T. Better future for pharma/biotech? Drug Discovery & Development 2002 Dec 15.
2. Curling J, Baines D. The cost of chromatography. IBC: Production and Economics of Biopharmaceuticals; 2000 Nov 13-15; La Jolla, CA.
3. Rosenberg M. Development cost and process economics for biologicals derived from recombinant, natural products fermentation, and mammalian cell culture. IBC: Production and Economics of Biopharmaceuticals; 2000 Nov 13-15; La Jolla, CA.
4. Sadana A, Beelaram AM. Efficiency and economics of bioseparation: some case studies. Bioseparation 1994; (4):221.
5. Warner TN, Nochumson S. Rethinking the economics of chromatography. BioPharm International 2003; 16(1).
6. Novais JL, Titchener-Hooker NJ, Hoare M. Economic comparison between conventional and disposables-based technology for the production of biopharmaceuticals. Biotech. Bioeng. 2001; (75):143.
7. Mullen J. The position of biopharmaceuticals in the future. IBC: Production and Economics of Biopharmaceuticals; 2000 Nov 13-15; La Jolla, CA.
8. Mun J. Applied risk analysis: moving beyond uncertainty. Hoboken (NJ): Wiley Finance; 2003.
9. Myers J. Economic considerations in the development of downstream steps for large scale commercial biopharmaceutical processes. IBC: Production and Economics of Biopharmaceuticals; 2000 Nov 13-15; La Jolla, CA.
10. Karri S, Davies E, Titchener-Hooker NJ, Washbrook J. Biopharmaceutical process development: part III. J. BioPharm Europe; Sept 2001: 76-82.
11. Pisano GP. The development factory. Boston (MA): Harvard Business School Press; 1997.