
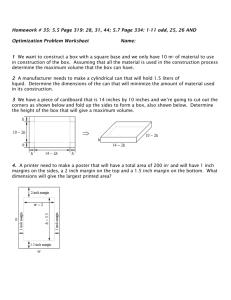

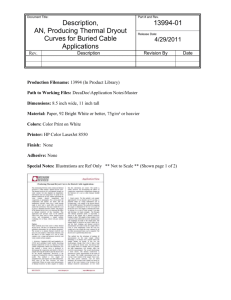

CE207L Measurement Error (revised 2/19/2007)

A major part of engineering work is making and reporting measurements. When communicating technical information such as numerical quantities, it is important to report numbers so that the reader can tell that the results reflect the precision achieved by the original measurement. To do otherwise may give your reader an incorrect idea about how well your answer represents reality. Since making completely error-free measurements is an elusive goal, you must understand numerical results and be able to communicate them correctly, so that your audience understands, or at least is given information about, the accuracy of your work.

Measurement terms (ASTM D 2777-96)

accuracy - a measure of the degree of conformity of a single test result generated by a specific procedure to the assumed or accepted true value; it includes both precision and bias.

bias - the persistent positive or negative deviation of the average value of a test method from the assumed or accepted true value.

precision - the degree of agreement of repeated measurements of the same property, expressed in terms of dispersion of test results about the arithmetical mean result obtained by repetitive testing of a homogeneous sample under specified conditions. The precision of a test method is expressed quantitatively as the standard deviation computed from the results of a series of controlled determinations.

Measurement Error

The difference between the true value of a measured object or parameter and the observed value of what is measured is called error. Sources of error include

1. instrument errors, such as manufacturing or calibration defects
2. user problems, typically related to eyesight limitations (including lighting, physical problems, etc.) or to user inexperience, mistakes, etc.
3. inability to make a careful measurement due to environmental conditions, physical obstructions, changes in the object being measured, etc.
4. errors made in writing the original measurement value or in subsequent operations.

There are two general categories of error: systematic (bias) error and random (precision) error. An illustration of each is given in Figure 1.

1. Systematic or bias error occurs when a measurement or group of measurements is consistently offset by some amount higher or lower than the true value. In many cases this type of error can be discovered and accounted for, so that a correction can be made to the original readings. Systematic errors tend to accumulate proportionately to the number of measurements made and used in subsequent calculations (Moffitt 1998).
2. Random or precision error occurs when the magnitude and sign of the error cannot be predicted. Although random errors may not be easily corrected, their effects may be reduced by taking the average of several readings or by using other mathematical techniques that cause a part of the errors to cancel each other. Random errors tend to grow proportionately to the square root of the number of measurements (Moffitt 1998).

As shown in Figure 1, the true value of a measured quantity can be estimated by adding the bias error to the average measured reading. For example, if the measured length of an object averaged over 7 readings is 12.56 mm and the known bias error is 1.02 mm, then the true length would be 12.56 mm + 1.02 mm = 13.58 mm.

Figure 1 - Illustration of measurement bias and precision errors. (Plot of measured value against measurement readings 1 through 7, marking the true value, the average value, the bias error, and the precision error.)

The goal of making any measurement is to get a true (perfect) indication of what the actual value is. To do this, the measurement technique or equipment must be both precise and unbiased; when both conditions hold, an accurate measurement can be made. About the only way a measurement may be made with zero error is by chance.
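The bias correction, the averaging of readings, and the two growth rules described above can be sketched in Python. This is only an illustration under stated assumptions: the seven readings are made-up values chosen to average 12.56 mm, the 0.01 mm per-measurement error is arbitrary, and the function names are hypothetical. The sketch follows the handout's convention of adding the known bias error to the average reading, and it uses the sample standard deviation as the measure of precision.

```python
import math
import statistics


def estimate_true_value(readings, bias_error):
    """Average the readings, then add the known bias error (handout convention)."""
    return statistics.mean(readings) + bias_error


def precision(readings):
    """Sample standard deviation of the readings, used as the precision measure."""
    return statistics.stdev(readings)


def accumulated_systematic(error_per_measurement, n):
    """Systematic error accumulates in proportion to the number of measurements."""
    return error_per_measurement * n


def accumulated_random(error_per_measurement, n):
    """Random error grows in proportion to the square root of the number."""
    return error_per_measurement * math.sqrt(n)


# Hypothetical readings in mm (illustrative only); they average 12.56 mm.
readings = [12.53, 12.58, 12.55, 12.60, 12.54, 12.57, 12.55]
bias_error = 1.02  # mm, the known bias error from the handout's example

true_length = estimate_true_value(readings, bias_error)
print(f"average reading : {statistics.mean(readings):.2f} mm")
print(f"precision (s)   : {precision(readings):.3f} mm")
print(f"estimated true  : {true_length:.2f} mm")

# Compare how a 0.01 mm error per measurement accumulates over 9 measurements.
print(f"systematic total: {accumulated_systematic(0.01, 9):.2f} mm")
print(f"random total    : {accumulated_random(0.01, 9):.2f} mm")
```

With nine measurements the systematic error has grown ninefold while the random error has only tripled, which is why a bias left uncorrected tends to dominate long chains of measurements.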
If an error is made due to an obvious or known mistake, such as malfunctioning equipment or a personnel error, it should be noted as such and omitted from related calculations and conclusions.

Making a measurement

To make an accurate measurement, the appropriate instrument must be selected for the task. Table 1 summarizes a variety of measurement tasks and the equipment used in our labs to perform those measurements. Several points need to be considered before the most appropriate instrument can be chosen.

1. The type of measurement: length, weight/mass, time, pressure (differential or gage), temperature, strain, electrical potential in volts, electrical current in amperes, etc.
2. Must the instrument make physical/electrical contact with the object, and if so, how? Are there any obstructions to overcome?
3. What other conditions or factors might complicate the measurement task: accessibility, time of test, time duration of test, vibration, personnel requirements/training/availability, etc.?
4. How accurate must the measurement be? Will one test suffice or must there be multiple test samples taken?

Table 1 - Measurement tasks and the typical instruments used in CE labs

| Measurement | Instrument type | Typical capacities | Typical accuracy of instrument and readout system |
| --- | --- | --- | --- |
| Length | Scale (ruler) | typically up to 3 ft (1 m) | +/- 1/32 inch to 0.5% of the full scale |
| | Surveyor's tape | 100 ft | +/- 0.01 foot |
| | Mechanical micrometer | 0-1 inch | +/- 0.001 inch |
| | Mechanical vernier caliper | 0-6 inch, 0-12 inch | +/- 0.001 inch |
| | Electronic micrometer | | +/- 0.001 inch |
| | Electronic vernier caliper | | +/- 0.001 inch |
| | Linear displacement transducer | 0 to 2 inches | +/- 0.001 inch |
| Weight/mass | Electronic scale | 4100 x 0.01 g | |
| | Electronic scale | 8100 x 0.1 g | |
| Pressure | Pressure transducer, gage | 125 psi | +/- 5 psi |
| | Pressure transducer, differential | 0-138 inch H2O | +/- 1 inch H2O |
| | Pressure transducer, differential | 0-15 inch H2O | +/- 0.2 inch H2O |
| Temperature | Mechanical thermometer | | +/- 1° C to 5° C |
| | Digital thermometer | | +/- 1° C |
| Time | Electronic timer, manual operation | | +0/-0.1 sec and depends on manual operation to start/stop timer |
| Electrical Potential | | 0-300 VDC | +/- 0.1% of full scale |

Using measuring instruments

Instrument precision generally means that a measurement device allows the person making the measurement to read the dimension in sufficiently fine increments and to observe the same measurement repeatedly. In order to use the device and report its output in calculations or a report, the user must know something about the instrument's accuracy. The discussion below details some things to be considered when selecting and using certain instruments. While specific devices are covered here, the general concepts can be applied to most measurement situations.

Linear measurements

A mechanical micrometer with a one-inch range may be used to measure anything from the thickness of a sheet of paper to an object up to one inch thick.
Since a sheet of paper may have an average thickness of a few thousandths of an inch, it would be desirable to be able to make a measurement in 0.001-inch increments over the entire expected range. Provided the micrometer is designed to make accurate measurements to the nearest 0.001 inch, any such measurement could be reported to the nearest 0.001 inch. If you had a mechanical micrometer with a vernier scale, or a digital version, that enabled readings to the nearest 0.0001 inch (1 × 10⁻⁴ inch), you could report your readings to the nearest 0.0001 inch.

On the other hand, consider a standard metal scale marked to one sixty-fourth of an inch as shown in Figure 2. Even though one sixty-fourth inch is numerically equivalent to 0.015625 inch, you should not report all of those digits in a report for at least two reasons:

1. To do so would imply that you were able to read the measurement to the nearest 1 × 10⁻⁶ inch, which is not possible for the average person.
2. The level of error in a scale this finely divided is probably not much better than one-half the smallest marked increment, or the width of the marks on the ruler, whichever is greater.

Figure 2 - A typical steel scale marked in sixty-fourths of an inch.

What would be the appropriate number to report in this case? You should report to a level of precision that you can defend if asked to verify your measurements. Assuming that your visual ability allows you to determine which mark on the scale is closest to the reference on the object you are measuring, then you should round to the nearest ½ mark. Since one sixty-fourth is about 0.0156 and one-half of that is 0.0078, you should round to the nearest 0.01 inch since that is about the finest increment you are likely to resolve.
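The reporting rule for the steel scale can be sketched in Python. This is a minimal illustration, not a prescribed procedure: the helper names `half_division` and `report_reading` are hypothetical, the reading of 37 marks is a made-up example, and the 0.01-inch reporting increment is simply the value the handout arrives at for a scale marked in sixty-fourths.

```python
from decimal import Decimal, ROUND_HALF_UP
from fractions import Fraction

# Smallest marked increment of the steel scale in Figure 2, in inches.
SMALLEST_DIVISION = Fraction(1, 64)


def half_division(division):
    """One-half of the smallest marked increment, the likely reading error."""
    return division / 2


def report_reading(marks, division=SMALLEST_DIVISION, increment="0.01"):
    """Convert a whole number of scale marks to inches, rounded to the increment.

    The default increment of 0.01 inch is the handout's recommendation for
    this scale; it is an assumption for any other instrument.
    """
    exact = Decimal(marks * division.numerator) / Decimal(division.denominator)
    return exact.quantize(Decimal(increment), rounding=ROUND_HALF_UP)


# Half of one sixty-fourth inch, the basis for choosing the 0.01-inch increment.
print(float(half_division(SMALLEST_DIVISION)))  # 0.0078125

# Hypothetical reading: the object edge lines up with the 37th mark.
print(37 / 64)             # 0.578125, more digits than the scale can justify
print(report_reading(37))  # 0.58, the value to report
```

Using `Decimal` with an explicit rounding mode keeps the reported value free of binary floating-point artifacts, which matters when the point of the exercise is to show only the digits that can be defended.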

A linear displacement transducer (LDT) is an electrical device typically designed to work with a signal conditioner/digital readout device and calibrated with the resolution of both the LDT and the readout device in mind. In our labs, the LDTs have nearly infinite resolution but a stated nonlinearity of 0.1 percent (1/1000) of the full-scale value. This means that, for a 1-inch capacity LDT, the output at any point within the 1-inch range could be as much as 1 inch × 1/1000 = 0.001 inch away from the true value. The digital display units we use have an accuracy (linearity) of 0.01 percent of full scale, although the electrical output of the transducer will normally stay well within the display unit's range. Since the linearity error of the LDT is ten times greater than that of the digital display, it is presumed that the LDT error controls the final error level of the system. Therefore, we can say that the accuracy of the LDT system in our labs will be plus or minus 0.001 inch.
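The LDT arithmetic above can be checked with a short Python sketch. The function names are hypothetical, and the sketch assumes that the display's full scale corresponds to the same 1-inch range as the LDT, which the handout does not state. Following the handout's reasoning, the larger component error is taken as the system error.

```python
def full_scale_error(full_scale, percent):
    """Absolute error implied by a percent-of-full-scale specification."""
    return full_scale * percent / 100.0


def controlling_error(*errors):
    """Take the largest component error as the system error (handout's approach)."""
    return max(errors)


LDT_FULL_SCALE = 1.0  # inch, the 1-inch capacity LDT from the example

# LDT nonlinearity: 0.1 percent of full scale.
ldt_error = full_scale_error(LDT_FULL_SCALE, 0.1)

# Display linearity: 0.01 percent of full scale.
# Assumption: the display full scale maps to the same 1-inch range.
display_error = full_scale_error(LDT_FULL_SCALE, 0.01)

system_error = controlling_error(ldt_error, display_error)
print(f"LDT error     : +/- {ldt_error:.4f} inch")
print(f"display error : +/- {display_error:.4f} inch")
print(f"system error  : +/- {system_error:.3f} inch")
```

The same helper applies to any percent-of-full-scale entry in Table 1; for example, `full_scale_error(300.0, 0.1)` gives about 0.3 V for the 0-300 VDC electrical potential range.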