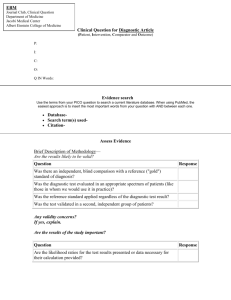

A diagnostic test study

Diagnostic Test Studies
Tran The Trung, Nguyen Quang Vinh

Why do we need a diagnostic test?
- We need "information" to make a decision.
- "Information" is usually the result of a test.
- Medical tests:
  - To screen for a risk factor (screening test)
  - To diagnose a disease (diagnostic test)
  - To estimate a patient's prognosis (prognostic test)
- When, and in whom, should a test be done?
  - When the "information" from the test result has value.

Value of a diagnostic test
The ideal diagnostic test:
- Always gives the right answer:
  - Positive result in everyone with the disease
  - Negative result in everyone else
- Is quick, safe, simple, painless, reliable & inexpensive

But few, if any, tests are ideal. Thus there is a need for clinically useful substitutes.

Is the test useful?
- Reproducibility (precision)
- Accuracy (compared to a "gold standard")
- Feasibility
- Effects on clinical decisions
- Effects on outcomes

Determining Usefulness of a Medical Test

Question 1. How reproducible is the test?
Possible Designs: Studies of intra- and inter-observer & intra- and inter-laboratory variability
Statistics for Results: Proportion agreement, kappa, coefficient of variation, mean & distribution of differences (avoid correlation coefficient)

Question 2. How accurate is the test?
Possible Designs: Cross-sectional, case-control, cohort-type designs in which the test result is compared with a "gold standard"
Statistics for Results: Sensitivity, specificity, PV+, PV-, ROC curves, LRs

Question 3. How often do test results affect clinical decisions?
Possible Designs: Diagnostic yield studies, studies of pre- & post-test clinical decision making
Statistics for Results: Proportion abnormal, proportion with discordant results, proportion of tests leading to changes in clinical decisions; cost per abnormal result or per decision change

Question 4. What are the costs, risks, & acceptability of the test?
Possible Designs: Prospective or retrospective studies
Statistics for Results: Mean cost, proportions experiencing adverse effects, proportions willing to undergo the test

Question 5. Does doing the test improve clinical outcome, or have adverse effects?
Possible Designs: Randomized trials, cohort or case-control studies in which the predictor variable is receiving the test & the outcome includes morbidity, mortality, or costs related either to the disease or to its treatment
Statistics for Results: Risk ratios, odds ratios, hazard ratios, number needed to treat, rates and ratios of desirable and undesirable outcomes

Common Issues for Studies of Medical Tests

Spectrum of Disease Severity and Test Results:
- Difference between sample and population?
- Almost all tests do well on very sick and very well people.
- The greatest difficulty is distinguishing healthy people from those with early, presymptomatic disease.

Subjects should have a spectrum of disease that reflects the clinical use of the test.

Sources of Variation:
- Between patients
- Observers' skill
- Equipment

=> Should sample several different institutions to obtain a generalizable result.

Importance of Blinding (if possible):
- Minimize observer bias
- Example:
Ultrasound to diagnose appendicitis (note that a blinded reading differs from clinical practice)

Studies of Diagnostic Tests
- Studies of Test Reproducibility
- Studies of the Accuracy of Tests
- Studies of the Effect of Test Results on Clinical Decisions
- Studies of Feasibility, Costs, and Risks of Tests
- Studies of the Effect of Testing on Outcomes

Studies of Test Reproducibility
The aim is to test precision:
- Intra-observer variability
- Inter-observer variability

Design:
- Cross-sectional design
- Categorical variables: kappa
- Continuous variables: coefficient of variation

The test is compared to itself (a "gold standard" is not required).

Studies of the Accuracy of Tests
Does the test give the right answer?
"Tests" in clinical practice:
- Symptoms
- Signs
- Laboratory tests
- Imaging tests

To find the right answer, a "gold standard" is required.

How accurate is the test?
Validating tests against a gold standard:
- New tests should be validated by comparison against an established gold standard in appropriate subjects.
- Diagnostic tests are seldom 100% accurate (false positives and false negatives will occur).

A test is valid if:
- It detects most people with the disorder (high sensitivity)
- It excludes most people without the disorder (high specificity)
- A positive test usually indicates that the disorder is present (high PV+)

The best measure of the usefulness of a test is the LR: how much more likely a positive test is to be found in someone with, as opposed to without, the disorder.

A Pitfall of Diagnostic Tests
That a test can separate the very sick from the very healthy does not mean that it will be useful in distinguishing patients with mild cases of the disease from others with similar symptoms.

Sampling
The spectrum of patients should be representative of patients in real practice.
Example: Which is better? What are the limits?
- Chest X-ray to diagnose aortic aneurysm (AA). The sample is 100 patients with and 100 without AA, as ascertained by CT scan or MRI.
- FNA to diagnose thyroid cancer.
  The sample is 100 patients with a nodule > 3 cm and an indication for thyroidectomy (biopsy was the gold standard).

"Gold standard"
The "gold standard" test often confirms the presence or absence of the disease: D(+) or D(-).
Properties of the "gold standard":
- Ruling in the disease (often does well)
- Ruling out the disease (may not do well)
- Feasible & ethical? (e.g., biopsy of a breast mass)
- Widely accepted

The test result
Categorical variable:
- Result: positive or negative
- Ex. FNA cytology

Continuous variable:
- Next step: find the "cut-off point" using the ROC curve
- Ex. most biochemical tests: pro-BNP, TR-Ab, ...

Analysis of Diagnostic Tests
How accurate is the test?
- Sensitivity & specificity
- Likelihood ratio: LR (+), LR (-)
- Posterior probability (post-test probability) / positive and negative predictive values (PPV, NPV), given the prior probability (pre-test probability)

Sensitivity and Specificity

| Test result | Disease D (+) by "gold standard" | Disease D (-) by "gold standard" |
|---|---|---|
| + | a | b |
| - | c | d |

$$\text{Sens} = \frac{a}{a+c} \qquad \text{Spec} = \frac{d}{b+d}$$

Positive & Negative Predictive Value
PV (+): positive predictive value
PV (-): negative predictive value

Using the same 2×2 table:

$$\text{PV}(+) = \frac{a}{a+b} \qquad \text{PV}(-) = \frac{d}{c+d}$$

Likelihood ratio (LR) of a positive result:

$$\text{LR} = \frac{a/(a+c)}{b/(b+d)}$$

Posterior odds
When combined with information on the prior probability of a disease*, LRs can be used to determine the predictive value of a particular test result:

Posterior odds = Prior odds × Likelihood ratio

*Expressing the prior probability [p] of a disease as the prior odds [p/(1-p)] of that disease.
Conversely, if the odds of a disease are x/y, the probability of the disease is x / (x + y).

Choice of a cut-off point for continuous results
Consider the implications of the two possible errors:
- If false-positive results must be avoided (such as the test result being used to determine whether a patient undergoes dangerous surgery), then the cut-off point might be set to maximize the test's specificity.
- If false-negative results must be avoided (as with screening for neonatal phenylketonuria), then the cut-off point should be set to ensure a high test sensitivity.

Using receiver operating characteristic (ROC) curves:
- Select several cut-off points, and determine the sensitivity and specificity at each point.
- Then graph sensitivity (true-positive rate) as a function of 1-specificity (false-positive rate).

Usually, the best cut-off point is where the ROC curve "turns the corner".

Receiver Operating Characteristic (ROC) curve
Ex. SGPT and Hepatitis

| SGPT | D+ | D- | Sum |
|---|---|---|---|
| < 50 | 10 | 190 | 200 |
| 50-99 | 15 | 135 | 150 |
| 100-149 | 25 | 65 | 90 |
| 150-199 | 30 | 30 | 60 |
| 200-249 | 35 | 15 | 50 |
| 250-299 | 120 | 10 | 130 |
| >300 | 65 | 5 | 70 |
| Sum | 300 | 450 | 750 |

[Figure: ROC curve, sensitivity (y-axis, 0 to 1) plotted against 1-specificity (x-axis, 0 to 1)]
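The ROC points for the SGPT example can be worked out directly from the counts in the table. The Python sketch below is illustrative only and is not part of the original slides. It treats "SGPT at or above the lower bound of a band" as a positive test, and the names `BANDS`, `roc_points`, and `post_test_probability` are hypothetical. Choosing the cut-off that maximizes sensitivity minus (1-specificity) (Youden's index) is an assumption used here as one possible way to locate where the curve "turns the corner".

```python
# Counts from the SGPT/hepatitis table: (lower bound of SGPT band, D+, D-).
# The top band is listed as ">300" in the table; 300 is used as its bound here.
BANDS = [
    (0, 10, 190),
    (50, 15, 135),
    (100, 25, 65),
    (150, 30, 30),
    (200, 35, 15),
    (250, 120, 10),
    (300, 65, 5),
]


def roc_points(bands):
    """Return (cutoff, sensitivity, 1 - specificity) for each candidate cut-off.

    A result is called positive when SGPT falls in the band starting at
    `cutoff` or in any higher band.
    """
    total_pos = sum(d_pos for _, d_pos, _ in bands)
    total_neg = sum(d_neg for _, _, d_neg in bands)
    points = []
    for i in range(1, len(bands)):
        cutoff = bands[i][0]
        true_pos = sum(d_pos for _, d_pos, _ in bands[i:])
        false_pos = sum(d_neg for _, _, d_neg in bands[i:])
        points.append((cutoff, true_pos / total_pos, false_pos / total_neg))
    return points


def post_test_probability(pre_test_prob, likelihood_ratio):
    """Posterior odds = prior odds x LR, converted back to a probability."""
    prior_odds = pre_test_prob / (1 - pre_test_prob)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1 + posterior_odds)


POINTS = roc_points(BANDS)

# Assumed heuristic (Youden's index): largest sensitivity - (1 - specificity).
BEST = max(POINTS, key=lambda p: p[1] - p[2])

for cutoff, sens, fpr in POINTS:
    print(f"cut-off >= {cutoff}: sensitivity={sens:.3f}, 1-specificity={fpr:.3f}")
print("cut-off with the largest Youden index:", BEST[0])
```

With these counts, the cut-off at 150 gives a sensitivity of 250/300 and a false-positive rate of 60/450, so LR(+) is 6.25. Feeding the sample prevalence of 300/750 = 0.4 into `post_test_probability` with that LR reproduces the positive predictive value 250/310 computed straight from the table, which is a useful consistency check on the odds formula.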