Restart@Work: Cross-cultural Assessment

advertisement

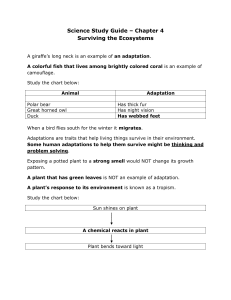

RESTART@WORK: A STRATEGIC PATTERN FOR OUTPLACEMENT
2012-1-IT1-LEO05-02621
KICK-OFF MEETING

Cross-cultural Assessment: General Guidelines for Translating and Adapting Normative Tests
Michelangelo Vianello, Egidio Robusto
FISPPA Department, University of Padua
Padua, November 21, 2012

Contents
• Introduction
  – Translation and adaptation
  – The importance of being adapted
  – Consequences of a failure
  – A taxonomy of assessment devices
• The ITC Guidelines for Test Adaptation (2nd ed.)*
• Remarks

* Hambleton, R. K. (2001). The next generation of the ITC test translation and adaptation guidelines. European Journal of Psychological Assessment, 17(3), 164-172.
Hambleton, Merenda, & Spielberger (2005). Adapting educational and psychological tests for cross-cultural assessment. Hillsdale, NJ: Erlbaum.

Failed translations can be funny…
• Fight fire with fire!
• They should do something with their chickens…
• Or… why customers began choosing a resort with DEEP swimming pools in which they could safely drive and enjoy their holidays!

The importance of being adapted
• Psychological testing for basic research:
  – Origin: scientific curiosity, theoretical gaps, …
  – Scope: generating new knowledge
• Applied psychological testing:
  – Origin: real problems
  – Scope: finding a solution, informing policy makers, taking decisions about individuals
• In applied settings, the consequences of errors are much larger and more harmful. Hence, measures should be as good as possible.
• The term outplacement focuses on employees getting OUT of their organizations, but we should also pay attention to where they get IN.
• In vocational guidance, errors might lead to:
  – Reduced quality of life
  – Low satisfaction
  – High turnover rates
  – High training costs
  – Distress
  – Conflicts
  – Poor performance
  – …
  – MORE OUTPLACEMENT!
Every practitioner guided by high ethical standards should ask for “perfect measures” of any construct of interest.

A taxonomy of assessment devices

| Source of data | Structured | Not structured |
|---|---|---|
| Individual | Questionnaires, tests, structured interviews | Open interviews, focus groups |
| Assessor | Behavioral observation (e.g., assessment center) | Participant observation |
| Third party | Expert assessments (e.g., job performance ratings) | Brainstorming, Delphi |
| Objective indicators | Tangible aspects (layout of an organization), devices for collecting physiological or behavioral data (e.g., EEG, fMRI, IAT) | Audio-video recordings |

The ITC Guidelines for Test Adaptation: General
• G1. Effects of cultural and linguistic differences that are not important to the intended uses of the tests in the populations of interest should be minimized.
  – I.e., “know two languages and you can be a translator”: NOT TRUE!
  – Translators should be able to identify sources of method bias (e.g., experience with psychological tests, motivation, speededness, …).
  – Example: measuring Extraversion by the number of social exchanges within a fixed timeframe (e.g., one day) in populations with high and low social density (e.g., Hong Kong and Iceland); the count reflects opportunities for contact, not only the trait.
• G2. The amount of overlap in the construct measured by the test or instrument in the populations of interest should be assessed.
  – Or: “Is the concept understood in the same way across groups?”
  – E.g., friendship is conceptually different for adolescents and for older people.
  – The overlap needs to be assessed a posteriori through construct validity investigations (e.g., with structural equation modeling techniques and nomological nets).

The ITC Guidelines for Test Adaptation: Translation and Adaptation
• TA1 & 2. Test developers should ensure that the adaptation process takes full account of linguistic, psychological and cultural differences in the intended populations.
  – Or, why Google Translate does not provide a good translation.
  – Translators must know both cultures and have a general knowledge of the subject matter.
  – Use multiple translators.
  – Run a pilot study.
• TA3. Test developers should provide evidence that the test directions, scoring rubrics, test items, any passages or other stimulus materials are suitable for all populations of interest.
  – E.g. (1), emotion valence. Sneddon et al. (2011) showed the same video clip, intended to express sadness, to people from Guatemala and Peru and asked them to rate how positive or negative the emotions felt by the protagonists were. The ratings ran in opposite directions: the more positive the ratings of people from Guatemala, the more negative the ratings of people from Peru!
  – E.g. (2), task motivation. Mesquita et al. (2003) gave American and Japanese respondents either failure or success feedback on a task (e.g., a word association test). After this false feedback, all respondents were given the opportunity to spend more time on the task on which they had been evaluated. Japanese respondents were more motivated to keep working after failure feedback, whereas North Americans were more motivated after success feedback.
• TA4 & 5. Test developers should provide evidence that item content, stimulus materials, item formats, rating scales, etc., are familiar to all intended populations.
  – Speededness, item formats (e.g., multiple choice), etc. are not equally common across cultures. The OECD/PISA tests have been questioned on exactly these aspects, and the “incomplete item stem” format (typically inadvisable) has been suggested because it might preserve equivalence across languages.
  – The effect is strong: people who practice on different forms of IQ tests can raise their scores by more than one SD.
  – Unfamiliar stimuli might be, e.g., dollars, pounds, hamburgers, baseball bats, graffiti, ASCII emoticons such as ;-) or :-O, etc.

The ITC Guidelines for Test Adaptation: Empirical Analysis
• EA1. The sample characteristics (including size and representativeness) and the samples themselves should be chosen to match the intended empirical analyses.
  – Invariance is typically tested through SEM (multi-group confirmatory factor analysis); more than 300 participants per group are needed.
  – IRT models require fewer participants (50 per group might be acceptable).
• EA2. Test developers should provide relevant statistical evidence about the (a) construct, (b) method, and (c) item equivalence of the tests in all intended populations.
  – Important: Differential Item Functioning (DIF).
• EA3. Test developers should provide information on the validity of the adapted version of the test in the intended populations.
  – One of the most common myths is that validity carries over to the adapted version. The generalization of validity cannot be assumed; it must be demonstrated.
  – Item analysis, reliability, and validity studies (content, criterion-related, construct) in relation to the stated purposes are needed.

The ITC Guidelines for Test Adaptation: Administration
• A1. Test developers and administrators should prepare materials and instructions in such a way that culture- and language-related problems due to stimulus materials, administration procedures, and response modes that can moderate the validity of the inferences drawn from the scores are minimized to the extent possible.
  – Evidence is needed to claim equivalence. Do not assume it!
• A2. Those aspects of the testing environment that influence the administration should be made as similar as possible across populations of interest.
  – Environmental factors may affect test performance and reduce validity. Will respondents be honest? Will they maximize their performance in achievement tests?
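EA2 singles out Differential Item Functioning (DIF). A common screening statistic for a dichotomously scored item is the Mantel-Haenszel common odds ratio, which compares the odds of a correct answer in the reference (source-language) and focal (target-language) groups within strata of respondents matched on total score. The sketch below is a minimal illustration under stated assumptions: items are scored 0/1, the total score is the matching variable, and the function name `mantel_haenszel_dif` and the toy data are hypothetical. Logistic-regression or IRT-based DIF methods are equally legitimate alternatives. The sketch also reports the ETS delta-scale value, -2.35 ln(α_MH), where negative values indicate that the item is harder for the focal group at equal total score.

```python
import math
from collections import defaultdict


def mantel_haenszel_dif(item, total, group):
    """Mantel-Haenszel common odds ratio for one dichotomous item (hypothetical helper).

    item  : 0/1 responses to the studied item
    total : matching score for each respondent (e.g., total test score)
    group : "ref" for the reference group; any other label is treated as focal
    Returns (alpha_mh, delta_mh). alpha_mh > 1 means the item favors the
    reference group among respondents with the same total score.
    """
    # One 2x2 table per score stratum: [ref correct, ref wrong, focal correct, focal wrong]
    strata = defaultdict(lambda: [0, 0, 0, 0])
    for y, s, g in zip(item, total, group):
        cell = (0 if y == 1 else 1) + (0 if g == "ref" else 2)
        strata[s][cell] += 1

    num = den = 0.0
    for a, b, c, d in strata.values():
        n = a + b + c + d
        # Strata containing only one group add zero to both sums
        num += a * d / n
        den += b * c / n
    if num == 0 or den == 0:
        raise ValueError("odds ratio undefined for these data")
    alpha = num / den
    delta = -2.35 * math.log(alpha)  # ETS delta metric
    return alpha, delta


# Hypothetical toy data: two score strata (5 and 7), 8 respondents each
item = [1, 1, 1, 0, 1, 0, 0, 0, 1, 1, 0, 0, 1, 1, 0, 0]
total = [5] * 8 + [7] * 8
group = ["ref"] * 4 + ["focal"] * 4 + ["ref"] * 4 + ["focal"] * 4
alpha, delta = mantel_haenszel_dif(item, total, group)
print(round(alpha, 2))  # 2.6: at equal total score, the item favors the reference group
```

A value of α_MH near 1 (delta near 0) suggests negligible DIF; items with large deltas are candidates for review by the adaptation team rather than for automatic removal.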
The ITC Guidelines for Test Adaptation: Score Scales and Interpretation
• SSI1. Score differences among samples of populations administered the test should not be taken at face value. The researcher has the responsibility to substantiate the meaningfulness of the results by fully analyzing the available information.
  – Identify the causes of these differences. They might not reflect differences in the trait; sometimes they are due to stimulus materials, administration procedures, and response modes.
  – Form a committee to interpret the findings.
  – Is there other research that might help?
• SSI2. Comparisons of scores across populations can only be made at the level of invariance that has been established for the scale on which scores are reported.
  – Are scores linked to a common scale? Are scores being interpreted at the level of invariance established in the analysis?
  – A test might exhibit:
    • Configural invariance: the populations have the same number of factors (traits) and the same pattern of item-factor relations.
    • Metric invariance: all factor loadings are equal across samples (i.e., all indicators “inform” the latent factors in the same way); this implies that the measures have the same units (intervals).
    • Scalar invariance: in addition, the item intercepts are equal across populations; only at this level can the factor (trait) means of the populations be meaningfully compared.

The ITC Guidelines for Test Adaptation: Interpretation
• I1. When a test is adapted for use in another population, technical documentation of any changes made should be provided, along with an account of the evidence obtained to support the equivalence of the adapted version of the test.
  – Keep records of the adaptation process!
• I2. When a test is adapted for use in another population, documentation should be provided for test users that will support good practice in the application of the test to people in the context of the new population.
  – Are possible interpretations offered?
  – Are cautions against misinterpretation offered?
  – Are factors that might affect the results discussed?

Steps (Hambleton & Patsula, 1999)
1. Ensure that construct equivalence exists in the language and cultural groups of interest.
2. Decide whether to adapt an existing test or develop a new test.
3. Select well-qualified translators.
4. Translate and adapt the test.
5. Review the adapted version of the test and make necessary revisions.
6. Conduct a small tryout of the adapted version of the test.
7. Carry out a more ambitious field test.
8. Choose a statistical design for connecting scores on the source and target language versions of the test.
9. If cross-cultural comparisons are of interest, ensure equivalence of the language versions of the test.
10. Perform validation research, as appropriate.
11. Document the process and prepare a manual for the users of the adapted tests.
12. Train users.
13. Monitor experiences with the adapted test, and make appropriate revisions.

Remarks
• Cross-cultural research is conceptually easy. The problems are in our assumptions!
• 1. Good translator ≠ good translation
  – Never assume that a good translator (plus a back-translation) guarantees a good translation. Hambleton (1994) illustrates this point with a nice example question: “Where is a bird with webbed feet most likely to live?”
    A. in the mountains
    B. in the woods
    C. in the sea
    D. in the desert
    When the item was translated into Swedish, “webbed feet” was rendered as “swimming feet”, providing a cue to the correct answer.
• 2. Good test in one country ≠ good test in another country
  – The most famous open-access personality test (Goldberg, 1990) turned out to be cross-culturally valid for only one of its five traits and adaptable for three of the five (Nye et al., 2008).
• 3. True in one country ≠ true in another country
  – For instance, what we mean by “achievement motivation” is different for Asian populations (and its well-known relationship with actual success also differs; Elliot et al., 2001).
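Step 8 of the Hambleton and Patsula procedure calls for a statistical design that connects scores on the source- and target-language versions. As one minimal illustration, assume a random-groups design (two randomly equivalent samples, each taking one version) and assume the required level of invariance has already been established (SSI2). Linear equating then maps a target-version score x onto the source scale by matching means and standard deviations: l(x) = μ_S + (σ_S / σ_T)(x - μ_T). The function name `linear_equate` and the scores are hypothetical; other linking designs (e.g., bilingual samples or anchor items) are also possible.

```python
from statistics import mean, pstdev


def linear_equate(target_scores, source_scores):
    """Return a function mapping target-version scores onto the source scale (hypothetical helper).

    Assumes a random-groups design: the two score lists come from
    randomly equivalent samples, each taking one language version.
    """
    mu_t, sd_t = mean(target_scores), pstdev(target_scores)
    mu_s, sd_s = mean(source_scores), pstdev(source_scores)
    if sd_t == 0:
        raise ValueError("target scores have no variance")
    slope = sd_s / sd_t
    # l(x) = mu_s + (sd_s / sd_t) * (x - mu_t): equal z-scores are mapped onto each other
    return lambda x: mu_s + slope * (x - mu_t)


# Hypothetical raw scores on the two versions
source = [10, 12, 14, 16, 18]  # mean 14, population SD sqrt(8)
target = [8, 9, 10, 11, 12]    # mean 10, population SD sqrt(2)
to_source = linear_equate(target, source)
print(to_source(11))  # 16.0: one target SD above the mean maps to one source SD above
```

Linear equating only rescales total scores; it cannot repair items that function differently across versions, which is why DIF screening and invariance testing come first.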