Decision-Analysis


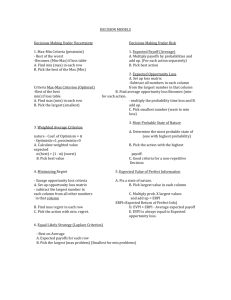

DECISION ANALYSIS

Slide 2 Decision Analysis

• Structuring the Decision Problem / Developing a Decision Strategy
• Decision Making Without Probabilities
• Decision Making with Probabilities
• Expected Value of Perfect Information
• Decision Analysis with Sample Information
• Expected Value of Sample Information

Slide 3 Structuring the Decision Problem

A decision problem is characterized by decision alternatives, states of nature, and resulting payoffs. The decision alternatives are the different possible strategies the decision maker can employ. The states of nature refer to future events, not under the control of the decision maker, which may occur. States of nature should be defined so that they are mutually exclusive and collectively exhaustive. For each decision alternative and state of nature, there is a resulting payoff. These payoffs are often represented in a matrix called a payoff table.

Slide 4 Decision Trees

A decision tree is a chronological representation of the decision problem. Each decision tree has two types of nodes: round nodes correspond to the states of nature, and square nodes correspond to the decision alternatives. The branches leaving round nodes represent the different states of nature, while the branches leaving square nodes represent the different decision alternatives. At the end of each limb of a tree are the payoffs attained from the series of branches making up that limb.

Slide 5 Decision Making Under Uncertainty

If the decision maker does not know with certainty which state of nature will occur, then the decision maker is said to be making decisions under uncertainty. Three commonly used criteria for decision making under uncertainty, when probability information regarding the likelihood of the states of nature is unavailable, are:

• the optimistic approach
• the conservative approach
• the minimax regret approach

Slide 6 The Optimistic Approach

The optimistic approach would be used by an optimistic decision maker.
The decision with the largest possible payoff is chosen. If the payoff table is in terms of costs, the decision with the lowest possible cost is chosen.

Slide 7 The Conservative Approach

The conservative approach would be used by a conservative decision maker. For each decision the minimum payoff is listed, and then the decision corresponding to the maximum of these minimum payoffs is selected. (Hence, the minimum possible payoff is maximized.) If the payoffs are in terms of costs, the maximum cost is determined for each decision, and then the decision corresponding to the minimum of these maximum costs is selected. (Hence, the maximum possible cost is minimized.)

Slide 8 The Minimax Regret Approach

The minimax regret approach requires the construction of a regret table, also called an opportunity loss table. This is done by calculating, for each state of nature, the difference between each payoff and the largest payoff for that state of nature. Then, using this regret table, the maximum regret for each possible decision is listed. The decision chosen is the one corresponding to the minimum of the maximum regrets.

Slide 9 Expected Value Approach

If probabilistic information regarding the states of nature is available, one may use the expected value (EV) approach. Here the expected return for each decision is calculated by summing the products of the payoff under each state of nature and the probability of the respective state of nature occurring. The decision yielding the best expected return is chosen.

Slide 10 Expected Value of Perfect Information - EVPI

Frequently, information is available that can improve the probability estimates for the states of nature. The expected value of perfect information (EVPI) is the increase in the expected profit that would result if one knew with certainty which state of nature would occur. The EVPI provides an upper bound on the expected value of any sample or survey information.
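
As a rough illustration of the criteria on Slides 6 through 9, the following Python sketch applies each one to the payoff table from the example on Slide 18, with the probabilities given on Slide 29. The function names are hypothetical, chosen for this sketch only, and the table is assumed to hold profits rather than costs.

```python
# Payoff table from the Slide 18 example (profits).
# Rows are decisions; columns are states of nature s1, s2, s3.
payoffs = {
    "d1": [4, 4, -2],
    "d2": [0, 3, -1],
    "d3": [1, 5, -3],
}
probs = [0.20, 0.50, 0.30]  # P(s1), P(s2), P(s3) from Slide 29


def optimistic(table):
    """Optimistic approach: decision whose best payoff is largest."""
    return max(table, key=lambda d: max(table[d]))


def conservative(table):
    """Conservative approach: decision whose worst payoff is largest."""
    return max(table, key=lambda d: min(table[d]))


def regret_table(table):
    """Regret = largest payoff for the state minus the payoff received."""
    n_states = len(next(iter(table.values())))
    best = [max(row[j] for row in table.values()) for j in range(n_states)]
    return {d: [best[j] - row[j] for j in range(n_states)]
            for d, row in table.items()}


def minimax_regret(table):
    """Decision whose maximum regret is smallest."""
    regrets = regret_table(table)
    return min(regrets, key=lambda d: max(regrets[d]))


def expected_values(table, p):
    """Expected value of each decision under state probabilities p."""
    return {d: sum(pi * x for pi, x in zip(p, row))
            for d, row in table.items()}


def best_expected_value(table, p):
    """Decision with the largest expected value."""
    ev = expected_values(table, p)
    return max(ev, key=ev.get)
```

For a cost table, the roles of `max` and `min` would be swapped as described on Slides 6 and 7; this sketch does not handle that case.
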
Slide 11 Expected Value of Perfect Information - EVPI

EVPI Calculation

• Step 1: Determine the optimal return corresponding to each state of nature.
• Step 2: Compute the expected value of these optimal returns.
• Step 3: Subtract the EV of the optimal decision from the amount determined in Step 2.

Slide 12 Bayes' Theorem and Posterior Probabilities

Knowledge of sample or survey information can be used to revise the probability estimates for the states of nature. Prior to obtaining this information, the probability estimates for the states of nature are called prior probabilities. With knowledge of conditional probabilities for the outcomes or indicators of the sample or survey information, these prior probabilities can be revised by employing Bayes' Theorem. The outcomes of this analysis are called posterior probabilities.

Slide 13 Posterior Probabilities

Posterior Probabilities Calculation

• Step 1: For each state of nature, multiply the prior probability by its conditional probability for the indicator -- this gives the joint probabilities for the states and indicator.
• Step 2: Sum these joint probabilities over all states -- this gives the marginal probability for the indicator.
• Step 3: For each state, divide its joint probability by the marginal probability for the indicator -- this gives the posterior probability distribution.

Slide 14 Expected Value of Sample Information - EVSI

The expected value of sample information (EVSI) is the additional expected profit possible through knowledge of the sample or survey information.

Slide 15 Expected Value of Sample Information

EVSI Calculation

• Step 1: Determine the optimal decision and its expected return for the possible outcomes of the sample or survey using the posterior probabilities for the states of nature.
• Step 2: Compute the expected value of these optimal returns.
• Step 3: Subtract the EV of the optimal decision obtained without using the sample information from the amount determined in Step 2.
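
The three step-by-step calculations above (EVPI, posterior probabilities, and EVSI) can be sketched in Python as follows. The data are the Burger Prince payoffs, priors, and survey conditionals from Slides 32, 33, and 40; the function names are hypothetical and exist only for this sketch, and payoffs are assumed to be profits.

```python
# Burger Prince data (Slides 32, 33, and 40).
# Columns are states s1 = 80, s2 = 100, s3 = 120 customers per hour.
payoffs = {
    "Model A": [10_000, 15_000, 14_000],
    "Model B": [8_000, 18_000, 12_000],
    "Model C": [6_000, 16_000, 21_000],
}
prior = [0.4, 0.2, 0.4]
p_favorable = [0.2, 0.5, 0.9]                  # P(favorable | state)
p_unfavorable = [1 - c for c in p_favorable]   # P(unfavorable | state)


def best_ev(table, p):
    """Return (decision, expected value) of the best decision under p."""
    evs = {d: sum(pi * x for pi, x in zip(p, row))
           for d, row in table.items()}
    best = max(evs, key=evs.get)
    return best, evs[best]


def evpi(table, p):
    """Slide 11: expected value of the per-state optimal payoffs,
    minus the EV of the optimal decision."""
    ev_with_pi = sum(p[j] * max(row[j] for row in table.values())
                     for j in range(len(p)))
    return ev_with_pi - best_ev(table, p)[1]


def posterior(p, conditional):
    """Slide 13: return (marginal probability of the indicator,
    posterior distribution over the states)."""
    joint = [pi * ci for pi, ci in zip(p, conditional)]
    marginal = sum(joint)
    return marginal, [j / marginal for j in joint]


def evsi(table, p, conditionals):
    """Slide 15: conditionals holds one list of P(indicator | state)
    per indicator outcome."""
    ev_with_sample = 0.0
    for cond in conditionals:
        marginal, post = posterior(p, cond)
        ev_with_sample += marginal * best_ev(table, post)[1]
    return ev_with_sample - best_ev(table, p)[1]


burger_evpi = evpi(payoffs, prior)
burger_evsi = evsi(payoffs, prior, [p_favorable, p_unfavorable])
efficiency = burger_evsi / burger_evpi  # Slide 16: EVSI / EVPI
```

Because this sketch carries unrounded posterior probabilities through the calculation, it gives an EVSI of about $900 and an efficiency of about .45; the $900.88 on Slide 48 comes from using posteriors rounded to three decimals.
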
Slide 16 Efficiency of Sample Information

Efficiency of sample information is the ratio of EVSI to EVPI. As the EVPI provides an upper bound for the EVSI, efficiency is always a number between 0 and 1.

Slide 17 PROBLEMS / EXAMPLES

Slide 18 Example

Consider the following problem with three decision alternatives and three states of nature with the following payoff table representing profits:

| Decision | s1 | s2 | s3 |
|---|---|---|---|
| d1 | 4 | 4 | -2 |
| d2 | 0 | 3 | -1 |
| d3 | 1 | 5 | -3 |

Slide 19 Example

Optimistic Approach

An optimistic decision maker would use the optimistic approach. All we really need to do is to choose the decision that has the largest single value in the payoff table. This largest value is 5, and hence the optimal decision is d3.

| Decision | Maximum Payoff |
|---|---|
| d1 | 4 |
| d2 | 3 |
| d3 | 5 (maximum; choose d3) |

Slide 20 Example

Spreadsheet for Optimistic Approach

PAYOFF TABLE

| Decision Alternative | s1 | s2 | s3 | Maximum Payoff | Recommended Decision |
|---|---|---|---|---|---|
| d1 | 4 | 4 | -2 | 4 | |
| d2 | 0 | 3 | -1 | 3 | |
| d3 | 1 | 5 | -3 | 5 | d3 |
| Best Payoff | | | | 5 | |

Slide 22 Example

Conservative Approach

A conservative decision maker would use the conservative approach. List the minimum payoff for each decision. Choose the decision with the maximum of these minimum payoffs.

| Decision | Minimum Payoff |
|---|---|
| d1 | -2 |
| d2 | -1 (maximum; choose d2) |
| d3 | -3 |

Slide 23 Example

Spreadsheet for Conservative Approach

PAYOFF TABLE

| Decision Alternative | s1 | s2 | s3 | Minimum Payoff | Recommended Decision |
|---|---|---|---|---|---|
| d1 | 4 | 4 | -2 | -2 | |
| d2 | 0 | 3 | -1 | -1 | d2 |
| d3 | 1 | 5 | -3 | -3 | |
| Best Payoff | | | | -1 | |

Slide 25 Example

Minimax Regret Approach

For the minimax regret approach, first compute a regret table by subtracting each payoff in a column from the largest payoff in that column. In this example, in the first column subtract 4, 0, and 1 from 4; in the second column, subtract 4, 3, and 5 from 5; etc. The resulting regret table is:

| Decision | s1 | s2 | s3 |
|---|---|---|---|
| d1 | 0 | 1 | 1 |
| d2 | 4 | 2 | 0 |
| d3 | 3 | 0 | 2 |

Slide 26 Example

Minimax Regret Approach (continued)

For each decision list the maximum regret.
Choose the decision with the minimum of these values.

| Decision | Maximum Regret |
|---|---|
| d1 | 1 (minimum; choose d1) |
| d2 | 4 |
| d3 | 3 |

Slide 27 Example

Spreadsheet for Minimax Regret Approach

PAYOFF TABLE

| Decision Alternative | s1 | s2 | s3 |
|---|---|---|---|
| d1 | 4 | 4 | -2 |
| d2 | 0 | 3 | -1 |
| d3 | 1 | 5 | -3 |

OPPORTUNITY LOSS TABLE

| Decision Alternative | s1 | s2 | s3 | Maximum Regret | Recommended Decision |
|---|---|---|---|---|---|
| d1 | 0 | 1 | 1 | 1 | d1 |
| d2 | 4 | 2 | 0 | 4 | |
| d3 | 3 | 0 | 2 | 3 | |
| Minimax Regret Value | | | | 1 | |

Slide 29 Example

Decision Tree

Using a decision tree to find the optimal decision if P(s1) = .20, P(s2) = .50, P(s3) = .30:

[Decision tree: decision node 1 branches to chance nodes 2, 3, and 4; each chance node branches to s1, s2, and s3, ending in the payoffs below.]

| Decision | Chance node | Payoff under s1 | Payoff under s2 | Payoff under s3 |
|---|---|---|---|---|
| d1 | 2 | 4 | 4 | -2 |
| d2 | 3 | 0 | 3 | -1 |
| d3 | 4 | 1 | 5 | -3 |

Slide 30 Example

Expected Value

To calculate the expected values at nodes 2, 3, and 4, multiply the payoffs by the corresponding probabilities and then sum. The decision with the maximum expected value of 2.2, d1, is then chosen.

• EV(Node 2) = .20(4) + .50(4) + .30(-2) = 2.2
• EV(Node 3) = .20(0) + .50(3) + .30(-1) = 1.2
• EV(Node 4) = .20(1) + .50(5) + .30(-3) = 1.8

Slide 31 Example

Expected Value of Perfect Information

The EVPI is calculated by multiplying the maximum payoff for each state by the corresponding probability, summing these values, and then subtracting the expected value of the optimal decision from this sum. Thus, the EVPI = [.20(4) + .50(5) + .30(-1)] - 2.2 = .8

Slide 32 Example: Burger Prince

Burger Prince Restaurant is contemplating opening a new restaurant on Main Street. It has three different models, each with a different seating capacity. Burger Prince estimates that the average number of customers per hour will be 80, 100, or 120.
The payoff table for the three models is as follows:

Average Number of Customers Per Hour

| Model | s1 = 80 | s2 = 100 | s3 = 120 |
|---|---|---|---|
| Model A | $10,000 | $15,000 | $14,000 |
| Model B | $8,000 | $18,000 | $12,000 |
| Model C | $6,000 | $16,000 | $21,000 |

Slide 33 Example: Burger Prince

Decision Tree

[Decision tree: decision node 1 branches to chance nodes 2, 3, and 4; each chance node branches to s1 (.4), s2 (.2), and s3 (.4), ending in the payoffs below.]

| Decision | Chance node | Payoff under s1 (.4) | Payoff under s2 (.2) | Payoff under s3 (.4) |
|---|---|---|---|---|
| d1 | 2 | 10,000 | 15,000 | 14,000 |
| d2 | 3 | 8,000 | 18,000 | 12,000 |
| d3 | 4 | 6,000 | 16,000 | 21,000 |

Slide 35 Example: Burger Prince

Expected Value For Each Decision

• Node 2 (d1, Model A): EMV = .4(10,000) + .2(15,000) + .4(14,000) = $12,600
• Node 3 (d2, Model B): EMV = .4(8,000) + .2(18,000) + .4(12,000) = $11,600
• Node 4 (d3, Model C): EMV = .4(6,000) + .2(16,000) + .4(21,000) = $14,000

Choose the model with largest EV -- Model C.

Slide 36 Example: Burger Prince

Spreadsheet for Expected Value Approach

PAYOFF TABLE

| Decision Alternative | s1 = 80 | s2 = 100 | s3 = 120 | Expected Value | Recommended Decision |
|---|---|---|---|---|---|
| Model A | 10,000 | 15,000 | 14,000 | 12600 | |
| Model B | 8,000 | 18,000 | 12,000 | 11600 | |
| Model C | 6,000 | 16,000 | 21,000 | 14000 | Model C |
| Probability | 0.4 | 0.2 | 0.4 | | |
| Maximum Expected Value | | | | 14000 | |

Slide 38 Example: Burger Prince

Expected Value of Perfect Information

Calculate the expected value for the optimum payoff for each state of nature and subtract the EV of the optimal decision.

EVPI = [.4(10,000) + .2(18,000) + .4(21,000)] - 14,000 = $2,000

Slide 39 Example: Burger Prince

Spreadsheet for Expected Value of Perfect Information

PAYOFF TABLE

| Decision Alternative | s1 = 80 | s2 = 100 | s3 = 120 | Expected Value | Recommended Decision |
|---|---|---|---|---|---|
| d1 = Model A | 10,000 | 15,000 | 14,000 | 12600 | |
| d2 = Model B | 8,000 | 18,000 | 12,000 | 11600 | |
| d3 = Model C | 6,000 | 16,000 | 21,000 | 14000 | d3 = Model C |
| Probability | 0.4 | 0.2 | 0.4 | | |
| Maximum Expected Value | | | | 14000 | |

| | s1 = 80 | s2 = 100 | s3 = 120 | EVwPI | EVPI |
|---|---|---|---|---|---|
| Maximum Payoff | 10,000 | 18,000 | 21,000 | 16000 | 2000 |

Slide 40 Example: Burger Prince

Sample Information

Burger Prince must decide whether or not to purchase a marketing survey from Stanton Marketing for $1,000.
The results of the survey are "favorable" or "unfavorable". The conditional probabilities are:

• P(favorable | 80 customers per hour) = .2
• P(favorable | 100 customers per hour) = .5
• P(favorable | 120 customers per hour) = .9

Should Burger Prince have the survey performed by Stanton Marketing?

Slide 41 Example: Burger Prince

Posterior Probabilities: Favorable

| State | Prior | Conditional | Joint | Posterior |
|---|---|---|---|---|
| 80 | .4 | .2 | .08 | .148 |
| 100 | .2 | .5 | .10 | .185 |
| 120 | .4 | .9 | .36 | .667 |
| Total | | | .54 | 1.000 |

P(favorable) = .54

Slide 42 Example: Burger Prince

Posterior Probabilities: Unfavorable

| State | Prior | Conditional | Joint | Posterior |
|---|---|---|---|---|
| 80 | .4 | .8 | .32 | .696 |
| 100 | .2 | .5 | .10 | .217 |
| 120 | .4 | .1 | .04 | .087 |
| Total | | | .46 | 1.000 |

P(unfavorable) = .46

Slide 43 Example: Burger Prince

Spreadsheet for Posterior Probabilities

Market Research Favorable

| State of Nature | Prior Probabilities | Conditional Probabilities | Joint Probabilities | Posterior Probabilities |
|---|---|---|---|---|
| s1 = 80 | 0.4 | 0.2 | 0.08 | 0.148 |
| s2 = 100 | 0.2 | 0.5 | 0.10 | 0.185 |
| s3 = 120 | 0.4 | 0.9 | 0.36 | 0.667 |
| P(Favorable) = | | | 0.54 | |

Market Research Unfavorable

| State of Nature | Prior Probabilities | Conditional Probabilities | Joint Probabilities | Posterior Probabilities |
|---|---|---|---|---|
| s1 = 80 | 0.4 | 0.8 | 0.32 | 0.696 |
| s2 = 100 | 0.2 | 0.5 | 0.10 | 0.217 |
| s3 = 120 | 0.4 | 0.1 | 0.04 | 0.087 |
| P(Unfavorable) = | | | 0.46 | |

Slide 45 Example: Burger Prince

Decision Tree (top half)

[Decision tree: from node 1, indicator branch I1 (.54) leads to decision node 2, which branches to chance nodes 4, 5, and 6. Only the s2 (.185) branch labels survive in this transcript; the s1 and s3 branches carry the other favorable posteriors from Slide 41, .148 and .667.]

| Decision | Chance node | Payoff under s1 | Payoff under s2 (.185) | Payoff under s3 |
|---|---|---|---|---|
| d1 | 4 | $10,000 | $15,000 | $14,000 |
| d2 | 5 | $8,000 | $18,000 | $12,000 |
| d3 | 6 | $6,000 | $16,000 | $21,000 |

Slide 46 Example: Burger Prince

Decision Tree (bottom half)

[Decision tree: from node 1, indicator branch I2 (.46) leads to decision node 3, which branches to chance nodes 7, 8, and 9. Only the s2 (.217) branch labels survive in this transcript; the s1 and s3 branches carry the other unfavorable posteriors from Slide 42, .696 and .087.]

| Decision | Chance node | Payoff under s1 | Payoff under s2 (.217) | Payoff under s3 |
|---|---|---|---|---|
| d1 | 7 | $10,000 | $15,000 | $14,000 |
| d2 | 8 | $8,000 | $18,000 | $12,000 |
| d3 | 9 | $6,000 | $16,000 | $21,000 |

Slide 47 Example: Burger Prince

After I1 (.54), at decision node 2:

• Node 4 (d1): EMV = .148(10,000) + .185(15,000) + .667(14,000) = $13,593
• Node 5 (d2): EMV = .148(8,000) + .185(18,000) + .667(12,000) = $12,518
• Node 6 (d3): EMV = .148(6,000) + .185(16,000) + .667(21,000) = $17,855

The value at node 2 is $17,855 (d3).

After I2 (.46), at decision node 3:

• Node 7 (d1): EMV = .696(10,000) + .217(15,000) + .087(14,000) = $11,433
• Node 8 (d2): EMV = .696(8,000) + .217(18,000) + .087(12,000) = $10,518
• Node 9 (d3): EMV = .696(6,000) + .217(16,000) + .087(21,000) = $9,475

The value at node 3 is $11,433 (d1).

Slide 48 Example: Burger Prince

Expected Value of Sample Information

If the outcome of the survey is "favorable", choose Model C. If it is "unfavorable", choose Model A.

EVSI = .54($17,855) + .46($11,433) - $14,000 = $900.88

Since this is less than the $1,000 cost of the survey, the survey should not be purchased.

Slide 49 Example: Burger Prince

Efficiency of Sample Information

The efficiency of the survey: EVSI/EVPI = ($900.88)/($2000) = .4504

Slide 50 The End