PPT16


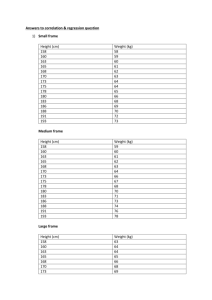

GG 313 Lecture 17, 10/20/05

Chapter 4: Single and Multiple Regression

Line Fit with Errors in Y
Mid-term review

Cruise Plans

Last pre-cruise meeting tomorrow at 1:30 in POST 702 (?). Who needs a ride? I can take three from UH. Departure time is still uncertain, but, unless you hear from me or Garrett again before then, we should all be at the pier by 0730 Monday morning.

Least-squares line fitting with errors in y-values.

In the last chapter we developed a method for determining the least-squares fit to a line from overdetermined data, where we have some degrees of freedom and the number of data values, n, is larger than the number of values to be solved for, m. If we have possible errors in the y-values, we should be able to obtain an estimate of the uncertainty in our best-fit line.

Paul changes his notation: in Ch. 3 he used $y = a_1 + a_2 x$; in Ch. 4 he uses $y = a + bx$, so be careful.

We set up a $\chi^2$ function as follows:

$$\chi^2(a,b) = \sum_{i=1}^{n} \left( \frac{y_i - a - b x_i}{\sigma_i} \right)^2 \qquad (4.1)$$

This equation is similar to equation 3.94, the function E, except that the observed-minus-predicted values are now divided by the uncertainty in each y value. Note that $\sigma_i$ is NOT the standard deviation, but the individual possible error of each $y_i$. The $y_i$ are the observed values and $a + b x_i$ is the straight line being fit to the data.

What we want to do is find the best values of a and b AND find the possible errors in a and b. As before, we want values of a and b that minimize the value of $\chi^2$. Thus, we want to find the values where the slope of $\chi^2$, the first derivative with respect to a and b, equals zero:

$$\frac{\partial \chi^2}{\partial a} = -2 \sum_{i=1}^{n} \frac{y_i - a - b x_i}{\sigma_i^2} = 0, \qquad \frac{\partial \chi^2}{\partial b} = -2 \sum_{i=1}^{n} \frac{x_i \,(y_i - a - b x_i)}{\sigma_i^2} = 0 \qquad (4.2)$$

We now set up several sums corresponding to the different terms in eqn. 4.2, similar to what was done in eqn. 3.100:

$$S = \sum \frac{1}{\sigma_i^2}, \quad S_x = \sum \frac{x_i}{\sigma_i^2}, \quad S_y = \sum \frac{y_i}{\sigma_i^2}, \quad S_{xx} = \sum \frac{x_i^2}{\sigma_i^2}, \quad S_{xy} = \sum \frac{x_i y_i}{\sigma_i^2} \qquad (4.3)$$

We can now re-write eqns. 4.2:

$$a S + b S_x = S_y, \qquad a S_x + b S_{xx} = S_{xy} \qquad (4.4)$$

Solving for a and b:

$$a = \frac{S_y - b S_x}{S}, \qquad b = \frac{S_{xy} - a S_x}{S_{xx}}$$

Substituting the expression for a into the expression for b:

$$b = \frac{S_{xy} - S_x \dfrac{S_y - b S_x}{S}}{S_{xx}} \quad\Rightarrow\quad b = \frac{S S_{xy} - S_x S_y}{S S_{xx} - S_x^2}$$

Similarly,

$$a = \frac{S_y - S_x \dfrac{S_{xy} - a S_x}{S_{xx}}}{S} \quad\Rightarrow\quad a = \frac{S_y S_{xx} - S_{xy} S_x}{S S_{xx} - S_x^2}$$

Letting

$$\Delta = S S_{xx} - S_x^2 \qquad (4.5)$$

we obtain:

$$a = \frac{S_y S_{xx} - S_{xy} S_x}{\Delta}, \qquad b = \frac{S S_{xy} - S_y S_x}{\Delta} \qquad (4.6)$$

This looks different from the equations obtained in chapter 3, where no error in y was considered. If the $\sigma_i$ are constant ($\sigma_i = \sigma$), then (4.2) becomes:

$$\frac{\partial \chi^2}{\partial a} = -\frac{2}{\sigma^2} \sum_{i=1}^{n} (y_i - a - b x_i) = 0 \quad\Rightarrow\quad \sum_{i=1}^{n} (y_i - a - b x_i) = 0$$

$$\frac{\partial \chi^2}{\partial b} = -\frac{2}{\sigma^2} \sum_{i=1}^{n} x_i \,(y_i - a - b x_i) = 0 \quad\Rightarrow\quad \sum_{i=1}^{n} x_i \,(y_i - a - b x_i) = 0$$

These are essentially the same normal equations as (3.96) and (3.97), though we can argue about "what if $\sigma = 0$".

We can now solve quite easily for the best-fit line, but is it a reasonable line? We need a test for whether a and b are realistic values. For example, if the points have large errors or are close together in x, then the line can be very uncertain.

Way back in Chapter 1 (EQN???) we found in the section on propagation of errors that the variance of a function f, $\sigma_f^2$, is given by:

$$\sigma_f^2 = \sum_{i=1}^{n} \sigma_i^2 \left( \frac{\partial f}{\partial y_i} \right)^2 \qquad (4.7)$$

We use our equations for a and b (eqn. 4.6) as f, and obtain:

$$\frac{\partial a}{\partial y_i} = \frac{S_{xx} - S_x x_i}{\sigma_i^2 \Delta}, \qquad \frac{\partial b}{\partial y_i} = \frac{S x_i - S_x}{\sigma_i^2 \Delta} \qquad (4.8)$$

Substituting back into (4.7), and after some simplification, we get:

$$\sigma_a^2 = \sum_{i=1}^{n} \sigma_i^2 \left( \frac{S_{xx} - S_x x_i}{\sigma_i^2 \Delta} \right)^2 = \frac{S_{xx}}{\Delta} \qquad (4.9)$$

And:

$$\sigma_b^2 = \sum_{i=1}^{n} \sigma_i^2 \left( \frac{S x_i - S_x}{\sigma_i^2 \Delta} \right)^2 = \frac{S}{\Delta} \qquad (4.10)$$

We find the covariance and correlation between a and b:

$$\sigma_{ab}^2 = \sum_{i=1}^{n} \sigma_i^2 \, \frac{\partial a}{\partial y_i} \frac{\partial b}{\partial y_i} = -\frac{S_x}{\Delta} \qquad (4.11)$$

$$r = \frac{\sigma_{ab}^2}{\sigma_a \sigma_b} = -\frac{S_x}{\sqrt{S S_{xx}}} \qquad (4.12)$$

The correlation coefficient will be non-zero in most cases, saying that the intercept is correlated with the slope. This is not true if we shift the origin to the mean x value (the weighted mean $S_x / S$ when the $\sigma_i$ differ), in which case $S_x = 0$ and r = 0.

Having calculated values for a and b, we can now go back to eqn. (4.1) and calculate a value for $\chi^2$. We also get a critical value for $\chi^2$ for n-2 degrees of freedom and check to see if our result from (4.1) is smaller or larger. If (4.1) yields the smaller value, then we can say that the fit is significant within the level of confidence.
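The recipe in eqns. 4.1 to 4.12 can be sketched in a few lines of Matlab. This is only a minimal illustration under stated assumptions: the data values, the variable names, and the choice of a 95% confidence level are all made up for the sketch and are not from the lecture. The critical value 7.815 is the tabulated 95% value for 3 degrees of freedom.

```matlab
% Hypothetical data (NOT from the lecture): x, observed y, and the
% individual uncertainty sig of each y value.
x   = [1.0 2.0 3.0 4.0 5.0];
y   = [2.1 3.9 6.2 7.8 10.1];
sig = [0.2 0.1 0.3 0.1 0.2];

w   = 1 ./ sig.^2;              % weights 1/sigma_i^2
S   = sum(w);                   % the five sums of eqn. 4.3
Sx  = sum(w .* x);
Sy  = sum(w .* y);
Sxx = sum(w .* x.^2);
Sxy = sum(w .* x .* y);

Delta = S*Sxx - Sx^2;           % eqn. 4.5
a = (Sy*Sxx - Sxy*Sx) / Delta;  % intercept, eqn. 4.6
b = (S*Sxy  - Sy*Sx ) / Delta;  % slope, eqn. 4.6

sig_a  = sqrt(Sxx / Delta);     % uncertainty in a, eqn. 4.9
sig_b  = sqrt(S   / Delta);     % uncertainty in b, eqn. 4.10
cov_ab = -Sx / Delta;           % covariance, eqn. 4.11
r      = -Sx / sqrt(S*Sxx);     % correlation, eqn. 4.12

% Shifting the origin to the weighted mean of x makes Sx zero, so r = 0.
xc = x - Sx/S;
r_shifted = -sum(w .* xc) / sqrt(S * sum(w .* xc.^2));

chi2 = sum(((y - a - b*x) ./ sig).^2);   % eqn. 4.1 at the best-fit a, b
dof  = numel(x) - 2;                     % n-2 degrees of freedom
chi2_crit = 7.815;                       % tabulated 95% value for dof = 3

fprintf('a = %.4f +/- %.4f\n', a, sig_a);
fprintf('b = %.4f +/- %.4f\n', b, sig_b);
fprintf('r = %.4f, r after shifting origin = %.2e\n', r, r_shifted);
fprintf('chi2 = %.3f, critical value = %.3f (dof = %d)\n', chi2, chi2_crit, dof);
```

If the Statistics Toolbox is installed, `chi2inv(0.95, dof)` should give the critical value for other numbers of degrees of freedom instead of the hard-coded table entry.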
EXAMPLE: (Hypothetical) Data from 5 Hawaiian seamounts are given below:

| Name | Dist. from Kilauea, km | Age, MY |
|---|---|---|
| Laysan | 1,818 | 19.9±0.3 |
| Northampton | 1,841 | 26.6±2.7 |
| Kimmei | 3,668 | 39.9±1.2 |
| Ojin | 4,102 | 55.2±0.7 |
| Suiko | 4,860 | 64.7±1.1 |

Using the method of chapter 3, and Matlab, let's find the best-fit line:

    % column 1: dist from Kilauea, km
    % column 2: age, MY
    % column 3: uncertainty, 1 std dev, MY
    clear all; hold off      % reset parameters (must come before n is set)
    n=5;                     % number of seamounts
    Haw=[1818 19.9 0.3;...
         1841 26.6 2.7;...
         3668 39.9 1.2;...
         4102 55.2 0.7;...
         4860 64.7 1.1];     % data matrix from HVO Black book 1
    XXX=Haw(:,1);            % x values: dist from Kilauea
    YYY=Haw(:,2);            % y values: ages
    Unit=ones(1,n);          % unit row vector for additions
    Sy=Unit*YYY;             % sum of all y values, see page 63 Wessel notes
    Sx=Unit*XXX;             % sum of all x values
    Sxy=Unit*(XXX.*YYY);     % sum of x*y
    Sxx=Unit*(XXX.*XXX);     % sum of x^2
    N=[n Sx;Sx Sxx];         % normal-equation matrix: N*X=B
    B=[Sy;Sxy];              % right side
    X=N\B;                   % X(1)=intercept, X(2)=slope
    % end points of the best-fit line: y = X(1) + X(2)*x
    BestFit=[Haw(1,1) Haw(n,1);...
             X(1)+X(2)*Haw(1,1) X(1)+X(2)*Haw(n,1)];
    plot(BestFit(1,:),BestFit(2,:))
    hold on
    plot(XXX,YYY,'+')

The slope is 0.0133 MY/km, or 75 km/MY, or 7.5 cm/yr.

FOR HOMEWORK: Calculate the best fit to the Hawaiian-Emperor data using the equations we covered in chapter 4, including finding the critical value, covariance, correlation coefficient, the uncertainty in the slope (±1 standard deviation), and whether the results are significant at 95%. We will go over this at sea, so best finish it before you leave. The m-file on the previous pages should give you a good start and provide a check on your results.
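As a quick check on the units quoted for the example slope, the conversion from MY/km to km/MY and cm/yr can be written out explicitly. The variable names below are made up for this sketch; the slope is the rounded value quoted in the example.

```matlab
slope = 0.0133;                  % MY per km, rounded value from the example
rate_km_per_MY = 1 / slope;      % invert to get km per MY

cm_per_km = 1e5;                 % 1 km = 100,000 cm
yr_per_MY = 1e6;                 % 1 MY = 1,000,000 yr
rate_cm_per_yr = rate_km_per_MY * cm_per_km / yr_per_MY;

fprintf('%.1f km/MY = %.2f cm/yr\n', rate_km_per_MY, rate_cm_per_yr);
```

Since 1 km/MY is 0.1 cm/yr, the rate in cm/yr is simply one tenth of the rate in km/MY.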