L6_LinEqnIterative

advertisement

MA2213 Lecture 6

Linear Equations

(Iterative Solvers)

Iteration Methods p. 303-305

Many science and engineering applications

require solutions of large linear systems

A x b,

A R

nn

, n 10,000

Direct methods, e.g. Gaussian elimination,

that produce the exact solution (except for

roundoff error) in a fixed number of steps,

require excessive computation time and

computer memory.

Iteration Methods

Such systems are usually solved by

iterative methods which produce a

sequence of approximations to the

solution.

Often, the matrix A is very sparse

(most entries = 0), and iterative methods

are very efficient for sparse matrices.

Jacobi Method p.304 equivalent

system

1

9 x1 x2 x3 b1 x1 9 [b1 x2 x3 ]

1

x

2 x1 10 x2 3x3 b2

2

10 [b2 2 x1 3x3 ]

3x1 4 x2 11x3 b3 x3 111 [b3 3x1 4 x2 ]

This suggest the Jacobi iteration sequence

( k 1)

1

[b1 x

( k 1)

2

[b2 2 x

3x ]

( k 1)

3

[b3 3x

4x ]

x

x

x

1

9

1

10

1

11

(k )

2

(k )

1

(k )

1

x ]

(k )

3

for

k 0,1,2,...

(k )

3

(k )

2

0

1

0

2

0

3

starting from an initial estimate x , x , x

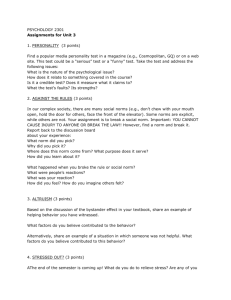

MATLAB Code for Jacobi Method

First pull down the File menu and choose New m-file

or Open an existing m-file, then type and save your

program below – I suggest using the same name as

the name of the function

function x_final = jacobi_special(x_init,b,num_iter)

% function x_final = jacobi_special(x_init,b,num_iter)

%

% bla bla bla

%

x(:,1) = x_init;

for k = 1:num_iter

x(1,k+1) = (1/9)*(b(1)-x(2,k)-x(3,k));

x(2,k+1) = (1/10)*(b(2) - 2*x(1,k) - 3*x(3,k));

x(3,k+1) = (1/11)*(b(3) - 3*x(1,k) - 4*x(2,k));

end

x_final = x;

Always compare your numerical results with results

available either in the textbook or elsewhere.

>> b = [10 19 0]';

>> x_final =

jacobi_special(x_init,b,20)'

x_final =

0

0

0

1.1111 1.9000

0

0.9000 1.6778 -0.9939

1.0351 2.0182 -0.8556

0.9819 1.9496 -1.0162

1.0074 2.0085 -0.9768

0.9965 1.9915 -1.0051

1.0015 2.0022 -0.9960

0.9993 1.9985 -1.0012

1.0003 2.0005 -0.9993

0.9999 1.9997 -1.0003

1.0001 2.0001 -0.9999

1.0000 1.9999 -1.0001

1.0000 2.0000 -1.0000

1.0000 2.0000 -1.0000

1.0000 2.0000 -1.0000

Textbook Results Jacobi Method p. 305

T

T

For b [10 19 0] the solution x [1 2 1]

(0)

(0)

(0)

(0) T

T

If x [ x1 x2 x3 ] [0 0 0]

the Jacobi iteration gives

(k )

1

k

x

0

0

x

(k )

2

0

(k )

3

x

0

Error || x x

2

1 1.1110 1.9000 0.0000 1000

2 0.9000 1.6778 0.9939 .3220

3 1.0351 2.0182 .8556 .1440

4 0.9819 1.9496 1.0162 .0506

5 1.0074

2.0085 0.9768 .0232

(k )

||

Matrix Splitting for Jacobi Method p.306

If

A D L U ,

with D diagonal ; L, U lower, upper triangular, then

1

1

x D (L U )x D b

Bxf

where

B D ( L U ),

-1

1

f D b

Jacobi Method

If certain conditions on the matrix B hold

then the following iteration produces a sequence

x

(k )

to

R , k 0,1,2,3,...

n

that converges

x R that satisfies x Bx f

(0)

n

x R initial ‘guess’

( k 1)

(k )

x

Bx f

n

stop after a specified number of iterations

or when

x

( k 1)

x

(k )

is sufficiently small

Question 1. What is a ‘closed’ formula for

x

(k )

Question 2. When will the sequence converge ?

Gauss-Seidel Method p.305

For the same system of equations

results from using the new (updated)

entries of x as soon as possible

This gives the Gauss-Seidel iteration

( k 1)

1

x

[b1 x

1

9

( k 1)

2

[b2 2 x

( k 1)

3

[b3 3 x

x

x

1

10

1

11

(k )

2

x ]

( k 1)

1

( k 1)

1

(k )

3

for

k 0,1,2,...

3x ]

(k )

3

4x

( k 1)

2

]

0

1

0

2

0

3

starting from an initial estimate x , x , x

Matrix Splitting for Gauss-Seidel p.306

If

A D L U ,

with D diagonal ; L, U lower, upper triangular, then

1

1

x ( D L) Ux ( D L) b

Gxf

where

1

1

G ( D L) U , f ( D L) b

Gauss-Seidel Method

If certain conditions on the matrix G hold

then the following iteration produces a sequence

x

(k )

to

R , k 0,1,2,3,...

n

that converges

x R that satisfies x G x f

(0)

n

x R initial ‘guess’

( k 1)

(k )

x

Gx f

n

stop after a specified number of iterations

or when

x

Question 3. How can

( k 1)

x

x

( k 1)

(k )

is sufficiently small

be computed without

computing the inverse of the matrix D-L ?

Convergence of Iterative Methods

The equation

x Bx f

and the iteration

implies that

therefore

x

x

( k 1)

x

(k )

( k 1)

for the solution

Bx

(k )

x B (x

Question 4. When will the sequence

converge to the solution

of the initial estimate

x

x

(0)

x)

( 0)

x

regardless

?

f

(k )

x B (x

k

x

x)

(k )

Convergence Conditions

Answer to Question 4. Converges always occurs iff

lim k B

(k )

0

Question 5. What does this statement mean ?

Question 6. When does that happen ?

Answer to Question 6. We need some mathematics !

Complex Numbers

Definition The real numbers R are a field under

the operations of addition and multiplication. Review !

Definition The complex numbers are the set

C u iv : u, v R with addition and multiplication

(u iv ) (a ib ) (u a) i (v b)

(u iv )( a ib ) (ua vb) i (ub va)

2

2

|

u

iv

|

u

v

Definition The absolute value

Question 7. Why does | z w | | z | | w |, z , w C ?

Question 8. Why are the complex numbers a field ?

n

C with add. & scalar mult.

n

(u v) j u j v j , (cu) j cu j , u, v C , c C

a vector space with dimension n over the field C ?

Question 9. Why is the set

Characteristic Polynomial of a Matrix

For

by

B C define the function cB : C C

cB ( z) det( zI B), z C

n n

Example b

11

B

b21

where

b12

22 c ( z ) z 2 tr ( B) z det ( B)

C

B

b22

tr( B) b11 b22 is called the trace of B

cB are 12 [tr( B) ],

2

2

where C satisfies (tr( B)) 4 det( B)

and cB ( z ) ( z ) ( z )

Remark c B is always a monic polynomial of degree n

It is called the characteristic polynomial of B

The roots of

Eigenvalues and Spectral Radius

Definition

iff

is an eigenvalue of a matrix

cB ( ) 0

B C

n n

Remark The word eigen is a German word

eigen; eigener; eigene; eigenes {adj}

own

sein eigenes Auto

his own car; car of his own

n n

is

B C

( B) set of eigenvalue s of B

n n

Definition The spectral radius of a matrix B C

( B) max {| | : ( B) }

Definition The spectrum of a matrix

Reference p. 380 Gilbert Strang, Linear Algebra

and its Applications, 3rd Edition, Sauders, 1988.

Eigenvectors and Eigenvspaces

Definition If

is an eigenvalue of a matrix

B C

n n

V { v C : Bv v } is called the

eigenspace of . A nonzero vector in V is called an

n n

(with eigenvalue ).

eigenvector of B C

n

the set

Question Why is

Result If

V

a subspace of

C

n

is an eigenvalue of a matrix

?

B C

n n

then

B with eigenvalue .

Proof ( B) c ( ) det(I B) 0

B

n

v C with v 0 and ( I B) v 0.

there exists an eigenvector of

Examples and Applications

0 1

2

c

(

z

)

det

(

zI

F

)

z

z 1

Example 1 F

F

1 1

( F ) {

V

1 5

2

,

1 5

2

} R

1

v1

: v1 v2 0 span

v

2

Question What is

V ?

1

Remark is called the Golden Ratio since 1

Remark Fibonacci sequence s 0 1 s

n 1

1

n

, s1 s2 1

1,1,2,3,5,8,13,21,34,55,...sn2 1 1 sn1

Examples and Applications

cos

Example 2 T

sin

sin

2

c

(

z

)

z

2 cos z 1

T

cos

(T ) { cos i sin , cos i sin } R

i

(T ) { e , e

1

2

V span R

i

Question What is V ?

i

} by Euler’s Formula.

Convergence Conditions

Answer to Question 6.

lim k B

(k )

0 ( B) 1

Question 7. How can we compute

(B)

?

Answer to Question 7. We will examine that problem in

Week 8.

Question 8. What can we do about convergence now ?

Answer to Question 8. Learn about matrix norms.

Vector Norms – Which One ?

>> help norm

NORM Matrix or vector norm.

For matrices...

NORM(X) is the largest singular value of X, max(svd(X)).

NORM(X,2) is the same as NORM(X).

NORM(X,1) is the 1-norm of X, the largest column sum,

= max(sum(abs(X))).

NORM(X,inf) is the infinity norm of X, the largest row sum,

= max(sum(abs(X'))).

NORM(X,'fro') is the Frobenius norm, sqrt(sum(diag(X'*X))).

NORM(X,P) is available for matrix X only if P is 1, 2, inf or

'fro'.

For vectors...

NORM(V,P) = sum(abs(V).^P)^(1/P).

NORM(V) = norm(V,2).

NORM(V,inf) = max(abs(V)).

NORM(V,-inf) = min(abs(V)).

See also COND, RCOND, CONDEST, NORMEST.

Vector Norms

A vector norm on C n is a function

n

|| || :C [0, )

that satisfies the following three properties:

|| v || 0, v 0 || v || 0,

|| cv || | c | || v || ,

|| u v || || u || || v ||

whenever

u , v C , c C.

n

Remark A vector norm measures the size of a vector.

Examples of Vector Norms

Euclidean Norm

p Norm || v || p

Max Norm

Remark

|| v ||2 (v, v) , v R

n

k 1

| vk |

p

1/ p

n

, v C , p [1, )

n

|| v || max {| vi |: i 1,..., n }, v C

AC

n n

is a vector norm on

nonsingular || v || A, p ||

C

n

for any

n

Av || p

p [1, ]

Remark The textbook considers ONLY the norm || v ||

(see page 298) and they denote this norm by || v ||

Matrix Norms

n

||

||

Given ANY vector norm

on C we can construct

a CORRESPONDING matrix norm on

C

n n

by

|| A || max{ || Av || : || v || 1 }

Theorem 1

Proof. If

|| Av || || A || || v ||,

v0

then ||

AC

nn

, v C

n

Av || || 0 || 0 || A || || v ||

v

v

|| || || v || A

||

If v 0 then || Av || || A || v ||

|| v ||

|| v ||

v

|| || v || || A || || v ||

|| A

|| v ||

Matrix Norms

Theorem 2 If A, B C nn then

(1)

|| A B || || A ||

(2)

|| B ||

| || A || || B || | || A B ||

(3)

|| A B || || A || || B ||

Proofs

|| A B || max || ( A B)v || max || Av || max || Bv |||| A || || B ||

||v||1

||v||1

||v||1

|| A || || A B || || B || , || B || || B A || || A || (2)

|| AB || max || ( AB)v || || A || || B ||

||v||1

Examples of Matrix Norms

Theorem 2

|| A || max { j 1 | aij | }

n

n

i 1

Proof.

|| v || 1 || Av || max { j 1| aij | }

hence

|| A || max

n

n

i 1

Choose

i

{ j 1| aij | }

n

i 1

that maximizes

n

n

j 1

| aij | then construct

u C by u j sgn( aij ), j 1,..., n hence || u || 1

n

and

|| Au || | ( Au)i | | j 1 aiju j |

n

j 1| aij | max { j 1| aij | }

n

n

i 1

n

Application to Jacobi Iteration

3 0 0

D 0 2 0

0 0 5

3 1 1

1

0 2 1 x 2

2 1 5

3

0 0

0

L 0

0 0

2 1 0

0

1

B D (L U ) 0

52

1

3

0

1

5

0 1 1

U 0 0 1

1

0 0 0

3

1

2

2

|| B ||

0

3

Question Will Jacobi iteration converge ?

Matrix Norms and Spectral Radius

Two amazing results, whose full proofs will not be

presented, hold for any matrix

AC

n n

Result 1

( A) glb { || A || : || || is a matrix norm}

Result 2 For EVERY matrix norm

|| ||

( A) lim k ( || A || )

We now prove half of Result 1. If v 0

Av v | | || v || || Av || || A || || v ||

Therefore || A || | | hence || A || ( A)

k

1/ k

Condition Number of a Matrix

Definition The condition number of a nonsingular

matrix

AC

n n

with respect to a matrix norm || || is

>> help cond

COND Condition number with respect

to inversion.

COND(X) returns the 2-norm condition

number (the ratio of the

largest singular value of X to the

smallest). Large condition

numbers indicate a nearly singular

matrix.

1

cond ( A) || A || || A ||

For the norm

the

1

1

COND(X,P) returns the condition

number of X in P-norm:

2

H4 1

3

NORM(X,P) * NORM(INV(X),P).

1

where P = 1, 2, inf, or 'fro'.

4

|| ||

4 4 Hilbert matrix

1

2

1

3

1

4

1

5

1

3

1

4

1

5

1

6

1

4

1

5

1

6

1

7

has

cond ( H )

4

28,375

Error Analysis For Direct Methods

A( x x) b b

where b is the error of the right side

and x is the error of the solution,

1

then A x b x A b

1

hence absolute error || x || || A || || b ||

If

and

A x b || b || || A || || x ||

|| x ||

|| b ||

cond ( A)

hence relative error

|| x ||

|| b ||

Error Analysis For Direct Methods

( A a) ( x x) b

where A is the error of the matrix

and x is the error of the solution,

If

then x ( A A) ( A) x ( I A A) A ( A) x

1

1

1

1

hence absolute error

|| x || || A || || A || (1 || A || || A ||) || x ||

1

1

1

hence relative error

|| x ||

|| A||

cond ( A) 1 cond ( A) || A||

|| x ||

1

whenever || A || || A || 1

1

|| A ||

|| A ||

Successive Over Relaxation

Modify the Gauss-Seidel

x

x

(0)

R

( k 1)

n

initial ‘guess’

L x

(k )

f

L ( D L) (1 ) D U

1

f ( D L) b

where 1 Gauss-Seidel

1

1

1

successive over relaxation

successive under relaxation

Homework Due Lab 3

1. Develop a MATLAB program for piecewise linear

interpolation. Then use it to compute and plot the piecewise linear

interpolants for the functions : (i) sin(x) on the interval [0,2 pi],

(ii) 1/(1 + x*x) on the interval [–5,5], based on N = 4, 8, 16, and 32

nodes. Also plot the error functions (difference between the

function and the interpolant) and discuss the behavior of the error

as a function of N for each function.

2. Develop a MATLAB program for polynomial interpolation. Then

use it (the same as you used the program that you developed in

question 1 above) to study the functions sin(x) and 1/(1+x*x).

Then discuss which interpolation method appears to be better

suited to interpolate each of the two functions.

Your answers should include well documented MATLAB code,

carefully labeled plots, and clear logical discussions.

Review Lectures 1-4 Problems

Question 1. Do problem 13 on page 79 of the textbook.

Question 2. Derive a closed form for the estimate of the

solution of the equation below obtained by applying 2

iterations of Newton’s Method with the initial estimate 0.

x 0.1sin x 0.2

Question 3. Compute a linear combination of functions

| x |, x, 1 that interpolate sin x

at

x 0, 2 , 2 .

Question 4. Compute the quadratic least squares

[

approximation to the function cos x over

2 , 2 ].

Question 5. Use Gram-Schmidt to compute orthonormal

functions from the sequence cos , sin , over [0, ] .

Question 6. Do problem 1 on page 215 of the textbook.