EDGE Architecture - California Lutheran University

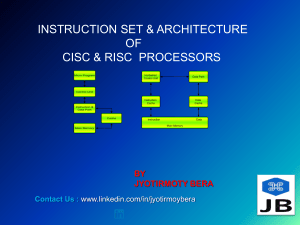

TRIPS – An EDGE Instruction Set Architecture
Chirag Shah
April 24, 2008

What is an Instruction Set Architecture (ISA)?
• Attributes of a computer as seen by a machine language programmer
• Native data types, instructions, registers, addressing modes, memory architecture, interrupt and exception handling, and external I/O
• Native, machine language commands – opcodes
• CISC (’60s and ’70s)
• RISC (’80s, ’90s, and early ’00s)

CISC vs RISC

| CISC (Complex Instruction Set Computer) | RISC (Reduced Instruction Set Computer) |
| --- | --- |
| Emphasis on hardware | Emphasis on software |
| Multi-clock, complex instructions | Single-clock, reduced instructions |
| “LOAD” and “STORE” incorporated in instructions | “LOAD” and “STORE” are independent instructions |
| Small code sizes, high cycles per second | Large code sizes, low cycles per second |
| Transistors used for storing complex instructions | Spends more transistors on memory registers |

Generic Computer
• Data resides in main memory
• Execution unit carries out computations
• Can only operate on data loaded into registers

Multiply Two Numbers
• One number “A” stored in 2:3
• Other number “B” stored in 5:2
• Store product in 2:3

CISC Approach
• Complex instructions built into hardware (Ex. MULT)
• Entire task in one line of assembly
    MULT 2:3, 5:2
• High-level language equivalent
    A = A * B
• Compiler translates the high-level language into assembly
• Smaller program size & fewer calls to memory -> savings on cost of memory and storage

RISC Approach
• Only simple instructions – 4 lines of assembly
    LOAD A, 2:3
    LOAD B, 5:2
    PROD A, B
    STORE 2:3, A
• Fewer transistors of hardware space
• All instructions execute in uniform time (one clock cycle), which enables pipelining

What is Pipelining?
[Figures: before pipelining; after pipelining]

Why do we need a new ISA?
• 20 years of RISC CPU performance gains came from deeper pipelines
• Pipelines suffer from data dependencies
• The penalty is worse for longer pipelines
• Pipeline scaling nearly exhausted
• Need to move beyond a pipeline-centric ISA

Steve Keckler and Doug Burger
• Associate professors - University of Texas at Austin
• 2000 - predicted beginning of the end for conventional microprocessor architectures
• Remarkable leaps in speed over last decade tailing off
• Higher performance -> greater complexity
• Designs consumed too much power and produced too much heat
• Industry at inflection point - old ways have stopped working
• Industry shifting to multicore to buy time, not a long-range solution

EDGE Architecture
• EDGE (Explicit Data Graph Execution)
• Conventional architectures process one instruction at a time; EDGE processes blocks of instructions all at once and more efficiently
• Current multicore technologies increase speed by adding more processors
• Multicore shifts the burden to software programmers, who must rewrite their code
• EDGE technology - alternative approach when race to multicore runs out of steam

EDGE Architecture (contd.)
• Provides richer interface between compiler and microarchitecture: directly expresses dataflow graph that compiler generates
• CISC and RISC require hardware to rediscover data dependences dynamically at runtime
• Therefore CISC and RISC require many power-hungry structures and EDGE does not

TRIPS
• Tera-op Reliable Intelligently Adaptive Processing System – first EDGE processor prototype
• Funded by the Defense Advanced Research Projects Agency - $15.4 million
• Goal of one trillion instructions per second by 2012

Technology Characteristics for Future Architectures
1. New concurrency mechanisms
2. Power-efficient performance
3. On-chip communication-dominated execution
4. Polymorphism – Use its execution and memory units in different ways to run diverse applications

TRIPS – Addresses Four Technology Characteristics
1. Increased concurrency – array of concurrently executing arithmetic logic units (ALUs)
2. Power-efficient performance – spreads out overheads of sequential, von Neumann semantics over 128-instruction blocks
3. Compile-time instruction placement to mitigate communication delays
4. Increased flexibility – dataflow execution model does not presuppose a given application computation pattern

Two Key Features
• Block-atomic execution: Compiler sends executable code to hardware in blocks of 128 instructions. Processor sees and executes a block all at once, as if it were a single instruction; this greatly decreases the overhead associated with instruction handling and scheduling.
• Direct instruction communication: Hardware delivers a producer instruction’s output directly as an input to a consumer instruction, rather than writing it to the register file. Instructions execute in dataflow fashion; each instruction executes as soon as its inputs arrive.

Code Example – Vector Addition
• Add and accumulate for fixed size vectors
• Initial control flow graph
• Loop is unrolled
• Reduces the overhead per loop iteration
• Reduces the number of conditional branches that must be executed
• Compiler produces TRIPS Intermediate Language (TIL) files
• Syntax of (name, target, sources)

Block Dataflow Graph
• Scheduler analyzes each block dataflow graph
• Places instructions within the block
• Produces assembly language files
• Block-level execution, up to 8 blocks concurrently
• TRIPS prototype chip - 130-nm ASIC process; 500 MHz
• Two processing cores; each can issue 16 operations per cycle with up to 1,024 instructions in flight simultaneously
• Current high-performance processors - maximum execution rate of 4 operations per cycle
• 2 MB L2 cache – 32 banks
• Execution node – fully functional ALU and 64 instruction buffers
• Dataflow techniques work well with the three kinds of concurrency found in software – instruction-level, thread-level, and data-level parallelism

Architecture Generations Driven by Technology
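
Worked sketch: CISC vs RISC multiply. The "Multiply Two Numbers" slides can be mimicked with a toy machine in Python. This is only an illustration under assumptions: the memory model (a dictionary keyed by the "row:column" locations from the slides), the sample values 6 and 7, and the function names `run_cisc` and `run_risc` are all hypothetical and not part of the original deck.

```python
# Toy model of the "Multiply Two Numbers" slides.
# Memory locations use the slide's "row:column" labels; values are made up.

def run_cisc(memory):
    """MULT 2:3, 5:2 : one complex instruction loads, multiplies, and stores."""
    memory = dict(memory)  # work on a copy so the caller's memory is untouched
    memory["2:3"] = memory["2:3"] * memory["5:2"]
    return memory, 1  # (final memory, number of instructions issued)

def run_risc(memory):
    """Four simple instructions; arithmetic only touches registers."""
    memory = dict(memory)
    registers = {}
    program = [
        ("LOAD", "A", "2:3"),
        ("LOAD", "B", "5:2"),
        ("PROD", "A", "B"),
        ("STORE", "2:3", "A"),
    ]
    for op, dst, src in program:
        if op == "LOAD":
            registers[dst] = memory[src]
        elif op == "PROD":
            registers[dst] = registers[dst] * registers[src]
        elif op == "STORE":
            memory[dst] = registers[src]
    return memory, len(program)

initial = {"2:3": 6, "5:2": 7}
cisc_mem, cisc_count = run_cisc(initial)
risc_mem, risc_count = run_risc(initial)
print(cisc_mem["2:3"], cisc_count)  # 42 1
print(risc_mem["2:3"], risc_count)  # 42 4
```

Both versions leave the same product in 2:3; the difference is where the work is expressed: one hardware-heavy instruction versus four uniform ones that are easier to pipeline.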
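
Worked sketch: pipelining and data dependencies. The pipelining slides and the "Why do we need a new ISA?" bullets can be made concrete with the usual textbook cycle counts. The stall model below is an assumption made for illustration (each dependent instruction waits `n_stages - 1` cycles, as if there were no forwarding); the function names are hypothetical.

```python
def cycles_unpipelined(n_instructions, n_stages):
    """Each instruction runs all stages before the next one starts."""
    return n_instructions * n_stages

def cycles_pipelined(n_instructions, n_stages, dependent=0):
    """Ideal pipeline: fill once, then finish one instruction per cycle.

    `dependent` is the number of instructions that must wait for their
    producer to leave the pipeline (assumed stall: n_stages - 1 cycles each).
    """
    if n_instructions == 0:
        return 0
    ideal = n_stages + (n_instructions - 1)
    return ideal + dependent * (n_stages - 1)

print(cycles_unpipelined(4, 5))             # 20
print(cycles_pipelined(4, 5))               # 8
print(cycles_pipelined(4, 5, dependent=2))  # 16
```

Under this simple model the stall per dependent instruction grows with the number of stages, which is one way to read the slide's point that data dependencies hurt more as pipelines get deeper.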
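
Worked sketch: loop unrolling. The "Code Example – Vector Addition" slide mentions unrolling an add-and-accumulate loop. The deck's actual source code is not in this transcript, so the sketch below assumes the loop sums `a[i] + b[i]` into one accumulator and uses a hypothetical unroll factor of 4; it counts loop-back branches to show the reduction the slide describes.

```python
def add_accumulate_rolled(a, b):
    """One element per iteration: one loop-back branch per element."""
    total = 0
    branches = 0
    i = 0
    while i < len(a):
        total += a[i] + b[i]
        i += 1
        branches += 1
    return total, branches

def add_accumulate_unrolled(a, b):
    """Four elements per iteration (assumes len(a) is a multiple of 4)."""
    if len(a) % 4 != 0:
        raise ValueError("vector length must be a multiple of 4")
    total = 0
    branches = 0
    i = 0
    while i < len(a):
        total += a[i] + b[i]
        total += a[i + 1] + b[i + 1]
        total += a[i + 2] + b[i + 2]
        total += a[i + 3] + b[i + 3]
        i += 4
        branches += 1
    return total, branches

a = list(range(8))  # 0..7
b = [10] * 8
print(add_accumulate_rolled(a, b))    # (108, 8)
print(add_accumulate_unrolled(a, b))  # (108, 2)
```

The unrolled body also gives a compiler a larger straight-line region to pack into a single block, which fits the block-oriented execution described in the slides.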
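
Worked sketch: dataflow firing within a block. The "Two Key Features" slide says each instruction executes as soon as its inputs arrive, with producers feeding consumers directly. The toy interpreter below illustrates that rule only. The tuple layout borrows the (name, target, sources) shape mentioned on the TIL slide, but it is not real TIL syntax, and `run_block`, `OPS`, and the operand names are hypothetical.

```python
import operator

# Hypothetical operation table for the toy block.
OPS = {"add": operator.add, "mul": operator.mul}

def run_block(instructions, inputs):
    """Fire instructions in dataflow order rather than listing order.

    instructions: list of (name, target, sources) tuples
    inputs: operand values already available when the block starts
    Returns (all values, list of waves); each wave holds the targets
    that became ready at the same step.
    """
    values = dict(inputs)
    pending = list(instructions)
    waves = []
    while pending:
        # An instruction is ready once every source operand has arrived.
        ready = [ins for ins in pending if all(s in values for s in ins[2])]
        if not ready:
            raise ValueError("block cannot make progress: missing operands")
        results = {}
        for name, target, sources in ready:
            results[target] = OPS[name](*(values[s] for s in sources))
        values.update(results)  # deliver outputs to waiting consumers
        waves.append([ins[1] for ins in ready])
        pending = [ins for ins in pending if ins not in ready]
    return values, waves

# The consumer is listed first on purpose: listing order does not matter.
block = [
    ("add", "t3", ("t1", "t2")),
    ("add", "t1", ("a0", "b0")),
    ("add", "t2", ("a1", "b1")),
]
values, waves = run_block(block, {"a0": 1, "b0": 2, "a1": 3, "b1": 4})
print(values["t3"])  # 10
print(waves)         # [['t1', 't2'], ['t3']]
```

The two independent adds fire in the same wave, and the dependent add fires as soon as both results arrive, without any program counter ordering them.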