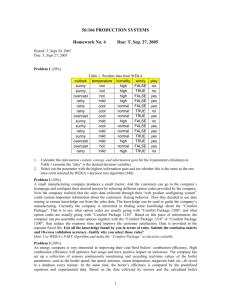

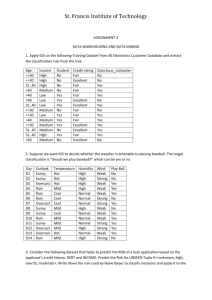

Classification

advertisement

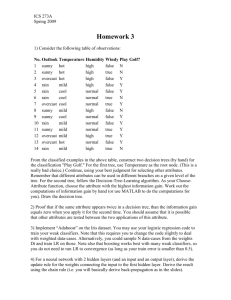

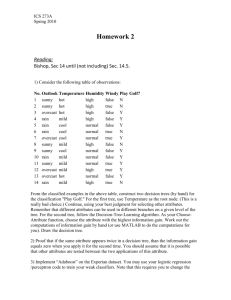

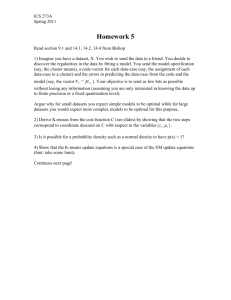

Classification Slide 1 Supervised vs. Unsupervised Learning Supervised learning (classification) • Supervision: The training data (observations, measurements, etc.) are accompanied by labels indicating the class of the observations • New data is classified based on the training set Unsupervised learning (clustering) • The class labels of training data is unknown • Given a set of measurements, observations, etc. with the aim of establishing the existence of classes or clusters in the data Slide 2 Prediction Problems: Classification vs. Numeric Prediction Classification • predicts categorical class labels (discrete or nominal) • classifies data (constructs a model) based on the training set and the values (class labels) in a classifying attribute and uses it in classifying new data Numeric Prediction • models continuous-valued functions, i.e., predicts unknown or missing values Typical applications • Credit/loan approval: • Medical diagnosis: if a tumor is cancerous or benign • Fraud detection: if a transaction is fraudulent • Web page categorization: which category it is Slide 3 Classification—A Two-Step Process Model construction: describing a set of predetermined classes • Each tuple/sample is assumed to belong to a predefined class, as determined by the class label attribute • The set of tuples used for model construction is training set • The model is represented as classification rules, decision trees, or mathematical formula Model usage: for classifying future or unknown objects • Estimate accuracy of the model • The known label of test sample is compared with the classified result from the model • Accuracy rate is the percentage of test set samples that are correctly classified by the model • Test set is independent of training set (otherwise overfitting) • If the accuracy is acceptable, use the model to classify new data Note: If the test set is used to select models, it is called validation (test) set Slide 4 Process (1): Model Construction Training Data NAME M ike M ary B ill Jim D ave Anne RANK YEARS TENURED A ssistan t P ro f 3 no A ssistan t P ro f 7 yes P ro fesso r 2 yes A sso ciate P ro f 7 yes A ssistan t P ro f 6 no A sso ciate P ro f 3 no Classification Algorithms Classifier (Model) IF rank = ‘professor’ OR years > 6 THEN tenured = ‘yes’ Slide 5 5 Process (2): Using the Model in Prediction Classifier Testing Data Unseen Data (Jeff, Professor, 4) NAME Tom M erlisa G eo rg e Jo sep h RANK YEARS TENURED A ssistan t P ro f 2 no A sso ciate P ro f 7 no P ro fesso r 5 yes A ssistan t P ro f 7 yes Tenured? Slide 6 6 Decision Tree Induction: An Example Training data set: Buys_computer The data set follows an example of Quinlan’s ID3 Resulting tree: age? <=30 31..40 overcast student? no no yes yes yes >40 age <=30 <=30 31…40 >40 >40 >40 31…40 <=30 <=30 >40 <=30 31…40 31…40 >40 income student credit_rating buys_computer high no fair no high no excellent no high no fair yes medium no fair yes low yes fair yes low yes excellent no low yes excellent yes medium no fair no low yes fair yes medium yes fair yes medium yes excellent yes medium no excellent yes high yes fair yes medium no excellent no credit rating? excellent fair yes Slide 7 7 Algorithm for Decision Tree Induction Basic algorithm (a greedy algorithm) • Tree is constructed in a top-down recursive divide-andconquer manner • At start, all the training examples are at the root • Attributes are categorical (if continuous-valued, they are discretized in advance) • Examples are partitioned recursively based on selected attributes • Test attributes are selected on the basis of a heuristic or statistical measure (e.g., information gain) Slide 8 8 MBA Application Classification Example Slide 9 Plan Discussion on Classification using Data Mining Tool (It’s suggested to work with your final project teammates.) Slide 10 Decisions on Admissions Download data mining tool—Weka.zip and an admission dataset ABC.csv from Course Page. Work as a team to make decisions on admissions to MBA program at ABC University. Remember to save your file. • Assuming that each record (row) represents a student, use the provided information (GPA, GMAT, Years of work experience) to make admission decisions. • If you decide to admit the student, fill in Yes in Admission column. Otherwise, fill in No to reject the application. Slide 11 Decisions on Admissions (cont.) Before using Weka, write down your criteria judging the applications. Slide 12 Decision Tree A machine learning (data mining) technique. Making decisions. Generating interpretable rules. Able to re-use for future predictions. Automatic and faster response (decision). Slide 13 Using Weka Extract Weka.zip to desktop Run the “RunWeka3-4-6.bat” from Weka folder on your desktop Open the ABC.csv with decisions from Weka • Set filetype to CSV Slide 14 Using Weka (cont.) Click Classify tab Choose J48 Classifier below trees Set the Test options to Use training set Enable Output predictions in More options Click Start to run Slide 15 Using Weka (cont.) Read the classification output report from Classifier output Slide 16 Using Weka (cont.) Use the decision tree generated from the tool for your write-up (from J48 pruned tree) Slide 17 Using Weka (cont.) The generated tree to my example is • GMAT <= 390: No (9.0) • GMAT > 390 • | Exp <= 2 GMAT>390 • | | GMAT <= 450: No (2.0) • | | GMAT > 450: Yes (6.0/1.0) No Yes • | Exp > 2: Yes (13.0) Rejected Exp > 2 No Yes Admitted GMAT>450 No Rejected Yes Admitted Slide 18 Using Weka (cont.) Performance of Decision Tree Incorrect prediction Slide 19 Using Weka (cont.) Accuracy rate for decision tree prediction = (the number of correct predictions) / (total observations) = 29 / 30 = 96.6667% Slide 20 Using Weka (cont.) Share your criteria (results) with other teams. Slide 21 Simplicity first! Simple algorithms often work very well! There are many kinds of simple structure, eg: – – – – – One attribute does all the work Attributes contribute equally and independently A decision tree that tests a few attributes Calculate distance from training instances Result depends on a linear combination of attributes Success of method depends on the domain – Data mining is an experimental science Slide 22 Simplicity first! OneR: One attribute does all the work Learn a 1‐level “decision tree” – i.e., rules that all test one particular attribute Basic version – One branch for each value – Each branch assigns most frequent class – Error rate: proportion of instances that don’t belong to the majority class of their corresponding branch – Choose attribute with smallest error rate Slide 23 Simplicity first! For each attribute, For each value of the attribute, make a rule as follows: count how often each class appears Find the most frequent class Make the rule assign that class to this attribute-value Calculate the error rate of this attribute’s rules Choose the attribute with the smallest error rate Slide 24 Simplicity first! Outlook Temp Humidity Wind Play Sunny Hot High False No Sunny Hot High True No Overcast Hot High False Rainy Mild High False Rainy Cool Normal False Yes Rainy Cool Normal True No Overcast Cool Normal True Yes Sunny Mild High False No Sunny Cool Normal False Yes Rainy Mild Normal False Yes Sunny Mild Normal True Yes Overcast Mild High True Yes Overcast Hot Normal False Yes Rainy Mild High True No Attribute Rules Errors Sunny No 2/5 Yes Overcast Yes 0/4 Yes Rainy Yes 2/5 Hot No* 2/4 Mild Yes 2/6 Cool Yes 1/4 High No 3/7 Normal Yes 1/7 False Yes 2/8 True No* 3/6 Outlook Temp Humidity Wind Total errors 4/14 5/14 4/14 5/14 * indicates a tie Slide 25 Simplicity first! Use OneR Open file weather.nominal.arff Choose OneR rule learner (rules>OneR) Look at the rule Slide 26 Simplicity first! OneR: One attribute does all the work Incredibly simple method, described in 1993 “Very Simple Classification Rules Perform Well on Most Commonly Used Datasets” – Experimental evaluation on 16 datasets – Used cross‐validation – Simple rules often outperformed far more complex methods How can it work so well? – some datasets really are simple – some are so small/noisy/complex that nothing can be learned from them! Rob Holte, Alberta, Canada Slide 27 Overfitting Any machine learning method may “overfit” the training data … … by producing a classifier that fits the training data too tightly Works well on training data but not on independent test data Remember the “User classifier”? Imagine tediously putting a tiny circle around every single training data point Overfitting is a general problem … we illustrate it with OneR Slide 28 Overfitting Numeric attributes Outlook Temp Humidity Wind Play Sunny 85 85 False No Sunny 80 90 True No Overcast 83 86 False Yes Rainy 75 80 False Yes … … … … … Attribute Temp Rules Errors Total errors 85 No 0/1 0/14 80 Yes 0/1 83 Yes 0/1 75 No 0/1 … … OneR has a parameter that limits the complexity of such rules Slide 29 Overfitting Experiment with OneR Open file weather.numeric.arff Choose OneR rule learner (rules>OneR) Resulting rule is based on outlook attribute, so remove outlook Rule is based on humidity attribute humidity: < 82.5 ‐> yes >= 82.5 ‐> no (10/14 instances correct) Slide 30 Overfitting Experiment with diabetes dataset Open file diabetes.arff Choose ZeroR rule learner (rules>ZeroR) Use cross‐validation: 65.1% Choose OneR rule learner (rules>OneR) Use cross‐validation: 72.1% Look at the rule (plas = plasma glucose concentration) Change minBucketSize parameter to 1: 54.9% Evaluate on training set: 86.6% Look at rule again Slide 31 Overfitting Overfitting is a general phenomenon that damages all ML methods One reason why you must never evaluate on the training set Overfitting can occur more generally E.g try many ML methods, choose the best for your data – you cannot expect to get the same performance on new test data Divide data into training, test, validation sets? Slide 32 Using probabilities (OneR: One attribute does all the work) Opposite strategy: use all the attributes “Naïve Bayes” method Two assumptions: Attributes are – – equally important a priori statistically independent (given the class value) i.e., knowing the value of one attribute says nothing about the value of another (if the class is known) Independence assumption is never correct! But … often works well in practice Slide 33 Using probabilities Probability of event H given evidence E Pr[H | E] class Pr[E | H ]Pr[H ] Pr[E] instance Pr[ H ] is a priori probability of H – Probability of event before evidence is seen Pr[ H | E ] is a posteriori probability of H – Probability of event after evidence is seen “Naïve” assumption: – Evidence splits into parts that are independent Pr[ H | E ] 22 Pr[ E1 | H ] Pr[ E 2 | H ]... Pr[ E n | H ] Pr[ H ] Pr[ E ] Thomas Bayes, British mathematician, 1702 –1761 Slide 34 Using probabilities Outlook Temperature Yes Humidity Yes No No Sunny 2 3 Hot 2 2 Overcast 4 0 Mild 4 2 Rainy 3 2 Cool 3 1 Sunny 2/9 3/5 Hot 2/9 2/5 Overcast 4/9 0/5 Mild 4/9 2/5 Rainy 3/9 2/5 Cool 3/9 1/5 Wind Yes No High 3 4 Normal 6 High Normal Play Yes No Yes False 6 2 9 1 True 3 3 3/9 4/5 False 6/9 2/5 6/9 1/5 True 3/9 3/5 9/14 No 5 5/14 Outlook Pr[ H | E ] Pr[ E1 | H ] Pr[ E 2 | H ]... Pr[ E n | H ] Pr[ H ] Pr[ E ] Temp Humidity Wind Play Sunny Hot High False No Sunny Hot High True No Overcast Hot High False Yes Rainy Mild High False Yes Rainy Cool Normal False Yes Rainy Cool Normal True No Overcast Cool Normal True Yes Sunny Mild High False No Sunny Cool Normal False Yes Rainy Mild Normal False Yes Sunny Mild Normal True Yes Overcast Mild High True Yes Overcast Hot Normal False Yes Rainy Mild High True No Slide 35 Using probabilities Outlook Temperature Yes Humidity Yes No No Sunny 2 3 Hot 2 2 Overcast 4 0 Mild 4 2 Rainy 3 2 Cool 3 1 Sunny 2/9 3/5 Hot 2/9 2/5 Overcast 4/9 0/5 Mild 4/9 2/5 Rainy 3/9 2/5 Cool 3/9 1/5 Wind Yes No High 3 4 Normal 6 High Normal Play Yes No Yes False 6 2 9 1 True 3 3 3/9 4/5 False 6/9 2/5 6/9 1/5 True 3/9 3/5 A new day: Outlook Temp. Sunny Cool 9/14 No 5 5/14 Humidity High Wind True Play ? Likelihood of the two classes For “yes” = 2/9 3/9 3/9 3/9 9/14 = 0.0053 Pr[ E1 | H ] Pr[ E 2 | H ]... Pr[ E n | H ] Pr[ H ] Pr[ H | E ] Pr[ E ] For “no” = 3/5 1/ 4/5 3/5 5/14 = 0.0206 Conversion into a probability by normalization: P(“yes”) = 0.0053 / (0.0053 + 0.0206) = 0.205 P(“no”) = 0.0206 / (0.0053 + 0.0206) = 0.795 Slide 36 Using probabilities Outlook Temp. Sunny Cool Humidity High Wind True Play ? Evidence E Pr[ yes | E] Pr[Outlook Sunny | yes] Pr[Temperature Cool | yes] Pr[Humidity High | yes] Probability of Pr[Windy True | yes] class “yes” Pr[ yes] Pr[E] 93 93 93 149 Pr[E] 2 9 Slide 37 Using probabilities Use Naïve Bayes Open file weather.nominal.arff Choose Naïve Bayes method (bayes>NaiveBayes) Look at the classifier Avoid zero frequencies: start all counts at 1 Slide 38 Using probabilities “Naïve Bayes”: all attributes contribute equally and independently Works surprisingly well – even if independence assumption is clearly violated Why? – classification doesn’t need accurate probability estimates so long as the greatest probability is assigned to the correct class Adding redundant attributes causes problems (e.g. identical attributes) attribute selection Slide 39 Decision Trees Top‐down: recursive divide‐and‐conquer Select attribute for root node – Create branch for each possible attribute v alue Split instances into subsets – One for each branch extending from the n ode Repeat recursively for each branch – using only instances that reach the branch Stop – if all instances have the same class Slide 40 Decision Trees Which attribute to select? Slide 41 Decision Trees Which is the best attribute? Aim: to get the smallest tree Heuristic – choose the attribute that produces the “purest” nodes – I.e. the greatest information gain Information theory: measure information in bits entropy( p1 , p 2 ,..., p n ) p1logp1 p 2 logp 2 ... p n logp n Information gain Amount of information gained by knowing the value of the attribute (Entropy of distribution before the split) – (entropy of distribution after it) Claude Shannon,American mathematician and scientist 1916–2001 Slide 42 Decision Trees Which attribute to select? 0.247 bits 0.048 bits 0.152 bits 0.029 bits Slide 43 Decision Trees Continue to split … gain(temperature) = 0.571 bits gain(windy) = 0.020 bits gain(humidity) = 0.971 bits Slide 44 Decision Trees Use J48 on the weather data Open file weather.nominal.arff Choose J48 decision tree learner (trees>J48) Look at the tree Use right‐click menu to visualize the tree Slide 45 Decision Trees J48: “top‐down induction of decision trees” Soundly based in information theory Produces a tree that people can understand Many different criteria for attribute selection – rarely make a large difference Needs further modification to be useful in practice Slide 46 Pruning Decision Trees Slide 47 Pruning Decision Trees Highly branching attributes — Extreme case: ID code Slide 48 Pruning Decision Trees How to prune? Don’t continue splitting if the nodes get very small (J48 minNumObj parameter, default value 2) Build full tree and then work back from the leaves, applying a statistical test at each stage (confidenceFactor parameter, default value 0.25) Sometimes it’s good to prune an interior node, raising the subtree beneath it up one level (subtreeRaising, default true) Messy … complicated … not particularly illuminating Slide 49 Pruning Decision Trees Over‐fitting (again!) Sometimes simplifying a decision tree gives better results Open file diabetes.arff Choose J48 decision tree learner (trees>J48) 73.8% accuracy, tree has 20 leaves, 39 nodes Prunes by default: 72.7% 22 leaves, 43 nodes Turn off pruning: Extreme example: breast‐cancer.arff Default (pruned): Unpruned: 75.5% accuracy, tree has 69.6% 4 leaves, 6 nodes 152 leaves, 179 nodes Slide 50 Pruning Decision Trees C4.5/J48 is a popular early machine learning method Many different pruning methods – mainly change the size of the pruned tree Pruning is a general technique that can apply to structures other than trees (e.g. decision rules) Univariate vs. multivariate decision trees – Single vs. compound tests at the nodes From C4.5 to J48 Ross Quinlan, Australian computer scientist Slide 51 Nearest neighbor “Rote learning”: simplest form of learning To classify a new instance, search training set for one that’s “most like” it – – the instances themselves represent the “knowledge” lazy learning: do nothing until you have to make predictions “Instance‐based” learning = “nearest‐neighbor” learning Slide 52 Nearest neighbor Slide 53 Nearest neighbor Search training set for one that’s “most like” it Need a similarity function – – – – Regular (“Euclidean”) distance? (sum of squares of differences) Manhattan (“city‐block”) distance? (sum of absolute differences) Nominal attributes? Distance = 1 if different, 0 if same Normalize the attributes to lie between 0 and 1? Slide 54 Nearest neighbor What about noisy instances? Nearest‐neighbor k‐nearest‐neighbors – choose majority class among several neighbors (k of them) In Weka, lazy>IBk (instance‐based learning) Slide 55 Nearest neighbor Investigate effect of changing k Glass dataset lazy > IBk, k = 1, 5, 20 10‐fold cross‐validation k=1 70.6% k=5 67.8% k = 20 65.4% Slide 56 Nearest neighbor Often very accurate … but slow: – – scan entire training data to make each prediction? sophisticated data structures can make this faster Assumes all attributes equally important – Remedy: attribute selection or weights Remedies against noisy instances: – – – Majority vote over the k nearest neighbors Weight instances according to prediction accuracy Identify reliable “prototypes” for each class Statisticians have used k‐NN since 1950s – If training set size n and k and k/n 0, error approaches minimum Slide 57