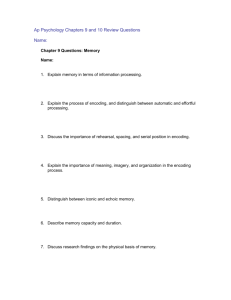

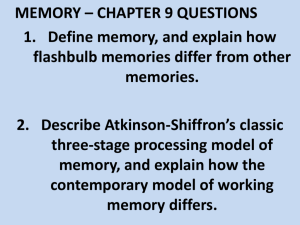

State Assignment


The problem:

Assign a unique code to each state to produce a minimal binary logic level

implementation.

Given:

1. |S| states,

2. at least ⌈log2 |S|⌉ state bits (minimum width encoding),

3. at most |S| state bits (one-hot encoding)

estimate impact of encoding on the size and delay of the optimized

implementation.

Most known techniques are directed towards reducing the size. It is difficult to estimate delay before optimization.

There are $\binom{2^n}{|S|}\,|S|!$ possible state assignments for $2^n$ codes ($n \ge \log_2 |S|$), since there are $\binom{2^n}{|S|}$ ways to select |S| distinct state codes and |S|! ways to permute them among the states.
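To get a feel for how fast this count grows, the following is a minimal sketch that evaluates C(2^n, |S|) · |S|! for a few code widths. The function name `count_state_assignments` is an illustrative choice, not something from the slides.

```python
from math import comb, factorial

def count_state_assignments(num_states: int, num_bits: int) -> int:
    """Ways to give num_states states distinct codes of num_bits bits each."""
    total_codes = 2 ** num_bits
    if num_states > total_codes:
        return 0  # not enough distinct codes for the states
    # choose which codes are used, then permute them among the states
    return comb(total_codes, num_states) * factorial(num_states)

for n in (2, 3, 4):
    print(n, count_state_assignments(4, n))
```

Even for 4 states the count rises from 24 at the minimum width to tens of thousands at 4 bits, which is why exhaustive search over encodings is not practical.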


State Assignment

Techniques are in two categories:

Heuristic techniques try to capture some aspect of the process of

optimizing the logic, e.g. common cube extraction.

Example:

1. Construct a weighted graph of the states. Weights express

the gain in keeping the codes of the pair of states ‘close’.

2. Assign codes that minimize a proximity function (graph

embedding step)

Exact techniques model precisely the process of optimizing the

resulting logic as an encoding problem.

Example:

1. Perform an appropriate multi-valued logic minimization

(optimization step)

2. Extract a set of encoding constraints, that put conditions on

the codes

3. Assign codes that satisfy the encoding constraints


SA as an Encoding Problem

State assignment is a difficult problem: transform ‘optimally’

• a cover of multi-valued (symbolic) logic functions into

• an equivalent cover of two-valued logic functions.

Various applications dictate various definitions of optimality.

Encoding problems are classified as

1. input (symbolic variables appear as input)

2. output (symbolic variables appear as output)

3. input-output (symbolic variables appear as both input and

output)

State assignment is the most difficult case of input-output

encoding where the state variable is both an input and

output.


Encoding Problems: status

| | Input encoding | Output encoding | Input-output encoding |
|---|---|---|---|
| two-level | well understood theory; efficient algorithms; many applications | well understood theory; no efficient algorithm; few applications | well understood theory; no efficient algorithm; few applications |
| multi-level | basic theory developed; algorithms not yet mature; few applications | no theory; heuristic algorithms; few applications | no theory; heuristic algorithms; few applications |

Input Encoding for Two-Level Implementations

Reference: [De Micheli, Brayton, Sangiovanni 1985]

To solve the input encoding problem for minimum

product term count:

1. represent the function as a multi-valued function

2. apply multi-valued minimization (Espresso-MV)

3. extract, from the minimized multi-valued cover, input

encoding constraints

4. obtain codes (of minimum length) that satisfy all input

encoding constraints


Input Encoding

Example: single primary output of an FSM

3 inputs: state variable, s, and two binary variables, c1 and c2

4 states: s0, s1, s2, s3

1 output: y

y is 1 when any of the following conditions holds:

(state = s0) and c2 = 1

((state = s0) or (state = s2)) and c1 = 0

(state = s1) and c2 = 0 and c1 = 1

((state = s3) or (state = s2)) and c1 = 1

Pictorially, for output y = 1 (figure not reproduced): values along the state axis are not ordered.

Input Encoding

Function representation

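• Symbolic variables are represented by multiple-valued (MV) variables $X_i$ restricted to $P_i = \{0, 1, \dots, n_i - 1\}$.

• The output is a binary-valued function $f$ of the variables $X_i$:

$f : P_1 \times P_2 \times \dots \times P_n \to B$

• Let $S_i \subseteq P_i$. The literal $X_i^{S_i}$ is defined as:

$X_i^{S_i} = 1$ if $X_i \in S_i$, and $0$ otherwise.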

Example: y = X {0}c2+ X {0,2}c1’+ X {1}c1c2’ + X {2,3}c1 (only 1 MV variable)

The cover of the function can also be written in positional form:

-1 1000 1

0- 1010 1

10 0100 1

1- 0011 1


Input Encoding

y = X {0}c2+ X {0,2}c1’+ X {1}c1c2’ + X {2,3}c1

Minimize using classical two-level MV-minimization (Espresso-MV).

Minimized result:

y = X {0,2,3} c1 c2+ X {0,2}c1’+ X {1,2,3}c1c2’
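The minimized cover can be checked against the original by brute force, since the example has only 4 states and 2 binary inputs. The sketch below is only a sanity check, not Espresso-MV. The tuple representation of a term (set of states, required c1, required c2) is an assumption made for illustration.

```python
from itertools import product

# Each term: (states in the MV literal, required c1, required c2); None = don't care.
ORIGINAL = [({0}, None, 1), ({0, 2}, 0, None), ({1}, 1, 0), ({2, 3}, 1, None)]
MINIMIZED = [({0, 2, 3}, 1, 1), ({0, 2}, 0, None), ({1, 2, 3}, 1, 0)]

def evaluate(cover, state, c1, c2):
    """True if some term of the cover is asserted for this input point."""
    return any(
        state in states
        and (need1 is None or need1 == c1)
        and (need2 is None or need2 == c2)
        for states, need1, need2 in cover
    )

def covers_equal(a, b):
    """Compare two covers on every (state, c1, c2) combination."""
    return all(
        evaluate(a, s, c1, c2) == evaluate(b, s, c1, c2)
        for s, c1, c2 in product(range(4), (0, 1), (0, 1))
    )

print(covers_equal(ORIGINAL, MINIMIZED))
```

The three-term cover agrees with the four-term one on all 16 input points, so one product term has been saved.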


Input Encoding

Extraction of face constraints:

y = X {0,2,3} c1 c2+ X {0,2}c1’+ X {1,2,3}c1c2’

The face constraints are: (assign codes to keep MV-literals in

single cubes)

(s0, s2, s3)

(s0, s2)

(s1, s2, s3)

• two-level minimization results in the fewest product terms

for any possible encoding

• would like to select an encoding that results in the same

number of product terms

• each MV literal should be embedded in a face (subspace or

cube) in the encoded space of the associated MV-variable.

• unused codes may be used as don’t cares


Input Encoding

Satisfaction of face constraints:

• lower bound can always be achieved (one-hot encoding will do it),

– but this results in many bits (large code width)

• for a fixed code width need to find embedding that will result in

the fewest cubes

Example: A satisfying solution is:

s0 = 001, s1 = 111, s2 = 101, s3 = 100

The face constraints are assigned to the following faces (cubes over x1 x2 x3, where 2 marks a don’t-care position):

(s0, s2, s3) → 202

(s0, s2) → 201

(s1, s2, s3) → 122

Encoded representation:

y = X {0,2,3} c1 c2 + X {0,2} c1’ + X {1,2,3} c1 c2’

→ y = x2’ c1 c2 + x2’ x3 c1’ + x1 c1 c2’

Note: needed 3 bits instead of the minimum 2.

Procedure for Input Encoding

Summary:

A face constraint is satisfied by an encoding if the supercube

of the codes of the symbolic values in the literal does not

contain any code of a symbolic value not in the literal.

Satisfying all the face constraints guarantees that the

encoded minimized binary-valued cover will have size no

greater than the multi-valued cover.

Once an encoding satisfying all face constraints has been

found, a binary-valued encoded cover can be constructed

directly from the multi-valued cover, by

• replacing each multi-valued literal by the supercube

corresponding to that literal.
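The satisfaction test in this summary is easy to state in code. The sketch below computes the supercube of a set of codes and checks that no code outside the constraint falls inside it, using the 3-bit encoding from the example. The helper names (`supercube`, `cube_contains`, `satisfies`) are illustrative, and cubes are written with `-` for a free position.

```python
def supercube(codes):
    """Smallest cube containing all codes; '-' marks a free bit position."""
    return "".join(col[0] if len(set(col)) == 1 else "-" for col in zip(*codes))

def cube_contains(cube, code):
    """True if the code lies inside the cube."""
    return all(c == "-" or c == b for c, b in zip(cube, code))

def satisfies(encoding, constraint):
    """Face constraint holds if no symbol outside it has a code in its supercube."""
    face = supercube([encoding[s] for s in constraint])
    return not any(
        cube_contains(face, code)
        for sym, code in encoding.items()
        if sym not in constraint
    )

encoding = {"s0": "001", "s1": "111", "s2": "101", "s3": "100"}
constraints = [("s0", "s2", "s3"), ("s0", "s2"), ("s1", "s2", "s3")]

for c in constraints:
    print(c, supercube([encoding[s] for s in c]), satisfies(encoding, c))
```

The supercube of each constraint is exactly the cube that replaces the corresponding multi-valued literal in the encoded cover: `-0-` becomes x2’, `-01` becomes x2’ x3, and `1--` becomes x1.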

Satisfaction of Input Constraints

Any set of face constraints can be satisfied by one-hot

encoding the symbols. But code length is too long …

Finding a minimum length code that satisfies a given set

of constraints is NP-hard

[Saldanha, Villa, Brayton, Sangiovanni 1991].

Many approaches have been proposed. The above reference describes the best algorithm currently known, based on the concept of encoding dichotomies [Tracey 1965, Ciesielski 1989].

Satisfying Input Constraints

Ordered Dichotomy:

• An ordered dichotomy is a 2-block partition of a subset of symbols. Example: (s0 s1;s2 s3)

– Left block is s0,s1 - associated with 0 bit

– Right block is s2 s3 - associated with 1 bit

• A dichotomy is complete if each symbol of the

entire symbol set appears in one block

– (s0;s1 s2) is not complete but (s0 s1;s2 s3) is

complete

• Two dichotomies d1 and d2 are compatible if

1. the left block of d1 is disjoint from the right

block of d2, and

2. the right block of d1 is disjoint from the left

block of d2.


Satisfying Input Constraints

• The union of two compatible dichotomies, d1 and

d2, is (a;b) where

1. a is the union of the left blocks of d1 and d2

and

2. b is the union of the right blocks of d1 and d2.

• The set of all compatibles of a set of dichotomies is defined recursively:

– an initial dichotomy is a compatible

– the union of two compatibles is a compatible

Example: initial

(0 1;2) (1;4) (3;2) (2;4)

generated: (0 1;2 4) (0 1 3;2) (1 3;2 4) (1 2;4)

(0 1 3;2 4)
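The closure described here can be sketched directly from the definitions of compatibility and union. The code below is a naive fixed-point iteration, assuming a dichotomy is stored as a pair of frozensets (left block, right block). The helper names are illustrative and it is not meant as an efficient generator.

```python
from itertools import combinations

def dich(left, right):
    """Ordered dichotomy as (left block, right block)."""
    return (frozenset(left), frozenset(right))

def compatible(d1, d2):
    """Left of each must be disjoint from right of the other."""
    return not (d1[0] & d2[1]) and not (d1[1] & d2[0])

def union(d1, d2):
    return (d1[0] | d2[0], d1[1] | d2[1])

def all_compatibles(initial):
    """Close the initial set under union of compatible pairs."""
    found = set(initial)
    changed = True
    while changed:
        changed = False
        for d1, d2 in combinations(list(found), 2):
            if compatible(d1, d2):
                u = union(d1, d2)
                if u not in found:
                    found.add(u)
                    changed = True
    return found

initial = [dich("01", "2"), dich("1", "4"), dich("3", "2"), dich("2", "4")]
generated = all_compatibles(initial) - set(initial)
for left, right in sorted(generated, key=lambda d: (sorted(d[0]), sorted(d[1]))):
    print(" ".join(sorted(left)), ";", " ".join(sorted(right)))
```

On the example this produces the same five generated compatibles listed above. The pairs (0 1;2) with (2;4) and (3;2) with (2;4) are rejected because symbol 2 would land in both blocks.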


Dichotomies

• Dichotomy d1 covers d2 if

1.the left block of d1 contains the left block of d2, and

2.the right block of d1 contains the right block of d2

(s0;s1 s2) is covered by (s0 s3;s1 s2 s4)

• A dichotomy is a prime compatible of a given set if it is

not covered by any other compatible of the set.

• A set of complete dichotomies generates an encoding:

Each dichotomy generates a bit of the encoding

– The left block symbols are 0 in that bit

– The right block symbols are 1 in that bit

Example: (s0 s1;s2 s3) and (s0 s3;s1 s2) ==>

s0=00, s1=01, s2=11, s3=10
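As a small illustration of the last two definitions, the sketch below checks the covering relation and reads an encoding off a list of complete dichotomies, one bit per dichotomy. The function names and the use of plain Python sets for blocks are assumptions made for the example.

```python
def covers(d1, d2):
    """d1 covers d2 if each block of d1 contains the matching block of d2."""
    return set(d1[0]) >= set(d2[0]) and set(d1[1]) >= set(d2[1])

def encoding_from_dichotomies(dichotomies, symbols):
    """Each complete dichotomy contributes one bit: left block 0, right block 1."""
    codes = {s: "" for s in symbols}
    for left, right in dichotomies:
        left, right = set(left), set(right)
        if left & right or (left | right) != set(symbols):
            raise ValueError("dichotomy is not complete over the symbol set")
        for s in symbols:
            codes[s] += "0" if s in left else "1"
    return codes

symbols = ["s0", "s1", "s2", "s3"]
dichotomies = [(["s0", "s1"], ["s2", "s3"]), (["s0", "s3"], ["s1", "s2"])]
print(encoding_from_dichotomies(dichotomies, symbols))
print(covers((["s0", "s3"], ["s1", "s2", "s4"]), (["s0"], ["s1", "s2"])))
```

The two dichotomies from the example give the codes s0=00, s1=01, s2=11, s3=10, and the covering check reproduces the (s0;s1 s2) example.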


Input constraint satisfaction

Initial dichotomies:

• Each face constraint with k symbols generates 2(n-k) initial dichotomies, where n is the number of symbols (example symbol set: (s0 s1 s2 s3 s4 s5))

– (s1 s2 s4) ==> (s1 s2 s4;s0) (s1 s2 s4;s3) (s1 s2 s4;s5)

(s0;s1 s2 s4) (s3;s1 s2 s4) (s5;s1 s2 s4)

• Also require that each symbol is encoded uniquely

(s0;s1) (s0;s2) … (s0;s5)

(s1;s0) (s1;s2) … (s1;s5)

…

(s5;s0) (s5;s1) … (s5;s4)

giving $n^2 - n$ such additional initial dichotomies

• All initial dichotomies, { d }, must be satisfied by encoding - a

dichotomy, d, is satisfied by a set of encoding dichotomies if there

exists a bit where the symbols in left block of d are 0 and the

symbols in right block of d are 1.

Note: any initial dichotomy d that is covered by another initial dichotomy e can be deleted, since satisfaction of e implies satisfaction of d.
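The generation of initial dichotomies and the deletion of covered ones can be sketched as follows, using the (s1 s2 s4) constraint over six symbols from the example. The function names are hypothetical, and dichotomies are stored as pairs of frozensets (left block, right block).

```python
from itertools import permutations

def face_dichotomies(constraint, symbols):
    """2(n-k) dichotomies: the face against each outside symbol, both orientations."""
    block = frozenset(constraint)
    result = []
    for s in symbols:
        if s not in block:
            result.append((block, frozenset([s])))
            result.append((frozenset([s]), block))
    return result

def uniqueness_dichotomies(symbols):
    """n^2 - n dichotomies (a;b), one per ordered pair of distinct symbols."""
    return [(frozenset([a]), frozenset([b])) for a, b in permutations(symbols, 2)]

def covers(d1, d2):
    return d1[0] >= d2[0] and d1[1] >= d2[1]

def drop_covered(dichotomies):
    """Remove every dichotomy that is covered by a different one in the list."""
    unique = list(dict.fromkeys(dichotomies))
    return [d for d in unique if not any(e != d and covers(e, d) for e in unique)]

symbols = ["s0", "s1", "s2", "s3", "s4", "s5"]
face = face_dichotomies(("s1", "s2", "s4"), symbols)
uniq = uniqueness_dichotomies(symbols)
reduced = drop_covered(face + uniq)
print(len(face), len(uniq), len(reduced))
```

Here 18 of the 30 uniqueness dichotomies are dropped, because any pair with one symbol inside the face and one outside is already covered by a face dichotomy.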

Input constraint satisfaction

Procedure:

1. Given set of face constraints, generate

initial dichotomies.

2. Generate set of prime dichotomies.

3. Form covering matrix

• Rows = initial dichotomies

• Columns = prime dichotomies

• A 1 is in (i, j) if prime j covers dichotomy i.

4. Solve unate covering problem

Efficient generation of prime dichotomies

1. Introduce symbol for each initial dichotomy

2. Form a 2-clause (a+b) for each pair of incompatible dichotomies a and b.

3. Multiply out the product of all 2-clauses into a sum of products (SOP).

4. For each term in the SOP, list the dichotomies not in the term; their union is a prime dichotomy, and the terms together give the set of all prime dichotomies.

Example: initial dichotomies (a b c d e)

ab,ac,bc,cd,de are incompatibles

CNF is (a+b)(a+c)(b+c)(c+d)(d+e) =

abd+acd+ace+bcd+bce

Primes are cUe, bUe, bUd, aUe, aUd

Efficient generation of prime dichotomies

Procedure for multiplying out a 2-CNF expression E

Procedure cs(E)

if E has no sum terms, return 1

x = splitting variable

C = sum terms with x

R = sum terms without x

p = product of all variables in C except x

q = cs(R)

result = single_cube_containment(x q + p q)

Note: the procedure is linear in the number of primes.
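The recursion works because the product of all clauses containing x collapses to (x + p), so E = (x + p)·R = x·q + p·q. Below is a minimal Python sketch of cs applied to the a..e example. It assumes clauses and product terms are represented as frozensets of variable names and picks the alphabetically smallest variable of the first clause as the splitting variable, which is an arbitrary choice.

```python
def single_cube_containment(terms):
    """Drop any product term that strictly contains another term."""
    return {t for t in terms if not any(other < t for other in terms)}

def cs(clauses):
    """Multiply out a product of 2-literal sums into a minimal sum of products."""
    clauses = [frozenset(c) for c in clauses]
    if not clauses:
        return {frozenset()}  # empty product is the constant 1
    x = min(clauses[0])  # splitting variable (arbitrary choice)
    with_x = [c for c in clauses if x in c]
    without_x = [c for c in clauses if x not in c]
    p = frozenset().union(*with_x) - {x}
    q = cs(without_x)
    terms = {t | {x} for t in q} | {t | p for t in q}
    return single_cube_containment(terms)

def prime_groups(initial, clauses):
    """For each SOP term, the dichotomies NOT in the term form one prime."""
    return {frozenset(initial) - term for term in cs(clauses)}

initial = "abcde"
incompatible = ["ab", "ac", "bc", "cd", "de"]
print(sorted("".join(sorted(t)) for t in cs(incompatible)))
print(sorted("U".join(sorted(g)) for g in prime_groups(initial, incompatible)))
```

For the example this yields the terms abd, acd, ace, bcd, bce and the prime groups aUd, aUe, bUd, bUe, cUe, matching the slide.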

Applications of Input Encoding

References:

• PLA decomposition with multi-input decoders

[Sasao 1984, K.C.Chen and Muroga 1988, Yang and

Ciesielski 1989]

• Boolean decomposition in multi-level logic

optimization [Devadas, Wang, Newton, Sangiovanni

1989]

• Communication based logic partitioning [Beardslee

1992, Murgai 1993]

• Approximation to state assignment [De Micheli,

Brayton, Sangiovanni 1985, Villa and Sangiovanni

1989]

Input Encoding: PLA Decomposition

Consider the PLA:

y1 = x1 x3 x4’ x6+ x2 x5 x6’ x7 + x1 x4 x7’ + x1’ x3’ x4 x7’ +

x3’ x5’ x6’ x7’

y2 = x2’ x4’ x6+ x3 x4’ x6 + x2’ x5 x6’ x7 + x1’ x3’ x4 x7’ +

x1’ x3’ x5’ x6’ x7’ + x2’ x4’ x5’ x7’

Decompose it into a driving PLA fed by inputs {X4, X5, X6, X7 } and a

driven PLA fed by the outputs of the driving PLA and the remaining

inputs {X1, X2, X3 } so as to minimize the area.


Projecting onto the selected inputs there are 5 distinct

product terms:

x4’ x6 , x5 x6’ x7 , x4 x7’ , x5’ x6’ x7’ , x4’ x5’ x7’

Product term pairs (x4 x7’ , x5’ x6’ x7’ ) and ( x5’ x6’ x7’ , x4’ x5’

x7’ ) are not disjoint.


To re-encode, make disjoint all product terms involving the selected inputs:

y1 = x1 x3 x4’ x6 + x2 x5 x6’ x7 + x1 x4 x7’ + x1’ x3’ x4 x7’ + x3’ x4’ x5’ x6’ x7’ (using reduce)

y2 = x2’ x4’ x6 + x3 x4’ x6 + x2’ x5 x6’ x7 + x1’ x3’ x4 x7’ + x1’ x3’ x4’ x5’ x6’ x7’ + x2’ x4’ x5’ x6’ x7’ (using reduce)

Projecting on the selected inputs there are 4 disjoint product terms:

x4’ x6, x5 x6’ x7, x4 x7’, x4’ x5’ x6’ x7’

View these as 4 values 1,2,3,4 of an MV variable S:

y1 = x1 x3 S {1} + x2 S {2} + x1 S {3} + x1’ x3’ S {3} + x3’ S {4}

y2 = x2’ S {1} + x3 S {1} + x2’ S {2} + x1’ x3’ S {3} + x1’ x3’ S {4} + x2’ S {4}


Performing MV two-level minimization:

y1 = x1 x3 S {1,3} + x2 S {2} + x3’ S {3,4}

y2 = x2’ S {1,2,4} + x3 S {1} + x1’ x3’ S {3,4}

Face constraints are:

(1,3 ), (3,4 ), (1,2,4 )

Codes of minimum length are:

enc(1 ) = 001, enc(2 ) = 011, enc(3) = 100, enc(4) = 111.


The driven PLA becomes:

y1 = x1 x3 x9’ + x2 x8’x9 x10 + x3’ x8

y2 = x2’ x10 + x3 x8’x9’x10 + x1’ x3’ x8

The driving PLA becomes the function:

f: {X4, X5, X6, X7} → {X8, X9, X10}

f(x4’ x6) = enc(1) = 001

f(x5 x6’ x7) = enc(2) = 011

f(x4 x7’) = enc(3) = 100

f(x4’ x5’ x6’ x7’) = enc(4) = 111

Represented in SOP as:

x8 = x4 x7’ + x4’ x5’ x6’ x7’

x9 = x5 x6’ x7 + x4’ x5’ x6’ x7’

x10 = x4’ x6 + x5 x6’ x7 + x4’ x5’ x6’ x7’

Result: Area before decomposition: 160.

Area after decomposition: 128.

Output Encoding for Two-Level Implementations

The problem:

• find binary codes for symbolic outputs in a logic function so

as to minimize a two-level implementation of the function.

Terminology:

• Assume that we have a symbolic cover S with a symbolic output assuming n values. The different values are denoted $v_0, \dots, v_{n-1}$.

• The encoding of a symbolic value $v_i$ is denoted enc($v_i$).

• The onset of $v_i$ is denoted $ON_i$. Each $ON_i$ is a set of $D_i$ minterms $\{m_{i1}, \dots, m_{iD_i}\}$.

• Each minterm mij has a tag indicating which symbolic value’s

onset it belongs to. A minterm can only belong to one

symbolic value’s onset.


Output Encoding

Consider a function f with symbolic outputs and two different encoded

realizations of f :

| symbolic cover | first realization | second realization |
|---|---|---|
| 0001 out1 | 0001 001 | 0001 10000 |
| 00-0 out2 | 00-0 010 | 00-0 01000 |
| 0011 out2 | 0011 010 | 0011 01000 |
| 0100 out3 | 0100 011 | 0100 00100 |
| 1000 out3 | 1000 011 | 1000 00100 |
| 1011 out4 | 1011 100 | 1011 00010 |
| 1111 out5 | 1111 101 | 1111 00001 |

• Two-level logic minimization exploits the sharing between the different outputs to produce a minimum cover.

In the second realization no sharing is possible. The first can be minimized to:

1111 001

1-11 100

0100 011

0001 101

1000 011

00-- 010

Dominance Constraints

Definition: enc(vi) > enc(vj) iff the code of vi bit-wise

dominates the code of vj, i.e. for each bit position where vj has

a 1, vi also has a 1. Example: 101 > 001

If enc(vi ) > enc(vj ) then ONi can be used as a DC set when

minimizing ONj.

Example:

| symbolic cover | encoded cover | minimized encoded cover |
|---|---|---|
| 0001 out1 | 0001 110 | 0001 110 |
| 00-0 out2 | 00-0 010 | 00-- 010 |
| 0011 out2 | 0011 010 | |

Here enc(out1) = 110 > enc(out2) = 010. The input minterm

0001 of out1 has been included into the single cube 00-- that

asserts the code of out2.
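The dominance relation and its effect on this example can be checked with a few lines of code. The sketch below assumes a cover is a list of (input cube, output code) pairs and that the output for a minterm is the bitwise OR of the codes of all cubes containing it. The function names are illustrative.

```python
from itertools import product

def dominates(code_i, code_j):
    """True if code_i has a 1 in every position where code_j has a 1."""
    return all(a == "1" for a, b in zip(code_i, code_j) if b == "1")

def asserted_output(cover, minterm, width):
    """OR together the output parts of all cubes that contain the minterm."""
    bits = ["0"] * width
    for cube, out in cover:
        if all(c == "-" or c == m for c, m in zip(cube, minterm)):
            bits = ["1" if "1" in pair else "0" for pair in zip(bits, out)]
    return "".join(bits)

encoded = [("0001", "110"), ("00-0", "010"), ("0011", "010")]
minimized = [("0001", "110"), ("00--", "010")]

same = all(
    asserted_output(encoded, "".join(m), 3) == asserted_output(minimized, "".join(m), 3)
    for m in product("01", repeat=4)
)
print(dominates("110", "010"), same)
```

Because 110 already has a 1 wherever 010 does, letting the cube 00-- also fire on minterm 0001 does not change the asserted code, so the two covers agree on all 16 input points.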

Algorithms to exploit dominance constraints are implemented in Cappuccino (De Micheli, 1986) and Nova (Villa and Sangiovanni, 1990).

Disjunctive Constraints

If enc(vi ) = enc(vj ) + enc(vk ), ONi can be minimized using ONj

and ONk as don’t cares.

Example:

| symbolic cover | encoded cover | minimized encoded cover |
|---|---|---|
| 101 out1 | 101 11 | 10- 01 |
| 100 out2 | 100 01 | 1-1 10 |
| 111 out3 | 111 10 | |

Here enc(out1) = enc(out2) + enc(out3). The input minterm 101 of out1 has been merged with the input minterm 100 of out2 (resulting in 10-) and with the input minterm 111 of out3 (resulting in 1-1). Input minterm 101 now asserts 11 (i.e. the code of out1), by activating both cube 10- that asserts 01 and cube 1-1 that asserts 10.
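A short check of this example: the sketch below confirms that enc(out1) is the bitwise OR of enc(out2) and enc(out3), and that the two-cube cover asserts the right code for each of the three minterms. The representation of the cover as (input cube, output code) pairs is an assumption made for illustration.

```python
def code_or(a, b):
    """Bitwise OR of two equal-length binary code strings."""
    return "".join("1" if "1" in pair else "0" for pair in zip(a, b))

def asserted_output(cover, minterm):
    """OR of the output codes of all cubes that contain the minterm."""
    result = "00"
    for cube, out in cover:
        if all(c in ("-", m) for c, m in zip(cube, minterm)):
            result = code_or(result, out)
    return result

enc = {"out1": "11", "out2": "01", "out3": "10"}
symbolic = {"101": "out1", "100": "out2", "111": "out3"}
minimized = [("10-", "01"), ("1-1", "10")]

print(code_or(enc["out2"], enc["out3"]) == enc["out1"])
for minterm, symbol in symbolic.items():
    print(minterm, symbol, asserted_output(minimized, minterm))
```

Minterm 101 lies in both cubes and therefore receives 01 OR 10 = 11, while 100 and 111 each lie in exactly one cube.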

Algorithm to exploit dominance and disjunctive constraints

implemented in esp_sa (Villa, Saldanha, Brayton and Sangiovanni,

1995).


Exact Output Encoding

Proposed by Devadas and Newton, 1991.

The algorithm consists of the following:

1. Generate generalized prime implicants (GPIs) from the

original symbolic cover.

2. Solve a constrained covering problem,

• requires the selection of a minimum number of GPIs that

form an encodeable cover.

3. Obtain codes (of minimum length) that satisfy the encoding

constraints.

4. Given the codes of the symbolic outputs and the selected

GPIs, construct trivially a PLA with product term

cardinality equal to the number of GPIs.
