entropy & information - People - Georgia Institute of Technology


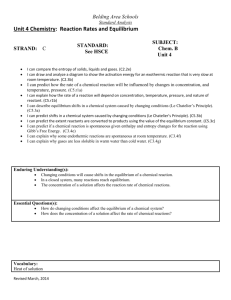

ENTROPY & INFORMATION
a physicist's point of view
Jean V. Bellissard
Georgia Institute of Technology & Institut Universitaire de France

ENTROPY: Some history

Carnot's Principle
• Sadi CARNOT, 1824: Réflexions sur la puissance motrice du feu
• A steam engine needs 2 sources of heat: a hot one at temperature Th and a cold one at temperature Tc, with Th > Tc.
• The proportion of thermal energy that can be transformed into mechanical motion depends only on the temperatures of the two sources.

Steam Engines
• Any steam engine has a heat source (burner) and a cold source (the atmosphere).
• Thermal engines are everywhere: in power plants (coal, nuclear, …), in cars, airplanes, and boats, in factories.

Entropy: definition
• Rudolf CLAUSIUS, 1865: definition of entropy, dS = dQ/T
• 2nd Law of Thermodynamics: entropy cannot decrease over time.

Gases are made of molecules
• Clausius showed that gases are made of molecules, explaining the slow diffusion of dust and the origin of viscosity.

Statistical Thermodynamics
• Ludwig BOLTZMANN
• 1872: kinetic theory
• 1880: statistical interpretation of entropy: disorder in energy space

Statistical Mechanics
• Josiah Willard GIBBS
• 1880s: thermodynamic equilibrium corresponds to the maximum of entropy
• 1902: book « Statistical Mechanics »

Information theory
• Claude E. SHANNON
• 1948: « A Mathematical Theory of Communication »
  - Information theory
  - Entropy measures the lack of information about a system

Second Law of Thermodynamics
• Over time, the information contained in an isolated system can only be destroyed.
• Equivalently, the entropy can only increase.

MORPHOGENESIS: how does nature produce information?
Conservation Laws
• In an isolated system, the energy, the momentum, the angular momentum, the electric charge, … are conserved.
• [Figure: angular momentum]
• At equilibrium, the only information available about the system is the values of the conserved quantities!
• Example: elementary particles are characterized by their mass (energy), spin (angular momentum), electric charge, …
• Electron: m = 9.109 × 10⁻³¹ kg, s = 1/2, e = −1.602 × 10⁻¹⁹ C

Out of Equilibrium
[Figure: energy flow between E and E′ over time]
• Variations in time or space force the transfer of conserved quantities.
• Transfer of energy (heat), mass, angular momentum, or charge creates current flows.
• Transfer of energy (heat) creates heat currents, as in flames and fires.
• Transfer of mass creates fluid currents, as in rivers or streams.
• Transfer of charge creates electric currents.
• Transfer of angular momentum creates vortices, like a hurricane seen from a satellite.
• Pattern formation: a shallow horizontal liquid layer heated from below exhibits instabilities and forms rolls and patterns, as a consequence of the fluid equations.
• Explosions produce interstellar clouds; collapses produce stars.
• The Sun, the Moon, the planets, and the stars have been used as sources of information: measuring time, finding one's location on Earth.

Beating the 2nd Principle
• Without variations in time and space, the only information contained in an isolated system is provided by conservation laws.
• Motion and heterogeneities allow Nature to create a large quantity of information.
• All macroscopic equations (fluids, flames, …) describing it are given by conservation laws.

CODING INFORMATION
the art of symbols

Signs
• Signs can be visual: color, shape, design.
• Signs can be a sound: ring, noise, applause, music, speech.
• Signs can be a smell: plants can warn their neighbors with phenols; female insects can attract males with pheromones.

Writings
• More than 80,000 characters are used to code the Chinese language.
• Ancient Egyptians used hieroglyphs to code sounds and words.
• The Japanese language also uses the 96 hiragana characters, which code syllables.
• The Phoenicians and the Greeks found the alphabet simpler, coding elementary sounds with 23 characters: β γ δ ε ζ η θ ι κ λ μ ν ο π ρ σ τ υ φ χ ψ ω
• Modern numbers are coded with 10 digits, created by Indians and transmitted to Europeans through the Arabs.
• George BOOLE (1815-1864) used only two characters, 0 and 1, to code logical operations.
• John von NEUMANN (1903-1957) developed the concept of programming, also using the binary system (0 and 1) to code all possible information.
• Nature uses 4 molecules to code genetic heredity.
• Proteins use 20 amino acids to code their functions in the cell.
[Figure: a molecule of tryptophan, one of the 20 amino acids]

Unit of information
• Following Shannon (1948), the unit is the bit.
• A system contains N bits of information if it contains 2^N possible characters.

TRANSMITTING INFORMATION
redundancy

Transmitting
• Coding theory uses redundancy to transmit binary bits of information.
  - Coding: 0 → 000, 1 → 111
  - Transmission, with errors (2nd Principle): 000 → 010, 111 → 110
  - Reconstruction at reception (correction): 010 → 000, 110 → 111
• Humans also use redundancy to make sure they receive the correct information: "Say it again!"
• A cell is a big factory designed to duplicate the information contained in the DNA.
• Prior to cell fission, the DNA molecule is unzipped by a protein.
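The redundancy scheme on the coding-theory slides above (0 → 000, 1 → 111, correction at reception) can be sketched in a few lines of Python. This is only an illustration, not part of the original talk: the function names `encode` and `decode` are hypothetical, and majority voting is assumed as the correction rule, which is consistent with the slide's examples 010 → 000 and 110 → 111.

```python
def encode(bits):
    """Repeat every bit three times: 0 -> 000, 1 -> 111."""
    return [b for b in bits for _ in range(3)]


def decode(received):
    """Recover each bit by majority vote over its group of three.

    Assumption: at most one of the three copies was flipped in transit.
    """
    decoded = []
    for i in range(0, len(received), 3):
        block = received[i:i + 3]
        decoded.append(1 if sum(block) >= 2 else 0)
    return decoded


message = [0, 1]
sent = encode(message)          # 000 111
received = [0, 1, 0, 1, 1, 0]   # 010 110: one error in each block, as on the slide
recovered = decode(received)    # majority vote undoes both errors
```

With two or more flipped copies in the same block the vote picks the wrong bit, so this scheme trades a threefold increase in message length for protection against single errors only.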
Transmitting: mitosis
• A cell is a big factory designed to duplicate the information contained in the DNA.

Beating the 2nd Principle
• The cell divides before the information contained in the DNA fades away.
• In this way, cell division and DNA duplication at a fast pace conserve the genetic information for millions of years.

THE MAXIMUM ENTROPY PRINCIPLE REVISITED
The scary art of extrapolation

Equilibrium
• A physical system of particles reaches equilibrium when all information, except that which must be conserved, has vanished.
• In a gas, the chaotic motion produced by collisions is responsible for the loss of information.
• By analogy, other systems involving a large number of similar individuals can be treated through statistics and information. Like bureaucracy.

1837 J. S. MILL in Westm. Rev. XXVIII. 71: That vast net-work of administrative tyranny…that system of bureaucracy, which leaves no free agent in all France, except the man at Paris who pulls the wires. (Oxford English Dictionary)

Bureaucracy
• China (3rd century BC): Confucius
• France (18th century)
• USSR (1917-1990)
• European Community (1952)
• The French ENA: National School of Administration

Bureaucracy: the analogy
• Rules ↔ conserved quantities
• Individuals ↔ indistinguishable particles
• Removal of an individual ↔ shocks
• Loss of information, maximum of entropy, no evolution

Bureaucracy
• A bureaucratic system is stable (its entropy is maximum).
• Example: the Chinese empire lasted for 2000 years.
• It cannot be changed without a major source of instability.
• Example: the collapse of the USSR.

COMPUTERS: machines and brains

Computers
• Alan TURING (1912-1954)
• 1936: description of a computing machine
• Computers execute logical operations.
• They produce, store, and process information.
• A Turing machine is sequential: operations are time ordered.
[Figure: a Turing machine, with rules, states, and a tape moving left and right]

Computers
[Figure: CPU and MEMORY, linked by "data & instructions" and "data"]
• The von NEUMANN computer repeatedly performs the following cycle of events:
1. Fetch an instruction from memory.
2. Fetch any data required by the instruction from memory.
3. Execute the instruction (process the data).
4. Store results in memory.
5. Go back to step 1.
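The five-step cycle above can be made concrete with a toy machine. The following Python sketch is an illustration under stated assumptions, not a description of any real processor: the instruction names (`LOAD`, `ADD`, `STORE`, `HALT`), the single accumulator register, and the function `run` are all hypothetical. The one feature taken from the slide is that instructions and data share the same memory.

```python
def run(memory):
    """Run a toy von Neumann machine until it reaches HALT.

    `memory` is one list holding both instructions, written as
    (operation, address) pairs, and plain data values.
    """
    pc = 0    # program counter: address of the next instruction
    acc = 0   # accumulator: the single working register (an assumption)
    while True:
        op, addr = memory[pc]        # 1. fetch an instruction from memory
        pc += 1
        if op == "HALT":
            return memory
        if op == "LOAD":
            acc = memory[addr]       # 2. fetch the data, 3. execute
        elif op == "ADD":
            acc += memory[addr]      # 2. fetch the data, 3. execute
        elif op == "STORE":
            memory[addr] = acc       # 4. store the result in memory
        else:
            raise ValueError(f"unknown instruction: {op}")
        # 5. the loop goes back to step 1


# Addresses 0-3 hold the program, addresses 4-6 hold the data.
program = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", 0), 2, 3, 0]
result = run(list(program))
print(result[6])  # 2 + 3 is stored at address 6
```

Because the program lives in the same list as its data, a `STORE` aimed at addresses 0 to 3 would overwrite an instruction, which is the defining property of the stored-program design.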
Computers
• February 14th, 1946: ENIAC, the first computer. Los Alamos, NM.
• Cellular automata produce patterns, as in shells.
[Figure: computer simulation of a cellular automaton with cells labeled a and b; the rule changes the pattern from layer to layer]

Computers
• Nature has also produced brains.
• The brain does not seem to follow either the von Neumann or the Turing scheme.
• In the brain, signals are not binary but activated by thresholds.
• The operations are not performed sequentially.
• The brain can learn.
• It can adapt itself: plasticity.
• Brain memory is associative: it recognizes patterns by comparison with pre-stored ones.

TO CONCLUDE

Entropy & Information
• The Second Law of Thermodynamics leads to a global loss of information.
• Systems out of equilibrium produce information… at the cost of the environment.
• Information can be coded, transmitted, stored, hidden, and processed.
• Life is a way of producing information: genetic code, proteins, chemical signals, pattern formation, neurons, brain.
• Machines can produce similar features.

Is Nature a big computer?

THE END