PPTX

advertisement

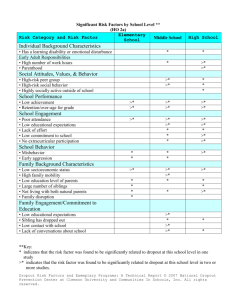

Qual Presentation Daniel Khashabi 1 Outline My own line of research Papers: 2 Fast Dropout training, ICML, 2013 Distributional Semantics Beyond Words: Supervised Learning of Analogy and Paraphrase, TACL, 2013. Current Line of Research Conventional approach to a classification problem: Problems: Never use the label information 3 Lose the structure in the output Limited to the classes in the training set Hard to leverage unsupervised data Current Line of Research For example take the relation extraction problem: “Bill Gates, CEO of Microsoft ….” Manager Conventional Approach: Given sentence s and mentions e1 and e2, find their relation: Output: 𝑟 ∈ 𝑅 = 𝑅1 , . . . , 𝑅𝑑 } 𝑠, 𝑒1, 𝑒2} → 𝑟 4 Current Line of Research Let’s change the problem a little: “Bill Gates, CEO of Microsoft ….” R = Manager Create a claim about the relation: Text=“Bill Gates, CEO of Microsoft ….” Claim=“Bill Gates is manager of Microsoft” 5 True Current Line of Research Creating data is very easy! What we do: 6 Use knowledge bases to find entities that are related Find sentences that contain these entities Create claims about the relation inside the original sentence Ask Turker’s to label it Much easier than extracting labels and labelling Current Line of Research This formulation makes use of the information inherent in the label This helps us to generalize over the relations that are not seen in the training data 7 Outline My own line of research Papers: 8 Fast Dropout training, ICML, 2013 Distributional Semantics Beyond Words: Supervised Learning of Analogy and Paraphrase, TACL, 2013. Dropout training Proposed by (Hinton et al, 2012) Each time decide whether to delete one hidden unit with some probability p 9 Dropout training Model averaging effect H Among 2 models, with shared parameters Only a few get trained Much stronger than the known regularizer What about the input space? 10 Do the same thing! Dropout training Model averaging effect Among 2 H models, with shared parameters Only a few get trained Much stronger than the known regularizer What about the input space? Do the same thing! Dropout of 50% of the hidden units and 20% of the input units (Hinton et al, 2012) 11 Outline Can we explicitly show that dropout acts as a regularizer? Dropout needs sampling Very easy to show for linear regression What about others? Can be slow! Can we convert the sampling based update into a deterministic form? 12 Find expected form of updates Linear Regression E zi pi Reminder: Var zi pi (1 pi ) zi ~ Bernoulli( pi ) Consider the standard linear regression g wT x w arg min w x y * T w (i ) i With regularization: L( w) w x y T (i ) (i ) 2 i (i ) 2 Closed form solution: i w X X I X y T 13 wi2 1 T Dropout Linear Regression Consider the standard linear regression gw x T xi zi ~ Bernoulli( pi ) Dz diag ( z1 ,..., zm ) LR with dropout: wT Dz x How to find the parameter? L( w) w Dz x y T i 14 (i ) (i ) 2 Fast Dropout for Linear Regression L( w) w Dz x y T We had: (i ) (i ) 2 i Instead of sampling, minimize the expected loss 2 T E w Dz x y Fixed x and y: m wT Dz x wi xi zi i 1 S, S ~ N ( S , S2 ) m m S E wT Dz x wi xi E zi wi xi pi m i 1 2 m i 1 Var w Dz x wi xi Var zi wi xi pi (1 pi ) i 1 i 1 2 S 15 T 2 Fast Dropout for Linear Regression We had: L( w) w Dz x y T (i ) (i ) 2 i Instead of sampling minimize the expected loss: m wT Dz x wi xi zi S ~ N ( S , S2 ) S, i 1 2 E S ~ N ( , 2 ) S y S y S2 S S 2 T E w Dz x y 2 Expected loss: L( w) EL(w) i y (i ) S 16 i (i ) S (i ) 2 y (i ) 2 2 (i ) S ci w , ci x i ( j) 2 i 2 i j Fast Dropout for Linear Regression Expected loss: L( w) EL(w) y i (i ) S (i ) S (i ) 2 i y (i ) 2 2 (i ) S ci w , ci x i j Data-dependent regulizer Closed form could be found: w X X diag ( X X ) X y T 17 T ( j) 2 i 2 i 1 T Some definitions Dropout each input dimension randomly: Probit: x 1 ( x) 2 e t 2 /2 Logistic function / sigmoid : 1 ( z) 1 e z 18 dt Some definitions useful equalities Useful equalities ( x) N ( x; , s )dx ( 2 ( x) ( ( x) N ( x; , s 2 8 s 2 2 ) x) )dx ( s2 8 1 ) We can find the following expectation in closed form: E S ~ N ( , 2 ) ( S ) 19 Logistic Regression Consider the standard LR P(Y 1| X x) ( w x) 1 T 1 e The standard gradient update rule is wj ( y (w x)) x j T For the parameter vector wlog ( y (wT x)) x 20 wT x Dropout on a Logistic Regression Dropout each input dimension randomly: xi zi ~ Bernoulli( pi ) Dz diag ( z1 ,..., zm ) For the parameter vector wlog ( y (wT x)) x w ( y (wT Dz x)) Dz x Notation: 21 xi i-th dimension of x x( j ) j -th training instance xi( j ) i -th dimension of j -th instance 1 i m 1 j n Fast Dropout training Instead of using w we use its expectation: wavg E z ; zi ~ Bernoulli ( pi ) w Ez; zi ~ Bernoulli ( pi ) ( y (wT Dz x)) Dz x m wT Dz x wi xi zi S, i 1 S ~ N ( S , S2 ) m m i 1 i 1 m S E wT Dz x wi xi E zi wi xi pi m Var wT Dz x wi xi Var zi wi xi pi (1 pi ) 2 S i 1 22 2 i 1 2 Fast Dropout training T w E ( y ( w Dz x)) Dz x Approx: avg z ; zi ~ Bernoulli ( pi ) By knowing: How to approximate? Option 1: ES ( y (S )) Ez Dz x Option 2: ( y (ES S ))Ez Dz x wT Dz x S, S ~ N ( S , S2 ) Have closed forms but poor approximations 23 Experiment: evaluating the approximation The quality of approximation for wlog 24 Experiment: Document Classification 20-newsgroup subtask alt.atheism vs. religion.misc 25 Experiment: Document Classification(2) 26 Fast Dropout training T w E ( y ( w Dz x)) Dz x Approx: avg z ; zi ~ Bernoulli ( pi ) By knowing: wT Dz x S, S ~ N ( S , S2 ) wavg ,i E z ; zi ~ Bernoulli ( pi ) ( y ( wT Dz x)) zi xi p( zi 1) xi pi pi E z i | zi 1 ( y ( wT Dz x)) T xi E z i | zi 1 ( y ( w Dz x)) xi y E z i |zi 1 ( wT Dz x) T E z i | zi 1 ( w Dz x) ? 27 Fast Dropout training T E z i | zi 1 ( w Dz x) ? Previously: wT Dz x S , S ~ N ( S , S2 ), zi ~ Bern( pi ) We want to: T zi | zi 1 w Dz x wi xi zi wi zi Si ~ N (Si , S2i ) S S E wi xi zi wi xi S wi xi (1 pi ) i S2 S2 Var wi xi zi wi xi S2 ( wi xi ) 2 (1 pi ) pi i E z i |zi 1 ( wT Dz x) ESi ( Si ) which could be found in closed form. 28 Fast Dropout training We want to: Previously: T E z i | zi 1 ( w Dz x) ? wT Dz x S , S ~ N ( S , S2 ) zi | zi 1 wT Dz x wi xi zi wi zi Si ~ N (Si , S2i ) Si S wi xi (1 pi ), 2 S2i S2 ( wi xi ) 2 (1 pi ) pi Si deviates (approximately) from S with and 2 E z i | zi 1 ( wT Dz x) E N ( Has closed form! 29 S , S2 ( S ) E N ( , S2 ) (S ) ) S 2 2 EN ( S , 2 ) ( S ) 2 S2