SSPV06 talk on SAT and software verification

SAT, Interpolants and Software Model Checking

Ken McMillan

Cadence Berkeley Labs

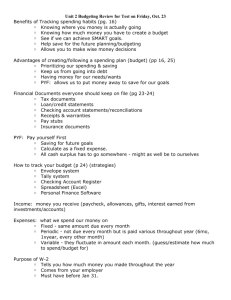

Applications of SAT solvers

SAT solvers have been applied in many ways in software verification

• BMC of programs using SAT (e.g., CBMC)

• SAT solvers in decision procedures

– Eager approach (e.g., UCLID)

– Lazy approach (Verifun, ICS, many others)

• SAT-based image computation

– Applied to predicate abstraction (Lahiri, et al)

• ...

We will consider instead the lessons learned from solving SAT that can be applied to software verification.

Outline

• SAT solvers

– How do they work

– What general lessons can we learn from the experience

• Software model checking survey

– How various methods do or do not embody lessons from SAT

• A modest proposal

– An attempt to apply the lessons of SAT to software verification

SAT solvers

[Diagram: DP (variable elimination), DLL (backtrack search), DPLL (SATO, GRASP, CHAFF, etc.)]

• Solvers characterized by

– Exhaustive BCP

– Conflict-driven learning (resolution)

– Deduction-based decision heuristics

Lesson #1: Be Lazy

• DP approach

– Eliminate variables by exhaustive resolution

– Extremely eager: deduces all facts about remaining variables

– Essentially quantifier elimination -- explodes.

• DPLL approach

– Lazy: only resolves clauses when model search fails

– Resolution used as a form of failure generalization

• Learns general facts from model search failure

Implications:

1. Make expensive deductions only when their relevance can be justified.

2. Don't do quantifier elimination.
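The blow-up of exhaustive resolution can be seen on a toy instance. The following is a minimal sketch, not taken from the talk: clauses are encoded as frozensets of signed integers (an assumed encoding), and `eliminate` is a hypothetical helper that performs one DP variable-elimination step.

```python
from itertools import product

def eliminate(clauses, var):
    """One Davis-Putnam step: resolve every clause containing `var`
    against every clause containing `-var`, then drop both groups."""
    pos = [c for c in clauses if var in c]
    neg = [c for c in clauses if -var in c]
    rest = {c for c in clauses if var not in c and -var not in c}
    resolvents = set()
    for p, n in product(pos, neg):
        r = (p - {var}) | (n - {-var})
        if not any(-lit in r for lit in r):  # skip tautologies
            resolvents.add(r)
    return rest | resolvents

# Variable 1 occurs positively in 3 clauses and negatively in 3 clauses.
clauses = {frozenset(c) for c in [(1, 2), (1, 3), (1, 4),
                                  (-1, 5), (-1, 6), (-1, 7)]}
after = eliminate(clauses, 1)
print(len(clauses), "->", len(after))  # 6 -> 9
```

Eliminating a single variable already turns 6 clauses into 9, and none of the new clauses was asked for by any failed search. Repeated over many variables, this is the eager behavior the slide warns against.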

Lesson #2: Be Eager

• In a DPLL solver, we always close deduction under unit resolution (BCP) before making a decision.

– Guides decision making in model search

– Guides resolution steps in failure generalization

– BCP updated after decision making and clause learning

Implications:

1. Be eager with inexpensive deduction.

2. Deduce all the cheap facts before trying any expensive ones.

3. Let the expensive deduction drive the cheap deduction.
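As an illustration of the cheap, eager step, here is a minimal sketch of BCP under an assumed encoding (clauses as tuples of signed integers, assignments as a dict from variable to bool). The name `bcp` and its return convention are illustrative only, and production solvers use watched literals rather than rescanning every clause.

```python
def bcp(clauses, assignment):
    """Close `assignment` under unit resolution.

    Returns (assignment, conflict): `conflict` is a falsified clause,
    or None if propagation finished without one.
    """
    assignment = dict(assignment)
    changed = True
    while changed:
        changed = False
        for clause in clauses:
            if any(assignment.get(abs(l)) == (l > 0) for l in clause):
                continue  # clause already satisfied
            free = [l for l in clause if abs(l) not in assignment]
            if not free:
                return assignment, clause  # every literal is false
            if len(free) == 1:
                lit = free[0]
                assignment[abs(lit)] = lit > 0  # forced unit literal
                changed = True
    return assignment, None

clauses = [(-1, 2), (-2, 3), (-3, -1, 4)]
# A single decision (variable 1 = True) forces variables 2, 3 and 4.
forced, conflict = bcp(clauses, {1: True})
# Adding a clause that forbids 1 and 4 together yields a conflict.
_, conflict2 = bcp(clauses + [(-4, -1)], {1: True})
```

The conflict clause returned in the second call is the starting point for the resolution steps of failure generalization.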

Lesson #3: Learn from the Past

• Facts useful in one particular case are likely to be useful in other cases.

• This principle is embodied in

– Clause learning

– Deduction-based decision heuristics (e.g., VSIDS)

Implication: Deduce facts that have been useful in the past.
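A rough sketch of a deduction-based decision heuristic in the spirit of VSIDS follows. It is a simplified per-variable variant written for illustration; the class name `Activity`, the decay factor, and the method names are assumptions, not the scheme of any particular solver.

```python
from collections import defaultdict

class Activity:
    """VSIDS-style scores: variables from recent learned clauses rank highest."""

    def __init__(self, decay=0.5):
        self.score = defaultdict(float)
        self.decay = decay

    def on_learned_clause(self, clause):
        # Bump every variable that took part in the latest deduction.
        for lit in clause:
            self.score[abs(lit)] += 1.0

    def age(self):
        # Periodic decay makes older deductions count for less.
        for var in self.score:
            self.score[var] *= self.decay

    def pick(self, unassigned):
        # Decide on the unassigned variable with the highest score.
        return max(unassigned, key=lambda v: self.score[v])

act = Activity()
act.on_learned_clause((1, -2))
act.age()
act.on_learned_clause((-2, 3))
first = act.pick([1, 2, 3])   # variable 2 appears in both learned clauses
second = act.pick([1, 3])     # variable 3 is more recent than variable 1
```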

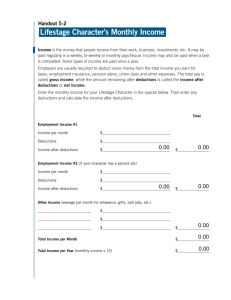

Static Analysis

• Compute the least fixed-point of an abstract transformer

– This is the strongest invariant the analysis can provide

• Inexpensive analyses:

– value set analysis

– affine equalities, etc.

• These analyses lose information at a merge:

– branches establishing x=y and x=z join to T (true)

Be eager with inexpensive deductions

Be lazy with expensive deductions: X

Learn from the past: N/A
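The loss of information at a merge can be sketched with a deliberately naive conjunctive domain: a set of equality facts whose join is plain set intersection. This is an assumed toy model (a real equality or affine domain would also account for implied facts), and `eq` and `join` are hypothetical helpers.

```python
def eq(a, b):
    """An equality fact a = b, stored order-independently."""
    return frozenset((a, b))

def join(facts_left, facts_right):
    """Join of two conjunctions: keep only facts that hold on both branches."""
    return facts_left & facts_right

then_branch = {eq("x", "y")}
else_branch = {eq("x", "z")}
merged = join(then_branch, else_branch)
print(merged)  # set(), i.e. T: neither equality survives the merge

# A fact shared by both branches does survive.
shared = join({eq("x", "y"), eq("a", "b")}, {eq("x", "z"), eq("a", "b")})
```

The domain cannot express "x=y or x=z", so the merge point is left with no information about x.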

Predicate abstraction

• Abstract transformer:

– strongest Boolean postcondition over given predicates

• Advantage: does not lose information at a merge

– join is disjunction: x=y and x=z join to $x=y \lor x=z$

• Disadvantage:

– Abstract post is very expensive!

– Computes information about predicates with no relevance justification

Be eager with inexpensive deductions: X

Be lazy with expensive deductions: X

Learn from the past: N/A

PA with CEGAR loop

• Choose predicates to refute cex's

– Generalizes failures

– Some relevance justification

• Still performs expensive deduction without justification

– strongest Boolean postcondition

• Fails to learn from past

– Start fresh each iteration

– Forgets expensive deductions

[Flowchart: Choose initial T# → Model check abstraction T# → (true: done; otherwise Cex) → Can extend Cex from T# to T? → (yes: Cex; no: Add predicates to T#, then model check again)]

Be eager with inexpensive deductions: X

Be lazy with expensive deductions: X+

Learn from the past: X

Boolean Programs

• Abstract transformer

– Weaker than predicate abstraction

– Evaluates predicates independently -- loses correlations

– Predicate abstraction: $\{T\}$ x=y; $\{x=0 \Leftrightarrow y=0\}$

– Boolean programs: $\{T\}$ x=y; $\{T\}$

• Advantages

– Computes less expensive information eagerly

• Disadvantages

– Still computes expensive information without justification

– Still uses CEGAR loop

Be eager with inexpensive deductions: X

Be lazy with expensive deductions: X++

Learn from the past: X

Lazy Predicate Abstraction

• Unwind the program CFG into a tree

– Refine paths as needed to refute errors

– Add predicates along path to allow refutation of error

[Diagram: unwinding tree with edges x=y, x=y, y=0 and a vertex marked ERR!]

• Refinement is local to an error path

• Search continues after refinement

– Do not start fresh -- no big CEGAR loop

• Previously useful predicates applied to new vertices

Be eager with inexpensive deductions: X

Be lazy with expensive deductions: -

Learn from the past

SAT-based BMC

[Flow: Program → Loop Unwinding → Convert to Bit Level → SAT]

• Inherits all the properties of SAT

• Deduction limited to propositional logic

– Cannot directly infer facts like $x \le y$

– Inexpensive deduction limited to BCP

Be eager with inexpensive deductions: --

Be lazy with expensive deductions

Learn from the past

SAT-based with Static Analysis

[Flow: Program → Static Analysis → Loop Unwinding → Convert to Bit Level → SAT]

• Allows richer class of inexpensive deductions

• Inexpensive deductions not updated after decisions and clause learning

– Coupling could be tighter

– Perhaps using lazy decision procedures?

[Diagram labels: decision; x=y; x=z; x=z]

Be eager with inexpensive deductions: -

Be lazy with expensive deductions

Learn from the past

Lazy abstraction and interpolants

• A way to apply the lessons of SAT to lazy abstraction

• Keep the advantages of lazy abstraction...

– Local refinement (be lazy)

– No "big loop" as in CEGAR (learn from the past)

• ...while avoiding the disadvantages of predicate abstraction...

– no eager image computation

• ...and propagating inexpensive deductions eagerly

– as in static analysis

Interpolation Lemma (Craig, 57)

• Notation: $L(\phi)$ is the set of FO formulas over the symbols of $\phi$

• If $A \land B = \text{false}$, there exists an interpolant $A'$ for $(A,B)$ such that:

– $A \Rightarrow A'$

– $A' \land B = \text{false}$

– $A' \in L(A) \cap L(B)$

• Example:

– $A = p \land q$, $B = \neg q \land r$, $A' = q$

• Interpolants from proofs

– in certain quantifier-free theories, we can obtain an interpolant for a pair A,B from a refutation in linear time. [McMillan 05]

– in particular, we can have linear arithmetic, uninterpreted functions, and restricted use of arrays
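The propositional example on this slide is small enough to check by truth table. The sketch below assumes the reading $A = p \land q$, $B = \neg q \land r$, $A' = q$; the function names are illustrative, and the third condition (that $A'$ uses only symbols common to $A$ and $B$) is checked by inspection rather than by code.

```python
from itertools import product

def A(p, q, r):
    return p and q

def B(p, q, r):
    return (not q) and r

def interpolant(p, q, r):
    return q  # candidate A' = q, over the only shared symbol q

rows = list(product([False, True], repeat=3))

# Condition 1: A implies A' in every valuation.
a_implies_i = all(interpolant(*v) for v in rows if A(*v))
# Condition 2: A' and B are jointly unsatisfiable.
i_and_b_unsat = not any(interpolant(*v) and B(*v) for v in rows)
# Precondition of the lemma: A and B are jointly unsatisfiable.
a_and_b_unsat = not any(A(*v) and B(*v) for v in rows)
```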

Interpolants for sequences

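• Let $A_1 \ldots A_n$ be a sequence of formulas

• A sequence $A'_0 \ldots A'_n$ is an interpolant for $A_1 \ldots A_n$ when

– $A'_0 = \text{True}$

– $A'_{i-1} \land A_i \Rightarrow A'_i$, for $i = 1..n$

– $A'_n = \text{False}$

– and finally, $A'_i \in L(A_1 \ldots A_i) \cap L(A_{i+1} \ldots A_n)$

[Diagram: $A_1, A_2, A_3, \ldots, A_k$ placed over the chain $\text{True} \Rightarrow A'_1 \Rightarrow A'_2 \Rightarrow A'_3 \ldots A'_{k-1} \Rightarrow \text{False}$]

In other words, the interpolant is a structured refutation of $A_1 \ldots A_n$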

Interpolants as Floyd-Hoare proofs

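1. Each formula implies the next

2. Each is over common symbols of prefix and suffix

3. Begins with true, ends with false

| Statement | SSA constraint | Interpolant after statement |
|---|---|---|
| (start) | | True |
| x=y; | x1 = y0 | x1 = y0 |
| y++; | y1 = y0+1 | y1 > x1 |
| [x=y] | x1 = y1 | False |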
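The Floyd-Hoare reading of this path can be spot-checked mechanically. The sketch below enumerates a small integer range (an assumption made so the check runs without a prover, so it is a sanity test rather than a proof) and verifies that each interpolant, conjoined with the next SSA constraint, implies the following interpolant.

```python
from itertools import product

# SSA constraints for the path  x=y; y++; [x=y]
constraints = [
    lambda s: s["x1"] == s["y0"],       # x = y
    lambda s: s["y1"] == s["y0"] + 1,   # y++
    lambda s: s["x1"] == s["y1"],       # guard [x == y]
]
# Sequence interpolant: True, x1=y0, y1>x1, False
interpolants = [
    lambda s: True,
    lambda s: s["x1"] == s["y0"],
    lambda s: s["y1"] > s["x1"],
    lambda s: False,
]

names = ("x1", "y0", "y1")
states = [dict(zip(names, v)) for v in product(range(-3, 4), repeat=3)]

# Step i holds if every state satisfying I[i] and A[i+1] also satisfies I[i+1].
steps_ok = [
    all(interpolants[i + 1](s)
        for s in states
        if interpolants[i](s) and constraints[i](s))
    for i in range(len(constraints))
]
```

The last step holds vacuously: no state satisfies both y1 > x1 and x1 = y1, which is exactly why the path is infeasible.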

Path refinement procedure

[Diagram: SSA sequence → Prover → proof → Interpolation → structured proof → Path Refinement]

Lazy abstraction -- an example

Program fragment:

    do {
        lock();
        old = new;
        if (*) {
            unlock();
            new++;
        }
    } while (new != old);

[Control-flow graph with edges labeled L=0; [L!=0]; L=1; old=new; L=0; new++; [new!=old]; [new==old]]

Unwinding the CFG

[Figure: the control-flow graph beside its unwinding with vertices 0, 1 and 2, reached by edges L=0 and [L!=0]; vertex 1 is relabeled from T to L=0 and the error vertex 2 from T to F]

Label error state with false, by refining labels on path

Unwinding the CFG

[Figure: the control-flow graph beside the unwinding extended to vertices 0 to 6; vertices 1 and 5 carry the label L=0 and error vertices are labeled F]

Covering: state 5 is subsumed by state 1.

Unwinding the CFG

[Figure: the control-flow graph beside the unwinding extended to vertices 0 to 11; some labels are strengthened to old=new and error vertices are labeled F]

Another cover. Unwinding is now complete.

Covering step

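• If $\psi(x) \Rightarrow \psi(y)$...

– add covering arc $x \rhd y$

– remove all $z \rhd w$ for $w$ a descendant of $x$, since $x$ and everything below it is now covered

[Diagram: a vertex labeled x=y covered by a vertex labeled $x \le y$; an X marks a removed covering arc]

We restrict covers to be descending in a suitable total order on vertices. This prevents covering from diverging.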
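A covering step can be sketched over a toy representation in which each label is the finite set of states it admits, so that implication becomes a subset test. This representation, and the names `descendants` and `try_cover`, are assumptions made for illustration. Because the target of a covering arc must itself be uncovered, the sketch drops existing arcs whose target lies at or below the newly covered vertex.

```python
def descendants(children, v):
    """All proper descendants of v in the unwinding tree."""
    out, stack = set(), list(children.get(v, ()))
    while stack:
        w = stack.pop()
        out.add(w)
        stack.extend(children.get(w, ()))
    return out

def try_cover(x, y, label, children, covers):
    """If label[x] implies label[y], add the arc (x, y) and drop arcs
    whose target is x or a descendant of x. Returns True on success."""
    if not label[x] <= label[y]:  # subset test stands in for implication
        return False
    below = descendants(children, x) | {x}
    covers.difference_update({(z, w) for (z, w) in covers if w in below})
    covers.add((x, y))
    return True

children = {0: [1, 2], 1: [3], 2: [4]}
label = {0: {0, 1}, 1: {0}, 2: {0, 1}, 3: {0}, 4: {0}}
covers = {(4, 3)}                                   # 4 is currently covered by 3
ok = try_cover(1, 2, label, children, covers)       # label[1] implies label[2]
rejected = try_cover(2, 1, label, children, covers) # label[2] is too weak
```

After the call, vertex 3 sits below a covered vertex, so the arc from 4 to 3 is gone and vertex 4 must be reconsidered.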

Refinement step

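• Label an error vertex False by refining the path to that vertex with an interpolant for that path.

• By refining with interpolants, we avoid predicate image computation.

[Diagram: unwinding with edges x=0, [x=y], [x!=y], y++, y=2, [y=0]; labels along the error path are strengthened from T to x=0, y=0 and y!=0, and the error vertex from T to F; an X marks a removed covering arc]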

Refinement may remove covers

Forced cover

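• Try to refine a sub-path to force a cover

– show that path from nearest common ancestor of x,y proves $\psi(x)$ at y

[Diagram: unwinding with edges x=0, [x=y], [x!=y], y++, y=2, [y=0] and labels x=0, y=0, y!=0, F; the sub-path through y=2 is marked "refine this path" and its end vertex is relabeled from T to y!=0]

Forced covers allow us to efficiently handle nested control structure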

Incremental static analysis

• Update static analysis of unwinding incrementally

– Static analysis can prevent many interpolant-based refinements

– Interpolant-based refinements can refine static analysis

[Diagram: unwinding with edges x=0, [x=y], [x!=y], [x=z], [x!=z], y=1, y=2, y++, [y=0]; annotations: "refine this path", "$y \in \{1,2\}$ from value set analysis", guard $[y=1 \land x \ne z]$, label y=2, "value set refined"]

Applying the lessons from SAT

• Be lazy with expensive deductions

– All path refinements justified

– No eager predicate image computation

• Be eager with inexpensive deductions

– Static analysis updated after all changes

– Refinement and static analysis interact

• Learn from the past

– Refinements incremental – no “big CEGAR loop”

– Re-use of historically useful facts by forced covering

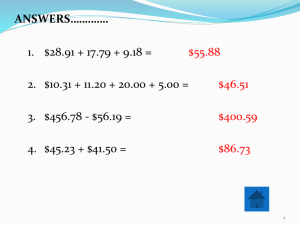

Experiments

• Windows device driver benchmarks from BLAST benchmark suite

– programs flattened to "simple goto programs"

• Compare performance against BLAST, a lazy predicate abstraction tool

• No static analysis.

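| name | source LOC | SGP LOC | BLAST (s) | IMPACT (s) | BLAST / IMPACT (ratio) |
|---|---|---|---|---|---|
| kbfiltr | 12K | 2.3K | 26.3 | 3.15 | 8.3 |
| diskperf | 14K | 3.9K | 102 | 20.0 | 5.1 |
| cdaudio | 44K | 6.3K | 310 | 19.1 | 16.2 |
| floppy | 18K | 8.7K | 455 | 17.8 | 25.6 |
| parclass | 138K | 8.8K | 5511 | 26.2 | 210 |
| parport | 61K | 13K | 8084 | 37.1 | 224 |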

Almost all BLAST time spent in predicate image operation.

The Saga Continues

• After these results, Ranjit Jhala modified BLAST

– vertices inherit predicates from their parents, reducing refinements

– fewer refinements allows more predicate localization

• Impact also made more eager, using value set analysis

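| name | source LOC | SGP LOC | BLAST (s) | IMPACT (s) | BLAST / IMPACT (ratio) |
|---|---|---|---|---|---|
| kbfiltr | 12K | 2.3K | 11.9 | 0.35 | 34 |
| diskperf | 14K | 3.9K | 117 | 2.37 | 49 |
| cdaudio | 44K | 6.3K | 202 | 1.51 | 134 |
| floppy | 18K | 8.7K | 164 | 4.09 | 41 |
| parclass | 138K | 8.8K | 463 | 3.84 | 121 |
| parport | 61K | 13K | 324 | 6.47 | 50 |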

Conclusions

• Caveats

– Comparing different implementations is dangerous

– More and better software model checking benchmarks are needed

• Tentative conclusions

– For control-dominated codes, predicate abstraction is too "eager"

• better to be more lazy about expensive deductions

– Propagating inexpensive deductions can produce substantial speedup

• roughly one order of magnitude for Windows examples

– Perhaps by applying the lessons of SAT, we can obtain the same kind of rapid performance improvements obtained in that area

• Note 2-3 orders of magnitude speedup in lazy model checking in 6 months!

Future work

• Procedure summaries

– Many similar subgraphs in unwinding due to procedure expansions

– Cannot handle recursion

– Can we use interpolants to compute approximate procedure summaries?

• Quantified interpolants

– Can be used to generate program invariants with quantifiers

– Works for simple examples, but need to prevent number of quantifiers from increasing without bound

• Richer theories

– In this work, all program variables modeled by integers

– Need an interpolating prover for bit vector theory

• Concurrency...

Unwinding the CFG

• An unwinding is a tree with an embedding in the CFG

[Diagram: the unwinding tree (vertices 0, 1, 2, 3, 4, 8) mapped onto the CFG by a vertex map $M_v$ and an edge map $M_e$; edges are labeled L=0, [L!=0], L=1; old=new, L=0; new++, [new!=old], [new==old]]

Expansion

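• Every non-leaf vertex of the unwinding must be fully expanded...

[Diagram callouts: "If this is not a leaf..." (unwinding vertex 0), "...and this exists..." (a CFG edge L=0 at its image under $M_v$), "...then this exists." (an unwinding edge L=0 to vertex 1, mapped by $M_e$)]

...but we allow unexpanded leaves (i.e., we are building a finite prefix of the infinite unwinding)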

Labeled unwinding

• A labeled unwinding is equipped with...

– a labeling function $\psi : V \to L(S)$

– a covering relation $\rhd \subseteq V \times V$

[Diagram: labeled unwinding with vertices 0 to 7, labels T, L=0 and F, and a covering arc]

These two nodes are covered (they have an ancestor at the tail of a covering arc).

Well-labeled unwinding

• An unwinding is well-labeled when...

– $\psi(\epsilon) = \text{True}$, where $\epsilon$ is the root vertex

– every edge is a valid Hoare triple

– if $x \rhd y$ then $y$ is not covered

[Diagram: labeled unwinding with vertices 0 to 7 and labels T, L=0 and F]

Safe and complete

• An unwinding is

– safe if every error vertex is labeled False

– complete if every nonterminal leaf is covered

[Diagram: labeled unwinding with vertices 0 to 10, labels T, L=0, old=new and F]

Theorem: A CFG with a safe complete unwinding is safe.
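The two conditions can be written down as simple checks over a toy data structure. In this sketch the unwinding is a parent map, labels are strings, and the sets of error vertices, leaves and terminal leaves are supplied by the caller; all of these names and representations are assumptions made for illustration.

```python
def is_covered(v, parent, covers):
    """A vertex is covered if it, or one of its ancestors, is the tail
    of a covering arc (x, y), meaning x is covered by y."""
    tails = {x for (x, _) in covers}
    while v is not None:
        if v in tails:
            return True
        v = parent.get(v)
    return False

def is_safe(error_vertices, label):
    """Safe: every error vertex is labeled False."""
    return all(label[v] == "False" for v in error_vertices)

def is_complete(leaves, terminal, parent, covers):
    """Complete: every nonterminal leaf is covered."""
    return all(is_covered(v, parent, covers)
               for v in leaves if v not in terminal)

# Tiny unwinding: 0 -> 1 -> {2 (error), 3 -> 4}
parent = {0: None, 1: 0, 2: 1, 3: 1, 4: 3}
label = {0: "True", 1: "L=0", 2: "False", 3: "True", 4: "L=0"}
safe = is_safe([2], label)
complete = is_complete([2, 4], {2}, parent, {(4, 1)})
incomplete = is_complete([2, 4], {2}, parent, set())
```

Here the error vertex is treated as a terminal leaf, which is an assumption of the example rather than something the slide states.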

Unwinding steps

• Three basic operations:

– Expand a nonterminal leaf

– Cover: add a covering arc

– Refine: strengthen labels along a path so error vertex labeled False

Overall algorithm

1. Do as much covering as possible

2. If a leaf can't be covered, try forced covering

3. If the leaf still can't be covered, expand it

4. Label all error states False by refining with an interpolant

5. Continue until unwinding is safe and complete