Review for Final Exam


GEOG090 - Quantitative Methods in Geography

Spring 2006

• The Scientific Method

– Exploratory methods (descriptive statistics)

– Confirmatory methods (inferential statistics)

• Mathematical Notation

– Summation notation

– Pi notation

– Factorial notation

– Combinations

Summation Notation: Components

$\sum_{i=1}^{n} x_i$

• $\sum$ indicates we are taking a sum

• $i = 1$ refers to where the sum of terms begins

• $n$ refers to where the sum of terms ends

• $x_i$ indicates what we are summing up

Summation Notation: Compound Sums

• We frequently use tabular data (or data drawn from

matrices), with which we can construct sums of

both the rows and the columns (compound sums),

using subscript i to denote the row index and the

subscript j to denote the column index:

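| | Column 1 | Column 2 | Column 3 |
|---|---|---|---|
| Row 1 | x11 | x12 | x13 |
| Row 2 | x21 | x22 | x23 |

$\sum_{i=1}^{2} \sum_{j=1}^{3} x_{ij} = (x_{11} + x_{12} + x_{13} + x_{21} + x_{22} + x_{23})$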
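As a minimal sketch of the compound sum, the snippet below adds up a 2 x 3 table by rows, by columns, and over both indices at once. The numbers in `x` are made up for illustration only.

```python
# Hypothetical 2 x 3 table: x[i][j] is the value in row i, column j.
x = [
    [1, 2, 3],
    [4, 5, 6],
]

row_sums = [sum(row) for row in x]        # sum over j for each row i
col_sums = [sum(col) for col in zip(*x)]  # sum over i for each column j

# Compound sum: add every x_ij, looping over rows (i) and then columns (j).
total = sum(x[i][j] for i in range(2) for j in range(3))

print(row_sums, col_sums, total)
```

The row sums and the column sums each add up to the same compound total.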

Pi Notation

• Whereas the summation notation refers to the

addition of terms, the product notation applies to

the multiplication of terms

• It is denoted by the capital Greek letter pi ($\Pi$), and is used in the same way as the

summation notation

$\prod_{i=1}^{n} x_i = x_1 \cdot x_2 \cdots x_n$

$\prod_{i=1}^{n} (x_i + y_i) = (x_1 + y_1)(x_2 + y_2) \cdots (x_n + y_n)$

Factorial

• The factorial of a positive integer, n, is equal to

the product of the first n integers

• Factorials can be denoted by an exclamation

point

$n! = \prod_{i=1}^{n} i$

$5! = 5 \times 4 \times 3 \times 2 \times 1 = 120 = \prod_{i=1}^{5} i$

• There is also a convention that 0! = 1

• Factorials are not defined for negative integers

or nonintegers

Combinations

• Combinations refer to the number of possible

outcomes that particular probability experiments

may have

• Specifically, the number of ways that r items may

be chosen from a group of n items is denoted by:

$\binom{n}{r} = \frac{n!}{r!(n-r)!}$

or

$C(n, r) = \frac{n!}{r!(n-r)!}$
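The factorial and combination formulas can be checked with a few lines of Python. This is only a sketch: the helper name `combinations` and the choice of n = 5, r = 2 are illustrative, and `math.comb` is used as an independent check.

```python
from math import comb, factorial


def combinations(n: int, r: int) -> int:
    """C(n, r) = n! / (r! (n - r)!), computed directly from the factorial formula."""
    return factorial(n) // (factorial(r) * factorial(n - r))


print(factorial(5))        # 5! = 120
print(factorial(0))        # 0! = 1 by convention
print(combinations(5, 2))  # ways to choose 2 items from 5
print(comb(5, 2))          # same result from the standard library
```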

C. Scales of Measurement

• The data used in statistical analyses can be divided

into four types:

1. The Nominal Scale

2. The Ordinal Scale

3. The Interval Scale

4. The Ratio Scale

As we progress through

these scales, the types

of data they describe

have increasing

information content

Which one is better: mean, median,

or mode?

• The mean is valid only for interval data or ratio

data.

• The median can be determined for ordinal data as

well as interval and ratio data.

• The mode can be used with nominal, ordinal,

interval, and ratio data

• Mode is the only measure of central tendency that

can be used with nominal data

Which one is better: mean, median,

or mode?

• It also depends on the nature of the distribution

[Figure: example distributions: multi-modal, unimodal symmetric, and unimodal skewed (two panels)]

Which one is better: mean, median,

or mode?

• It also depends on your goals

• Consider a company that has nine employees with

salaries of 35,000 a year, and their supervisor

makes 150,000 a year

• What if you are a recruiting officer for the

company that wants to make a good impression

on a prospective employee?

• The mean is (35,000 × 9 + 150,000)/10 = 46,500. I

would probably say, "The average salary in our

company is 46,500," using the mean

Source: http://www.shodor.org/interactivate/discussions/sd1.html
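The salary example can be reproduced with the Python standard library. This sketch only restates the numbers given above (nine salaries of 35,000 and one of 150,000) and shows how far the mean sits from the median and mode.

```python
from statistics import mean, median, mode

# Nine employees at 35,000 and one supervisor at 150,000.
salaries = [35_000] * 9 + [150_000]

print(mean(salaries))    # pulled upward by the single large salary
print(median(salaries))  # middle of the ordered salaries
print(mode(salaries))    # most frequent salary
```

The mean is the flattering figure for a recruiter, while the median and mode describe what a typical employee earns.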

Measures of dispersion

• Measures of Dispersion

– Range

– Variance

– Standard deviation

– Interquartile range

– z-score

– Coefficient of variation

Further Moments of the Distribution

• There are further statistics that describe the

shape of the distribution, using formulae that are

similar to those of the mean and variance

• 1st moment - Mean (describes central value)

• 2nd moment - Variance (describes dispersion)

• 3rd moment - Skewness (describes asymmetry)

• 4th moment - Kurtosis (describes peakedness)

How to Graphically Summarize Data?

• Histograms

• Box plots

Functions of a Histogram

• The function of a histogram is to graphically

summarize the distribution of a data set

• The histogram graphically shows the following:

1. Center (i.e., the location) of the data

2. Spread (i.e., the scale) of the data

3. Skewness of the data

4. Kurtosis of the data

5. Presence of outliers

6. Presence of multiple modes in the data

Box Plots

• We can also use a box plot to graphically

summarize a data set

• A box plot represents a graphical summary of

what is sometimes called a “five-number

summary” of the distribution

– Minimum

– Maximum

– 25th percentile

– 75th percentile

– Median

• Interquartile Range (IQR)

[Figure: box plot marking the min., 25th percentile, median, 75th percentile, and max. Source: Rogerson, p. 8.]

How To Assign Probabilities

to Experimental Outcomes?

• There are numerous ways to assign probabilities

to the elements of sample spaces

• Classical method assigns probabilities based on

the assumption of equally likely outcomes

• Relative frequency method assigns probabilities

based on experimentation or historical data

• Subjective method assigns probabilities based on

the assignor’s judgment or belief

Probability-Related Concepts

• An event – Any phenomenon you can observe that

can have more than one outcome (e.g., flipping a

coin)

• An outcome – Any unique condition that can be

the result of an event (e.g., flipping a coin: heads or

tails), a.k.a. a simple event or sample point

• Sample space – The set of all possible outcomes

associated with an event

– e.g., flip a coin – heads (H) and tails (T)

– e.g., flip a coin twice – HH, HT, TH, TT

Probability-Related Concepts

• Associated with each possible outcome in a

sample space is a probability

• Probability is a measure of the likelihood of

each possible outcome

• Probability measures the degree of uncertainty

• Each of the probabilities is greater than or equal

to zero, and less than or equal to one

• The sum of probabilities over the sample space

is equal to one

Probability Rules

• Rules for combining multiple probabilities

• A useful aid is the Venn diagram - depicts multiple

probabilities and their relations using a graphical

depiction of sets

• The rectangle that forms the area of

the Venn Diagram represents the

sample (or probability) space, which

we have defined above

• Figures that appear within the

sample space are sets that represent

events in the probability context, &

their area is proportional to their

probability (full sample space = 1)

[Figure: Venn diagram showing events A and B inside the sample space]

Probability Mass Function

• Example: # of malls in cities

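| xi | p(X = xi) |
|---|---|
| 1 | 1/6 = 0.167 |
| 2 | 1/6 = 0.167 |
| 3 | 1/6 = 0.167 |
| 4 | 3/6 = 0.5 |

[Figure: plot of p(xi) against xi for xi = 1 to 4, with the vertical axis running from 0 to 0.50]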

• This plot uses thin lines to denote that the

probabilities are massed at discrete values of

this random variable

[Figure: density curve f(x) plotted against x, with the area between a and b shaded]

• The probability of a continuous random variable

X within an arbitrary interval is given by:

$p(a \le X \le b) = \int_{a}^{b} f(x)\,dx$

• Simply calculate the shaded area; if we

know the density function, we can use calculus to do so

Discrete Probability Distributions

• Discrete probability distributions

– The Uniform Distribution

– The Binomial Distribution

– The Poisson Distribution

• Each is appropriately applied in certain

situations and to particular phenomena

Source: http://en.wikipedia.org/wiki/Uniform_distribution_(discrete)

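$f(x) = \begin{cases} 1/(b - a + 1) = 1/n & a \le x \le b \\ 0 & \text{otherwise} \end{cases}$

$F(x) = P(X \le x) = \begin{cases} 0 & x < a \\ (x - a + 1)/(b - a + 1) & a \le x \le b \\ 1 & x > b \end{cases}$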

The Uniform Distribution

• Example – Predict the direction of the prevailing

wind with no prior knowledge of the weather

system’s tendencies in the area

• We would have to begin with the idea that P(xNorth) = 1/4, P(xEast) = 1/4, P(xSouth) = 1/4, and P(xWest) = 1/4, until we had an opportunity to sample and find out some tendency in the wind pattern based on those observations

[Figure: plot of P(xi) for N, E, S, and W, each with height 0.25]

The Binomial Distribution – Example

• Naturally, we can plot the probability mass

function produced by this binomial distribution:

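| xi | P(xi) |
|---|---|
| 0 | 0.4096 |
| 1 | 0.4096 |
| 2 | 0.1536 |
| 3 | 0.0256 |
| 4 | 0.0016 |

[Figure: plot of P(xi) against xi for xi = 0 to 4, with the vertical axis running from 0 to 0.50]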
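The tabulated probabilities are consistent with a binomial distribution with n = 4 trials and a success probability of p = 0.2. Those two parameters are inferred from the numbers in the table and are not stated on this slide, so treat the sketch below as a check under that assumption.

```python
from math import comb


def binomial_pmf(x: int, n: int, p: float) -> float:
    """P(X = x) = C(n, x) * p^x * (1 - p)^(n - x)."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)


n, p = 4, 0.2  # assumed parameters, inferred from the table
pmf = [binomial_pmf(x, n, p) for x in range(n + 1)]

for x, prob in enumerate(pmf):
    print(x, round(prob, 4))
```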

The Poisson Distribution

• Poisson distribution

$P(x) = \frac{e^{-\lambda} \lambda^{x}}{x!}$

• The shape of the distribution depends strongly

upon the value of λ, because as λ increases, the

distribution becomes less skewed, eventually

approaching a normal-shaped distribution as it

gets quite large

• We can evaluate P(x) for any value of x, but large

values of x will have very small values of P(x)
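A short sketch of the Poisson probability P(x) = e^(-λ) λ^x / x! follows. The value λ = 2 is a hypothetical choice for illustration; it does not come from the notes.

```python
from math import exp, factorial


def poisson_pmf(x: int, lam: float) -> float:
    """P(X = x) for a Poisson distribution with mean lam."""
    return exp(-lam) * lam**x / factorial(x)


lam = 2.0  # hypothetical mean number of events
probs = [poisson_pmf(x, lam) for x in range(21)]

for x in (0, 1, 2, 5, 10, 20):
    print(x, probs[x])  # probabilities shrink quickly for large x
```

For λ = 2 the probabilities at x = 10 and x = 20 are already tiny, which is the behavior described in the last bullet.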

Source: http://en.wikipedia.org/wiki/Normal_distribution

Finding the P(x) for Various Intervals

1. $P(Z \ge a)$ = (table value)

• Table gives the value of P(x) in the tail above a

2. $P(Z \le a)$ = [1 – (table value)]

• Total area under the curve = 1, and we subtract the area of the tail

3. $P(0 \le Z \le a)$ = [0.5 – (table value)]

• Total area under the curve = 1, thus the area above 0 is equal to 0.5, and we subtract the area of the tail

Finding the P(x) for Various Intervals

4. $P(Z \le -a)$ = (table value)

• Table gives the value of P(x) in the tail below –a, equivalent to $P(Z \ge a)$ when a is positive

5. $P(Z \ge -a)$ = [1 – (table value)]

• This is equivalent to $P(Z \le a)$ when a is positive

6. $P(-a \le Z \le 0)$ = [0.5 – (table value)]

• This is equivalent to $P(0 \le Z \le a)$ when a is positive

Finding the P(x) for Various Intervals

7. $P(a \le Z \le b)$ if a < 0 and b > 0

= (0.5 – P(Z<a)) + (0.5 – P(Z>b))

= 1 – P(Z<a) – P(Z>b)

or

= [0.5 – (table value for a)] + [0.5 – (table value for b)]

= [1 – {(table value for a) + (table value for b)}]

• With this set of building blocks, you should be able to

calculate the probability for any interval using a standard

normal table
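The building blocks can be checked numerically. In this sketch, `statistics.NormalDist` stands in for the printed table: the helper `table_value` (a name introduced here) returns the upper-tail area that a tail-area z-table would list, and the cut-offs a = 1.0 and b = 1.5 are hypothetical.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal: mean 0, standard deviation 1


def table_value(a: float) -> float:
    """Upper-tail area P(Z >= |a|), as a tail-area z-table would list it."""
    return 1 - Z.cdf(abs(a))


a, b = 1.0, 1.5  # hypothetical cut-offs

p1 = table_value(a)        # P(Z >= a)
p2 = 1 - table_value(a)    # P(Z <= a)
p3 = 0.5 - table_value(a)  # P(0 <= Z <= a)
p7 = 1 - table_value(-a) - table_value(b)  # P(-a <= Z <= b)

print(round(p1, 4), round(p2, 4), round(p3, 4), round(p7, 4))
```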

The Central Limit Theorem

• Suppose we draw a random sample of size n (x1, x2, x3, …, xn–1, xn) from a population random variable that is distributed with mean µ and standard deviation σ

• Do this repeatedly, drawing many samples from the population, and then calculate the $\bar{x}$ of each sample

• We will treat the $\bar{x}$ values as another distribution, which we will call the sampling distribution of the mean ($\bar{X}$)

The Central Limit Theorem

• Given a distribution with a mean μ and variance σ², the

sampling distribution of the mean approaches a

normal distribution with a mean (μ) and a variance

σ²/n as n, the sample size, increases

• The amazing and counterintuitive thing about the

central limit theorem is that no matter what the shape

of the original (parent) distribution, the sampling

distribution of the mean approaches a normal

distribution
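A small simulation can illustrate the theorem. The sketch below assumes an exponential parent distribution with rate 1 (so μ = 1 and σ² = 1), a sample size of n = 30, and 5,000 repeated samples; all of these are arbitrary choices made for illustration.

```python
import random
from statistics import mean, pvariance

random.seed(0)    # fixed seed so the sketch is repeatable
n = 30            # size of each sample (assumed)
n_samples = 5000  # number of repeated samples (assumed)

# Parent distribution: exponential with rate 1 (mean 1, variance 1), strongly skewed.
sample_means = [
    mean([random.expovariate(1.0) for _ in range(n)])
    for _ in range(n_samples)
]

print(mean(sample_means))       # should be close to mu = 1
print(pvariance(sample_means))  # should be close to sigma^2 / n = 1/30
```

A histogram of `sample_means` would look roughly bell-shaped even though the parent distribution is strongly skewed.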

Confidence Intervals for the Mean

• More generally, a (1- α)*100% confidence interval

around the sample mean is:

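$\mathrm{pr}\left[\bar{x} - z_{\alpha}\frac{\sigma}{\sqrt{n}} \le \mu \le \bar{x} + z_{\alpha}\frac{\sigma}{\sqrt{n}}\right] = 1 - \alpha$

• Standard error: $\sigma / \sqrt{n}$

• Margin of error: $z_{\alpha}\,\sigma / \sqrt{n}$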

• Where zα is the value taken from the z-table that

is associated with a fraction α of the weight in the

tails (and therefore α/2 is the area in each tail)
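As a sketch of the calculation, the function below builds a (1 - α) × 100% interval around a sample mean when σ is known. The function name and the example values (sample mean 50, σ = 10, n = 25) are hypothetical, and `NormalDist().inv_cdf` stands in for the z-table lookup with α/2 in each tail.

```python
from math import sqrt
from statistics import NormalDist


def z_confidence_interval(xbar: float, sigma: float, n: int, alpha: float = 0.05):
    """Return (lower, upper) bounds of the (1 - alpha) confidence interval."""
    z = NormalDist().inv_cdf(1 - alpha / 2)  # z with alpha/2 in each tail
    standard_error = sigma / sqrt(n)
    margin_of_error = z * standard_error
    return xbar - margin_of_error, xbar + margin_of_error


lower, upper = z_confidence_interval(xbar=50.0, sigma=10.0, n=25)
print(round(lower, 2), round(upper, 2))
```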

Hypothesis Testing

• One-sample tests

– One-sample tests for the mean

– One-sample tests for proportions

• Two-sample tests

– Two-sample tests for the mean

Hypothesis Testing

1. State the null hypothesis, H0

2. State the alternative hypothesis, HA

3. Choose α, our significance level

4. Select a statistical test, and find the observed test

statistic

5. Find the critical value of the test statistic

6. Compare the observed test statistic with the critical

value, and decide to accept or reject H0

Hypothesis Testing - Errors

| | H0 is true | H0 is false |
|---|---|---|
| Accept H0 | Correct decision (1-α) | Type II Error (β) |
| Reject H0 | Type I Error (α) | Correct decision (1-β) |

p-value

• The p-value is the probability of getting a value of the test

statistic as extreme as or more extreme than that observed

by chance alone, if the null hypothesis, H0, is true.

• It is the probability of wrongly rejecting the null

hypothesis if it is in fact true

• It is equal to the significance level of the test for which

we would only just reject the null hypothesis

p-value

• p-value vs. significance level

• Small p-values → the null hypothesis is unlikely to be

true

• The smaller it is, the more convincing is the rejection of

the null hypothesis

One-Sample t-Tests

Data: Acidity data have been collected for a population of

~6000 lakes in Ontario, with a mean pH of μ = 6.69 and σ

= 0.83. A group of 27 lakes in a particular region of

Ontario with acidic conditions is sampled and is found to

have a mean pH of x̄ = 6.16 and s = 0.60.

Research question: Are the lakes in that particular region

more acidic than the lakes throughout Ontario?
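One way to work this example is sketched below, assuming the usual one-sample statistic t = (x̄ - μ) / (s / √n) with n - 1 degrees of freedom and a one-tailed test at α = 0.05. The formula and the choice of α are assumptions here, since the slide gives only the data and the research question.

```python
from math import sqrt

mu = 6.69    # population mean pH
xbar = 6.16  # sample mean pH
s = 0.60     # sample standard deviation
n = 27       # number of lakes sampled

t = (xbar - mu) / (s / sqrt(n))
df = n - 1

# One-tailed critical value for alpha = 0.05 and df = 26, read from a t-table.
t_critical = -1.706

print(round(t, 2), df)
print("reject H0" if t < t_critical else "do not reject H0")
```

Under these assumptions the observed statistic falls well below the critical value, so the sampled lakes appear more acidic than Ontario lakes overall.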

One-Sample Tests for Proportions

• Data: A citywide survey finds that the proportion of

households that own cars is p0 = 0.2. We survey 50

households and find that 16 of them own a car (p = 16/50

= 0.32)

• Research question: Is the proportion of households in

our survey that has a car different from the proportion

found in the citywide survey?
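A sketch of this test follows, assuming the usual statistic z = (p - p0) / √(p0(1 - p0)/n) and a two-tailed p-value from the standard normal distribution. The formula is an assumption, as the slide gives only the data and the research question.

```python
from math import sqrt
from statistics import NormalDist

p0 = 0.2     # citywide proportion
n = 50       # households surveyed
p = 16 / n   # sample proportion, 0.32

standard_error = sqrt(p0 * (1 - p0) / n)
z = (p - p0) / standard_error

# Two-tailed p-value: area in both tails beyond |z|.
p_value = 2 * (1 - NormalDist().cdf(abs(z)))

print(round(z, 2), round(p_value, 3))
```

With α = 0.05 (a hypothetical choice), a p-value below 0.05 would lead us to reject H0 and conclude that the surveyed proportion differs from the citywide one.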

Two-Sample t-tests

•Variances are equal (homoscedasticity)

$t_{test} = \frac{|\bar{x}_1 - \bar{x}_2|}{s_p \sqrt{(1/n_1) + (1/n_2)}}$

Pooled estimate of the standard deviation:

$s_p = \sqrt{\frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}}$

$df = n_1 + n_2 - 2$

Two-Sample t-tests

• Variances are unequal

$t_{test} = \frac{|\bar{x}_1 - \bar{x}_2|}{\sqrt{(s_1^2 / n_1) + (s_2^2 / n_2)}}$

$df = \min[(n_1 - 1), (n_2 - 1)]$

Matched pairs t-tests

(x11, x12, …, x1n)

(x21, x22, …, x2n)

• Paired observations → reduced independence

of observations

• Matched pairs t-test

Matched Pairs t-tests

$t_{test} = \frac{|\bar{d}|}{s_d / \sqrt{n}}$

$s_d = \sqrt{\frac{\sum (d_i - \bar{d})^2}{n - 1}}$

ANOVA

• Null hypothesis

H0: $\mu_1 = \mu_2 = \dots = \mu_k$

• Alternative hypothesis

– Not all means are equal

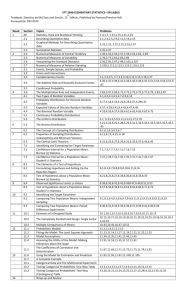

ANOVA Table

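| Source of Variation | Sum of Squares | Degrees of Freedom | Mean Square Variance | F-Test |
|---|---|---|---|---|
| Between Groups | BSS | k-1 | BSS/(k-1) | [BSS/(k-1)] / [WSS/(N-k)] |
| Within Groups | WSS | N-k | WSS/(N-k) | |
| Total Variation | TSS | N-1 | | |

Critical value: $F_{\alpha,\,k-1,\,N-k}$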

Linear Correlation

Source: Earickson, RJ, and Harlin, JM. 1994. Geographic Measurement and

Quantitative Analysis. USA: Macmillan College Publishing Co., p. 209.

Covariance Formulae

$Cov[X, Y] = \frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})$

Covariance Example

| TVDI (x) | Soil Moisture (y) | (x - xbar) | (y - ybar) | (x - xbar) * (y - ybar) |
|---|---|---|---|---|
| 0.274 | 0.414 | -0.227 | 0.107 | -0.024305 |
| 0.542 | 0.359 | 0.042 | 0.052 | 0.0021624 |
| 0.419 | 0.396 | -0.082 | 0.090 | -0.007323 |
| 0.286 | 0.458 | -0.215 | 0.151 | -0.032424 |
| 0.374 | 0.350 | -0.127 | 0.044 | -0.005553 |
| 0.489 | 0.357 | -0.011 | 0.050 | -0.000566 |
| 0.623 | 0.255 | 0.122 | -0.052 | -0.006374 |
| 0.506 | 0.189 | 0.005 | -0.118 | -0.000618 |
| 0.768 | 0.171 | 0.267 | -0.136 | -0.036282 |
| 0.725 | 0.119 | 0.225 | -0.188 | -0.042289 |

Mean: xbar = 0.501, ybar = 0.307

Sum of products: -0.15357

Covariance: -0.15357 / (n - 1) = -0.017063
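The worked example can be reproduced from the TVDI and soil moisture values listed above. This is a sketch that follows the same steps (means, deviations, products, sum, division by n - 1); because the slide rounds intermediate values, the result here differs from -0.017063 in the fifth decimal place.

```python
tvdi = [0.274, 0.542, 0.419, 0.286, 0.374, 0.489, 0.623, 0.506, 0.768, 0.725]
soil = [0.414, 0.359, 0.396, 0.458, 0.350, 0.357, 0.255, 0.189, 0.171, 0.119]

n = len(tvdi)
x_bar = sum(tvdi) / n  # step 1: means
y_bar = sum(soil) / n

# Steps 2 and 3: deviations from the means and their products.
products = [(x - x_bar) * (y - y_bar) for x, y in zip(tvdi, soil)]

# Steps 4 and 5: sum the products and divide by n - 1.
covariance = sum(products) / (n - 1)

print(round(x_bar, 3), round(y_bar, 3), round(covariance, 4))
```

The negative sign indicates that higher TVDI values tend to go with lower soil moisture.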

Pearson’s product-moment

correlation coefficient

$r = \frac{Cov[X, Y]}{s_X s_Y}$

$r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{(n - 1)\, s_X s_Y}$

$r = \frac{\sum_{i=1}^{n} Z_x Z_y}{n - 1}$
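The two forms of r (covariance divided by the product of the standard deviations, and the sum of z-score products divided by n - 1) can be checked against each other. The sketch below reuses the TVDI and soil moisture values from the covariance example; the variable names are illustrative.

```python
from math import sqrt

x = [0.274, 0.542, 0.419, 0.286, 0.374, 0.489, 0.623, 0.506, 0.768, 0.725]
y = [0.414, 0.359, 0.396, 0.458, 0.350, 0.357, 0.255, 0.189, 0.171, 0.119]

n = len(x)
x_bar, y_bar = sum(x) / n, sum(y) / n

# Sample standard deviations (n - 1 in the denominator).
s_x = sqrt(sum((xi - x_bar) ** 2 for xi in x) / (n - 1))
s_y = sqrt(sum((yi - y_bar) ** 2 for yi in y) / (n - 1))

cov = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / (n - 1)
r_from_cov = cov / (s_x * s_y)

# Same coefficient from the z-scores of x and y.
r_from_z = sum(
    ((xi - x_bar) / s_x) * ((yi - y_bar) / s_y) for xi, yi in zip(x, y)
) / (n - 1)

print(round(r_from_cov, 3), round(r_from_z, 3))
```

Both forms give the same strongly negative correlation, roughly -0.87 for these data.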

A Significance Test for r

$t_{test} = \frac{r}{SE_r} = \frac{r}{\sqrt{\frac{1 - r^2}{n - 2}}} = \frac{r \sqrt{n - 2}}{\sqrt{1 - r^2}}$

$df = n - 2$

Spearman’s Rank

Correlation Coefficient

$r_s = 1 - \frac{6 \sum_{i=1}^{n} d_i^2}{n^3 - n}$
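A minimal sketch of the rank correlation follows, where d_i is the difference between the ranks of the i-th pair. The helper names and the five data pairs are hypothetical, and the ranking helper assumes there are no tied values.

```python
def ranks(values):
    """Rank values from 1 (smallest) to n; assumes there are no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        result[i] = rank
    return result


def spearman_rs(x, y):
    """r_s = 1 - 6 * sum(d_i^2) / (n^3 - n), with d_i the rank differences."""
    n = len(x)
    d_squared = sum((rx - ry) ** 2 for rx, ry in zip(ranks(x), ranks(y)))
    return 1 - 6 * d_squared / (n**3 - n)


x = [10, 20, 30, 40, 50]        # hypothetical observations
y = [2.0, 1.0, 4.0, 3.0, 5.0]
print(ranks(y), spearman_rs(x, y))
```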

A Significance Test for rs

$SE_{r_s} = \frac{1}{\sqrt{n - 1}}$

$t_{test} = \frac{r_s}{SE_{r_s}} = r_s \sqrt{n - 1}$

$df = n - 1$

Correlation → Regression

• Correlation → Direction & Strength

• We might wish to go a little further

• Rate of change

• Predictability

Simple Linear Regression

• Model

y = a + bx + e

x: independent variable

y: dependent variable

b: slope

a: intercept

e: error term

[Figure: fitted line on axes x (independent) and y (dependent), with the intercept a, the slope b, and the error marked]

Fitting a Line to a Set of Points

• Scatterplot → fitting a line

• Least squares method

• Minimize the error term e

[Figure: scatterplot with a fitted line, on axes x (independent) and y (dependent)]

Minimizing the SSE

$\min_{a,b} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = \min_{a,b} \sum_{i=1}^{n} (y_i - a - b x_i)^2$

Finding Regression Coefficients

• Least squares method

$b = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}$

$a = \bar{y} - b\bar{x}$
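The least squares slope and intercept can be computed directly from their formulas. The five (x, y) pairs below are made up for illustration.

```python
x = [1, 2, 3, 4, 5]  # hypothetical independent variable
y = [2, 4, 5, 4, 5]  # hypothetical dependent variable

n = len(x)
x_bar = sum(x) / n
y_bar = sum(y) / n

# Slope: sum of cross-products of deviations over sum of squared x deviations.
b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sum(
    (xi - x_bar) ** 2 for xi in x
)
# Intercept: the fitted line passes through the point (x_bar, y_bar).
a = y_bar - b * x_bar

print(round(b, 3), round(a, 3))
```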

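Coefficient of Determination (r²)

• Regression sum of squares (SSR)

$SSR = \sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2$

• Total sum of squares (SST)

$SST = \sum_{i=1}^{n} (y_i - \bar{y})^2$

• Coefficient of determination (r²)

$r^2 = \frac{SSR}{SST}$

[Figure: scatterplot showing an observed y, its fitted value ŷ, and the mean ȳ]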
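As a sketch, r² can be computed by fitting the least squares line and then comparing the regression sum of squares with the total sum of squares. The function name and the data are hypothetical.

```python
def r_squared(x, y):
    """Return SSR / SST for a simple least squares fit of y on x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sum(
        (xi - x_bar) ** 2 for xi in x
    )
    a = y_bar - b * x_bar
    y_hat = [a + b * xi for xi in x]                # fitted values
    ssr = sum((yh - y_bar) ** 2 for yh in y_hat)  # regression sum of squares
    sst = sum((yi - y_bar) ** 2 for yi in y)      # total sum of squares
    return ssr / sst


x = [1, 2, 3, 4, 5]  # hypothetical data
y = [2, 4, 5, 4, 5]
print(round(r_squared(x, y), 3))
```

For these made-up points the line explains 60% of the variation in y.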

Regression ANOVA Table

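| Component | Sum of Squares | df | Mean Square | F |
|---|---|---|---|---|
| Regression (SSR) | $\sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2$ | 1 | SSR / 1 | MSSR / MSSE |
| Error (SSE) | $\sum_{i=1}^{n} (y_i - \hat{y}_i)^2$ | n - 2 | SSE / (n - 2) | |
| Total (SST) | $\sum_{i=1}^{n} (y_i - \bar{y})^2$ | n - 1 | | |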

Significance Test for Slope (b)

• H0: b = 0

$t_{test} = \frac{b}{s_b}$

$s_b$ is the standard deviation of the slope parameter:

$s_b = \sqrt{\frac{s_e^2}{(n - 1)\, s_x^2}}$

$df = (n - 2)$

Significance Test for

Regression Intercept

$t_{test} = \frac{a}{s_a}$

where $s_a$ is the standard deviation of the intercept:

$s_a = \sqrt{s_e^2\, \frac{\sum x_i^2}{n \sum (x_i - \bar{x})^2}}$

and degrees of freedom = (n - 2)

Sampling designs

• Non-probability designs

- Not concerned with being representative

• Probability designs

- Aim to be representative of the population

Non-probability Sampling Designs

• Volunteer sampling

- Self-selecting

- Convenient

- Rarely representative

• Quota sampling

- Fulfilling counts of sub-groups

• Convenience sampling

- Availability/accessibility

• Judgmental or purposive sampling

- Preconceived notions

Probability Sampling Designs

• Random sampling

• Systematic sampling

• Stratified sampling

Point Pattern Analysis

Regular

Random

Clustered

Point Pattern Analysis

1. The Quadrat Method

2. Nearest Neighbor Analysis