Fast Motion Fly Tracking by Adaptive LBP and Cascaded Data

Proceedings of the 7th Annual ISC Graduate Research Symposium

ISC-GRS 2013

April 24, 2013, Rolla, Missouri

Mingzhong Li

Department of Engineering Management

Department of Computer Science

Missouri University of Science and Technology, Rolla, MO 65409

FAST MOTION FLY TRACKING BY ADAPTIVE-LBP AND CASCADED DATA ASSOCIATION

Mingzhong Li, Zhaozheng Yin, Ruwen Qin

ABSTRACT

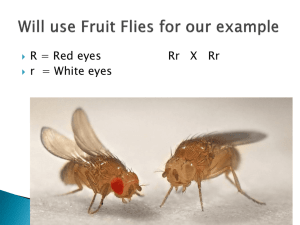

Learning the behavior patterns of fruit flies can inform us about the molecular mechanisms and biochemical pathways that drive human behavior, because flies exhibit behaviors analogous to human motivations. A glass chamber was built to house flies, and their behaviors in this container are recorded in time-lapse videos. To address several challenges in data analysis, such as low image contrast, small object size and fast object motion, we propose an adaptive Local Binary Pattern feature to detect flies and develop a cascaded data association approach with fine-to-coarse gating region control to track flies in the spatial-temporal domain. Our approach is validated on two long video sequences and achieves a Multiple Object Tracking Accuracy above 0.97 on both, with good performance on fast motion in particular, showing its potential to enable automatic characterization of biological processes.

ml424@mst.edu, yinz@mst.edu, qinr@mst.edu

Index Terms: Multi-object tracking, adaptive LBP feature, fast motion, cascaded data association

1. INTRODUCTION

Behavioral analysis of model organisms can inform us about the molecular mechanisms and biochemical pathways that drive human behavior. Specifically, studying the fruit fly offers distinct advantages over studying humans, including sophisticated behaviors that mimic human motivations, short generation times, and the ability to mutate and alter genes to study the role of specific genes. We have established a novel behavioral paradigm in which flies are housed in a 7in x 7in x 1.5in open field with water and food provided (Fig.1). Within the glass chamber, we diffuse and change the light to simulate the day/night transition and control the temperature and air pressure to simulate different weather conditions. Flies are free to move anywhere they choose, including walking, flying, and interacting with other males and females. These behaviors depend on the positions of the flies in relation to one another, but it is difficult to assess the behaviors manually over months, which motivates us to develop an automated multiple object tracking approach to quantitatively analyze the behaviors of flies in time-lapse images.

Figure.1. Flies in the chamber

There are three main challenges for our fly tracking problem: (1) the contrast between the flies and their surrounding background is low (Fig.1.1-1.3), making automatic object detection hard; (2) the size of a fly (around 3-6 pixels in Fig.1) is small and the appearances of flies are nearly indistinguishable from each other, so we cannot extract rich usable feature descriptors on flies to build distinctive object models, making the appearance-based object tracking methods [9] unsuitable here; (3) the flies can fly at a maximum speed of 1.7 meters/second [4], or about 30 pixels per frame in videos captured by a 120fps video camera with a resolution of 480x848 pixels. The motion blur caused by fast motion (Fig.1.4) makes object detection hard. Furthermore, the displacement of a fast-moving fly between two consecutive frames is 5 to 10 times its object size, challenging the data-association-based tracking methods that rely on good object detection performance and continuous motion [1, 7].

In this paper, we propose to address these challenges with the following contributions: (1) An Adaptive Local Binary Pattern (ALBP) feature is developed to classify pixels into objects and background, addressing the challenges of fly detection caused by low image contrast, lighting fluctuation and motion blur; (2) A cascaded data association approach is proposed to match objects between consecutive images, link short trajectories within image subsequences, and connect long trajectories between image subsequences; (3) A fine-to-coarse mechanism to control gating regions in the spatial-temporal domain is proposed to effectively remove false positive trajectories and link broken trajectories caused by fast motion.

2. METHODOLOGY

2.1. Introducing ALBP Feature for Fly Detection

Considering the background variation caused by light transition over time, light diffusion and reflection on the glass, it is not easy to build and update an accurate background model to detect flies by background subtraction. Simply thresholding the images in Fig.2(b) by the Otsu method [6] does not work well either, as shown in Fig.2(c).

Figure.2. Fly detection. (a) Input image; (b) Zoom-in details of four sub-images in (a); (c) Segmentation by Otsu thresholding (white and black denote fly and background pixels, respectively); (d) Classify each pixel into fly or background by its LBP feature; (e) Classify pixels by constant thresholded LBP features; (f) Classify pixels by our adaptive LBP feature; (g) Detected flies by grouping nearby classified fly pixels.

To distinguish the small contrast between flies and their surrounding background, we explore the Local Binary Pattern (LBP, [5]) feature that characterizes the local spatial structure of the image texture. Given the center pixel $I_c$, a binary code is computed by comparing $I_c$ with its neighboring pixels $I_n$:

$$\mathrm{LBP} = \sum_{n=0}^{N-1} s(I_n - I_c)\,2^n \tag{1}$$

where $s(x)$ is a step function, i.e., $s(x) = 0$ if $x < 0$ and $s(x) = 1$ otherwise. $N$ is the number of neighbors (e.g., $N = 8$ for a $3 \times 3$ neighborhood). Due to the fluctuation of intensity values, different flies in an image may exhibit different LBP features. We train and apply a Support Vector Machine (SVM) classifier on the LBP features to classify image pixels into flies and background. However, classification using the LBP feature does not generate good results, as shown in Fig.2(d).

To increase the robustness to intensity fluctuation, we introduce a threshold $T$ into the step function in Eq.1, i.e., $s(x) = 0$ if $x < T$ and $s(x) = 1$ otherwise. When using the same $T$ to get the thresholded LBP for all image pixels, the classification does not work well, as shown in Fig.2(e). This is because the contrast between flies and their background varies in different regions of an image. Therefore, we propose an Adaptive LBP (ALBP) feature by adapting the threshold $T$ at different locations $(x, y)$:

$$T(x,y) = \begin{cases} T_L, & \text{if } \mu(x,y) \le \mu_L, \\ T_H, & \text{if } \mu(x,y) \ge \mu_H, \\ \dfrac{T_H - T_L}{\mu_H - \mu_L}\,\bigl(\mu(x,y) - \mu_L\bigr) + T_L, & \text{otherwise,} \end{cases} \tag{2}$$

where $\mu(x,y)$ computes the mean intensity value within a patch around $(x,y)$ (e.g., the patch size is $11 \times 11$ in this paper). The parameters $(\mu_H, \mu_L, T_H, T_L)$ in Eq.2 are learned from a training set of fly pixels $\{I_i\}$ with their corresponding $\{\mu_i\}$: $\mu_H = \max_i \mu_i$, and $T_H$ is set from the difference between $I_{i^*}$ and $\mu_H$, where $i^* = \arg\max_i \mu_i$. Similarly, we define $\mu_L$ and $T_L$.

We train and apply an SVM classifier on the ALBP feature to classify pixels into flies and background, as shown in Fig.2(f), which outperforms the results of Otsu thresholding, the original LBP and the constant thresholded LBP. Finally, the nearby fly pixels are grouped into fly objects. The detected flies corresponding to Fig.2(a) are shown in Fig.2(g).
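The thresholded LBP code and the adaptive threshold of Eq.2 can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' implementation: the neighbor ordering, the function names, the name `mu` for the local patch mean, and the clipping of the patch at the image border are all assumptions, and Eq.2 is read as a linear interpolation between the two learned operating points that is clamped outside them.

```python
# Offsets of the 8 neighbors in a 3x3 neighborhood (the ordering is an assumption).
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def step(x, threshold=0.0):
    """Step function s(x): 0 if x < threshold, 1 otherwise."""
    return 0 if x < threshold else 1


def lbp_code(image, row, col, threshold=0.0):
    """Thresholded LBP code of an interior pixel; threshold=0 gives the plain LBP of Eq.1."""
    center = image[row][col]
    code = 0
    for n, (dr, dc) in enumerate(NEIGHBORS):
        code += step(image[row + dr][col + dc] - center, threshold) << n
    return code


def patch_mean(image, row, col, half=5):
    """Mean intensity in a (2*half+1)-wide square patch, clipped at the image border."""
    rows = range(max(0, row - half), min(len(image), row + half + 1))
    cols = range(max(0, col - half), min(len(image[0]), col + half + 1))
    values = [image[r][c] for r in rows for c in cols]
    return sum(values) / len(values)


def adaptive_threshold(mu, mu_low, mu_high, t_low, t_high):
    """Piecewise-linear threshold T as a function of the local mean mu (Eq.2)."""
    if mu <= mu_low:
        return t_low
    if mu >= mu_high:
        return t_high
    return (t_high - t_low) / (mu_high - mu_low) * (mu - mu_low) + t_low


def albp_code(image, row, col, mu_low, mu_high, t_low, t_high):
    """Adaptive LBP: the step threshold depends on the mean intensity around the pixel."""
    mu = patch_mean(image, row, col)
    return lbp_code(image, row, col, adaptive_threshold(mu, mu_low, mu_high, t_low, t_high))
```

For a dark pixel of intensity 50 surrounded by neighbors of intensity 100, the plain LBP code sets all eight bits, while a constant threshold of 60 clears all of them; the adaptive version chooses the threshold from the local brightness instead of fixing it for the whole image.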

2.2. Cascaded Data Association System with Fine-to-Coarse Gating Region Control

A cascaded data association approach consisting of four steps is proposed to link tracklets at growing levels of discontinuity, as schematically illustrated in Fig.3:

(1) The video sequence is divided into many subsequences (Fig.3(a)). Each subsequence consists of ten thousand images in this paper. There are two reasons to apply this divide-and-conquer technique here: (a) reduce a large-scale optimization problem into many small-scale solvable optimization problems, and (b) enable fast parallel processing of the subsequences. For example, monitoring flies from their birth to death lasts four months. About 1.2 billion images will be recorded at 120fps, which would result in billions of tracklets (short trajectories) and make it infeasible on a normal workstation to optimally link all the tracklets in a single optimization problem with billions of variables.

(2) Detected flies between consecutive frames are matched into tracklets. Due to camouflage and fast motion, some flies are not detected in the current image and are re-detected in later images, resulting in broken tracklets in the spatio-temporal domain (Fig.3(b)).

(3) The short tracklets within each subsequence are linked into long trajectories by iteratively solving a Linear Assignment Problem (LAP) with fine-to-coarse gating region control in the spatio-temporal domain (Fig.3(c)).

(4) The long trajectories of all subsequences are sequentially connected by solving a series of LAPs to form the complete trajectories of all flies (Fig.3(d)).

Figure.3. The scheme of our cascaded data association approach for object tracking.
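The scale argument behind the divide-and-conquer step can be checked with a short calculation. The sketch below assumes that four months means 120 days; the helper names are hypothetical.

```python
import math

FPS = 120                      # frame rate stated in the text
SECONDS_PER_DAY = 24 * 60 * 60
DAYS = 120                     # assumption: four months taken as 120 days
SUBSEQUENCE_LENGTH = 10_000    # images per subsequence, as stated in the text


def total_frames(days=DAYS, fps=FPS):
    """Number of frames recorded over the given number of days."""
    return days * SECONDS_PER_DAY * fps


def split_into_subsequences(num_frames, length=SUBSEQUENCE_LENGTH):
    """Return (start, end) frame ranges, end exclusive, that cover num_frames."""
    return [(s, min(s + length, num_frames)) for s in range(0, num_frames, length)]


frames = total_frames()
num_subsequences = math.ceil(frames / SUBSEQUENCE_LENGTH)
```

Under these assumptions a four-month recording contains 1,244,160,000 frames, i.e., about 124 thousand subsequences that can each be processed independently before their trajectories are connected.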

2.2.1. Matching Flies between Frames into Tracklets

We leverage the Hungarian algorithm [3] to match flies between consecutive frames and generate tracklets. The cost function used in the Hungarian algorithm is defined as:

$$c(F_i^t, F_j^{t-1}) = \bigl\| L(F_i^t) - L(F_j^{t-1}) \bigr\| + \bigl\| L(F_i^t) - \hat{L}(F_j^{t|t-1}) \bigr\| \tag{3}$$

where $F_i^t$ and $F_j^{t-1}$ represent the $i$th fly in frame $t$ and the $j$th fly in frame $t-1$, respectively. Function $L(x)$ retrieves the location of fly $x$. $\|\cdot\|$ is the L2 norm. $\hat{L}(F_j^{t|t-1})$ is the predicted location of the $j$th fly in frame $t$ based on its previous trajectory. We use a linear motion model for the prediction. After sequentially performing the matching on every pair of consecutive frames, we generate many tracklets within a subsequence (Fig.3(b)).

2.2.2. Associate Tracklets within Each Subsequence

Denote the $i$th tracklet by $T_i = \{l_i^{s_i}, l_i^{s_i+1}, \ldots, l_i^{e_i}\}$, where $l_i^{s_i}$ and $l_i^{e_i}$ represent the head and tail locations of the tracklet in frames $s_i$ and $e_i$, respectively. There are three possible associations happening to tracklets within a subsequence:

(1) Linking. Two tracklets generated by the same fly are broken in the matching step (Sec.2.2.1) due to missed detection. The cost to link the tail of $T_i$ with the head of $T_j$ is

$$c(T_i \rightarrow T_j) = \frac{\bigl\| l_i^{e_i} - l_j^{s_j} \bigr\|}{\delta_s} + \frac{|e_i - s_j|}{\delta_t} + \bigl| \theta_i^{e_i} - \theta_j^{s_j} \bigr|, \quad \text{when } \bigl\| l_i^{e_i} - l_j^{s_j} \bigr\| < \delta_s \text{ and } 0 < s_j - e_i < \delta_t \tag{4}$$

where $\delta_s$ and $\delta_t$ denote the gating regions in the spatial and temporal domains, respectively. $\theta_i^{e_i}$ and $\theta_j^{s_j}$ denote the trajectory orientation of the tail of $T_i$ and the head of $T_j$, respectively.

(2) Disappearing. The tail of a tracklet is not linked to any

other tracklets. The cost for a tracklet disappearance is

c(Ti ) c(Ti T j ) c(Ti T j )

i

j

(5)

j

(3) Appearing. The head of a tracklet is not linked to any

other tracklets. The cost for a tracklet appearance is

c( T j ) c(Ti T j ) c(Ti T j )

i

j

(6)

i
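The role of the gating regions in the linking cost can be illustrated with a small sketch. It is not the authors' code: it assumes that Eq.4 sums a spatial distance normalized by the spatial gate, a frame gap normalized by the temporal gate, and an orientation difference, and that a pair outside either gate is not a candidate (modeled here as an infinite cost). The dictionary layout and the names `gate_s` and `gate_t` are hypothetical.

```python
import math


def angle_difference(a, b):
    """Smallest absolute difference between two angles in radians, in [0, pi]."""
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def linking_cost(tail, head, gate_s, gate_t):
    """Cost of linking the tail of one tracklet to the head of another.

    tail and head are dicts with keys 'frame', 'pos' and 'angle' (hypothetical layout).
    Returns math.inf when the pair falls outside the spatial or temporal gate.
    """
    dist = math.dist(tail["pos"], head["pos"])   # spatial distance in pixels
    gap = head["frame"] - tail["frame"]          # temporal gap in frames
    if not (dist < gate_s and 0 < gap < gate_t):
        return math.inf
    return dist / gate_s + gap / gate_t + angle_difference(tail["angle"], head["angle"])
```

With a tail at frame 10 and a head at frame 40 that are 50 pixels apart, the pair is a valid candidate under a 120-frame temporal gate but is rejected under a 20-frame gate, so widening the temporal gate admits links that a narrow gate leaves out.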

The actual associations within a subsequence are determined by solving a Linear Assignment Problem (LAP):

$$\arg\min_{a}\; c^{T} a, \quad \text{s.t. } Q^{T} a = 1 \tag{7}$$

where $c$ is the vector of association costs, $a$ is a binary vector whose elements indicate which association is selected in the optimal solution, and $Q$ is a binary matrix, the nonzero elements of each row indicating which tracklets are involved in that association. The constraint ensures that each tracklet appears in only one association in the optimal solution.

We propose a fine-to-coarse algorithm (Fig.3(c)) to increase the gating regions gradually and iteratively associate tracklets within a subsequence:

Algorithm: Iterative Tracklet Association
Initialization: $k = 0$, $\delta_t^{(0)} = 120$;
Repeat
    Solve the LAP problem in Eq.7;
    $k = k + 1$;
    $\delta_t^{(k)} = q\,\delta_t^{(k-1)}$;
until no change happens to the association.

where $\delta_t$ is the temporal gating region and $q$ controls the increasing rate of the gating region (e.g., $q = 2$ in this paper). The spatial gating region $\delta_s$ is set as a constant in this paper (240 pixels, half the height of the chamber). The iterative algorithm solves the most confident associations first and then gradually solves the less confident ones, which helps to handle the fast motion. As a comparison, Fig.3(e) shows an example in which the tracklets are associated incorrectly when the gating region is large and the LAP problem is solved only once.

2.2.3. Generate Long Trajectories

Trajectory association between consecutive subsequences is solved by formulating a LAP similar to the tracklet association.

3. EXPERIMENTS

3.1. Datasets

Two videos captured by a GoPro Hero2 camera with a resolution of 480x848 and a frame rate of 120fps were used as our experimental dataset. Dataset 1 has 21000 frames of 52 flies and dataset 2 has 220000 frames of 82 flies. An air-pressure change is applied inside the chamber in Video#2 at around 25 min, in order to examine how the flies adjust their activity. The result shows that our proposed system produces satisfactory tracking results by revealing the change of activity pattern in the statistical data. In this section, we first introduce the evaluation metrics used to validate the reliability of our tracking system. Second, we demonstrate the performance of our approach based on these metrics. Finally, we present the biological statistics that test our hypothesis, further demonstrating the stability of our approach.

3.2. Performance Evaluation Metrics

Three well-known metrics are adopted to evaluate the

performance of our approach:

(1) Tracker Purity (TP, [8]), the ratio of frames that a

tracklet correctly follows the ground-truth to the total number

of frames that the tracklet has;

(2) Target Effectiveness (TE, [8]), the ratio of frames that

the object is correctly tracked to the total number of frames in

which the object exists;

(3) Multiple Object Tracking Accuracy (MOTA, [2]) that

considers the number of missed detections, false positives and

ID switches.
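The three metrics can be sketched as follows. This is a simplified illustration under stated assumptions, not the exact protocol of [2] or [8]: a tracker (or target) is scored against the label it matches most often, and MOTA is computed with equal weights for the three error types. The function names and the per-frame label lists are hypothetical.

```python
from collections import Counter


def purity(labels):
    """Share of frames that carry the most frequent non-None label.

    For Tracker Purity, labels[k] is the ground-truth ID followed by the tracker in
    its k-th frame. For Target Effectiveness, labels[k] is the tracker ID covering the
    target in the target's k-th frame. None marks a frame without a match.
    """
    matched = [x for x in labels if x is not None]
    if not matched:
        return 0.0
    return Counter(matched).most_common(1)[0][1] / len(labels)


def mota(misses, false_positives, id_switches, num_ground_truth):
    """1 - (misses + false positives + ID switches) / ground-truth objects over all frames."""
    return 1.0 - (misses + false_positives + id_switches) / num_ground_truth
```

For example, a tracker that follows target 3 in three of its five frames has a purity of 0.6, and a sequence with 2 misses, 1 false positive and 1 ID switch over 100 ground-truth objects has a MOTA of 0.96.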

3.3. Quantitative Evaluation

Fig.4(a) and (b) show the TP of all computer-generated trajectories of dataset 1 and 2, respectively. We sort the TP values and show some examples of the trajectory evaluation in Fig.4(c)-(e), where the worst TP and OP are analyzed along with the best TP and OP. By comparing the 3D plots of the trajectories with their 2D footprints on the background image, we can tell that in most cases our approach tracks fast motion well, except for a few false positive cases. Some of them occur in areas where the chamber background has a color similar to that of the flies, so flies are camouflaged around the joint edges of two glass surfaces (e.g., the false positives at the top of the chamber in Fig.4(c)). Such background features are excluded most of the time, but when flies move across those areas, the similar background features are treated as motion features in the lower level of the cascaded linking system and are thus forwarded to the next level. This may be solved by modifying the chamber material. Fast motion is detected and followed by the trackers with confidence, as shown by the yellow ellipses in Fig.4(c)-(e).

Figure.5 demonstrates the TE evaluation of all ground truth targets (flies) in Video #1 and #2, as shown in Fig.5(a)(b). In Fig.5(c)-(e) we plot the ground truth trajectories of target #1, #10 and #50 as black lines, in both 3D and 2D space. The colored shadows in red, green and blue around the ground truth trajectories represent the segments that are successfully tracked. From these plots, we found that most missed detections happen in areas where the chamber structure creates low foreground-background contrast, such as the edges and corners. When the flies move along these areas, the similar background color prevents them from being detected. Fast motion tracking also proves satisfactory under the TE evaluation and backward checking, as shown by the yellow ellipses in Fig.5(c)-(e).

| | #Frames | #Flies | TP | TE | MOTA |
|---|---|---|---|---|---|
| Video#1 | 20000 | 52 | 0.9831 | 0.9310 | 0.9728 |
| Video#2 | 220000 | 82 | 0.9657 | 0.9275 | 0.9841 |

Table.1. Quantitative Evaluation of our Approach

Figure.4. Evaluation of TP Metrics. (a) Tracker Purity evaluation for Video#1; (b) Tracker Purity evaluation for Video#2; (c)-(e) Tracker #1, #10 and #50 with their 2D trajectories and 3D plots, demonstrating the segments of false positives and fast motion.

Figure.5. Evaluation of TE Metrics. (a) Target Effectiveness evaluation for Video#1; (b) Target Effectiveness evaluation for Video#2; (c)-(e) Target #1, #10 and #50 with their 2D trajectories and 3D plots, demonstrating the segments of fast motion and missed detection.

From Fig.4-5 we observe that in general our approach tracks the fast-moving flies very well. We summarize the quantitative evaluation of our approach on the two datasets in Table 1. We achieve a Multiple Object Tracking Accuracy of 0.973 and 0.984 for dataset 1 and 2, respectively (the perfect MOTA is 1), showing the high performance of our approach. The overall performance on Video #1 is better than on Video #2 in terms of TP and TE. One of the main reasons is that more flies participate in the experiment in Video #2, which increases the occurrence of high-speed movements and the chances of camouflage. Another reason is that interactions between flies are more frequent in Video #2 than in Video #1, which greatly increases the occurrence of body overlapping (occlusion) and high-speed chasing (multiple highly blurred candidates), which are the most challenging cases for our system.

3.4. Biological Statistics

As stated at the beginning of this section, we manually lowered the air pressure at around 25 min in Video#2, as shown in Fig.6(a). We hypothesize that the flies become more restless and are thus less likely to stay stationary. We apply our tracking approach to this video to test whether the tracking result reveals this behavior. To save limited computational resources, we only ran our experiment on the period from the 140000th frame (about 19 min 26 sec) to the end.
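A minimal sketch of how such an activity check could be derived from tracked trajectories is given below. The trajectory layout, the function names and the displacement threshold are assumptions made for illustration and are not values from this paper; only the 120fps frame rate comes from the text.

```python
import math

FPS = 120  # frame rate stated in the text


def frame_to_time(frame, fps=FPS):
    """Convert a frame index to (minutes, seconds)."""
    seconds = frame / fps
    return int(seconds // 60), seconds % 60


def count_moving_flies(trajectories, start, end, min_displacement=2.0):
    """Count flies whose path length within frames [start, end) exceeds min_displacement.

    trajectories maps a fly ID to {frame: (x, y)} (hypothetical layout), and
    min_displacement is an illustrative threshold in pixels.
    """
    moving = 0
    for positions in trajectories.values():
        frames = sorted(f for f in positions if start <= f < end)
        path = sum(math.dist(positions[a], positions[b]) for a, b in zip(frames, frames[1:]))
        if path > min_displacement:
            moving += 1
    return moving
```

Here `frame_to_time(140000)` gives 19 minutes and about 26.7 seconds, in line with the starting point quoted above, and counting moving flies over successive windows yields an activity curve over time.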

Figure.6. (a) The diagram of air pressure change; (b) the number of walking flies vs. time; (c) the distance covered by walking flies vs. time.

Fig.6(b) and (c) illustrate the activity patterns of walking flies. An abrupt rise after 25 min is noticeable in Fig.6(b), showing that a growing number of flies begin to walk from a stationary state when they sense the air pressure change. This matches our prediction. Fig.6(c) shows a growing trend after 25 min, with a peak at around 26 min 30 sec. This observation also matches our hypothesis. In future research, we will conduct experiments with more flies in the dataset to test different patterns of biological behaviors and their related motion tracking statistics.

4. CONCLUSION AND FUTURE WORKS

We propose a novel adaptive LBP feature to detect tiny flies with low image contrast and develop a cascaded data association approach with fine-to-coarse gating region control to track fast-moving flies. The high performance of our approach shows its potential to enable automated analysis of fly behavior. In the near future, we plan to acquire high-resolution high-speed cameras to handle extremely fast motion and to redesign the chamber to reduce the effects of camouflage. Meanwhile, adaptive local frame rate selection based on the flies' activity level is an intriguing topic for future research, which could improve efficiency and thus lower the time cost of large data analysis.

5. REFERENCES

[1] R. Bise et al., “Reliable Cell Tracking By Global Data Association,” IEEE Intl. Symposium on Biomedical Imaging, 2011.

[2] R. Kasturi et al., “Framework for Performance Evaluation of Face, Text and Vehicle Detection and Tracking in Video: Data, Metrics, and Protocol,” IEEE Trans. on PAMI, 31(2), pp. 310-336, 2007.

[3] H. W. Kuhn, “Variants of the Hungarian method for assignment problems,” Naval Research Logistics Quarterly, 3:253-258, 1956.

[4] J. H. Marden et al., “Aerial Performance of Drosophila Melanogaster from Population Selected for Upwind Flight Ability,” J. of Experimental Biology, 200:2747-2755, 1997.

[5] T. Ojala et al., “Multi-resolution gray-scale and rotation invariant texture classification with Local Binary Pattern,” IEEE Trans. on PAMI, 24(7), pp. 971-987, 2002.

[6] N. Otsu, “A Threshold Selection Method from Gray Level Histograms,” IEEE Trans. on Systems, Man, and Cybernetics, 9(1):62-66, 1979.

[7] D. Padfield et al., “Coupled Minimum-Cost Flow Cell Tracking,” International Conference on Information Processing in Medical Imaging (IPMI), 2009.

[8] K. Smith et al., “Evaluating Multi-Object Tracking,” in Proc. of IEEE Conf. on CVPR Workshop on Empirical Evaluation Methods in Computer Vision, 2005.

[9] A. Yilmaz et al., “Object Tracking: a Survey,” ACM Computing Surveys, 38(4), 45 pages, 2006.

6. ACKNOWLEDGEMENT

Special thanks to the Intelligent System Center for supporting me and the research work presented in this paper.