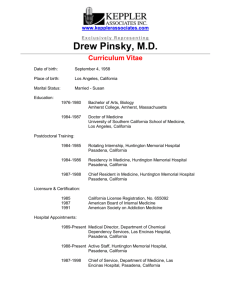



Experimental Error in Physics,

A Few Brief Remarks…

[What Every Physicist SHOULD Know]

L. Pinsky

July 2004

© 2004 L. Pinsky

1

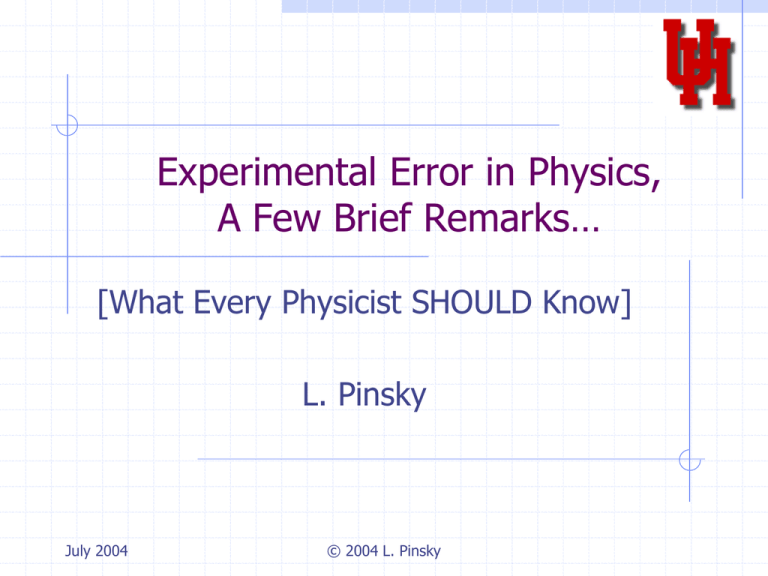

Outline of This Talk…

Overview

Systematic and Statistical Errors

Kinds of Statistics

The Interval Distribution

Drawing Conclusions


What you should take away

In Science, it is NOT the value you

measure, BUT how well you know that

value that really counts…

Appreciation of the accuracy of the

information is what distinguishes REAL

Science from the rest of human speculation

about nature…

…And, remember, NOT all measurements are statistical, BUT all observations have some sort of associated confidence level…


Almighty Chance…

Repeatability is the cornerstone of Science!

…BUT, No observation or measurement is truly

repeatable!

The challenge is to understand the differences

between successive measurements…

Some observations differ because they are

genuinely unique!

(e.g. Supernovae, Individual Human Behavior, etc.)

Some are different because of RANDOM CHANCE

Most real measurements are a combination of

BOTH…

(Even the most careful preparation cannot guarantee

identical initial conditions…)


The Experimentalist’s Goal

The Experimental Scientist seeks to observe nature and to deduce, from those observations, generalizations about the Universe.

The generalizations are typically compared with representations of nature (theoretical models) to gain insight into how well those representations mimic nature’s behavior…


Tools of the Trade

The techniques associated with STATISTICS are

employed to focus the analysis in cases where

RANDOM CHANCE is present in the measurement.

(e.g. Measuring the individual energy levels in an atom)

Statistical analysis is generally combined with a

more global attempt to place the significance of

the observation within the broader context of

similar or related phenomena.

(e.g. Fitting the measured energy levels into a

Quantum Mechanical Theory of atomic structure…)

What we typically want to know is whether, and to what extent, the measurements support or contradict the Theory…


Blunders

These can be either Explicit or Implicit

Explicit—Making an overt mistake (i.e. intending to do the

right thing, but accidentally doing something else, and not

realizing it…)

(e.g. Using a mislabeled reagent bottle…)

Implicit—Thinking some principle is true, which is not, and

proceeding on that assumption.

(e.g. Believing that no pathogens can survive 100 °C)

Blunders can only be guarded against by vigilance, and are

NOT reflected in error bars when the data are presented…

Confidence against Explicit blunders can be enhanced by

independent repetition.

Protection against Implicit blunders can be enhanced by

carefully considering (and disclosing) the details regarding

ALL procedures and assumptions…


Systematic Error

Generally, this includes all of the KNOWN uncertainties that are

related to the nature of the observations being made.

Instrumental Limitations (e.g. resolution or calibration)

Human Limitations (e.g. gauge reading ability)

Knowledge limitations (e.g. the accuracy with which needed

fundamental constants are known)

Usually, Systematic Error is quoted independently from Statistical Error. However, as with any combination of errors, effects that are independent of one another can be added in “Quadrature”:

(i.e. $E_{\text{total}} = \sqrt{E_1^2 + E_2^2}$)

Increased statistics can NEVER reduce Systematic Error!

Even Non-Statistical measurements are subject to Blunders and

Systematic Error…
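
A minimal sketch of adding independent errors in quadrature, assuming the contributions are independent and expressed in the same units. The numbers are hypothetical, and the 1/√N shrinkage of the statistical part is an illustrative assumption; the point is that the total never drops below the systematic error, however many events are collected.

```python
import math

def add_in_quadrature(*errors: float) -> float:
    """Combine independent error contributions: sqrt(E1**2 + E2**2 + ...)."""
    return math.sqrt(sum(e * e for e in errors))

# Hypothetical example: statistical error 3.0, systematic error 4.0 (same units)
stat_err, sys_err = 3.0, 4.0
total = add_in_quadrature(stat_err, sys_err)
print(f"total = {total}")

# Assumed scaling: the statistical error falls like 1/sqrt(N); the systematic one does not.
for n_factor in (1, 4, 100):
    stat = stat_err / math.sqrt(n_factor)
    print(n_factor, round(add_in_quadrature(stat, sys_err), 3))
```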


Quantitative v. Categorical Statistics

Quantitative—When the measured variable takes

NUMERICAL values, so that differences and

averages between the values make sense…

Continuous—The variable is a continuous real

number… (e.g. kinematic elastic scattering angles)

Discrete—The variable can take on only discrete

“counting” values… (e.g. demand as a function of

price in Economics)

Categorical—When the variable can only have an

exclusive value (e.g. your country of residence),

and arithmetic operations have no meaning with

respect to the categories…


Getting the Right Parent Distribution

Generally, the issue is to find the proper

PARENT DISTRIBUTION—(i.e. the

probability distribution that is actually

responsible for the data…)

In most cases the PARENT DISTRIBUTION

is complex and unknown…

…BUT, in most cases it may be reasonably

approximated by one of the well known

distribution functions…


Deviation, Variance and Standard Deviation

The Mean Square Deviation refers to the actual data:

$s^2 = \sum_i (x_i - \bar{x})^2/(N-1)$ (where $\bar{x}$ is the average of the N measurements), and is an experimental statement of fact!

Standard Deviation ($\sigma$) and the Variance ($\sigma^2$) refer to the PARENT DISTRIBUTION:

$\sigma^2 = \lim_{N\to\infty} \sum_i (x_i - \mu)^2/N$, and is a mathematically useful concept!

One can calculate “Confidence Limits” (the fraction of the time the truth lies within a given interval) from Standard Deviations, not Deviations!

This is because you can integrate the PARENT DISTRIBUTION to determine the fraction inside $\pm n\sigma$!

The Mean Square Deviation is sometimes used as an estimate of

the Variance when one assumes a particular PARENT

DISTRIBUTION!

…BUT, the resulting Confidence Limit is only as good as the

assumption about the PARENT DISTRIBUTION!

The wrong DISTRIBUTION gives you a false Confidence Limit!
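
A small sketch of the distinction above, using hypothetical measurements: the Mean Square Deviation $s^2$ is computed directly from the data, but turning $s$ into a confidence fraction requires ASSUMING a parent distribution. Here a Gaussian parent is assumed, and the fraction inside $\pm n\sigma$ comes from integrating it (via `math.erf`).

```python
import math
import statistics

# Hypothetical repeated measurements of one quantity (illustrative numbers only)
data = [9.8, 10.1, 10.0, 9.7, 10.4, 10.0, 9.9, 10.1]

n = len(data)
xbar = sum(data) / n
# Mean Square Deviation with the (N-1) denominator: a statement about the data
s2 = sum((x - xbar) ** 2 for x in data) / (n - 1)
assert math.isclose(s2, statistics.variance(data))  # the stdlib also divides by N-1

def gaussian_fraction_within(n_sigma: float) -> float:
    """Fraction of a Normal parent distribution inside mu +/- n_sigma*sigma."""
    return math.erf(n_sigma / math.sqrt(2))

s = math.sqrt(s2)
print(f"mean = {xbar:.3f}, s = {s:.3f}")
# Only meaningful IF the parent distribution really is Gaussian:
print(f"if Gaussian, ~{gaussian_fraction_within(1):.1%} within +/- s")
```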


Categorical Distributions

The CATEGORIES must be EXCLUSIVE!

(i.e. Being a member of one CATEGORY precludes being a

member of any other within the distribution…)

Sometimes there are “Explanatory” and “Response” variables, where the “Response” variable is Quantitative, and the “Explanatory” Variable is Categorical.

(e.g. Annual Income is the [Quantitative] “Response” variable and Educational Degree [i.e. high school, B.S., M.S., Ph.D.] is the [Categorical] “Explanatory” variable).

The “Response” variable is called the Dependent variable, with

the “Explanatory” variable being the Independent variable…

We can apply statistical methods to the “Response” variable within each individual category. Note that in some Categorical Distributions the “Explanatory” variable can be Quantitative.

(e.g. In the example above, the Education Degree could be

replaced by Number of Years of Education).
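
As a sketch of the income/degree example, the Quantitative “Response” can be grouped by the Categorical “Explanatory” variable, with ordinary statistics computed within each category only. All records below are made up for illustration.

```python
import statistics
from collections import defaultdict

# Hypothetical (degree, annual income) records; the numbers are made up.
records = [
    ("B.S.", 52_000), ("B.S.", 61_000), ("B.S.", 57_000),
    ("M.S.", 66_000), ("M.S.", 71_000),
    ("Ph.D.", 80_000), ("Ph.D.", 74_000), ("Ph.D.", 86_000),
]

# Group the Quantitative "Response" by the Categorical "Explanatory" variable.
by_category = defaultdict(list)
for degree, income in records:
    by_category[degree].append(income)

# Means and standard deviations make sense within a category, not across labels.
summary = {
    degree: (statistics.mean(vals), statistics.stdev(vals) if len(vals) > 1 else float("nan"))
    for degree, vals in by_category.items()
}
for degree, (mean, sd) in summary.items():
    print(f"{degree:6s} mean={mean:9.1f}  s={sd:8.1f}")
```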


The Binomial Distribution

Where the SAMPLE SIZE is FIXED, and one has a

“Bernoulli” (Yes or No) Variable, the PARENT

DISTRIBUTION is a Binomial…

N independent trials, each with a probability of “success” of p. (e.g. The number of fatal accidents per 100 highway accidents)

$P(y) = \frac{N!}{y!\,(N-y)!}\, p^{y}(1-p)^{N-y}$

With: $y = 0, 1, 2, \dots, N$

$\mu(y) = Np$, and $\sigma(y) = \sqrt{Np(1-p)}$

The Binomial Variance, $\sigma(y)^2 = \mu(y)\,(1-p)$, is always smaller than $\mu(y)$ (for $p > 0$).

It is impractical to evaluate P(y) exactly for large N…
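
A minimal numerical check of the formulas above for a modest, hypothetical N, using Python's exact integer `math.comb` for the binomial coefficient. It verifies normalization, $\mu = Np$, and $\sigma^2 = Np(1-p) < \mu$.

```python
import math

def binomial_pmf(y: int, n: int, p: float) -> float:
    """P(y) = N! p^y (1-p)^(N-y) / [y! (N-y)!] for y = 0..N."""
    return math.comb(n, y) * p**y * (1 - p) ** (n - y)

# Hypothetical example: N = 100 accidents, p = 0.05 chance that each one is fatal
n, p = 100, 0.05
pmf = [binomial_pmf(y, n, p) for y in range(n + 1)]

mean = sum(y * P for y, P in enumerate(pmf))
var = sum((y - mean) ** 2 * P for y, P in enumerate(pmf))

print(f"sum of P(y) = {sum(pmf):.6f}")  # normalization check
print(f"mean = {mean:.4f}  vs  N p = {n * p}")
print(f"var  = {var:.4f}  vs  N p (1-p) = {n * p * (1 - p):.4f}")
```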


The Poisson Distribution

Where many identical measurements are made, during each of which some variable number, y, of sought-after events occurs…

(e.g. The number of radioactive decays/sec)

$P(y) = \frac{e^{-\mu}\mu^{y}}{y!}$  ($y = 0, 1, 2, \dots$)

$\mu$ = Distribution mean, and $\sigma = \sqrt{\mu}$

$\sigma$ increases with the value of $\mu$

When the Experimental Variance exceeds $\sigma^2$ (i.e. exceeds $\mu$), it is called “Overdispersion” and is usually due to differences in the conditions from one measurement to the next…

The distribution of counts within an INDIVIDUAL category

over multiple experiments is Poisson!

When N is Large and p is small (such that $\mu = Np \ll N$) a

Binomial Distribution tends towards a Poisson Distribution.
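
A sketch of the Binomial-to-Poisson limit, with N and p chosen only for illustration: holding $\mu = Np$ fixed at 3 with N = 1000, the two probability distributions agree closely point by point. The Poisson terms are computed in log space (via `math.lgamma`) to avoid overflowing $y!$.

```python
import math

def poisson_pmf(y: int, mu: float) -> float:
    """P(y) = exp(-mu) mu^y / y!, computed in log space to avoid overflow."""
    return math.exp(-mu + y * math.log(mu) - math.lgamma(y + 1))

def binomial_pmf(y: int, n: int, p: float) -> float:
    return math.comb(n, y) * p**y * (1 - p) ** (n - y)

# Large N, small p, with mu = N p of order a few (values chosen for illustration)
n, p = 1000, 0.003
mu = n * p
max_diff = max(abs(binomial_pmf(y, n, p) - poisson_pmf(y, mu)) for y in range(20))
print(f"mu = {mu}, largest |Binomial - Poisson| for y < 20: {max_diff:.2e}")
```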


The Pervasive Gaussian:

The NORMAL Distribution

$P(y) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left[(y-\mu)/\sigma\right]^{2}}$

Characterized solely by $\mu$ and $\sigma$, and the average is the best estimate of the mean.

In the limit of large N, with a non-vanishing Np (i.e. $Np \gg 1$), the Normal Distribution approximates a Binomial Distribution…

Also, in the limit where $Np \gg 1$ a Poisson Distribution tends towards a Normal Distribution.

…Because P(y) is symmetric, s2 = 1/(N-1)

$dP(y)/dy = 0$ at $y = \mu$, and $d^2P(y)/dy^2 = 0$ at $y = \mu \pm \sigma$.

$\pm\sigma \approx 68\%$, $\pm 2\sigma \approx 95\%$, and $\pm 3\sigma \approx 99.7\%$. FWHM $= 2.354\sigma$.

Although it is by far the most common and likely PARENT

DISTRIBUTION encountered in Experimental Science, it is

NOT the only one!
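
The coverage fractions and the FWHM factor quoted above follow from integrating the Normal P(y). A short check: the fraction within $\pm n\sigma$ is $\mathrm{erf}(n/\sqrt{2})$, and solving $P(\mu \pm h) = P(\mu)/2$ gives $h = \sigma\sqrt{2\ln 2}$.

```python
import math

def normal_pdf(y: float, mu: float, sigma: float) -> float:
    """P(y) = exp(-0.5*((y-mu)/sigma)**2) / (sigma*sqrt(2*pi))."""
    return math.exp(-0.5 * ((y - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def fraction_within(n_sigma: float) -> float:
    """Integral of the Normal pdf over mu +/- n_sigma*sigma."""
    return math.erf(n_sigma / math.sqrt(2))

for k in (1, 2, 3):
    print(f"+/-{k} sigma: {fraction_within(k):.4f}")

# FWHM: P(mu +/- h) = P(mu)/2  =>  h = sigma*sqrt(2 ln 2), so FWHM = 2h
fwhm_over_sigma = 2 * math.sqrt(2 * math.log(2))
print(f"FWHM = {fwhm_over_sigma:.4f} sigma")
```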


The Central Limit Theorem

The distribution of any quantity that is the sum of many SMALL, independent effects, each due to some RANDOM DISTRIBUTION (with a finite variance), will tend towards a Normal Distribution in the limit of large statistics, REGARDLESS of the nature of the individual random distributions!
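
A quick simulation of the theorem, under illustrative choices: each trial sums 12 independent Uniform(0,1) effects, none of which is Gaussian. After subtracting the mean of 6, the sum has $\sigma = 1$, and roughly 68% of the trials land within $\pm 1\sigma$, as a Normal Distribution predicts.

```python
import random
import statistics

random.seed(1)  # fixed seed so the sketch is reproducible

# Each trial sums 12 independent Uniform(0,1) effects.
# The sum has mean 12*0.5 = 6 and variance 12*(1/12) = 1.
n_effects, n_trials = 12, 20_000
sums = [sum(random.random() for _ in range(n_effects)) - 6.0 for _ in range(n_trials)]

inside_1sigma = sum(abs(x) < 1.0 for x in sums) / n_trials
print(f"mean = {statistics.fmean(sums):.3f}, sd = {statistics.stdev(sums):.3f}")
print(f"fraction within +/-1 sigma = {inside_1sigma:.3f} (Normal: 0.683)")
```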


Other Distributions to Know

Lorentzian (Cauchy) Distribution—Used to

describe Resonant behavior:

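$P(y) = \frac{\Gamma/2}{\pi\left[(y-\mu)^2 + (\Gamma/2)^2\right]}$, $\Gamma$ = FWHM

Here, $\pi$ means 3.14159…, and $\sigma$ has no meaning (the integral defining the variance diverges)!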

…Instead, the FWHM is the relevant parameter!
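
A sketch showing why the FWHM, and not $\sigma$, characterizes the Lorentzian. The peak halves exactly at $\mu \pm \Gamma/2$, and half the probability lies inside that window. The tails, however, fall only as $1/y^2$, so the probability outside $\pm L$ shrinks merely like $2\Gamma/(2\pi L)$ and the variance integral diverges. The closed-form CDF uses `math.atan`. The parameter values are illustrative.

```python
import math

def lorentzian_pdf(y: float, mu: float, gamma: float) -> float:
    """P(y) = (G/2) / (pi * [(y-mu)^2 + (G/2)^2]), with G = gamma = FWHM."""
    half = gamma / 2
    return half / (math.pi * ((y - mu) ** 2 + half**2))

def lorentzian_cdf(y: float, mu: float, gamma: float) -> float:
    """Closed-form integral of the pdf from -infinity to y."""
    return 0.5 + math.atan((y - mu) / (gamma / 2)) / math.pi

mu, gamma = 0.0, 2.0
# The peak height halves at mu +/- G/2, so G is the FWHM ...
print(lorentzian_pdf(mu + gamma / 2, mu, gamma) / lorentzian_pdf(mu, mu, gamma))
# ... and half of the probability lies inside the FWHM.
print(lorentzian_cdf(mu + gamma / 2, mu, gamma) - lorentzian_cdf(mu - gamma / 2, mu, gamma))

# Heavy tails: the probability outside +/-L shrinks only like 1/L.
for L in (10.0, 100.0, 1000.0):
    print(L, 1 - (lorentzian_cdf(L, mu, gamma) - lorentzian_cdf(-L, mu, gamma)))
```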

Landau Distribution—in Particle Physics…

Boltzmann Distribution—in Thermo…

Bose-Einstein Distribution—in QM…

Fermi-Dirac Distribution—in QM…

…and others…


Maximum Likelihood

The “Likelihood” is simply the product of the probabilities for each individual (independent) outcome in a measurement, i.e. an estimate of the total probability of the observed measurement being made.

If one has a candidate distribution that is a

function of some parameter, then the value of

that parameter that maximizes the likelihood of

the observation is the best estimate of that

parameter’s value.

The catch is, one has to know the correct

candidate distribution for this to have any

meaning…
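
A minimal sketch of Maximum Likelihood. It ASSUMES the candidate distribution is Poisson with an unknown mean $\mu$ and uses made-up counts. It maximizes $\ln L$ (the log of the product of probabilities) by a brute-force scan, which is adequate for illustration, though a real analysis would use a proper optimizer. For a Poisson, the maximum falls at the sample average.

```python
import math

def poisson_log_likelihood(mu: float, counts: list) -> float:
    """ln L = sum over independent counts of ln[exp(-mu) mu^y / y!]."""
    return sum(y * math.log(mu) - mu - math.lgamma(y + 1) for y in counts)

# Hypothetical counts from repeated, identical counting intervals (made-up data)
counts = [3, 5, 4, 2, 6, 4]

# Brute-force scan over candidate values mu = 0.01 ... 10.00
grid = [i / 100 for i in range(1, 1001)]
mu_hat = max(grid, key=lambda mu: poisson_log_likelihood(mu, counts))

print(f"maximum-likelihood mu = {mu_hat:.2f}")
print(f"sample average        = {sum(counts) / len(counts):.2f}")
```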


Drawing Conclusions

Rejecting Hypotheses:

Relatively Easy if the form of the PARENT Distribution is known: just show a low probability of fit. The $\chi^2$ technique is perhaps the best known method.

A more general technique is the F-Test, which

allows one to separate the deviation of the

data from the Estimated Distribution AND the

discrepancy between the Estimated

Distribution and the PARENT DISTRIBUTION.
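
A sketch of the $\chi^2$ statistic for hypothetical histogram counts against a candidate parent distribution. Poisson errors $\sigma_i = \sqrt{\text{expected}_i}$ are ASSUMED. Converting $\chi^2$ into a “probability of fit” requires the $\chi^2$ distribution's cumulative function (available in statistics libraries), which is left out here to keep the sketch dependency-free.

```python
import math

def chi_square(observed, expected, sigmas):
    """chi^2 = sum_i [(observed_i - expected_i) / sigma_i]^2."""
    return sum(((o - e) / s) ** 2 for o, e, s in zip(observed, expected, sigmas))

# Hypothetical counts compared with a candidate that predicts 10 counts per bin
observed = [12, 9, 11, 8]
expected = [10.0, 10.0, 10.0, 10.0]
sigmas = [math.sqrt(e) for e in expected]  # assumed Poisson errors

chi2 = chi_square(observed, expected, sigmas)
dof = len(observed)  # no parameters were fitted to these data in this example
print(f"chi^2 = {chi2:.2f} for {dof} degrees of freedom (reduced: {chi2 / dof:.2f})")
```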


Comparing Alternatives

This is much tougher…

Where $\chi^2$ tests favor one hypothesis over another, but not decisively, one must take great care. It is very easy to be fooled into rejecting the correct alternative…

Generally, a test is based on some statistic (e.g. $\chi^2$) that estimates some parameter in a hypothesis. Values of the estimate far from the value specified by the hypothesis give evidence against it…

One can ask: assuming the hypothesis is correct, what is the probability of getting a set of measurements even farther from the hypothesis than the one actually obtained? The lower that probability, the less the confidence in the hypothesis being correct…
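
A minimal sketch of that probability for a simple counting case, with hypothetical numbers: the hypothesis predicts a Poisson mean of 3 counts, and 8 are observed. “Farther” is taken here to mean “at least as many counts” (a one-sided tail), which is an assumption of this example.

```python
import math

def poisson_pmf(y: int, mu: float) -> float:
    return math.exp(-mu + y * math.log(mu) - math.lgamma(y + 1))

def p_value_at_least(n_obs: int, mu0: float) -> float:
    """P(Y >= n_obs | hypothesis: Y ~ Poisson(mu0)), a one-sided tail probability."""
    return 1.0 - sum(poisson_pmf(y, mu0) for y in range(n_obs))

# Hypothetical numbers: the hypothesis predicts a mean of 3 counts; we observed 8.
p = p_value_at_least(8, 3.0)
print(f"P(8 or more counts | mu = 3) = {p:.4f}")
```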


Fitting Data

Fitting to WHAT???

Phenomenological (Generic)

Linear

LogLinear

Polynomial

Hypothesis Driven

Functional Form From Hypothesis

Least Squares Paradigm…

Minimizing the Mean Square Error gives the Best Estimate of the Fit parameters (for Gaussian errors, this coincides with Maximum Likelihood)…
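
A sketch of the Least Squares paradigm for the simplest phenomenological form, a straight line $y = a + bx$. The data are hypothetical, and the fit is unweighted (equal errors are assumed). The closed-form normal equations give the $a$ and $b$ that minimize the mean square error.

```python
def fit_line(xs, ys):
    """Unweighted least-squares fit of y = a + b x; returns (a, b).

    Minimizes sum_i (y_i - a - b x_i)^2 via the closed-form normal equations.
    """
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")
    xbar = sum(xs) / n
    ybar = sum(ys) / n
    sxx = sum((x - xbar) ** 2 for x in xs)
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = ybar - b * xbar
    return a, b

def mean_square_error(xs, ys, a, b):
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical data scattered about y = 1 + 2x
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.1]
a, b = fit_line(xs, ys)
mse = mean_square_error(xs, ys, a, b)
print(f"a = {a:.3f}, b = {b:.3f}, mean square error = {mse:.4f}")
```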


Errors in Comparing Hypotheses:

Choice of Tests

Type I Error—Rejecting a TRUE Hypothesis

The Significance Level of any fixed-level test is the probability of a Type I Error. This is usually the more serious error, so choose a strict test (a small Significance Level).

Type II Error—Accepting a FALSE Hypothesis

The Power of a fixed-level test against a particular alternative is 1 minus the probability of a Type II Error.

At the chosen Significance Level, choose a test that makes the probability of a Type II Error as small as possible (i.e. maximizes the Power).
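
A sketch of both error types for a hypothetical counting test. H0 says the Poisson mean is 3, the alternative says 8, and H0 is rejected if the count reaches a critical value k. Choosing the smallest k whose Type I probability stays at or below 5% fixes the Significance Level. The Power against the alternative is then the probability of rejecting when the alternative is true.

```python
import math

def poisson_tail(k: int, mu: float) -> float:
    """P(Y >= k) for Y ~ Poisson(mu)."""
    return 1.0 - sum(math.exp(-mu + y * math.log(mu) - math.lgamma(y + 1)) for y in range(k))

# Hypothetical counting test: H0 says mu0 = 3; reject H0 if the count is >= k.
mu0, mu1, alpha_max = 3.0, 8.0, 0.05

# Smallest critical count whose Type I error probability does not exceed alpha_max
k = next(c for c in range(100) if poisson_tail(c, mu0) <= alpha_max)
alpha = poisson_tail(k, mu0)  # actual Significance Level (Type I error probability)
power = poisson_tail(k, mu1)  # 1 - P(Type II error) against the alternative mu1

print(f"reject if count >= {k}: alpha = {alpha:.4f}, power vs mu = {mu1}: {power:.4f}")
```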


The INTERVAL DISTRIBUTION

This is just an aside that needs mentioning:

For RANDOMLY OCCURRING EVENTS, the Distribution of TIME INTERVALS between successive events is given by:

$I(t) = \frac{1}{\tau} e^{-t/\tau}$

The mean value is $\tau$.

$I(0) = 1/\tau$ is the largest value of $I(t)$, or in words: the most likely value is 0.

Thus, there are far more short intervals than long

ones! BEWARE: As such, truly RANDOM EVENTS

TO THE NAÏVE EYE APPEAR TO “CLUSTER”!!!
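
A quick simulation of the interval distribution, with $\tau$ set to 1 in arbitrary units. Integrating $I(t)$ from 0 to T gives $1 - e^{-T/\tau}$, so about 63% of all intervals are shorter than the mean, and almost 10% are shorter than a tenth of it. That excess of short gaps is what the eye reads as “clustering.”

```python
import math
import random

random.seed(2)  # fixed seed for reproducibility

tau = 1.0  # mean interval (arbitrary units)
# For random events the intervals are exponentially distributed with mean tau.
intervals = [random.expovariate(1.0 / tau) for _ in range(100_000)]

mean = sum(intervals) / len(intervals)
short = sum(t < tau for t in intervals) / len(intervals)
tiny = sum(t < 0.1 * tau for t in intervals) / len(intervals)

print(f"mean interval = {mean:.3f} (expected tau = {tau})")
print(f"fraction shorter than tau = {short:.3f} (expected {1 - math.exp(-1):.3f})")
print(f"fraction shorter than tau/10 = {tiny:.3f} (expected {1 - math.exp(-0.1):.3f})")
```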


Time Series Analysis

A sequence of Data taken at fixed time intervals is called a Time Series.

(e.g. The closing Dow Jones Average each day)

If nothing changes in the underlying PARENT

DISTRIBUTION, then Poisson Statistics apply…

BUT, in the real world one normally sees

changes from period to period.

Without specific hints as to causes, one can look for TRENDS and CYCLES or “SEASONS.”

Usually, the problem is filtering these out from large background fluctuations…
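
One common way to separate a TREND from a repeating CYCLE is a centered moving average whose window spans exactly one cycle. This technique is not named in the slide and is used here only as an illustration. In the hypothetical series below, a 3-day cycle that sums to zero rides on a linear trend, and a 3-point window removes the cycle entirely.

```python
def centered_moving_average(series, window):
    """Average over `window` consecutive points (window must be odd).

    Returns len(series) - window + 1 values, each centered on an input point.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    return [sum(series[i : i + window]) / window for i in range(len(series) - window + 1)]

# Hypothetical daily values: slow upward trend plus a repeating 3-day cycle (+2, -1, -1)
cycle = [2.0, -1.0, -1.0]
series = [10 + 0.5 * day + cycle[day % 3] for day in range(10)]
trend = centered_moving_average(series, 3)
print(trend)
```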


Bayesian Statistics

A Field of Statistics that takes into account

the degree of “Belief” in a Hypothesis:

$P(H|d) = P(d|H)\,P(H)/P(d)$

$P(d) = \sum_i P(d|H_i)\,P(H_i)$, for multiple hypotheses

Can be useful for non-repeatable events

Can be applied to multiple sets of prior

knowledge taken under differing conditions

Bayes Theorem: P(B|A) P(A) = P(A|B) P(B)

Where P(A) and P(B) are unconditional or a

priori probabilities…
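
A minimal sketch of the update rule above for a discrete set of hypotheses. The priors and likelihoods are hypothetical numbers. Feeding a posterior back in as the prior for a second, independent data set illustrates how successive sets of knowledge can be combined.

```python
def posterior(priors, likelihoods):
    """P(H_i|d) = P(d|H_i) P(H_i) / P(d), with P(d) = sum_i P(d|H_i) P(H_i)."""
    joint = [lik * pri for pri, lik in zip(priors, likelihoods)]
    evidence = sum(joint)  # P(d)
    if evidence == 0:
        raise ValueError("the data are impossible under every hypothesis")
    return [j / evidence for j in joint]

# Hypothetical: prior belief 90% / 10%, data 4x more probable under H2 than under H1
priors = [0.9, 0.1]
likelihoods = [0.05, 0.20]
post = posterior(priors, likelihoods)
print([round(p, 3) for p in post])

# A second, independent data set: the first posterior becomes the new prior.
post2 = posterior(post, likelihoods)
print([round(p, 3) for p in post2])
```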


Propagation of Error

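Where $x = f(u,v)$ (from the 1st term in the Taylor Series expansion):

$\Delta f(u,v) \approx \frac{\partial f}{\partial u}\,\Delta u + \frac{\partial f}{\partial v}\,\Delta v$

More generally:

$\sigma_x^2 \approx \sigma_u^2 \left(\frac{\partial x}{\partial u}\right)^2 + \sigma_v^2 \left(\frac{\partial x}{\partial v}\right)^2 + 2\sigma_{uv}^2 \left(\frac{\partial x}{\partial u}\right)\left(\frac{\partial x}{\partial v}\right) + \dots$

Where $\sigma_{uv}^2$ is the Covariance…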
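
A sketch of the first-order propagation formula, with the partial derivatives estimated by central finite differences. The step size `h` and the example values are assumptions for illustration. The argument `cov_uv` plays the role of $\sigma_{uv}^2$. For $x = uv$, the analytic result $\sigma_x^2 = (v\sigma_u)^2 + (u\sigma_v)^2$ is reproduced.

```python
import math

def propagate(f, u, v, sigma_u, sigma_v, cov_uv=0.0, h=1e-6):
    """First-order error propagation for x = f(u, v).

    sigma_x^2 ~ sigma_u^2 (dx/du)^2 + sigma_v^2 (dx/dv)^2 + 2 cov_uv (dx/du)(dx/dv),
    where cov_uv is the covariance (sigma_uv^2) and the partial derivatives are
    estimated by central finite differences with step h.
    """
    dfdu = (f(u + h, v) - f(u - h, v)) / (2 * h)
    dfdv = (f(u, v + h) - f(u, v - h)) / (2 * h)
    var_x = (sigma_u * dfdu) ** 2 + (sigma_v * dfdv) ** 2 + 2 * cov_uv * dfdu * dfdv
    return math.sqrt(var_x)

# Hypothetical example: x = u * v with u = 2.0 +/- 0.1 and v = 3.0 +/- 0.2, uncorrelated.
# Analytically: sigma_x^2 = (3 * 0.1)^2 + (2 * 0.2)^2 = 0.09 + 0.16 = 0.25
sigma_x = propagate(lambda u, v: u * v, 2.0, 3.0, 0.1, 0.2)
print(f"sigma_x = {sigma_x:.4f}")
```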


Binning Effects

One usually “BINS” data in intervals in the

dependent variable. The choice of both BIN

WIDTH and BIN OFFSET may have serious

effects on the analysis…

Bin Width Effects May Include:

A large variation in the PARENT DISTRIBUTION over the

bin width…

Bins with small statistics…

Artifacts due to discrete structure in the measured

values…

Bin Offset Effects May Include:

Mean Value or Fit Slewing…

Artifacts due to discrete structure in the measured

values…
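
A small demonstration of the “discrete structure” artifact, with hypothetical data. An instrument that reports only integers, each value occurring once, has a perfectly flat distribution. With a bin width of 2.5, however, alternate bins hold 3 and 2 readings, producing spurious structure. A bin width of 5 (a multiple of the reporting step) shows the true flat shape. The `offset` argument lets one probe bin-offset effects in the same way.

```python
import math

def histogram(values, width, offset=0.0):
    """Counts in bins [offset + k*width, offset + (k+1)*width), including empty bins."""
    counts = {}
    for v in values:
        k = math.floor((v - offset) / width)
        counts[k] = counts.get(k, 0) + 1
    lo, hi = min(counts), max(counts)
    return [counts.get(k, 0) for k in range(lo, hi + 1)]

# Hypothetical readings from an instrument that only reports integers 0..99,
# each value occurring exactly once (a perfectly flat parent distribution).
readings = list(range(100))

print(histogram(readings, width=2.5)[:8])  # alternates 3, 2, 3, 2, ...: an artifact
print(histogram(readings, width=5.0)[:8])  # flat: every bin holds 5
```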


Falsifiability

To be a valid Scientific Hypothesis, it MUST be

FALSIFIABLE.

Astrology is a good example of a theory that is not falsifiable because the proponents only look at confirming observations.

Likewise, the “Marxist Theory of History” is not falsifiable for a similar reason: proponents tend to subsume ALL results within the theory.

That is, it must make clear, testable predictions that, if shown not to occur, cause REJECTION of the Hypothesis.

Good Scientific Theories generally Prohibit things!


Occam’s Razor

This often-misunderstood Philosophical Principle is critical to Scientific Reasoning!

Originally stated as “…Assumptions

introduced to explain a thing must not be

multiplied beyond necessity…”

The implication is that if two theories are

INDISTINGUISHABLE in EFFECT, then

there is NO Distinction, and one can

proceed to assume the simpler is true!


After Karl Popper…

There are no “Laws” in Science, only

Falsifiable CONJECTURES.

Science is Empirical, which means that an

existing Law (Conjecture) can be Falsified

without rejecting any or all prior results.

There is no absolute “Demarcation” in the

life of a Hypothesis that elevates it to the

exalted status of a LAW… That tends to

happen when it is the only Hypothesis left

standing at a particular time…
