Surveys & Interviews

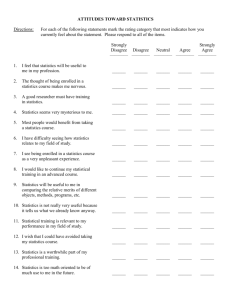

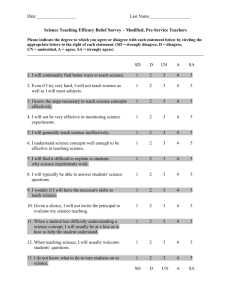

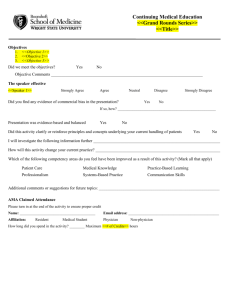

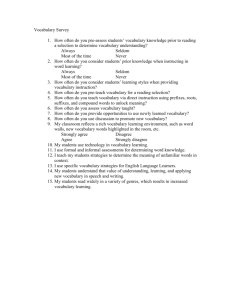

Collecting Data from Users
Uses
• User and task analysis
• Prototype testing
• On-going evaluation and re-design
Data collection methods
• Questionnaires
• Interviews
• Focus groups

Definitions
• Survey:
  – (n): A gathering of a sample of data or opinions considered to be representative of a whole.
  – (v): To conduct a statistical survey on.
• Questionnaire: (n) A form containing a set of questions, especially one addressed to a statistically significant number of subjects as a way of gathering information for a survey.
• Interview:
  – (n): A conversation, such as one conducted by a reporter, in which facts or statements are elicited from another.
  – (v): To obtain an interview from.
– American Heritage Dictionary

Surveying
• Sample selection
• Questionnaire construction
• Data collection
• Data analysis

Surveys – detailed steps
• Determine purpose, information needed
• Identify target audience(s)
• Select method of administration
• Design sampling method
• Design prelim questionnaire
  – Including analysis
  – Often based on unstructured or semi-structured interviews with people like your respondents
• Pretest
• Revise, pretest…
• Administer
• Analyze

Why survey as method?
• Answers from many people, including those at a distance
• Relatively easy to administer, analyze
• Can continue for a long time

Surveys can collect data on:
• Facts
  – Characteristics of respondents
  – Self-reported behavior
    • This instance
    • Generally/usually
• Opinions and attitudes:
  – Preferences, opinions, satisfaction, concerns

Some Limits of Surveys
• Reaching users is easier than non-users
• Relies on voluntary cooperation, possibly biasing the sample
• Questions have to be unambiguous, amenable to short answers
• You only get answers to the questions you ask; you generally don’t get explanations
• The longer or more complex the survey, the less cooperation

Some sources of error
• Sample
• Question choice
  – Can respondents answer?
• Question wording
• Method of administration
• Inferences from the data
• Users’ interests in influencing results
  – “vote and view the results” CNN quick vote: http://www.cnn.com/

When to do interviews?
• Need details that you can’t get from a survey
• Need more open-ended discussions with users
• Small #s OK
• Can identify and gain cooperation from target group
• Sometimes: want to influence respondents as well as get info from them

Sample selection

Targeting respondents
• About whom do you want information?
• About whom can you get information?
  – E.g., non-users are hard to reach

Sampling terminology
• Element: the unit about which info is collected; basis of analysis. E.g., “User”
• Universe: hypothetical aggregation of all elements. “All users”
• Population: a specified aggregation of survey elements. “People who have used this service at least once in the last year.”
• Survey population: aggregate of elements from which the sample is selected. “People who use the service at least once during the survey period.”

Terminology, cont.
• Sampling unit: elements considered for selection.
• Sampling frame: list of sampling units.
• Observation unit: unit about which data is collected. Often the same as the unit of analysis, but not always. E.g., one person (observation unit) may be asked about the household (unit of analysis).
• Variable: a set of mutually exclusive characteristics such as sex, age, employment status.
• Parameter: summary description of a given variable in a population.
• Statistic: summary description of a given variable in a sample.

Sample design
• Probability samples
  – Random
  – Stratified random
  – Cluster
  – Systematic
  – Size: if 10/90% split, 100; if 50/50, 400
    • Yale’s slide
    • 30-50 in each cell
  – GOAL: Representative sample
• Non-probability sampling
  – Convenience sampling
  – Purposive sampling
  – Quota sampling

Representative samples
• Which characteristics matter?
• Want the sample to be roughly proportional to the population in terms of groups that matter
• E.g., students by gender and grad/undergrad status:
• http://opa.vcbf.berkeley.edu/IC/Campus.Stats/CampStats_F00/CS.F00.Table.F2.htm graduate/undergraduate…a

Active vs passive sampling
• Active: solicit respondents
  – Send out email
  – Phone
  – Otherwise reach out to them
  – Follow up on non-respondents if possible
• Passive
  – E.g., on web site
  – Response rate may be unmeasurable
  – Heavy users may be over-represented
  – Disgruntled and/or happy users over-represented

Response Rates
• Low rates may lead to bias
  – Whom did you miss? Why?
• How much is enough?
  – Babbie: 50% is adequate; 70% is very good
• May help if they understand purpose
  – Don’t underestimate altruism
• Incentives may increase response
  – Reporting back to respondents as a way of getting response

Example of a careful sample design
• http://www.pewinternet.org/reports/reports.asp?Report=55&Section=ReportLevel1&Field=Level1ID&ID=248

RECAP
• We collect data from users/potential users for:
  – User and task analysis
  – Prototype testing
  – On-going evaluation and re-design
• Methods include:
  – Surveys, interviews, focus groups
• Different methods useful for different purposes

Some Issues Common to Different Methods
• Know your purpose!
  – Match method to purpose and feasibility
• Whom do you need/can you get to participate?
  – Population, sample composition, sample selection methods, size
  – Response rate, respondent characteristics (bias)

Common Issues, cont.
• What do you need to know?
  – What can/will respondents tell you?
  – How will it help?
• How do you ask what you want to know?
  – Question construction, wording
  – Question ordering
• What do you do with results?
  – Reporting and analysis

Types of web surveys
• Comprehensive
• Quick polls
  – Focused, one or few questions
  – http://www.gomez.com/ratings/index.cfm?topcat_id=19&firm_id=1768&CFID=295681&CFTOKEN=8136111
• Short, focused surveys
• Guestbooks, user registration, user feedback
  – http://www.bookfinder.com/interact/comments/

Uses of surveys of web sites
• Identify users
  – Describe their characteristics
• Describe their behavior
• Ask their needs, preferences
• Assess user satisfaction/response
• Identify user problems, dissatisfactions
• Solicit ideas for improvement

Problems with Web Surveys
• Population?
  – Size
  – Characteristics
• Response rate?
  – Multiple responses from same person OK?
• Biased sample?
• Users competent to answer?
  – E.g., will they answer after they have used the site enough to be able to judge?
• Non-users not represented; infrequent users under-represented?

Questionnaire construction

Questionnaire construction
• Content
  – Goals of study: What do you need to know?
  – What can people tell you?
• Conceptualization
• Operationalization
  – E.g., how do you define “user”?
• Question design
• Question ordering

Topics addressed by surveys
• Respondent (user) characteristics
• Respondent behavior
• Respondent opinions, perceptions, preferences, evaluations

Respondent characteristics
• Demographics
  – General http://www.gvu.gatech.edu/user_surveys/survey-1998-10/questions/general.html
  – Professional role (manager, student..)
• User role (e.g., buyer, browser…)
• Expertise – hard to ask
  – Domain
  – Technology
    • http://www.gvu.gatech.edu/user_surveys/survey-1998-10/questions/general.html
  – System

Behavior
• Tasks (e.g., for what are they using this site?)
• Site usage, activity
  – Frequency; common functions – hard to answer accurately
  – Self-reports vs observations
• Web and internet use: Pew study

Opinions, preferences, concerns
• Content
• Organization, architecture
• Interface
• Perceived needs
• Concerns
  – http://www.gvu.gatech.edu/user_surveys/survey-1998-10/questions/privacy.html
• Success, satisfaction
  – Subdivided by part of site, task, purpose…
• Other requirements
• Suggestions

Problems with questions
• Social acceptability
• Privacy
  – “Do you use the internet to view pornography?”
• Difficulty wording unambiguously
• International concerns
  – Language
  – Social acceptability

Problems: topics hard to conceptualize, operationalize
• E.g., “Why did you use the CDL today?”
  – Teaching, research…
    • My category of work or task
  – To find an electronic journal, locate a book, find a citation…
    • To locate a kind of resource
  – To find material on x topic
    • The subject area
  – To save me time
    • I.e., I used this rather than another way of accessing the same resource

Question construction

Question formulation
• Match respondents’ language
• Match respondents’ behavior
• What do they want to tell you?
• What can they tell you?
  – Recent CNN.com poll: “Do you think the sentence given to x was too long, about right, too short?” What was the sentence? (What was the crime?)
• Beware of compound questions, hidden assumptions:
  – ‘Did you order something? If so, did you….’
  – What if only browsing?

Question formulation: simplicity and clarity
Complete the following sentence in the way that comes closest to your own views: 'Since getting on the Internet, I have ...'
• ... become MORE connected with people like me.
• ... become LESS connected with people like me.
• ... become EQUALLY connected with people like me.
• ... Don't know/No answer.
http://www.gvu.gatech.edu/user_surveys/survey-1998-10/questions/general.html

Survey questions – format
• Open-ended http://www.bookfinder.com/interact/comments/
  – “What is your job title?” _______
  – “What do you find most difficult about your job?”
• Closed-ended (one answer; multiple answers)
  – http://www.useit.com/papers/surveys.html
    “Select the range that best represents the total number of staff…” 1-2 3-5 6-10…
  – Paired characteristics/semantic differential
    Friendly__|___|___|___|____| Unfriendly

Question format, cont.
• Ordinal scale/“Likert scale”
  Strongly agree, agree, neutral, disagree, strongly disagree
  http://www.amazon.com/exec/obidos/tg/stores/detail/-/books/073571102X/rate-this-item/104-0765616-4703139
  – Rating scale
  – Matrix (usability survey, qn 5)
• Always include neutral, “other,” “N/A” (not applicable)

Closed-ended questions
• Answers need to be comprehensive and mutually exclusive;
• OR
  – Allow people to give more than one answer
  – Tell them how to choose
  “Why did you come to SIMS?”
• How many responses to allow?
  – As many as your respondent needs
  – Likert scale-like questions:
    • 5 or 7 is usual; can respondent differentiate 7?
    • Very strongly agree; strongly agree; agree; neutral; disagree; strongly disagree; very strongly disagree?
  – Odd vs even: odd allows a neutral midpoint, even forces a choice.

Filter and Contingency questions
• 1. “Have you ever used our competitor?”
  – “If no, go to question 3.”
  – 2. “If yes, how would you rate….”
• Did you apply to any graduate program other than SIMS?
  – If yes…
  – If no…

Layout: consistency
Circle the answer that best matches your opinion.
SIMS is a great place to study.
  Strongly agree   Agree   Neutral   Disagree   Strongly disagree
UC Berkeley is a great university.
  Strongly agree   Agree   Neutral   Disagree   Strongly disagree
IS214 is a great course.
  Strongly disagree   Disagree   Neutral   Agree   Strongly agree

Include Instructions!
• One answer to each question, or multiples?
  – If online, can program the survey to test responses and let users know they have violated guidelines.
• Respond every time you visit this site?
• Be sure it’s clear what to do if a question does not apply.
  – “Why did you choose SIMS over any other programs that accepted you?”

Question ordering
• Group similar questions
• Use headings to label parts of survey, topics
• Funneling:
  – General to particular
  – Particular to general
• Keep it short! Response falls off with length.

Pre-test, pre-test, pre-test!
• With people like expected respondents
• Looking for:
  – Ambiguous wording
  – Missing responses
  – Mismatch between your expectations and their reality
  – Any other difficulties respondents will have answering, or you will have interpreting their responses

Web Survey Problems
• Who is the population? Self-selected sample.
• Stuffing the ballot box
  – CNN.com polls
• How to know what response rate is
• How to get responses (1) after they have used site (2) before they leave
• What are you assessing and what are they responding to?
  – E.g., design of site, or content? Presentation of content, or content of content?
• Loss of context – what exactly are you asking about, what are they responding to?
  – Are you reaching them at the appropriate point in their interaction with site?

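The Include Instructions slide notes that an online survey can be programmed to test responses and tell users when they have violated the guidelines. A minimal sketch of that idea follows, also covering the filter/contingency pattern from the earlier slide. The question ids, the `QUESTIONS` schema, and the `validate` helper are hypothetical names chosen for illustration, not part of any real survey tool.

```python
# Hypothetical question definitions; the ids, fields, and choices are
# illustrative assumptions, not taken from a real survey product.
QUESTIONS = {
    "q1": {"text": "Have you ever used our competitor?",
           "choices": ["yes", "no"], "multiple": False},
    "q2": {"text": "If yes, how would you rate it?",
           "choices": ["good", "neutral", "poor"], "multiple": False,
           # Contingency question: applies only when q1 was answered "yes".
           "applies_if": ("q1", "yes")},
    "q3": {"text": "Why did you come to this site? (choose all that apply)",
           "choices": ["browse", "buy", "other"], "multiple": True},
}


def validate(responses):
    """Return a list of messages describing violated guidelines.

    `responses` maps a question id to the list of choices selected.
    """
    problems = []
    for qid, question in QUESTIONS.items():
        selected = responses.get(qid, [])
        condition = question.get("applies_if")
        if condition is not None:
            filter_qid, required = condition
            if required not in responses.get(filter_qid, []):
                # The question does not apply, so it should be left blank.
                if selected:
                    problems.append(f"{qid}: skip this question")
                continue
        if not selected:
            problems.append(f"{qid}: please answer this question")
        elif len(selected) > 1 and not question["multiple"]:
            problems.append(f"{qid}: choose only one answer")
        elif any(choice not in question["choices"] for choice in selected):
            problems.append(f"{qid}: unknown answer")
    return problems


# A respondent who answered "no" to q1 but still rated the competitor.
print(validate({"q1": ["no"], "q2": ["poor"], "q3": ["other"]}))
```

Returning messages instead of rejecting the submission outright leaves room to let people know what to fix without forcing an answer, which relates to the trade-off between blank responses and annoyed users.
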
Web Survey Problems II
• Incomplete responses: avoiding blank responses, and annoying users
• Respondents may not know how long the survey is
  – Let people know
• Multiple submissions

Survey administration

Human subjects considerations
• Not hurt by the process itself (e.g., uncomfortable questions)
• Not hurt by uses of the data
  – Job etc. not in jeopardy
  – Not at risk for criminal prosecution
• Confidentiality or anonymity
• Consent, voluntary participation
• http://cphs.berkeley.edu

Ways of administering surveys
• On a web site
  – Open access
  – Limited access
• Email (with URL)
• Phone
• Paper
  – Mail
  – Users pick up on their own
  – Hand out in person
  – Fax
• Face to face

Resources for online surveys
Examples:
• Zoomerang
  – http://www.zoomerang.com/
• Perseus Development Corp.
  – http://www.perseusdevelopment.com/
• Websurveys.net
  – http://www.web-surveys.net/

Telephone surveys
• Reach a broader cross-section, more representative sample
  – Non-internet users, people outside your usual group (whatever that is)
  – More chance of persuading people to participate
• More labor intensive
• More intrusive

Other Active Methods of Getting User Info
• User journals (e.g., Arbitron ratings)

Keeping track of responses
• Mail or phone procedure: If you have a list of people contacted, and a way of knowing who has responded, you can follow up with those who have not.
  – Pew tried phone survey 10 times, varying times of day.
  – Trying to maximize response rate
  – Characterizing non-respondents
  – Privacy sensitivity? E.g., sealed envelopes.
• If no list, a reminder that says, if you have not yet responded, please do so.

Reporting and analysis

Reporting
• Purpose
• Methods
• Data
• Interpretation
• Conclusions, implications

Easy method: Questionnaire with the responses filled in
Q5 Do you use a computer at your workplace, at school, at home, or anywhere else on at least an occasional basis?
   %
  64  Yes
  36  No
   *  Don’t know/Refused
Q6 Do you ever go online to access the Internet or World Wide Web or to send and receive email?
   %
  49  Goes online
  15  Does not go online
   *  Don’t know
  36  Not a computer user
http://www.pewinternet.org/reports/pdfs/Report1Question.pdf

Analysis
• Univariate descriptive statistics
  – Frequency
    • Table: note that they quote the question exactly as asked
      – http://www.harrisinteractive.com/harris_poll/index.asp?PID=260
    • Pie chart http://www.cc.gatech.edu/gvu/user_surveys/papers/1997-10/sld015.htm
      – http://pm.netratings.com/nnpm/owa/NRpublicreports.toppropertiesweekly
  – Mean
  – Standard deviation
  – Quartiles
  – Max, min

Analysis
• Bi-variate
  – Cross-tabs http://www.census.gov/population/estimates/state/st-99-1.txt,
    • http://www.cc.gatech.edu/gvu/user_surveys/papers/1997-10/sld019.htm
  – Correlations
  – Graphs http://www.cc.gatech.edu/gvu/user_surveys/papers/1997-10/sld006.htm
    • http://www.cc.gatech.edu/gvu/user_surveys/survey-1998-10/graphs/general/q54.htm

Types of measures
• Dichotomous
  – Yes/no; male/female
  – Can report: frequencies
• Nominal
  – Favorite color; most frequent activity; race/ethnicity
  – Frequencies, mode
• Ordinal
  – Strongly agree, agree, neutral, disagree, strongly disagree
  – Frequencies, mode, median
• Interval/ratio
  – # of staff; salary
  – Can compare values not only in terms of which is larger or smaller, but also how much larger or smaller one is.
  – Ratio variables have a natural zero point, e.g., weight, length
  – Interval variables do not, e.g., temperature. 100°F is not twice as warm as 50°F.
  – Frequencies, mode, median, mean.

Crosstabs
             Undergrads     Grads          Total
             (n=120)        (n=200)        (n=320)
             %      n       %      n       %      n
Satisfied    60     71      13     25      31     96
Dissatis.    40     47      87     165     69     212
Total        100%   n=118   100%   n=190   100%   n=308
No ans.             n=2            n=10           n=12

Confidence intervals
• http://www.pewinternet.org/reports/reports.asp?Report=55&Section=ReportLevel1&Field=Level1ID&ID=248
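
The Crosstabs and Confidence intervals slides can be tied together with a short worked example. The sketch below computes column percentages from raw counts and an approximate 95% margin of error for a proportion. The counts are my reading of the Crosstabs slide, and the function names are illustrative. The margin-of-error formula is the standard normal approximation for a simple random sample, which is an assumption on my part; the slides do not say which method the linked Pew report uses.

```python
import math


def column_percentages(counts):
    """Convert one crosstab column (answer -> count) to whole percentages.

    Respondents who gave no answer are assumed to be excluded already.
    """
    total = sum(counts.values())
    return {answer: round(100 * n / total) for answer, n in counts.items()}


def margin_of_error(p, n, z=1.96):
    """Approximate 95% margin of error for a sample proportion.

    Uses the normal approximation z * sqrt(p * (1 - p) / n), which
    assumes a simple random sample of n respondents.
    """
    return z * math.sqrt(p * (1 - p) / n)


# Counts as read from the Crosstabs slide (an assumption about the layout).
undergrads = {"Satisfied": 71, "Dissatisfied": 47}
grads = {"Satisfied": 25, "Dissatisfied": 165}

print("Undergrads:", column_percentages(undergrads))
print("Grads:", column_percentages(grads))

# Uncertainty around the undergraduate "Satisfied" share.
n_undergrads = sum(undergrads.values())
p_satisfied = undergrads["Satisfied"] / n_undergrads
moe = margin_of_error(p_satisfied, n_undergrads)
print(f"Undergrads satisfied: {p_satisfied:.0%} +/- {moe:.0%}")
```

With 118 undergraduate answers the margin comes out at roughly 9 percentage points, so small cells deserve cautious interpretation. Under the same formula, the sizes on the Sample design slide (100 for a 10/90 split, 400 for a 50/50 split) correspond to margins of about 6 and 5 points respectively.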