

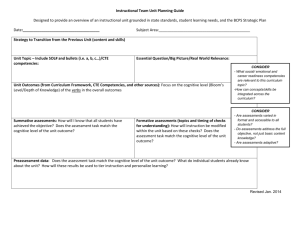

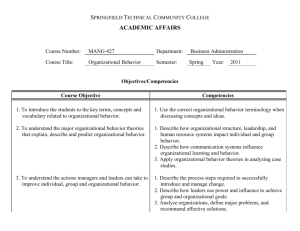

Aligning Course Competencies using Text Analytics
Dr. Swapna Gottipati
Assistant Professor (Education), School of Information Systems, Singapore Management University

Introduction
“College standards are becoming diluted and there is a fuzziness about what faculty teach and what is expected from students.” (Miller & Malandra, 2006, p. 3 / Commission on the Future of Higher Education)

Background
- Assessment types: Labs, Project, Assignments, Exams, Case studies, Quizzes
- Competency: an intended effect of the educational program experiences that has been stated in specific, observable, and measurable terms.
- E.g.: “Create and evaluate the business process model for a given real world scenario”
- Assessment should be tailored to a specific competency and should be reliable, valid and fair for that competency.

What is Course Alignment?
- Learning objectives: What do I want students to know how to do when they leave this course?
- Assessments: What kinds of tasks will reveal whether students have achieved the learning objectives I have identified?
- Instructional activities: What kinds of activities in and out of class will reinforce the learning objectives and prepare students for assessments?
- Diagram labels: Competencies, Course Evaluation, Assessments, Teaching activities
We focus on aligning the competencies and assessments in this talk.

Why is it important?
- Enabling teachers to provide students with opportunities to learn and practice.
- Enabling students to focus their efforts on activities for “good grades” which are more likely to translate into “good learning”.
- Suggesting assessment questions to the teaching staff.
- Discovering the gaps in the overall curriculum.

Agenda
- Motivation
- Challenges
- Solution
- Evaluations
- Conclusions

Motivation
- Academic success = what students remember + what students are able to do with their knowledge.
- Alignment is a way of evaluating the course components.
- We need alignment models (a methodology) to measure the alignment.
Source: John Biggs, "Aligning Teaching and Assessing to Course Objectives"

Current Alignment Models

| Model | Key Features | Review and Analysis Time | Training Time |
|---|---|---|---|
| Webb | 1. Qualitative ratings 2. Quantitative results | 1 day per team and match items, depth of knowledge (multiple grades); 1 month turnaround for analysis and report | ½ day to train reviewers |
| SEC | 1. Content matrix 2. Measure of alignment highly predictive of student achievement scores | 1 day per team for coding items and benchmarks in matrix; ½ day for readers to complete survey on instruction; 1 week for analysis and report | ½ day to train reviewers |
| Achieve | 1. Reviewers need to make inferences 2. In-depth review | Alignment review takes 1 day per test; report and analysis takes 1 to 1½ months | Has pool of highly trained reviewers |

Manual, slow, resource-intensive, not scalable.

Porter Model
- Alignment is perfect if the proportions for the assessment match, cell by cell, the proportions for the standards (competencies).
- We need automated methods that leverage the existing alignment models.

Goal: How to align the competencies and assessments?
- Analyse the assessments with respect to the competencies.
- Analyse the assessments of the course to find gaps or useful patterns.
- Measure the alignment between the assessments and competencies quantitatively.

Challenges
- Assessments and competencies are textual in nature.
- Topics are not explicitly specified in any of the documents.
- Manual discovery of the topics and cognition levels is expensive and burdensome.
To construct the competency-cognitive and assessment-cognitive matrices, we need a principled method that can automatically:
1. Extract topics from the documents.
2. Extract the cognition domains for each topic from both competencies and assessments.

Solution Motivation
- Levels of skills can be discovered with the standard cognitive domain of Bloom’s taxonomy.
- Porter’s alignment model uses topics, standards (competencies) and assessments on the cognitive domain to discover alignment.
- Keyphrase extraction models are the means to extract the important terms or topics from the documents.
- The Sensitivity Alignment Score uses the sensitivity measure, also known as the true positive rate. Given the list of topics and the cognitive competencies, we measure to what extent the assessments test the topics at the cognitive levels of the competencies.

Competency-Assessment Cube
- Learning Outcomes (subsumes competencies): Integration skills, Design and development skills, Management skills, Learning to learn skills, Collaboration skills, Change management skills, Global skills, Social skills
- Assessments: Labs, Projects, Quiz, Exam, Case studies, Assignments
- Topics
- Cognitive taxonomy

Solution

| Topic (Competency phrase) | Assessment Phrase |
|---|---|
| to-be option | to-be scenario |
| to-be analysis | path analysis |
| organizational business process | as-is process |

Two topic-by-cognitive-level matrices for topics T1 to T4 (all four column headers appear as C1 on the slide):

| Topic | First matrix | Second matrix |
|---|---|---|
| T1 | 0.2 0.1 0.0 0.3 | 0.3 0.0 0.0 0.2 |
| T2 | 0.0 0.0 0.3 0.2 | 0.1 0.1 0.3 0.2 |
| T3 | 0.2 0.2 0.3 0.1 | 0.1 0.2 0.3 0.1 |
| T4 | 0.3 0.1 0.1 0.0 | 0.2 0.1 0.2 0.1 |

Resulting value shown on the slide: 0.93

Evaluations
- School of Information Systems undergraduate course: Process Modeling and Solution Blueprinting
- Competencies: 62
- Assessments: 4 labs, assignment, project and exam

Data Analysis
Figures: Competency Analysis by Cognitive Levels; Word cloud for the competency phrases; Assessments Analysis by Cognitive Levels; Word cloud for the assessment phrases.

Data Analysis
- Topics: application model, as-is process, associate cost, associate task
- Cognitive levels (for both Competencies and Assessments): Analysis, Application, Comprehension, Evaluation, Knowledge, Synthesis
- Cell values as extracted, twelve per row:
  0.00 0.00 0.00 0.00 0.02 0.00 0.03 0.03 0.00 0.00 0.02 0.02
  0.00 0.00 0.00 0.00 0.00 0.01 0.00 0.00 0.00 0.00 0.00 0.00
  0.00 0.02 0.00 0.00 0.01 0.02 0.00 0.00 0.01 0.02 0.00 0.00
  0.00 0.00 0.00 0.00 0.00 0.01 0.00 0.00 0.00 0.01 0.00 0.00
Porter’s alignment tables for 4 sample topics in our dataset.
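
The two measures named in the slides can be sketched in a few lines of Python. This is a minimal illustration, not the author's implementation. It assumes the commonly cited form of Porter's alignment index, 1 − Σ|x − y| / 2 over cell proportions, and it assumes that a (topic, cognitive level) cell counts as "required" when its competency weight is positive and as "tested" when its assessment weight is positive. The function names and the toy weights are hypothetical.

```python
from typing import Dict, Tuple

Cell = Tuple[str, str]  # (topic, cognitive level)


def normalise(weights: Dict[Cell, float]) -> Dict[Cell, float]:
    """Scale raw cell weights so they sum to 1 (cell proportions)."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("matrix has no non-zero cells")
    return {cell: w / total for cell, w in weights.items()}


def porter_alignment(standards: Dict[Cell, float],
                     assessments: Dict[Cell, float]) -> float:
    """Porter-style index: 1 - sum(|x - y|) / 2 over all cells.

    Equals 1.0 when the two proportion matrices match cell by cell.
    """
    x = normalise(standards)
    y = normalise(assessments)
    cells = set(x) | set(y)
    diff = sum(abs(x.get(c, 0.0) - y.get(c, 0.0)) for c in cells)
    return 1.0 - diff / 2.0


def sensitivity_alignment(standards: Dict[Cell, float],
                          assessments: Dict[Cell, float]) -> float:
    """True positive rate: share of required cells that are also tested.

    Assumption: a cell is required/tested when its weight is > 0.
    """
    required = {c for c, w in standards.items() if w > 0}
    if not required:
        raise ValueError("no required cells in the competency matrix")
    tested = {c for c, w in assessments.items() if w > 0}
    return len(required & tested) / len(required)


# Hypothetical toy weights (phrase counts), not data from the course.
competency_weights = {
    ("as-is process", "Analysis"): 2,
    ("as-is process", "Application"): 1,
    ("executive view", "Knowledge"): 1,
}
assessment_weights = {
    ("as-is process", "Analysis"): 3,
    ("as-is process", "Application"): 1,
}

print(porter_alignment(competency_weights, assessment_weights))       # 0.75
print(sensitivity_alignment(competency_weights, assessment_weights))  # 2 of 3 cells
```

In this toy case one required cell (executive view at the Knowledge level) is never assessed, so the sensitivity is two out of three, while the Porter-style index also penalises the difference in emphasis on the remaining cells.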
Results - Stage 1 (Keyphrase Extraction)

| Phrases | Count |
|---|---|
| Competency Phrases (Topics) | 55 |
| Assessment Phrases | 258 |
| Assessment Phrases similar to topics | 57 |

Table 1: Statistics of the topics, competencies and assessments

| Topic | Assessment Phrase |
|---|---|
| to-be option | to-be scenario |
| to-be analysis | path analysis |
| organizational business process | as-is process |

Table 2: Example topics and corresponding assessment phrases. These phrases might exist in multiple assessments (labs, projects etc.).

Results - Stage 2 (Alignment)
Alignment results: the cognitive distribution of competencies and assessments is similar.

Results - Gaps & Insights
- Analyse the assessments of the course to find gaps or useful patterns.
- The heatmap shows which topics are assessed and to what extent.

Results - Gaps & Insights
- Assessment rates for topics such as executive view and external entity are very low.

Results - Alignment Score
- The sensitivity alignment score is 90.8%.
- This indicates that the assessments are highly aligned with the competencies.

Results - Analysis
1. Misalignment - Improve the competency definitions

| Topics (Competency Phrases) missing in assessments |
|---|
| business-it misalignment |
| enterprise level |
| executive view |
| hierarchy view |

Example topics which are missing in the assessments.
The above topics are not aligned, and therefore the competencies can be redefined.

Results - Analysis
2. Misalignment - Recommend Questions to the Instructor

| Topic | Missing Assessments |
|---|---|
| business activity | Analysis, Comprehension |
| business-it misalignment | Comprehension |
| enterprise level | Application, Knowledge |
| executive view | Application, Knowledge |
| hierarchy view | Application, Knowledge |

Example topics and missing assessments at cognitive levels.
The above topics are not tested by the assessments at these levels, and questions on them can be suggested to the teaching staff.

Conclusions
- Some topics/keyphrases are noisy or non-coherent.
- Topics can be improved with external knowledge such as Wiki.
- Alignment analysis is incomplete without understanding the teaching activities.
- Our alignment model can be applied at the program level to measure the overall curriculum alignment.

Thank you for your kind attention.
http://www.mysmu.edu/faculty/swapnag/
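
As a companion to the gap analysis in the results, the "missing assessments" table could be derived with a simple set difference between the (topic, cognitive level) pairs required by the competencies and those covered by the assessments. The sketch below is an assumption about how such a table might be produced, not the method used in the talk. The function name `missing_levels` and the input pairs are hypothetical, chosen so that the output mirrors two rows of the example table.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Cell = Tuple[str, str]  # (topic, cognitive level)


def missing_levels(required: Iterable[Cell],
                   tested: Iterable[Cell]) -> Dict[str, List[str]]:
    """Map each topic to the cognitive levels that the competencies
    require but that no assessment covers."""
    tested_set = set(tested)
    gaps = defaultdict(set)
    for topic, level in required:
        if (topic, level) not in tested_set:
            gaps[topic].add(level)
    # Sort topics and levels so the report is stable across runs.
    return {topic: sorted(levels) for topic, levels in sorted(gaps.items())}


# Hypothetical input pairs for illustration only.
required_pairs = [
    ("business activity", "Analysis"),
    ("business activity", "Application"),
    ("business activity", "Comprehension"),
    ("enterprise level", "Application"),
    ("enterprise level", "Knowledge"),
]
tested_pairs = [
    ("business activity", "Application"),
]

recommendations = missing_levels(required_pairs, tested_pairs)
for topic, levels in recommendations.items():
    print(f"{topic}: {', '.join(levels)}")
```

Each line of the output names a topic and the cognitive levels at which an instructor might add questions.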