University of Bristol 14th of March, 2008 Presentation

advertisement

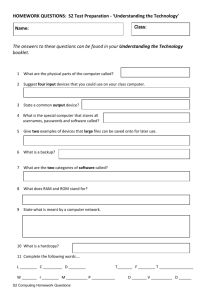

CREOLE, 21 October 2009
Using English in final examinations: Rationales and realities
SPINE team

What is SPINE?
• Student Performance in National Examinations: the dynamics of language in school achievement (SPINE), www.bristol.ac.uk/spine (ESRC/DfID RES-167-25-0263)
• Bristol team: Rea-Dickins, Yu, Afitska, Sutherland, Olivero, Erduran, Ingram, Goldstein
• Zanzibar team: Z. Khamis, Mohammed, A. Khamis, Abeid, Said, Mwevura
• LTA = high stakes: more than 50% of school-aged children leave school at the end of Basic Education as unsuccessful learners

Case 1: context
Khadija is 15 years old and in Form 2 of secondary school. Her learning in primary school was entirely through the medium of Kiswahili, with English taught as a subject. On transition to secondary school she experienced an abrupt shift in the medium of instruction: in Form 1 she was expected to learn all her subjects through English. In reality, however, both her L1 (Kiswahili) and her L2 (English) are used when she is taught Maths, Science, Geography and her other subjects. Yet at the end of Form 2 she is required to demonstrate her subject learning in formal school examinations entirely through the medium of English.

[SPINE diagram]

The Policy & the Politics: historical perspectives
• Continuous assessment (CA) was introduced in Tanzanian schools in 1976, following the Musoma Resolution (NECTA Guidelines 1990:1).
• In the Musoma Resolution, Nyerere (1974) (see Lema et al 2006:111-112) criticised the examination system that had existed since independence and argued that it needed to change.
• He maintained that existing assessment procedures favoured competitive, written, theoretically and intellectually focused examinations.
• He saw the need to make assessment procedures friendlier, less competitive and more practically focused.
Accordingly, he directed that student assessment must:
  – include work that enables students to function effectively in their environments;
  – cover students’ performance in both theoretical and practical work;
  – be carried out throughout the year.
• The National Examinations Council of Tanzania (NECTA) began to include projects, character assessment, exercises and tests in the assessment system. Teachers were then given responsibility to:
  – measure students’ ability to use their acquired knowledge and skills in their local environments;
  – keep continuous records of their progress.

Tanzania and Zanzibar Education Policies
Both the Tanzania Education and Training Policy (1990:79) and the Zanzibar Education Policy (2006:28) stated that continuous assessment (based on teacher assessment), combined with the final examination, would be the basis for awarding certificates at secondary level.
• The Zanzibar Education Master Plan 1996-2006 emphasized consistency between teacher assessment and national examinations, for selection purposes and for quality control (p.43).

Language policy and Assessment
• Student assessment is affected by language policy because students have to demonstrate their knowledge and abilities through the language sanctioned by the system.
• The Zanzibar Education Policy of 1995 (Kiswahili version, Section 5.72, p.28), the Zanzibar Education Master Plan 1996-2006 (p.43) and the Education Policy of 2006 (p.38) all emphasized the use of English as the medium of instruction for all subjects in secondary education except Kiswahili and Islamic studies.
• As a corollary, English, as the language of instruction for science at secondary level, has automatically become the language of assessment, in both continuous assessment and examinations.
• This implies that students’ opportunities to demonstrate their knowledge in examinations are limited if they are not proficient in the language.

Coursework assessment as part of national examining: implementation issues
At Form II level, Teacher Assessment (TA) was implemented using the following assessment procedures:
• Classwork
• Oral questions
• Homework
• Weekly tests
• Terminal tests

Continuous Assessment format & Guidelines
Term: ________  Class: ________  Subject: ________

| Pupil’s name | Month 1 | Month 2 | Month 3 | Month 4 | Total C/work | Exam | Grand Total |
|---|---|---|---|---|---|---|---|
| (weighting) | 10% | 10% | 10% | 10% | 40% | 60% | 100% |
| X | 5 | 6 | 3 | 8 | 22 | 30 | 52 |

• Note: Coursework assessment marks will be obtained from classwork, homework and weekly tests, or as otherwise directed by the Department or the Ministry. All the marks a pupil scores in these activities within a given month will be combined and converted to a mark out of ten (10%) before being entered in the relevant space on this form.
• Source: Ministry of Education Working Guidelines: Guideline No. 9 (1995)

Additional notes from another version
• If the term is shorter or longer than four (4) months, the teacher should pick four activities at equal intervals. The teacher is advised to give as many tests as he or she can in a term, but should choose only four for recording.
• To obtain a pupil’s terminal mark for each subject, the exam itself should contribute 60% and classroom (coursework) assessments should contribute 40%.
• The language to be used is as indicated in the lesson plan. Source: Ministry of Education Working Guidelines: Guideline No. 9 (1995)
• These notes appear to contradict the first version, which may be causing confusion.
The first version requires teachers to average a pupil’s marks for each month, whereas the second requires them to choose a single activity from which to record the marks.
• Homework, classwork, oral questions and weekly tests make up 40% and the terminal exam 60%, giving 100% when combined.
• The resulting mark out of 100 is sent to the Department of Curriculum and Examination for further processing.
There was variability among teachers in:
• the specific activities that make up teacher assessment marks;
• the number of activities they take marks from each month;
• how to deal with absent students;
• how to obtain the 10% mark for each month: some picked the activity in which students did best, some picked one at random, and some used the average;
• their understanding of what assessment is to be done;
• their awareness of the assessment guidelines;
• the percentage of marks that goes to the final examination;
• the extent to which teachers within a school share what they know and practise about the assessment guidelines;
• the level of monitoring of Teacher Assessment by head teachers.
As a result, students in the same school, and in different schools, are assessed in different ways.

Overall, teachers were observed using L1 during lessons in 49% of cases: some of the time in 20% of cases, most of the time in 11%, and rarely in 18%.

Teachers presented concepts clearly most of the time in 28% of cases and some of the time in 62%. Their presentation of concepts was unclear (6%) or confusing (3%) in 9% of cases.

In 42% of the cases observed, teachers rarely probed pupils’ comprehension, and in 24% they did not probe it at all.
Teachers frequently probed pupils’ comprehension in 8% of cases and sometimes did so in 24%.

Teachers used a wide range of feedback strategies in only 1% of cases and gave hardly any or no feedback in 19%. In 78% of cases, they used either some range (24%) or a limited range (54%) of feedback strategies.

In 83% of cases, learners rarely (28%) or almost never/never (55%) provided extended responses; in 16% of cases they did so some of the time, and in only 2% most of the time.

Findings
[Figures not reproduced]

Student performance on exam items
English reading comprehension question: How whales resemble man
45 students took this item:
• 35.6% = no answer
• 26.7% = wrong answer
• 28.9% = partially correct answer
• 8.8% = correct answer

Interview: D1, who did not answer Q3, explains
D1: “because I did not understand by this this … resemble” (lines 115-117)
Int: “If I tell you that resemble means ‘to look like’ … can you do the question now?”
D1: “Yes”
Int: “OK so what’s the answer?”
D1: “Man … is warm blooded … and whales also … whales have lungs and man also have lungs …” (lines 122-133)

Maths: original & modified question
[Item images not reproduced]

Linguistic accommodations make a difference
Modifying the item, i.e. changing the word ‘below’ to ‘under’ or ‘younger’, matters: “below 14 years” (referring to the ages shown in the item’s table) was interpreted in 3 different ways:
• including the 14-year-olds (3+2+5+4+2=16)
• the cells to the left of the cell containing 14 (10, 11, 12, 13)
• the cell below the cell containing 14 (which says 2)

Biology: responses to original item
• No answer = 67.4%
• Wrong answer = 21.7%
• Partially correct answer = 6.5%
• Correct answer = 4.4%

Biology: on locusts
[Pictures not reproduced]
Questions:
a) In which picture do you think the locust will/may die?
b) Why do you think it will/may die?
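The item-level breakdowns above are plain percentages of test takers, so a few derived figures follow directly from them. The minimal Python sketch below (all names are hypothetical, chosen for illustration) checks that each breakdown sums to 100%, derives the response rate and the share of answers earning any credit, and, assuming the English percentages were rounded from the 45 students who took that item, recovers approximate head counts.

```python
"""Arithmetic check on the item-level response breakdowns (percentages from the slides)."""

# Percentage of test takers in each response category, as reported on the slides.
ENGLISH_RC = {"no answer": 35.6, "wrong": 26.7, "partially correct": 28.9, "correct": 8.8}
BIOLOGY_ORIGINAL = {"no answer": 67.4, "wrong": 21.7, "partially correct": 6.5, "correct": 4.4}


def summarise(breakdown: dict[str, float]) -> dict[str, float]:
    """Return the category total, the response rate and the share given any credit (%)."""
    return {
        # Should be 100% up to rounding of the individual categories.
        "total": round(sum(breakdown.values()), 1),
        # Anyone who wrote something, right or wrong, counts as having responded.
        "response_rate": round(100.0 - breakdown["no answer"], 1),
        # Partially correct plus fully correct answers.
        "at_least_partial": round(breakdown["partially correct"] + breakdown["correct"], 1),
    }


def implied_counts(breakdown: dict[str, float], n: int) -> dict[str, int]:
    """Approximate head counts, ASSUMING the percentages were rounded from n test takers."""
    return {category: round(n * pct / 100) for category, pct in breakdown.items()}


if __name__ == "__main__":
    print(summarise(BIOLOGY_ORIGINAL))
    # {'total': 100.0, 'response_rate': 32.6, 'at_least_partial': 10.9}
    print(implied_counts(ENGLISH_RC, 45))
    # {'no answer': 16, 'wrong': 12, 'partially correct': 13, 'correct': 4}
```

On the Biology figures this gives a response rate of 32.6% and 10.9% at least partially correct, consistent with the results reported for the original locust item. The implied English head counts (16, 12, 13 and 4) sum to 45; no head count is given for the Biology item, so none is derived.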
Original item modified:
• Greater contextualisation
• Simplification of instruction
• 2 structured parts: A & B
• Visual clues to support information retrieval
• Rephrasing of the item
• Altering item layout

Results
• Original item
  – Only 32.6% of students wrote an answer
  – Just under 11% gave a partially correct or correct answer
• Modified item
  – 100% responded to this item
  – 42% gave a partially correct answer to Part A
  – 53% gave a partially correct answer to Part B

Nzige (locust): changes in student response
(OR Sc = score on the original item; MOD Score = score on the modified item; comments by Neil Ingram, biologist)

| Student | OR Sc | Written response on modified item | MOD Score | Comment |
|---|---|---|---|---|
| H3 | 0 | “In picture A the locust may/will die I think it will/may dies because the locust get its breathing by using its body” | 2+2 | Understands that locusts breathe using the body |
| C2 | 1 | “I think it will/may die because the boy is dipping the locust in the water to all the bodies with its trachea that used to respiration as a respiratory surface of a locust” | 2+2 | Getting very close to a very complex answer: “I think a very able pupil indeed”. The right answer, but with great difficulty in expressing this in English |
| G2 | 0 | “I think its because of its body covered (immersed) completely in the water, and its terrestrial not an aquatic. It can’” | 2+1 | Test taker locates a locust as not naturally aquatic and therefore unlikely to survive if immersed in water. Evidence of a learner using everyday (informal) knowledge beyond the curriculum to answer the question, but this level of insight would not be recognised in the original scoring |

Means of Form II Exam Results (MoEVT)

| Year | KISW | Islamic studies | BIO | CHEM | MATH | PHYS |
|---|---|---|---|---|---|---|
| 2004 | 49 | 37.1 | 18.4 | 30.8 | 21.1 | 25.8 |
| 2005 | 46 | 44.3 | 22.6 | 34.2 | 15.3 | 25.4 |
| 2006 | 36 | 47.9 | 21.5 | 34.3 | 15.5 | 25.1 |
| 2007 | 35.5 | 44.5 | 21.5 | 32.0 | 14.3 | 23.9 |
| 2008 | 50.6 | 47.5 | 24.5 | 34.8 | 16.4 | 29.1 |

ENG and MATH
• Cons model: Total = 90.4, school = 30.75%, pupil = 69.25%
• Model with ENGLISH: Total = 64.717, school = 29.18%, pupil = 70.82%
• ENGLISH explains (90.4-64.717)/90.4 = 28.41% of the total MATH variance

ENG and BIO
• Cons model: Total = 114.968, school = 18.79%, pupil = 81.21%
• Model with ENGLISH: Total = 65.646, school = 18.17%, pupil = 81.83%
• ENGLISH alone explains (114.968-65.646)/114.968 = 42.90% of the total variance in BIOLOGY

ENG and CHEM
• Cons model: Total = 275.993, school = 22.05%, pupil = 77.95%
• Model with ENGLISH: Total = 158.281, school = 23.06%, pupil = 76.94%
• ENGLISH explains (275.993-158.281)/275.993 = 42.65% of the total CHEM variance

How about KISWAHILI & ARABIC?
• Although the other two languages (Kiswahili and Arabic) are also significant predictors of students’ performance in maths, biology and chemistry, they explain less of the variance than ENGLISH does.
• KISWAHILI explains (275.993-188.954)/275.993 = 31.54% of the total CHEM variance, (114.968-76.585)/114.968 = 33.39% of the total BIO variance, and (90.4-75.678)/90.4 = 16.29% of the total MATH variance.
• ARABIC explains (275.993-203.452)/275.993 = 26.28% of the total CHEM variance, (114.968-88.569)/114.968 = 22.96% of the total BIO variance, and (90.4-71.885)/90.4 = 20.48% of the total MATH variance.

Summary of the multilevel models (a)
• ENGLISH is clearly a significant and substantial predictor of students’ performance in MATH, BIO and CHEM.
• The school-level variances in the cons models, as well as in the models including ENGLISH as the single explanatory variable, show that a substantial proportion of the variance is attributable to school factors.

Summary of the multilevel models (b)
• There is little improvement in model fit (measured by the change in the school-level percentage of the total variance).
• It is therefore essential to collect further school- and pupil-level data to examine which factors (e.g. English language learning opportunities at home and at school, academic English proficiency) account for the variance, and by how much (in the tradition of school effectiveness studies). This is the aim of our planned nationwide data collection using pupil and headteacher questionnaires and a vocabulary knowledge test.

High stakes classroom assessment: the realities
Impact/Potential Disadvantage and Consequences/Injustice:
• Teachers do not use the full range of LTA procedures and processes
• Use of inappropriately constructed assessment frameworks
• Inaccurate coursework assessment (CWA) of learners
• CWA implemented as a series of tests
• Learners not fully supported in their language and content knowledge development, and failing to reach their potential
• Test performance valued over learning
• Learners leave school with poor educational outcomes
• Inadequate database for decision-making about students and learning progression

Using English in high stakes examinations (SSA, UK, TIMSS, PISA): the realities
Impact/Potential Disadvantage and Consequences/Injustice (examples):
• Learners do not engage with, or respond poorly in, examinations
• Learners are not given a fair chance to show their abilities
• The subject-area construct (e.g. biology, maths) can only be assessed once the linguistic construct has been successfully negotiated
• Loss of self-esteem and motivation for learning
• Learners do not achieve their potential (glass ceiling effect)
• Learners leave school as unsuccessful learners at the end of Basic Education (in some countries, the end of the Primary Phase)
• Unskilled individuals are unable to join the workforce, which in turn leads to social and economic deprivation
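Every "explains X% of the total variance" figure in the multilevel-model summaries uses the same formula: the proportional reduction in total (school + pupil) variance when one language score is added to the constant-only (cons) model, (total_cons - total_model) / total_cons. The minimal Python sketch below (dictionary and function names are hypothetical) recomputes each reported percentage from the variance totals on the slides. It redoes the arithmetic only; it does not fit the two-level models, which would need the pupil-level data and multilevel modelling software. One assumption is flagged in the code: the CHEM total for the KISWAHILI model is taken as 188.954, the value that reproduces the stated 31.54%.

```python
"""Proportional reduction in total variance for the pupil-within-school models."""

# Total variance in the constant-only ("cons") model for each subject.
NULL_TOTAL = {"MATH": 90.4, "BIO": 114.968, "CHEM": 275.993}

# Total variance once a single language score is added as the explanatory variable.
MODEL_TOTAL = {
    "ENGLISH": {"MATH": 64.717, "BIO": 65.646, "CHEM": 158.281},
    # ASSUMPTION: 188.954 is the CHEM value consistent with the reported 31.54%.
    "KISWAHILI": {"MATH": 75.678, "BIO": 76.585, "CHEM": 188.954},
    "ARABIC": {"MATH": 71.885, "BIO": 88.569, "CHEM": 203.452},
}


def variance_explained(null_total: float, model_total: float) -> float:
    """Percentage of the cons-model total variance removed by adding one predictor."""
    return 100.0 * (null_total - model_total) / null_total


def explained_table() -> dict[str, dict[str, float]]:
    """Variance explained (%) by each language score in each subject, to 2 d.p."""
    return {
        lang: {
            subj: round(variance_explained(NULL_TOTAL[subj], total), 2)
            for subj, total in by_subject.items()
        }
        for lang, by_subject in MODEL_TOTAL.items()
    }


if __name__ == "__main__":
    table = explained_table()
    for lang, row in table.items():
        print(lang, row)
    # Which language score explains the most variance in each subject?
    for subj in NULL_TOTAL:
        best = max(table, key=lambda lang: table[lang][subj])
        print(subj, "->", best)
```

The output matches the slides (for example 28.41%, 42.90% and 42.65% for ENGLISH in MATH, BIO and CHEM) and confirms that ENGLISH explains the largest share of the variance in all three subjects.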