PowerPoint slides

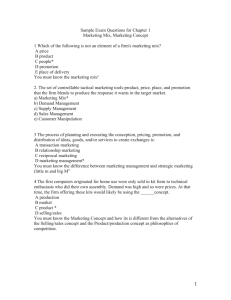

Extreme Metrics Analysis for Fun and Profit
Paul Below
February 20, 2003

Agenda
• Statistical Thinking
• Metrics Use: Reporting and Analysis
• Measuring Process Improvement
• Surveys and Sampling
• Organizational Measures

Agenda: Statistical Thinking
“Experiments should be reproducible. They should all fail in the same way.”

Statistical Thinking
• You already use it, at home and at work
• We generalize in everyday thinking
• Often, our generalizations or predictions are wrong

Uses for Statistics
• Summarize our experiences so others can understand
• Use information to make predictions or estimates
• Goal is to do this more precisely than we would in everyday conversation

Listen for Questions
• We are not used to using numbers in professional lives
  – “What does this mean?”
  – “What should we do with this?”
• We need to take advantage of our past experience

Statistical Thinking is more important than methods or technology
Analysis is iterative, not one shot
[Diagram: iterative learning cycle between Data and Model, alternating Induction (I) and Deduction (D)]
(Modification of Shewhart/Deming cycle by George Box, 2000 Deming lecture, Statistics for Discovery)

Agenda: Metrics Use: Reporting and Analysis
"It ain't so much the things we don't know that get us in trouble. It's the things we know that ain't so."
Artemus Ward, 19th Century American Humorist

Purpose of Metrics
• The purpose of metrics is to take action. All types of analysis and reporting have the same high-level goal: to provide information to people who will act upon that information and thereby benefit.
• Metrics offer a means to describe an activity in a quantitative form that would allow a knowledgeable person to make rational decisions. However,
  – Good statistical inference on bad data is no help.
  – Bad statistical analysis, even on the right variable, is still bad statistics.

Therefore…
• Metrics use requires implemented processes for:
  – metrics collection,
  – reporting requirements determination,
  – metrics analysis, and
  – metrics reporting.

Types of Metrics Use
“You go to your tailor for a suit of clothes and the first thing that he does is make some measurements; you go to your physician because you are ill and the first thing he does is make some measurements. The objects of making measurements in these two cases are different. They typify the two general objects of making measurements. They are: (a) To obtain quantitative information (b) To obtain a causal explanation of observed phenomena.”
Walter Shewhart

The Four Types of Analysis
1. Ad hoc: Answer specific questions, usually in a short time frame. Example: Sales support
2. Reporting: Generate predefined output (graphs, tables) and publish or disseminate to a defined audience, either on demand or on a regular schedule.
3. Analysis: Use statistics and statistical thinking to investigate questions and reach conclusions. The questions are usually analytical in nature (e.g., “Why?” or “How many will there be?”).
4. Data Mining: Data mining starts with data definition and cleansing, followed by automated knowledge extraction from historical data. Finally, analysis and expert review of the results are required.

Body of Knowledge (suggestions)
• Reporting
  – Database query languages, distributed databases, query tools, graphical techniques, OLAP, Six Sigma Green Belt (or Black Belt), Goal-Question-Metric
• Analysis
  – Statistics and statistical thinking, graphical techniques, database query languages, Six Sigma Black Belt, CSQE, CSQA
• Data Mining
  – Data mining, OLAP, data warehousing, statistics

Analysis Decision Tree
[Decision tree: Type of Question? Enumerative leads to "One Time?" (Yes: Ad hoc; No: Reporting). Analytical leads to "Factors Analyzed" (Few: Analysis; Many: Data Mining and Analysis).]

Extreme Programming

Extreme Analysis
• Short deadlines, small releases
• Overall high level purposes defined up front, prior to analysis start
• Specific questions prioritized prior to analysis start
• Iterative approach with frequent stakeholder reviews to obtain interim feedback and new direction
• Peer synergy: metrics analysts work in pairs
• Advanced query and analysis tools; saved work can be reused in future engagements
• Data warehousing techniques, combining data from multiple sources where possible
• Data cleansing done prior to analysis start (as much as possible)
• Collective ownership of the results

Extreme Analysis Tips
Produce clean graphs and tables displaying important information. These can be used by various people for multiple purposes. Explanations should be clear, and the organization should make it easy to find information of interest.
However, it takes too long to analyze everything -- we cannot expect to produce interpretations for every graph we produce. And even when we do, the results are superficial because we don't have time to dig into everything.
"Special analysis", where we focus on one topic at a time and study it in depth, is a good idea, both because we can complete it in a reasonable time and because the result should be something of use to the audience.
Therefore, ongoing feedback from the audience is crucial to obtaining useful results.

Agenda: Measuring Process Improvement
“Is there any way that the data can show improvement when things aren’t improving?” -Robert Grady

Measuring Process Improvement
• Analysis can determine if a perceived difference could be attributed to random variation
• Inferential techniques are commonly used in other fields; we have used them in software engineering for years
• This is an overview, not a training class

Expand our Set of Techniques
Metrics are used for:
• Benchmarking
• Process improvement
• Prediction and trend analysis
• Business decisions
• …all of which require confidence analysis!

Is This a Meaningful Difference?
[Chart: Relative Performance by CMM Maturity Level]

Pressure to Produce Results
• Why doesn’t the data show improvement?
• “Take another sample!”
• Good inference on bad data is no help
“If you torture the data long enough, it will confess.” -- Ronald Coase

Types of Studies
[Diagram: Anecdote, Case Study, Quasi-experimental, Experiment]
• Anecdote: “I heard it worked once”, cargo cult mentality
• Case Study: some internal validity
• Quasi-Experiment: can demonstrate external validity
• Experiment: can be repeated, needs to be carefully designed and controlled

Attributes of Experiments
[Diagram: Subject, Treatment, Reaction]
• Random Assignment
• Blocked and Unblocked
• Single Factor and Multi Factor
• Census or Sample
• Double Blind
• When you really have to prove causation (can be expensive)

Limitations of Retrospective Studies
• No pretest, we use previous data from similar past projects
• No random assignment possible
• No control group
• Cannot custom design metrics (have to use what you have)

Quasi-Experimental Designs
• There are many variations
• Common theme is to increase internal validity through
  reasonable comparisons between groups
• Useful when formal experiment is not possible
• Can address some limitations of retrospective studies

Causation in Absence of Experiment
• Strength and consistency of the association
• Temporal relationship
• Non-spuriousness
• Theoretical adequacy

What Should We Look For?
Are the Conclusions Warranted? Some information to accompany claims:
• measure of variation
• sample size
• confidence intervals
• data collection methods used
• sources
• analysis methods

Decision Without Analysis
• Conclusions may be wrong or misleading
• Observed effects tend to be unexplainable
• Statistics allows us to make honest, verifiable conclusions from data

Types of Confidence Analysis
[Diagram: Variables, with branches Quantitative and Categorical; techniques shown: Correlation, Two-Way Tables]

Two Techniques We Use Frequently
• Inference for difference between two means
  – Works for quantitative variables
  – Compute confidence interval for the difference between the means
• Inference for two-way tables
  – Works for categorical variables
  – Compare actual and expected counts

Quantitative Variables
Comparison of means of quartiles 2 and 4 yields p value of 88.2% (not a significant difference at 95% level)
[Chart: Project Productivity, ISBSG release 6; AFP per hour by Quartiles of Project Size (1 to 4); N = 119, 120, 120, 119]

Categorical Variables
P value is approximately 50%
[Two-way table: Effort Variance (Met, Not Met) by Low PM, Medium PM, High PM; cell counts in extraction order: 3, 9, 6, 10, 9, 7]

Categorical Variables
P value is greater than 99.9%
[Two-way table: Date Variance (Met, Not Met) by Low PM, Medium PM, High PM; cell counts in extraction order: 2, 10, 10, 6, 3, 13]

Expressing the Results “in English”
• “We are 95% certain that the difference in average productivity for these two project types is between 11 and 21 FP/PM.”
• “Some project types have a greater likelihood of cancellation than other types, we would be
  unlikely to see these results by chance.”

What if...
• Current data is insufficient
• Experiment cannot be done
• Direct observation or 100% collection cannot be done
• or, lower level information is needed?

Agenda: Surveys and Samples
In a scientific survey every person in the population has some known positive probability of being selected.

What is a Survey?
• A way to gather information about a population from a sample of that population
• Varying purposes
• Different ways:
  – telephone
  – mail
  – internet
  – in person

What is a Sample?
• Representative fraction of the population
• Random selection
• Can reliably project to the larger population

What is a Margin of Error?
• An estimate from a survey is unlikely to exactly equal the quantity of interest
• Sampling error means results differ from a target population due to “luck of the draw”
• Margin of error depends on sample size and sample design

What Makes a Sample Unrepresentative?
• Subjective or arbitrary selection
• Respondents are volunteers
• Questionable intent

How Large Should the Sample Be?
• What do you want to learn?
• How reliable must the result be?
  – Size of population is not important
  – 1500 people is reliable enough for entire U.S.
• How large CAN it be?

“Dewey Defeats Truman”
• Prominent example of a poorly conceived survey
• 1948 pre-election poll
• Main flaw: non-representative sample
• 2000 election: methods not modified to new situation

Is Flawed Sample the Only Type of Problem That Happens?
• Non-response
• Measurement difficulties
• Design problems, leading questions
• Analysis problems

Some Remedies
• Stratify sample
• Adjust for incomplete coverage
• Maximize response rate
• Test questions for
  – clarity
  – objectivity
• Train interviewers

Agenda: Organizational Measures
“Whether measurement is intended to motivate or to provide information, or both, turns out to be very important.” -- Robert Austin

Dysfunctional Measures
• Disconnect between measure and goal
  – Can one get worse while the other gets better?
• Is one measure used for two incompatible goals?
• The two general types of measurement are...

Measurement in Organizations
• Motivational Measurements
  – intended to affect the people being measured, to provoke greater expenditure of effort in pursuit of org’s goals
• Informational Measurements
  – logistical, status, or research information; provide insight to support short term management and long term improvement

Informational Measurements
• Process Refinement Measurements
  – reveal detailed structure of processes
• Coordination Measurements
  – logistical purpose

Mixed Measurements
The desire to be viewed favorably provides an incentive for people being measured to tailor, supplement, repackage, or censor information that flows upward.
• “Dashboard” concept is incomplete
• We have Gremlins

The Right Kind of Culture
Internal or external motivation?
• Ask yourself what is driving the people around you to do a good job:
  – Do they identify with the organization and fellow team members? (Work hard to avoid letting coworkers down)
  – Are they only focused on the next performance review and getting a big raise?

Why is this important?
• Each of us makes dozens of small decisions each day
  – Motivational measures influence us
  – These small decisions add up to large impacts
• Are these decisions aligned with the organization’s goals?

Conclusion: It Has Been Done
• There are organizations in which people have given themselves completely to pursuit of organizational goals
• These people want measurements as a tool that helps get the job done
• If this is your organization, fight hard to keep it

A Few Selected Resources:
• Measuring and Managing Performance in Organizations, Robert D. Austin, 1996.
• Schaum’s Outlines: Business Statistics, Leonard J. Kazmier, 1996.
• International Software Benchmarking Standards Group, http://www.isbsg.org.au
• American Statistical Association, http://www.amstat.org/education/Curriculum_Guidelines.html
• Graphical techniques books by E. Tufte
• Contact a statistician for help

eds.com
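
Worked sketch (not part of the original slides): the deck names two inference techniques, a confidence interval for the difference between two means and a comparison of actual and expected counts in a two-way table, and it discusses margin of error for samples. The Python below is one minimal way to compute each using only the standard library. All function names and sample numbers are hypothetical illustrations and are not taken from the presentation. The interval and the margin of error use a normal approximation, which assumes fairly large random samples, and the p-value helper is valid only for 2 degrees of freedom (for example a 2 x 3 table).

```python
import math
from statistics import NormalDist, mean, variance


def diff_of_means_ci(a, b, confidence=0.95):
    """Approximate confidence interval for mean(a) - mean(b).

    Assumption: samples are large enough for a normal (z) critical value.
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = mean(a) - mean(b)
    # Standard error of the difference, using each sample's own variance.
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    return diff - z * se, diff + z * se


def chi_square(table):
    """Return (statistic, degrees_of_freedom, expected_counts) for a two-way table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    # Expected count under independence: row total * column total / grand total.
    expected = [[r * c / total for c in col_totals] for r in row_totals]
    stat = sum(
        (obs - exp) ** 2 / exp
        for obs_row, exp_row in zip(table, expected)
        for obs, exp in zip(obs_row, exp_row)
    )
    dof = (len(table) - 1) * (len(col_totals) - 1)
    return stat, dof, expected


def chi_square_p_value_df2(stat):
    """Upper-tail probability for a chi-square statistic with exactly 2 degrees of freedom."""
    return math.exp(-stat / 2)


def margin_of_error(n, p=0.5, confidence=0.95):
    """Margin of error for a proportion from a simple random sample of size n."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return z * math.sqrt(p * (1 - p) / n)


if __name__ == "__main__":
    # Hypothetical productivity figures for two project types.
    type_a = [31, 28, 35, 40, 33, 29, 36, 38]
    type_b = [22, 19, 25, 27, 18, 24, 21, 20]
    low, high = diff_of_means_ci(type_a, type_b)
    print(f"95% CI for difference in means: {low:.1f} to {high:.1f}")

    # Hypothetical 2 x 3 table: rows are Met / Not Met, columns are three groups.
    counts = [[12, 8, 5], [6, 10, 15]]
    stat, dof, _ = chi_square(counts)
    print(f"chi-square = {stat:.2f}, df = {dof}, p = {chi_square_p_value_df2(stat):.3f}")

    print(f"margin of error for n = 1500: {margin_of_error(1500):.3f}")
```

The margin-of-error formula contains no population-size term, which is one way to read the slide's point that the size of the population is not important once the sample is random and reasonably large.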