Progress in new computer science research directions

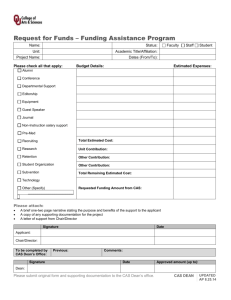

Progress in New Computer Science Research Directions
John Hopcroft
Cornell University, Ithaca, New York
CAS, May 21, 2010

Time of change
The information age is undergoing a fundamental revolution that is changing all aspects of our lives. Those individuals and nations who recognize this change and position themselves for the future will benefit enormously.

Drivers of change
• Merging of computing and communications
• Data available in digital form
• Networked devices and sensors
• Computers becoming ubiquitous

Internet search engines are changing
• When was Einstein born?
Einstein was born at Ulm, in Württemberg, Germany, on March 14, 1879.
List of relevant web pages

Internet queries will be different
• Which car should I buy?
• What are the key papers in Theoretical Computer Science?
• Construct an annotated bibliography on graph theory.
• Where should I go to college?
• How did the field of CS develop?

Which car should I buy?
• Search engine response: Which criteria below are important to you?
– Fuel economy
– Crash safety
– Reliability
– Performance
– Etc.

| Make | Cost | Reliability | Fuel economy | Crash safety |
|---|---|---|---|---|
| Toyota Prius | 23,780 | Excellent | 44 mpg | Fair |
| Honda Accord | 28,695 | Better | 26 mpg | Excellent |
| Toyota Camry | 29,839 | Average | 24 mpg | Good |
| Lexus 350 | 38,615 | Excellent | 23 mpg | Good |
| Infiniti M35 | 47,650 | Excellent | 19 mpg | Good |

Links to photos/articles

2010 Toyota Camry - Auto Shows
Toyota sneaks the new Camry into the Detroit Auto Show.
Usually, redesigns and facelifts of cars as significant as the hot-selling Toyota Camry are accompanied by a commensurate amount of fanfare. So we were surprised when, right about the time that we were walking by the Toyota booth, a chirp of our Blackberries brought us the press release announcing that the facelifted 2010 Toyota Camry and Camry Hybrid mid-sized sedans were appearing at the 2009 NAIAS in Detroit.
We’d have hardly noticed if they hadn’t told us: the headlamps are slightly larger, the grilles on the gas and hybrid models go their own way, and taillamps become primarily LED. Wheels are also new, but overall, the resemblance to the Corolla is downright uncanny. Let’s hear it for brand consistency!
Four-cylinder Camrys get Toyota’s new 2.5-liter four-cylinder with a boost in horsepower to 169 for LE and XLE grades, 179 for the Camry SE, all of which are available with six-speed manual or automatic transmissions. Camry V-6 and Hybrid models are relatively unchanged under the skin.
Inside, changes are likewise minimal: the options list has been shaken up a bit, but the only visible change on any Camry model is the Hybrid’s new gauge cluster and softer seat fabrics. Pricing will be announced closer to the time it goes on sale this March.
Toyota Camry: Overview, Specifications, Price with Options, Get a Free Quote
News & Reviews: 2010 Toyota Camry - Auto Shows
Top Competitors: Chevrolet Malibu, Ford Fusion, Honda Accord sedan

Which are the key papers in Theoretical Computer Science?
• Hartmanis and Stearns, “On the computational complexity of algorithms”
• Blum, “A machine-independent theory of the complexity of recursive functions”
• Cook, “The complexity of theorem proving procedures”
• Karp, “Reducibility among combinatorial problems”
• Garey and Johnson, “Computers and Intractability: A Guide to the Theory of NP-Completeness”
• Yao, “Theory and Applications of Trapdoor Functions”
• Shafi Goldwasser, Silvio Micali, Charles Rackoff, “The Knowledge Complexity of Interactive Proof Systems”
• Sanjeev Arora, Carsten Lund, Rajeev Motwani, Madhu Sudan, and Mario Szegedy, “Proof Verification and the Hardness of Approximation Problems”

Temporal Cluster Histograms: NIPS Results
[Figure: histogram of NIPS k-means clusters (k=13); x-axis: Year (1 to 14), y-axis: Number of Papers (0 to 180)]
Cluster keywords:
12: chip, circuit, analog, voltage, vlsi
11: kernel, margin, svm, vc, xi
10: bayesian, mixture, posterior, likelihood, em
9: spike, spikes, firing, neuron, neurons
8: neurons, neuron, synaptic, memory, firing
7: david, michael, john, richard, chair
6: policy, reinforcement, action, state, agent
5: visual, eye, cells, motion, orientation
4: units, node, training, nodes, tree
3: code, codes, decoding, message, hints
2: image, images, object, face, video
1: recurrent, hidden, training, units, error
0: speech, word, hmm, recognition, mlp
Caruana, Gehrke, and Thorsten Shaparenko

Fed Ex package tracking

[Figure: NEXRAD radar map, Binghamton; base reflectivity, 0.50 degree elevation, range 124 NMI]

Collective Inference on Markov Models for Modeling Bird Migration
[Figure: axes labeled Space and Time]
Daniel Sheldon, M. A. Saleh Elmohamed, Dexter Kozen

Science base to support activities
• Track flow of ideas in scientific literature
• Track evolution of communities in social networks
• Extract information from unstructured data sources

Tracking the flow of ideas in scientific literature
Yookyung Jo
[Figure: topic term clusters, including File, Retrieve, Index, Web, Probabilistic, Chord, Text, Usage, Page rank, Retrieval, Link, Word, Query, Graph, Centering, Search, Anaphora, Discourse]

[Figures: Original papers; Original papers cleaned up; Referenced papers; Referenced papers cleaned up]
Three distinct categories of papers

Topic evolution thread
• Seed topic:
– 648: making C programs type-safe by separating pointer types by their usage to prevent memory errors
• 3 subthreads:
– Type
– Garbage collection
– Pointer analysis
Yookyung Jo, 2010

Tracking communities in social networks
Liaoruo Wang

“Statistical Properties of Community Structure in Large Social and Information Networks”, Jure Leskovec, Kevin Lang, Anirban Dasgupta, Michael Mahoney
• Studied over 70 large sparse real-world networks.
• Best communities are of approximate size 100 to 150.

Our most striking finding is that in nearly every network dataset we examined, we observe tight but almost trivial communities at very small scales, and at larger size scales, the best possible communities gradually "blend in" with the rest of the network and thus become less "community-like."
[Figure: conductance plotted against size of community, with size 100 marked]

Giant component

Whisker: A component with v vertices connected by e v edges

Our view of a community
[Figure: "Me" at the center of overlapping groups: Colleagues at Cornell, Classmates, TCS, Family and friends]
More connections outside than inside

Core
Should we remove all whiskers and search for communities in the core?

Should we remove whiskers?
• Does there exist a core in social networks? Yes
• Experimentally it appears at p=1/n in the G(n,p) model
• Is the core unique? Yes and No
• In the G(n,p) model, should we require that a whisker have only a finite number of edges connecting it to the core?
Laura Wang

Algorithms
• How do you find the core?
• Are there communities in the core of social networks?

How do we find whiskers?
• NP-complete if graph has a whisker
• There exist graphs with whiskers for which neither the union of two whiskers nor the intersection of two whiskers is a whisker

Graph with no unique core
[Figures: two example graphs with no unique core]

• What is a community?
• How do you find them?

Communities
• Conductance
• Can we compress the graph? Rosvall and Bergstrom, “An information-theoretic framework for resolving community structure in complex networks”
• Hypothesis testing
Yookyung Jo

Description of graph with community structure
• Specify which vertices are in which communities.
• Specify the number of edges between each pair of communities.

Information necessary to specify graph given community structure
• $m$ = number of communities
• $n_i$ = number of vertices in the $i$th community
• $l_{ij}$ = number of edges between the $i$th and $j$th communities
• $H(Z) = \log\left[\prod_{i=1}^{m}\binom{n_i(n_i-1)/2}{l_{ii}}\prod_{i>j}\binom{n_i n_j}{l_{ij}}\right]$

Description of graph consists of description of community structure plus specification of graph given structure.
• Specify community for each edge and the number of edges between each community
• $\underbrace{n\log m + \tfrac{1}{2}m(m+1)\log l}_{\text{community structure}} + H(Z)$
• Can this method be used to specify more complex community structure where communities overlap?

Hypothesis testing
• Null hypothesis: All edges generated with some probability $p_0$
• Hypothesis: Edges in communities generated with probability $p_1$, other edges with probability $p_0$.

Clustering Social networks
Mishra, Schreiber, Stanton, and Tarjan
• Each member of the community is connected to a beta fraction of the community
• No member outside the community is connected to more than an alpha fraction of the community
• Some connectivity constraint

In sparse graphs
• How do you find alpha-beta communities?
• What if each person in the community is connected to more members outside the community than inside?

Structure of social networks
• Different networks may have quite different structure.
• An algorithm for finding alpha-beta communities in sparse graphs.
• How many communities of a given size are there in a social network?
• Results on exploring a Twitter network of approximately 100,000 nodes.
Liaoruo Wang, Jing He, Hongyu Liang

[Figure: 200 randomly chosen nodes → apply alpha-beta algorithm → resulting alpha-beta community]
How many alpha-beta communities?

Massively overlapping communities
• Are there a small number of massively overlapping communities that share a common core?
• Are there massively overlapping communities in which one can move from one community to a totally disjoint community?
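The alpha-beta community conditions listed above (each member connected to a beta fraction of the community, no outsider connected to more than an alpha fraction) can be checked directly for a candidate vertex set. The following is a minimal sketch, not the algorithm from the talk: the function name, the adjacency-dictionary representation, and the choice to measure fractions against the full community size without self-loops are all assumptions made for illustration, and the connectivity constraint is not checked.

```python
def is_alpha_beta_community(adj, community, alpha, beta):
    """Check the two fraction conditions of an (alpha, beta) community.

    adj       -- dict mapping each vertex to the set of its neighbours
                 (undirected graph, no self-loops; an assumed representation)
    community -- iterable of vertices forming the candidate community
    """
    members = set(community)
    size = len(members)
    if size == 0:
        return False
    for v, neighbours in adj.items():
        # Fraction of the community that v is connected to.
        frac = len(neighbours & members) / size
        if v in members and frac < beta:
            return False  # a member is too weakly tied to the community
        if v not in members and frac > alpha:
            return False  # an outsider is too strongly tied to the community
    return True


# Hypothetical toy graph: a triangle a-b-c with one extra vertex d attached to a.
toy_graph = {
    "a": {"b", "c", "d"},
    "b": {"a", "c"},
    "c": {"a", "b"},
    "d": {"a"},
}
```

In the toy graph every member of {a, b, c} reaches 2/3 of the community and the outsider d reaches 1/3, so the set passes for alpha = 0.4, beta = 0.6 and fails once alpha drops below 1/3. This only verifies a given set; finding such sets in sparse graphs is the open algorithmic question the slides raise.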
[Figures: massively overlapping communities with a common core; massively overlapping communities]

• Define the core of a set of overlapping communities to be the intersection of the communities.
• There are a small number of cores in the Twitter data set.

| Size of initial set | Number of cores |
|---|---|
| 25 | 221 |
| 50 | 94 |
| 100 | 19 |
| 150 | 8 |
| 200 | 4 |
| 250 | 4 |
| 300 | 4 |
| 350 | 3 |
| 400 | 3 |
| 450 | 3 |

[Figure: labeled cores at each initial set size from 150 to 450, showing which cores merge and which vanish as the size grows]

• What is the graph structure that causes certain cores to merge and others to simply vanish?
• What is the structure of cores as they get larger? Do they consist of concentric layers that are less dense at the outside?

Transmission paths for viruses, flow of ideas, or influence
Sucheta Soundarajan

Trivial model
[Figure: half of contacts; two thirds of contacts]
[Figure: time of first item versus time of second item]

Theory to support new directions
• Random graphs
• High dimensions and dimension reduction
• Spectral analysis
• Massive data sets and streams
• Collaborative filtering
• Extracting signal from noise
• Learning and VC dimension

Science base
• What do we mean by science base? Example: High dimensions

High dimension is fundamentally different from 2 or 3 dimensional space

High dimensional data is inherently unstable
• Given $n$ random points in $d$ dimensional space, essentially all $n^2$ distances are equal.
• $|x-y|^2 = \sum_{i=1}^{d}(x_i-y_i)^2$

High Dimensions
• Intuition from two and three dimensions is not valid for high dimensions
• Volume of cube is one in all dimensions
• Volume of sphere goes to zero

Gaussian distribution
[Figure: probability mass concentrated between dotted lines]

Gaussian in high dimensions
[Figure: annulus of radius $\sqrt{d}$]

Two Gaussians
[Figure: two annuli of radius $\sqrt{d}$]

Distance between two random points from same Gaussian
• Points on thin annulus of radius $\sqrt{d}$
• Approximate by sphere of radius $\sqrt{d}$
• Average distance between two points is $\sqrt{2d}$ (Place one point at the North Pole and the other at random. Almost surely the second point is near the equator.)
[Figure: right triangle with legs $\sqrt{d}$, $\sqrt{d}$ and hypotenuse $\sqrt{2d}$]

Expected distance between points from two Gaussians separated by $\delta$
$\sqrt{\delta^2 + 2d}$

Can separate points from two Gaussians if
$\sqrt{\delta^2 + 2d} > \sqrt{2d} + \gamma$
$\sqrt{2d}\left(1 + \tfrac{1}{2}\tfrac{\delta^2}{2d}\right) > \sqrt{2d} + \gamma$
$\tfrac{1}{2}\tfrac{\delta^2}{\sqrt{2d}} > \gamma$
$\delta > \sqrt{2\gamma}\,(2d)^{1/4}$

Dimension reduction
• Project points onto subspace containing centers of Gaussians
• Reduce dimension from $d$ to $k$, the number of Gaussians
• Centers retain separation
• Average distance between points reduced by a factor of $\sqrt{d/k}$: $(x_1, x_2, \ldots, x_d) \to (x_1, x_2, \ldots, x_k, 0, \ldots, 0)$

Can separate Gaussians provided
$\sqrt{\delta^2 + 2k} - \sqrt{2k}$ > some constant involving $k$ and $\gamma$, independent of the dimension

Finding centers of Gaussians
[Figure: data matrix with rows $a_1, a_2, \ldots, a_n$ and first singular vector $v_1$]
First singular vector $v_1$ minimizes the perpendicular distance to points

Gaussian
The first singular vector goes through the center of the Gaussian and minimizes distance to the points.

Best k-dimensional space for a Gaussian is any space containing the line through the center of the Gaussian.
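The high-dimensional claims above (points from a unit-variance Gaussian lie near radius $\sqrt{d}$, and any two of them are about $\sqrt{2d}$ apart) can be checked numerically. The following is a small sketch using only the Python standard library; the function names, the default dimension of 1000, and the sample size of 20 are illustrative assumptions, not values from the talk.

```python
import math
import random


def sample_gaussian(d, rng):
    """Draw one point from a d-dimensional Gaussian with unit variance per coordinate."""
    return [rng.gauss(0.0, 1.0) for _ in range(d)]


def distance(x, y):
    """Euclidean distance |x - y| = sqrt(sum_i (x_i - y_i)^2)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def concentration_demo(d=1000, n=20, seed=0):
    """Return (min, max) of |x|/sqrt(d) and (min, max) of |x-y|/sqrt(2d) over n samples.

    If distances concentrate as claimed, all four ratios are close to 1.
    """
    rng = random.Random(seed)
    points = [sample_gaussian(d, rng) for _ in range(n)]
    origin = [0.0] * d
    radii = [distance(p, origin) / math.sqrt(d) for p in points]
    pairwise = [
        distance(points[i], points[j]) / math.sqrt(2 * d)
        for i in range(n)
        for j in range(i + 1, n)
    ]
    return min(radii), max(radii), min(pairwise), max(pairwise)
```

Calling `concentration_demo()` and then repeating with a small `d` such as 2 shows the contrast: in low dimensions the ratios spread widely, while in high dimensions they cluster tightly around 1, which is the sense in which "essentially all distances are equal."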
Given $k$ Gaussians, the top $k$ singular vectors define a $k$ dimensional space that contains the $k$ lines through the centers of the $k$ Gaussians and hence contains the centers of the Gaussians.

Johnson-Lindenstrauss
• A random projection of $n$ points to $\log n$ dimensional space approximately preserves all pairwise distances with high probability.
• Clearly one cannot project $n$ points to a line and approximately preserve all pairwise distances.

• We have just seen what a science base for high dimensional data might look like.
• What other areas do we need to develop a science base for?

Extracting information from large data sources
• Communities in social networks
• Tracking flow of ideas
• Ranking is important
– Restaurants, movies, books, web pages
– Multi-billion dollar industry
• Collaborative filtering
– When a customer buys a product, what else is he likely to buy?
• Dimension reduction

Sparse vectors
There are a number of situations where sparse vectors are important
• Tracking the flow of ideas in scientific literature
• Biological applications
• Signal processing

Sparse vectors in biology
[Figure: plants]
• Phenotype: observables, outward manifestation
• Genotype: internal code

Signal processing
Reconstruction of sparse vector
[Figure: $y = Ax$, where $A$ is a random orthonormal matrix or the Fourier transform and $x$ is a sparse vector such as $(0, 0, 0, 13, 0, 9, 0, 0, 0)$]

$y = Ax$, with $A$ an orthonormal matrix, so $x = A^T y$
If $x$ is a sparse vector, one can recover $x$ from a few random samples of $y$.
Let $\hat{y}$ be the random samples of $y$. The set of $x$ consistent with $\hat{y}$ is an affine space $V$, and
$x = \arg\min_{x \in V} \|x\|_1$

• A vector and its Fourier transform cannot both be sparse.

Lemma: Let $n_x$ be the number of nonzero elements of $x$ and let $n_y$ be the number of nonzero elements of $y$. Then $n_x n_y \ge n$.
Proof: Let $x$ have $s$ nonzero elements. Let $\hat{Z}$ be the submatrix of $Z$ consisting of the columns corresponding to nonzero elements of $x$ and $s$ consecutive rows of $Z$.
$\hat{Z}$ is nonsingular.

$\hat{Z}$ is nonsingular
$\hat{Z} = \begin{pmatrix} u & v & w \\ au & bv & cw \\ a^2u & b^2v & c^2w \end{pmatrix}$
Normalize each column to obtain the Vandermonde matrix
$\begin{pmatrix} 1 & 1 & 1 \\ a & b & c \\ a^2 & b^2 & c^2 \end{pmatrix}$

Lemma: The Vandermonde matrix is nonsingular.
Proof: Consider
$\begin{pmatrix} 1 & a & a^2 \\ 1 & b & b^2 \\ 1 & c & c^2 \end{pmatrix}$
Let $a = (a_1, a_2, \ldots, a_n)$ and $b = (b_1, b_2, \ldots, b_n)$.
$\begin{pmatrix} a_1 \\ a_2 \\ \vdots \\ a_n \end{pmatrix} = \begin{pmatrix} 1 & b_1 & b_1^2 & \cdots \\ 1 & b_2 & b_2^2 & \cdots \\ \vdots & & & \\ 1 & b_n & b_n^2 & \cdots \end{pmatrix} \begin{pmatrix} c_0 \\ c_1 \\ \vdots \\ c_{n-1} \end{pmatrix}$
• One can construct a polynomial of degree $n-1$ through any $n$ points, so this system always has a solution.

A vector cannot have two sparse representations in a composite basis
$x = \text{coef}_1 + \text{Fourier}_1 = \text{coef}_2 + \text{Fourier}_2$
$(\text{coef}_1 - \text{coef}_2) + (\text{Fourier}_1 - \text{Fourier}_2) = 0$
$\text{coef}_1 - \text{coef}_2 = \text{Fourier}_2 - \text{Fourier}_1$
The two sides play the roles of $y$ and $Fy$: a vector and its Fourier transform, which cannot both be sparse.

Data streams
• Record the WWW on your laptop
• Census data
• Find the number of distinct elements in a sequence.
• Find all frequently occurring elements in a sequence.

Multiplication of two very large sparse matrices
$C = AB$, with $A$ and $B$ large sparse matrices
• Sample rows of A and columns of B.
• What if most rows of A are very light and a few are very heavy?
• Sample rows with probability proportional to square of weight.

Random graphs: the emergence of the giant component in the G(n,p) model
To compute the size of a connected component of G(n,p), do a search of a component starting from a random vertex and generate an edge only when the search process needs to know if the edge exists.

Algorithm
• Mark start vertex discovered.
• Select an unexplored vertex from the set of discovered vertices, add all vertices that are connected to this vertex to the set of discovered vertices, and mark the vertex as explored.
• Discovered but unexplored vertices are called the frontier.
• Process terminates when frontier becomes empty.

Let $z_i$ be the number of vertices discovered in the first $i$ steps of the search.
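The search procedure above, in which an edge is generated only at the moment the search asks about it, can be sketched as follows. This is an illustrative reading of the slide rather than the author's code: the function name, the parameterization $p = d/n$, and the choice of vertex 0 as the start vertex are assumptions.

```python
import random


def component_size(n, d, seed=0):
    """Size of the component containing vertex 0 in G(n, p) with p = d / n.

    Edges are never stored. When a vertex is explored, each still-undiscovered
    vertex is joined to it with probability p, which is the only time that
    edge is ever examined.
    """
    rng = random.Random(seed)
    p = d / n
    undiscovered = set(range(1, n))
    frontier = [0]   # discovered but unexplored vertices
    discovered = 1   # the start vertex counts as discovered
    while frontier:
        frontier.pop()  # explore one frontier vertex
        # Generate the edges from the explored vertex to undiscovered vertices.
        newly = [u for u in undiscovered if rng.random() < p]
        undiscovered.difference_update(newly)
        frontier.extend(newly)
        discovered += len(newly)
    return discovered
```

Running this for several seeds with $d < 1$ gives only small components, while for $d > 1$ a constant fraction of runs return a component containing a large fraction of the $n$ vertices, which is the giant component the slides refer to.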
The distribution of $z_i$ is binomial: $z_i \sim \text{Binomial}\left(n-1,\ 1-\left(1-\tfrac{d}{n}\right)^i\right)$
To see this, observe:
• Probability that a given vertex is not adjacent to the vertex being searched in a given step is $1 - \tfrac{d}{n}$
• Probability that it is not discovered in $i$ steps is $\left(1 - \tfrac{d}{n}\right)^i$
• Probability that it is discovered in $i$ steps is $1 - \left(1 - \tfrac{d}{n}\right)^i \approx 1 - e^{-di/n}$

Let $s$ be the expected size of the discovered set of vertices.
$s = E(z_i) = n\left(1 - e^{-di/n}\right)$
At each time unit a vertex is marked as explored. Thus the expected size of the frontier is
$s - i = n\left(1 - e^{-di/n}\right) - i$
Normalized size of frontier:
$\frac{s-i}{n} = 1 - e^{-di/n} - \frac{i}{n}$
Replace $\tfrac{i}{n}$ by $x$, the normalized time. The normalized size of the frontier is
$f(x) = 1 - e^{-dx} - x$
Search process stops when frontier becomes empty.

This argument used average values. Can we make it rigorous?
[Figure: plot of $f(x) = 1 - e^{-dx} - x$ for $x$ from 0 to 1]

Belief propagation
Graphical model or Markov random field on variables $x_1, x_2, x_3$
$p(x_1, x_2, x_3) = \frac{1}{Z}\prod_{ij}\psi(x_i, x_j)$
Marginal: $p(x_1) = \sum_{x_2, x_3} p(x_1, x_2, x_3)$
MAP: $\arg\max p(x_1, x_2, x_3)$

Factor graph
[Figure: factor graph on variables $x_1, x_2, x_3, x_4, x_5$]

If factor graph is a tree
• Send messages up the tree
• Computes correct marginals

If the factor graph is not a tree, then we could use the message passing algorithm anyway.
• No guarantee of convergence
• No guarantee of correct marginals even if the algorithm converges
[Figure: grid of + spins with one unknown spin, weighted by $e^{\beta \sum x_i x_j}$, where $\beta$ is the inverse temperature]
Important issue: correlation of variables

• High temperature: $p(x=1) = 1/2$
• Low temperature: $p(x=1) = 1$
Phase transition in correlation between spin at leaves and spin at root.
[Figures: $q$ plotted against $p$ at low temperature, at the phase transition, and at high temperature]

At the phase transition $\frac{\partial q}{\partial p} = 1$ and we can solve for $\beta$:
$\beta = \frac{1}{2}\ln\frac{d+1}{d-1}$

Learning theory and VC-dimension
[Figure: census data, 250 million data points plotted as salary versus age]
Theorem: If shape has finite VC-dimension, an estimate based on a random sample will not deviate from true value by much.
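The theorem above can be illustrated with the salary-versus-age picture: the fraction of records falling inside an axis-aligned rectangle (a shape of finite VC-dimension) is estimated from a random sample instead of the full data set. This is a sketch under stated assumptions: the function names are hypothetical, and the population built in the usage lines is synthetic uniform data invented for the illustration, not census data.

```python
import random


def fraction_in_rectangle(points, age_range, salary_range):
    """Fraction of (age, salary) points inside an axis-aligned rectangle."""
    lo_age, hi_age = age_range
    lo_sal, hi_sal = salary_range
    inside = sum(
        1 for age, salary in points
        if lo_age <= age <= hi_age and lo_sal <= salary <= hi_sal
    )
    return inside / len(points)


def sample_estimate(points, k, age_range, salary_range, seed=0):
    """Estimate the same fraction from k points drawn without replacement."""
    rng = random.Random(seed)
    sample = rng.sample(points, k)
    return fraction_in_rectangle(sample, age_range, salary_range)


# Synthetic stand-in population (assumption: uniform ages and salaries).
_rng = random.Random(1)
population = [(_rng.uniform(18, 90), _rng.uniform(0, 200000)) for _ in range(20000)]
true_fraction = fraction_in_rectangle(population, (30, 50), (40000, 120000))
estimate = sample_estimate(population, 2000, (30, 50), (40000, 120000), seed=2)
```

Comparing `true_fraction` with `estimate` shows the sample answer tracking the full-data answer closely. The force of the theorem is that, for shapes of finite VC-dimension, one sample works simultaneously for every such rectangle, not just the one queried here.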
Discrepancy theory

Conclusions
• We are in an exciting time of change.
• Information technology is a big driver of that change.
• The computer science theory needs to be developed to support this information age.