BEAR: Architecting Gigascale DRAM caches

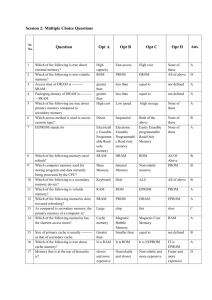

BEAR: MITIGATING BANDWIDTH BLOAT IN GIGASCALE DRAM CACHES
ISCA 2015, Portland, OR, June 15, 2015
Chiachen Chou, Georgia Tech
Aamer Jaleel, NVIDIA*
Moinuddin K. Qureshi, Georgia Tech

3D DRAM HELPS MITIGATE BANDWIDTH WALL
• 3D DRAM: Hybrid Memory Cube (HMC), High Bandwidth Memory (HBM)
• Products: Intel Xeon Phi, NVIDIA Pascal
• Stacked DRAM provides 4-8X bandwidth, but has limited capacity
(courtesy: Micron, JEDEC, Intel, NVIDIA)

3D DRAM IS USED AS A CACHE (DRAM CACHE)
• Memory hierarchy, fast to slow: CPU, L1$, L2$, L3$, DRAM Cache, Off-chip DRAM
• 1GB 3D DRAM$: 16M cache lines, 4B tags, 64MB tag storage
• DRAM$ stores tags in 3D DRAM for scalability

CAN DRAM CACHE PROVIDE 4X BANDWIDTH?
• DRAM Cache (Tags + Data): 4X bandwidth; Memory: 1X
• ✔ Hit (Good Use of BW)
• ✘ Miss Detection, ✘ Miss Fill, ✘ Writeback Detection, ✘ Writeback Fill: Secondary Operations (Waste BW)
• DRAM$ does not utilize full bandwidth

AGENDA
• Introduction
• Background
  – DRAM Cache Designs
  – Secondary Operations
  – Bloat Factor
• BEAR
• Results
• Summary

DRAM CACHE HAS NARROW BUS
• Alloy Cache: 2KB row buffer, 8B tag + 64B data per line, 16-byte buses to the CPU
• DRAM$ accesses tag and data via a narrow bus [Qureshi and Loh MICRO’12]

CACHE REQUIRES MAINTENANCE OPERATIONS
• Useful: Hit (HIT), an L3$ request for Line X that hits in the DRAM Cache
• Secondary: Miss Detection (MD), Miss Fill (MF), WB Detection (WD), WB Fill (WF)
• A miss on Line X needs Miss Detection, then Miss Fill from memory; a dirty Line Y from the L3$ needs WB Detection and WB Fill
• DRAM$ bandwidth is used for secondary operations

QUANTIFYING THE BANDWIDTH USAGE

$$\text{Bloat Factor} = \frac{\sum \text{Total Bytes Transferred}}{\sum \text{Useful Bytes Transferred}}$$

• Example: a bus carrying HIT, WF, WD, HIT, MF, MD, HIT makes 7 transfers for 3 useful hits, a Bloat Factor of 7/3; a bus carrying only HIT, HIT, HIT has a Bloat Factor of 3/3 = 1
• Bloat Factor indicates the bandwidth inefficiency

BLOAT FACTOR BREAKDOWN
• 8-core, 8MB shared L3$, 1GB DRAM$, 16GB memory
• SPEC2006: 16 rate and 38 mix workloads
• Baseline breakdown: HIT 1.25, MD 0.7, MF 0.7, WD 0.6, WF 0.6
[Chart: Bloat Factor Breakdown for Baseline and Ideal; legend: WB Fill, WB Detection, Miss Fill, Miss Detection, Hit (Tag+Data), Hit]
• Baseline has a Bloat Factor of 3.8

POTENTIAL PERFORMANCE OF 22%
• 8-core, 8MB shared L3$, 1GB DRAM$, 16GB memory
• SPEC2006: 16 rate and 38 mix workloads
[Charts: Hit Latency (cycles), Baseline vs. Ideal; Performance (Speedup), Ideal]
• Reducing Bloat Factor improves performance

NOT ALL OPERATIONS ARE CREATED EQUAL
Opportunities to remove Secondary Operations:
1. Operations to improve cache performance
2. Operations to ensure correctness
[Diagram: a request for data; "Insert" into and "Exist?" lookup of the DRAM Cache]
• We propose BEAR to exploit these opportunities

AGENDA
• Introduction
• Background
• BEAR: Bandwidth-Efficient ARchitecture
  1. Bandwidth-Efficient Miss Fill
  2. Bandwidth-Efficient Writeback Detection
  3. Bandwidth-Efficient Miss Detection
• Results
• Summary

BANDWIDTH-EFFICIENT MISS FILL
• When Line X returns from memory, throw it away with probability P and insert it into the DRAM$ with probability 1-P; P = 90%
[Charts: Hit Rate Change (%), Hit Latency Reduction (%), and Performance Improvement for mcf, lbm, soplex, milc, mix1, mix2, mix3, mix4, mix6, mix7, and AVG; chart annotations: 12%, +10%, -5%]
• How to enable bypass without hit rate degradation?

BAB LIMITS THE HIT RATE LOSS
• Bandwidth-Aware Bypass (BAB)
• Some sets never bypass (no bypass); other sets use probabilistic bypass (90% bypass)
• Compare the two set hit rates: if X - Y < Δ is true, use Probabilistic Bypass; if false, use No Bypass
• Use Probabilistic Bypass when hit rate loss is small

BAB IMPROVES PERFORMANCE BY 5%
• Bloat Factor breakdown with BAB: HIT 1.25, MD 0.7, MF 0.1 (down from 0.7), WD 0.6, WF 0.6
[Charts: Bloat Factor Breakdown for Baseline, BAB; Performance (Speedup) for BAB]
• Hit Rate: Alloy 64%, BAB 62%
• BAB trades off small hit rate for 5% improvement

WHAT IS A WRITEBACK DETECTION?
• The L3$ evicts dirty Line Ynew; WB Detection asks whether Line Yold exists in the DRAM Cache
• How can we remove Writeback Detection?

DRAM CACHE PRESENCE FOR WB DETECTION
• DRAM Cache Presence (DCP): a presence indication kept with the L3$ line, alongside the V and D bits
• True: Only WB Fill
• False: WB Detection + WB Fill
• DRAM Cache Presence reduces WB Detection

DCP IMPROVES PERFORMANCE BY 4%
• Bloat Factor breakdown with BAB+DCP: HIT 1.25, MD 0.7, MF 0.1, WD 0.1 (down from 0.6), WF 0.6
[Charts: Bloat Factor Breakdown for Baseline, BAB, BAB+DCP; Performance (Speedup) for BAB, BAB+DCP]
• DCP provides 4% improvement in addition to BAB

WHAT IS A MISS DETECTION?
• The L3$ misses on Line X; Miss Detection asks whether Line X exists in the DRAM Cache (Tag + Data)
• Can we detect a miss w/o using BW?

NEIGHBOR’S TAG COMES FREE WITH DEMAND
• Address X maps to a Tag-and-Data (TAD) entry in the 2KB DRAM row buffer, next to a neighboring TAD
• A demand access transfers Tag+Data+Tag (8+64+8 = 80 Bytes), which includes the neighbor's tag
• The neighbor's tag is stored in the Neighboring Tag Cache (NTC)
• Neighboring Tag Cache saves Miss Detection

NTC SHOWS 2% PERFORMANCE IMPROVEMENT
• Bloat Factor breakdown with BAB+DCP+NTC (BEAR): HIT 1.25, MD 0.5 (down from 0.7), MF 0.1, WD 0.1, WF 0.6
[Charts: Bloat Factor Breakdown for Baseline, BAB, BAB+DCP, BEAR; Performance (Speedup) for BAB, BAB+DCP, BAB+DCP+NTC]
• NTC improves performance by additional 2%

AGENDA
• Introduction
• Background
• BEAR
• Results
• Summary

METHODOLOGY
Core Chips:
• 8 cores, 3.2 GHz
• 2-wide OOO
• 8MB 16-way L3 shared cache

| | DRAM Cache | Off-chip DRAM |
|---|---|---|
| Capacity | 1GB | 16GB |
| Bus | DDR 3.2GHz, 128-bit | DDR 1.6GHz, 64-bit |
| Channel | 4 channels, 16 banks/ch | 2 channels, 8 banks/ch |

• Baseline: Alloy Cache [MICRO’12]
• SPEC2006 (16 memory intensive apps): 16 rate and 38 mix workloads

BEAR REDUCES BLOAT FACTOR BY 32%
[Charts: Bloat Factor for Baseline, BEAR, Ideal; Performance (Speedup) for BEAR, Ideal; both over ALL54]
• BEAR improves performance by 11%

BW BLOAT IN TAGS-IN-SRAM DESIGNS
• Tags-In-SRAM (TIS) Designs: (1) storage overhead (64MB) and (2) access latency
[Chart: Bloat Factor for Alloy, BEAR, TIS (64MB); components: Hit, MF, WF]
• Tags-in-SRAM also has bandwidth bloat problem

TAGS-IN-SRAM PERFORMS SIMILAR TO BEAR
[Chart: Performance (Speedup) for Alloy, BEAR, TIS (64MB)]
• BEAR can be applied to reduce BW bloat in Tags-in-SRAM DRAM$ designs

SUMMARY
• 3D DRAM as a cache mitigates the memory wall.
• In DRAM caches, secondary operations slow down accesses to critical data.
• We propose BEAR, which targets three sources of bandwidth bloat in DRAM caches:
  1. Bandwidth-Efficient Miss Fill
  2. Bandwidth-Efficient Writeback Detection
  3. Bandwidth-Efficient Miss Detection
• Overall, BEAR reduces the bandwidth bloat by 32% and improves performance by 11%.

THANK YOU
Computer Architecture and Emerging Technologies Lab, Georgia Tech

Backup Slides

THE OVERHEAD OF BEAR IS NEGLIGIBLY SMALL

| Design | Cost | Total |
|---|---|---|
| Bandwidth-Aware Bypass | 8 bytes per thread | 64 bytes |
| DRAM Cache Presence | One bit per line in LLC | 16K bytes |
| Neighboring Tag Register | 44 bytes per bank | 3.2K bytes |
| Total Cost | | 19.2K bytes |

• Overall, BEAR incurs HW overhead of 19.2KB

COMPARISON TO OTHER DRAM$ DESIGNS
• Tags-In-DRAM Designs
[Chart: Performance, Speedup (w.r.t. No L4), for LH-cache, Alloy, Incl-Alloy, BEAR over RATE, MIX, ALL; chart annotations: 28%, 11%]
• BEAR outperforms other DRAM$ designs
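
The Bloat Factor arithmetic used throughout the talk can be checked with a short Python sketch. The per-operation values are the rounded numbers read off the slide charts, so the totals only approximate the reported figures (a baseline of 3.8 and a 32% reduction). The names `bloat_factor`, `with_changes`, and `BASELINE` are illustrative and not from the talk. Reading the HIT value of 1.25 as 80 bytes on the bus per 64-byte line is an assumption based on the Tag+Data+Tag transfer size.

```python
def bloat_factor(total_bytes, useful_bytes):
    """Bloat Factor = total bytes transferred / useful bytes transferred."""
    return total_bytes / useful_bytes


def with_changes(breakdown, **changes):
    """Return a copy of a per-operation breakdown with some entries replaced."""
    updated = dict(breakdown)
    updated.update(changes)
    return updated


# Rounded per-operation contributions from the slides (assumed exact here).
BASELINE = {"HIT": 1.25, "MD": 0.7, "MF": 0.7, "WD": 0.6, "WF": 0.6}

bab = with_changes(BASELINE, MF=0.1)   # Bandwidth-Aware Bypass cuts Miss Fill
dcp = with_changes(bab, WD=0.1)        # DRAM Cache Presence cuts WB Detection
bear = with_changes(dcp, MD=0.5)       # Neighboring Tag Cache cuts Miss Detection

baseline_total = sum(BASELINE.values())
bear_total = sum(bear.values())
reduction = 1 - bear_total / baseline_total

# Assumption: a hit moves 80 bytes (8B tag + 64B data + 8B neighbor tag)
# to deliver 64 useful bytes.
hit_bloat = bloat_factor(80, 64)

# Slide example: 7 bus transfers, of which 3 are useful hits.
example_bloat = bloat_factor(7, 3)

# Tag storage for a 1GB cache with 64B lines and 4B tags, in MB.
lines = (1 << 30) // 64
tag_storage_mb = lines * 4 / (1 << 20)

if __name__ == "__main__":
    print(f"baseline bloat factor ~ {baseline_total:.2f}")
    print(f"BEAR bloat factor     ~ {bear_total:.2f}")
    print(f"reduction             ~ {reduction:.0%}")
    print(f"hit-only bloat        = {hit_bloat}")
    print(f"tag storage           = {tag_storage_mb} MB")
```

With these rounded inputs the reduction comes out slightly above the reported 32%, which is consistent with the chart values being rounded to one decimal place.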