Presentation reviewing a state-of-the-art approach


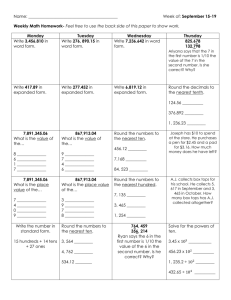

FLANN: Fast Library for Approximate Nearest Neighbors
Marius Muja and David G. Lowe, University of British Columbia
Presented by Mohammad Sadegh Riazi, Rice University

Outline
Introduction
  • What is FLANN?
  • Which programming languages does it support?
Applications
Approaches
  • Randomized k-d Tree Algorithm
  • The Priority Search K-Means Tree Algorithm
Experiments
  • Data Dimensionality
  • Search Precision
  • Automatic Selection of the Optimal Algorithm
Scaling Nearest Neighbor Search
What we are going to do
References

What is FLANN?
FLANN is a library for performing fast approximate nearest neighbor searches in high-dimensional spaces. It also includes a system for automatically choosing the best algorithm and the optimal parameters for a given dataset.

Which programming languages does it support?
Written in C++, with bindings for:
• C
• MATLAB
• Python

Applications
• Cluster analysis
• Pattern recognition
• Statistical classification
• Computational geometry
• Data compression
• Databases

Approaches: Multiple Randomized k-d Tree Algorithm
• Multiple trees are searched in parallel
• The splitting dimension is chosen randomly from the top N_D dimensions with the highest variance
• N_D is fixed to 5
• Usually 20 trees are used
• Gives the best performance on most datasets

Approaches: The Priority Search K-Means Tree Algorithm
• Partitions the data into K distinct regions
• Recursively partitions each region until a leaf node contains no more than K items
• Picks the initial centers using random selection or Gonzales' algorithm
• I_max is the maximum number of k-means iterations used when forming the regions
• Better performance than the k-d tree at higher precisions

Approaches: Complexity Comparison

Experiments: Data Dimensionality
• Dimensionality has a great impact on nearest neighbor matching performance
• Whether performance decreases or increases depends strongly on the type of data
• For random data samples, performance decreases sharply as dimensionality increases
• However, for image patches and other real-life data, performance increases as dimensionality increases.
  This can be explained by the fact that, in such data, each dimension carries some information about the other dimensions, so the exact nearest neighbor is more likely to be found within a few search iterations.

Experiments: Search Precision
• The desired search precision determines the degree of speedup
• Accepting a precision as low as 60% can yield a speedup of three orders of magnitude over linear search

Experiments: Automatic Selection of the Optimal Algorithm
• The algorithm itself is treated as a parameter
• Each algorithm can perform differently on different datasets
• Each algorithm has its own internal parameters
• Performance depends on the dimensionality (which has a great impact), the size and structure of the data (correlation?), and the desired precision
• The k-means tree and randomized k-d trees give the best performance on most datasets

Experiments: How to Find the Best Internal Parameters
• First, a global grid search locates a promising region of the parameter space
• Then, that region is optimized locally with the Nelder-Mead downhill simplex method
• The optimization can be run on the whole dataset or on a portion of it
• This is repeated for every available algorithm
• Result: the best algorithm together with its internal parameters
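The two-stage parameter search above can be sketched as follows. This is a minimal sketch under stated assumptions, not FLANN's tuner: instead of timing real index builds and searches, it minimizes `toy_cost`, a hypothetical bowl-shaped stand-in. The parameters are `trees` (the randomized k-d tree setting mentioned in the slides) and a hypothetical `log2_checks`, both treated as continuous. A coarse grid search picks the starting point, then a simplified Nelder-Mead simplex (reflection, expansion, inside contraction only, and shrink) refines it locally.

```python
import itertools


def toy_cost(params):
    """Hypothetical stand-in for a measured search cost (NOT FLANN's cost model).

    params = (trees, log2_checks), treated as continuous values.
    The minimum is placed at (5.5, 6.3) purely for illustration.
    """
    trees, log2_checks = params
    return (trees - 5.5) ** 2 + (log2_checks - 6.3) ** 2


def grid_search(cost, grid):
    """Stage 1: evaluate every combination on a coarse grid, return the best."""
    return min(itertools.product(*grid), key=cost)


def nelder_mead(cost, start, step=1.0, iters=200):
    """Stage 2: simplified Nelder-Mead downhill simplex starting at `start`."""
    n = len(start)
    # Initial simplex: the start point plus one point offset along each axis.
    simplex = [list(start)]
    for i in range(n):
        point = list(start)
        point[i] += step
        simplex.append(point)
    for _ in range(iters):
        simplex.sort(key=cost)
        best, worst = simplex[0], simplex[-1]
        # Centroid of every vertex except the worst one.
        centroid = [sum(p[i] for p in simplex[:-1]) / n for i in range(n)]

        def along(t):
            # Point on the line from the worst vertex through the centroid.
            return [c + t * (c - w) for c, w in zip(centroid, worst)]

        reflected = along(1.0)
        if cost(reflected) < cost(best):
            expanded = along(2.0)
            simplex[-1] = expanded if cost(expanded) < cost(reflected) else reflected
        elif cost(reflected) < cost(simplex[-2]):
            simplex[-1] = reflected
        else:
            contracted = along(-0.5)  # inside contraction towards the centroid
            if cost(contracted) < cost(worst):
                simplex[-1] = contracted
            else:
                # Shrink every other vertex halfway towards the best one.
                simplex = [best] + [[b + 0.5 * (x - b) for b, x in zip(best, p)]
                                    for p in simplex[1:]]
    return min(simplex, key=cost)


grid = ([1, 2, 4, 8, 16], [4, 5, 6, 7, 8])  # trees, hypothetical log2(checks)
coarse = grid_search(toy_cost, grid)
refined = nelder_mead(toy_cost, coarse)
print("grid search:", coarse)
print("Nelder-Mead:", [round(v, 3) for v in refined])
```

The grid stage matters because the simplex method only searches locally around its starting point; a good coarse start keeps it from refining a poor region of the parameter space.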

Scaling Nearest Neighbor Search
• Applications can achieve better results by using larger-scale datasets
• Problem: such datasets cannot be loaded into the memory of a single machine
• Solutions:
  • Dimensionality reduction
  • Keeping the data on disk and loading it into memory as needed (poor performance)
  • Distributing the data across multiple machines (described next)

Scaling Nearest Neighbor Search
• Nearest neighbor matching is distributed among N machines using a MapReduce-like algorithm
• Each machine only has to index and search 1/N of the whole dataset
• The final result is obtained by merging the partial results from all machines in the cluster once they have completed their searches
• Implementation using the Message Passing Interface (MPI) specification:
  • The client sends the query to one of the computers in the MPI cluster (the master server)
  • The master server broadcasts the query to all processes in the cluster
  • Each process runs the nearest neighbor search in parallel on its own fraction of the data
  • When the search is complete, an MPI reduce operation merges the results back into the master process, and the final result is returned to the client

Scaling Nearest Neighbor Search: Implementation Using the Message Passing Interface (MPI)
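The broadcast-and-reduce scheme above can be simulated in a single process to check that merging partial results is correct. This is a sketch, not FLANN's MPI code: each "machine" is just a slice of a Python list searched exhaustively (standing in for its local index), the broadcast is a loop, and the reduce step keeps the k smallest (distance, index) pairs. All function names are hypothetical. Because every true nearest neighbor is among the top k of the shard that holds it, merging the per-shard top-k lists gives exactly the global top k.

```python
import heapq


def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def local_knn(shard, offset, query, k):
    """One hypothetical 'machine': exact k-NN over its own slice of the data.

    Returns (distance, global_index) pairs; `offset` maps local to global indices.
    """
    scored = ((squared_l2(p, query), offset + i) for i, p in enumerate(shard))
    return heapq.nsmallest(k, scored)


def reduce_partials(partials, k):
    """Reduce step: keep the k best pairs across all partial results."""
    return heapq.nsmallest(k, (pair for part in partials for pair in part))


def distributed_knn(data, n_machines, query, k):
    """Split data into contiguous shards, 'broadcast' the query, then reduce."""
    chunk = -(-len(data) // n_machines)  # ceiling division
    partials = []
    for m in range(n_machines):
        start = m * chunk
        partials.append(local_knn(data[start:start + chunk], start, query, k))
    return reduce_partials(partials, k)


# Deterministic toy data: 200 points in 2-D.
data = [[(i * 37 % 101) / 101.0, (i * 53 % 97) / 97.0] for i in range(200)]
query = [0.5, 0.5]
print(distributed_knn(data, 4, query, 3))
```

With real MPI, the loop would be replaced by a broadcast of the query to every process, and `reduce_partials` would play the role of the merge performed in the reduce operation.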

What we are going to do
• Develop and simulate an approach for preprocessing the input queries to achieve better performance
• Try to group the input data so that the search does not need to go through all of the data in the tree
• Explore the trade-off between throughput and latency

References
[1] Marius Muja and David G. Lowe, "Scalable Nearest Neighbor Algorithms for High Dimensional Data", IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), Vol. 36, 2014.
[2] Marius Muja and David G. Lowe, "Fast Matching of Binary Features", Conference on Computer and Robot Vision (CRV), 2012.
[3] Marius Muja and David G. Lowe, "Fast Approximate Nearest Neighbors with Automatic Algorithm Configuration", International Conference on Computer Vision Theory and Applications (VISAPP'09), 2009.

Thank you for your attention

Questions?