LOOKUPS AND CLASSIFICATION

advertisement

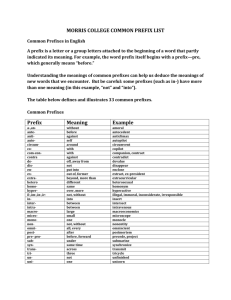

Address Lookup and Classification
EE384Y, May 23, 2006
High Performance Switching and Routing
Telecom Center Workshop: Sept 4, 1997.
Pankaj Gupta, Principal Architect and Member of Technical Staff, Netlogic Microsystems
pankaj@netlogicmicro.com
http://klamath.stanford.edu/~pankaj

Generic Router Architecture (Review from EE384x)
[Figure: an arriving packet (Hdr + Data) passes through header processing: Lookup IP Address, then Update Header. The lookup uses an address table that maps IP address to next hop (~1M prefixes, off-chip DRAM). The packet is then queued in buffer memory (~1M packets, off-chip DRAM).]

Lookups Must be Fast
Year | Aggregate line rate | Arrival rate of 40B POS packets (million pkts/s)
1997 | 622 Mb/s | 1.56
1999 | 2.5 Gb/s | 6.25
2001 | 10 Gb/s | 25
2003 | 40 Gb/s | 100
2006 | 80 Gb/s | 200
1. The lookup mechanism must be simple and easy to implement.
2. Memory access time is the bottleneck.
200 Mpps × 2 lookups/pkt = 400 Mlookups/s → 2.5 ns per lookup

Memory Technology (2006)
Technology | Max single-chip density | $/chip ($/MByte) | Access speed | Watts/chip
Networking DRAM | 64 MB | $30-$50 ($0.50-$0.75) | 40-80 ns | 0.5-2 W
SRAM | 8 MB | $50-$60 ($5-$8) | 3-4 ns | 2-3 W
TCAM | 2 MB | $200-$250 ($100-$125) | 4-8 ns | 15-30 W
Note: Price, speed, and power are manufacturer and market dependent.

Lookup Mechanism is Protocol Dependent
Networking protocol | Lookup mechanism | Techniques we will study
MPLS, ATM, Ethernet | Exact match search | Direct lookup; associative lookup; hashing; binary/multi-way search trie/tree
IPv4, IPv6 | Longest-prefix match search | Radix trie and variants; compressed trie; binary search on prefix intervals

Outline
I. Routing Lookups
• Overview
• Exact matching
  - Direct lookup
  - Associative lookup
  - Hashing
  - Trees and tries
• Longest prefix matching
  - Why LPM?
  - Tries and compressed tries
  - Binary search on prefix intervals
• References
II. Packet Classification

Exact Matches in ATM/MPLS: Direct Memory Lookup
[Figure: the VCI/MPLS label is used directly as the address into memory, which returns (outgoing port, new VCI/label).]
• The VCI/label space is 24 bits: a maximum of 16M addresses. With 64b of data per entry, this is 1Gb of memory.
• The VCI/label space is private to one link.
• Therefore, the table size can be "negotiated".
• Alternatively, use a level of indirection.

Exact Matches in Ethernet Switches
• Layer-2 addresses are usually 48 bits long.
• The address is global, not just local to the link.
• The range/size of the address is not "negotiable" (as it is with ATM/MPLS).
• 2^48 > 10^12; therefore, we cannot hold all addresses in a table and use direct lookup.

Exact Matches in Ethernet Switches (Associative Lookup)
• Associative memory (aka Content Addressable Memory, CAM) compares all entries in parallel against the incoming data.
[Figure: the 48-bit network address is presented to the associative memory ("CAM"); the location of the matching entry indexes a "normal" memory that holds the port.]

Exact Matches: Hashing
[Figure: a hashing function maps the 48-bit network address to a memory address of, say, 16 bits; each memory location holds a pointer to a list/bucket containing the network addresses (and their data) that hash to it.]
• Use a pseudo-random hash function (performance is relatively insensitive to the actual function).
• The bucket is searched linearly (or with binary search, etc.).
• This leads to an unpredictable number of memory references.

Exact Matches Using Hashing: Number of Memory References
Expected number of memory references:
$$E_R = \frac{1}{2}\left(1 + E[\text{length of list} \mid \text{list is not empty}]\right) = \frac{1}{2}\left(1 + \frac{\alpha}{1 - \left(1 - \frac{1}{N}\right)^{M}}\right)$$
where E_R = expected number of memory references, M = number of addresses stored in the table, N = number of linked lists, and α = M/N. (A linear search that finds an entry in a list of length L reads (1 + L)/2 entries on average; a given list is empty with probability (1 - 1/N)^M, so its expected length, given that it is not empty, is α / (1 - (1 - 1/N)^M).)

Exact Matches in Ethernet Switches: Perfect Hashing
[Figure: a hashing function maps the 48-bit network address to a memory address of, say, 16 bits; the memory holds the port directly.]
There always exists a perfect hash function.
Goal: with a perfect hash function, a memory lookup always takes O(1) memory references.
Problem:
- Finding perfect hash functions (particularly a minimal perfect hash) is complex.
- Updates make such a hash function even more complex.
- Advanced techniques: multiple hash functions, Bloom filters…

Exact Matches in Ethernet Switches: Hashing
• Advantages:
  - Simple to implement
  - Expected lookup time is small
  - Updates are fast (except with perfect hash functions)
• Disadvantages:
  - Relatively inefficient use of memory
  - Non-deterministic lookup time (in rare cases)
• Attractive for software-based switches. However, hardware platforms are moving to other techniques (though they can do well with a more sophisticated form of hashing).

Exact Matches in Ethernet Switches: Trees and Tries
[Figure: left, a binary search tree over N entries, of depth log2 N; right, a binary search trie that branches on one address bit per level, with example leaves 010 and 111.]
• Binary search tree: lookup time depends on the table size but is independent of the address length; storage is O(N).
• Binary search trie: lookup time is bounded and independent of the table size; storage is O(NW), where W is the address length in bits.

Exact Matches in Ethernet Switches: Multiway Tries
[Figure: a 16-ary search trie. Each node holds 16 (nibble, pointer) entries, from "0000, ptr" to "1111, ptr"; ptr = 0 means no children. Example stored addresses: 000011110000 and 111111111111.]
Q: Why can't we just make it a 2^48-ary trie?

Exact Matches in Ethernet Switches: Multiway Tries
As the degree increases, more and more pointers are "0".
Degree of tree | # Memory references | # Nodes (×10^6) | Total memory (MBytes) | Fraction wasted (%)
2 | 48 | 1.09 | 4.3 | 49
4 | 24 | 0.53 | 4.3 | 73
8 | 16 | 0.35 | 5.6 | 86
16 | 12 | 0.25 | 8.3 | 93
64 | 8 | 0.17 | 21 | 98
256 | 6 | 0.12 | 64 | 99.5
Table produced from 2^15 randomly generated 48-bit addresses.

Exact Matches in Ethernet Switches: Trees and Tries
• Advantages:
  - Fixed lookup time
  - Simple to implement and update
• Disadvantages:
  - Inefficient use of memory and/or requires a large number of memory references
More sophisticated algorithms compress "sparse" nodes.
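The chained-hashing lookup and the expected-memory-reference formula from the hashing slides above can be checked with a small simulation. What follows is a minimal Python sketch under stated assumptions, not an implementation from the lecture. It uses a multiplicative (Fibonacci) hash in place of the unspecified pseudo-random hash function and Python lists in place of linked lists, and it counts one memory reference per list entry read. The names `ChainedHashTable`, `expected_refs`, and `empirical_refs` are hypothetical.

```python
import random


class ChainedHashTable:
    """Exact-match table (48-bit address -> port) using separate chaining.

    There are N = 2**bits lists; each is searched linearly on lookup.
    """

    def __init__(self, bits: int = 16):
        self.bits = bits
        self.buckets = [[] for _ in range(1 << bits)]

    def _hash(self, address: int) -> int:
        # Assumed hash: top `bits` bits of a 64-bit multiplicative product.
        product = (address * 0x9E3779B97F4A7C15) & 0xFFFFFFFFFFFFFFFF
        return product >> (64 - self.bits)

    def insert(self, address: int, port: int) -> None:
        bucket = self.buckets[self._hash(address)]
        for i, (stored, _) in enumerate(bucket):
            if stored == address:
                bucket[i] = (address, port)  # overwrite an existing entry
                return
        bucket.append((address, port))

    def lookup(self, address: int):
        """Return (port or None, number of list entries read)."""
        refs = 0
        for stored, port in self.buckets[self._hash(address)]:
            refs += 1
            if stored == address:
                return port, refs
        return None, refs


def expected_refs(m: int, n: int) -> float:
    """E_R = 1/2 * (1 + alpha / (1 - (1 - 1/N)^M)) with alpha = M/N."""
    alpha = m / n
    return 0.5 * (1 + alpha / (1 - (1 - 1 / n) ** m))


def empirical_refs(table: ChainedHashTable) -> float:
    """Mean of (1 + L)/2 over the non-empty lists (L = list length)."""
    lengths = [len(b) for b in table.buckets if b]
    return sum((1 + length) / 2 for length in lengths) / len(lengths)


if __name__ == "__main__":
    rng = random.Random(1)
    n_bits, m = 16, 1 << 16                  # N = 65536 lists, M = 65536 addresses
    table = ChainedHashTable(bits=n_bits)
    addresses = set()
    while len(addresses) < m:
        addresses.add(rng.getrandbits(48))   # random 48-bit MAC-like addresses
    for address in addresses:
        table.insert(address, address % 48)  # arbitrary port numbers 0..47
    print("formula  :", round(expected_refs(m, 1 << n_bits), 3))
    print("simulated:", round(empirical_refs(table), 3))
```

With M = N (α = 1) the formula evaluates to about 1.29 references, and the simulated value should come out close to it. Like the formula, `empirical_refs` averages over non-empty lists rather than over stored addresses; a lookup for an absent address reads its whole list.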
Longest Prefix Matching: IPv4 Addresses
• 32-bit addresses
• Dotted-quad notation: e.g., 12.33.32.1
• Can be represented as integers on the IP number line [0, 2^32 - 1]: a.b.c.d denotes the integer a·2^24 + b·2^16 + c·2^8 + d.
[Figure: the IP number line, from 0.0.0.0 to 255.255.255.255.]

Class-based Addressing
[Figure: the IP number line divided into classes A (starting at 0.0.0.0), B (starting at 128.0.0.0), C (starting at 192.0.0.0), D, and E.]
Class | Range | MS bits | netid | hostid
A | 0.0.0.0 - 127.255.255.255 | 0 | bits 1-7 | bits 8-31
B | 128.0.0.0 - 191.255.255.255 | 10 | bits 2-15 | bits 16-31
C | 192.0.0.0 - 223.255.255.255 | 110 | bits 3-23 | bits 24-31
D (multicast) | 224.0.0.0 - 239.255.255.255 | 1110 | - | -
E (reserved) | 240.0.0.0 - 255.255.255.255 | 1111 | - | -

Lookups with Class-based Addresses
[Figure: a table of netid → port entries: 23 → Port 1 (class A), 186.21 → Port 2 (class B), 192.33.32 → Port 3 (class C). The destination 192.33.32.1 is a class C address, so its netid 192.33.32 is found with an exact match and the packet goes to Port 3.]

Problems with Class-based Addressing
• Fixed netid-hostid boundaries were too inflexible
  - Caused rapid depletion of the address space
• Exponential growth in the size of routing tables

Early Exponential Growth in Routing Table Sizes
[Figure: number of BGP routes advertised over time.]

Classless Addressing (and CIDR)
• Eliminated class boundaries
• Introduced the notion of a variable-length prefix between 0 and 32 bits long
• Prefixes are represented as P/l: e.g., 122/8, 212.128/13, 34.43.32/22, 10.32.32.2/32, etc.
• An l-bit prefix represents an aggregation of 2^(32-l) IP addresses

CIDR: Hierarchical Route Aggregation
[Figure: backbone routers R1-R4. ISP P (router R2) holds 192.2.0/22 and serves site S (192.2.1/24) and site T (192.2.2/24); ISP Q (router R4) holds 200.11.0/22. The backbone routing table at R1 keeps the single aggregate entry 192.2.0/22 → R2 instead of separate routes for the two sites. On the IP number line, 192.2.1/24 and 192.2.2/24 lie inside 192.2.0/22.]

Post-CIDR Routing Table Sizes
[Figure: number of active BGP prefixes over time.]
Optional Exercise: What would this graph look like without CIDR?
(Pick any one AS at random, and plot the two curves side by side.)
Source: http://bgp.potaroo.net

Routing Lookups with CIDR
[Figure: forwarding table 192.2.2/24 → R3, 192.2.0/22 → R2, 200.11.0/22 → R4, shown on the IP number line with 192.2.2/24 nested inside 192.2.0/22. Example destinations: 192.2.0.1, 192.2.2.100, 200.11.0.33.]
Optional Exercise: Find the "nesting distribution" for routes in your randomly picked AS.
LPM: find the most specific route, i.e., the longest matching prefix among all the prefixes matching the destination address of an incoming packet. For example, 192.2.2.100 matches both 192.2.0/22 and 192.2.2/24; the longer prefix wins, so the packet goes to R3.

Longest Prefix Match is Harder than Exact Match
• The destination address of an arriving packet does not carry the information needed to determine the length of the longest matching prefix.
• Hence, one needs to search the space of all prefix lengths, as well as the space of all prefixes of a given length.

LPM in IPv4
Use 32 exact-match algorithms for LPM!
[Figure: the network address is matched in parallel against prefixes of length 1, length 2, ..., length 32, each by its own exact-match unit; a priority encoder picks the longest match, which gives the port.]

Metrics for Lookup Algorithms
• Speed (= number of memory accesses)
• Storage requirements (= amount of memory)
• Low update time (support >10K updates/s)
• Scalability
  - With length of prefix: IPv4 unicast (32b), Ethernet (48b), IPv4 multicast (64b), IPv6 unicast (128b)
  - With size of routing table (sweet spot for today's designs = 1 million)
• Flexibility in implementation
• Low preprocessing time

Radix Trie (Recap)
Prefix | Next hop
P1 = 111* | H1
P2 = 10* | H2
P3 = 1010* | H3
P4 = 10101 | H4
Trie node: next-hop-ptr (if prefix), left-ptr, right-ptr.
[Figure: the binary trie for P1-P4 (nodes A-I). Lookup 10111 follows bits 1, 0, 1, finds no child for the next bit 1, and returns P2 (H2), the last prefix seen on the path. Adding P5 = 1110* creates a new node below P1.]

Radix Trie
• W-bit prefixes: O(W) lookup, O(NW) storage, and O(W) update complexity
Advantages: simplicity; extensible to wider fields
Disadvantages: worst-case lookup is slow; wastage of storage space in chains

Leaf-pushed Binary Trie
Trie node: left-ptr or next-hop, right-ptr or next-hop.
[Figure: the trie for P1-P4 with all prefixes pushed down to the leaves, so each field of a node holds either a child pointer or a next hop; P2 is replicated into two leaves.]

PATRICIA
[Figure: a Patricia tree for the prefixes P1-P4 (nodes A-G). Each internal node stores a bit-position to test (positions 1-5, counted from the left) together with left-ptr and right-ptr, so nodes with a single child are skipped. The example traces Lookup 10111.]
Prefix table: P1 = 111* → H1, P2 = 10* → H2, P3 = 1010* → H3, P4 = 10101 → H4 (as in the radix trie example).

PATRICIA
• W-bit prefixes: O(W^2) lookup, O(N) storage, and O(W) update complexity
Advantages: decreased storage; extensible to wider fields
Disadvantages: worst-case lookup is slow; backtracking makes implementation complex

Path-compressed Tree
[Figure: a path-compressed tree for the same prefixes P1-P4 (nodes A-E), with internal nodes (1, -, 2), (10, P2, 4), and (1010, P3, 5), and P1 and P4 at leaves. The example traces Lookup 10111.]
Path-compressed tree node structure: variable-length bitstring, next-hop (if prefix present), bit-position, left-ptr, right-ptr.

Path-compressed Tree
• W-bit prefixes: O(W) lookup, O(N) storage, and O(W) update complexity
Advantages: decreased storage
Disadvantages: worst-case lookup is slow

Multi-bit Tries
Binary trie: depth = W, degree = 2, stride = 1 bit
Multi-ary trie: depth = W/k, degree = 2^k, stride = k bits

Prefix Expansion with Multi-bit Tries
If the stride is k bits, prefixes whose lengths are not a multiple of k need to be expanded. E.g., for k = 2:
Prefix | Expanded prefixes
0* | 00*, 01*
11* | 11*
The maximum number of expanded prefixes corresponding to one non-expanded prefix is 2^(k-1).

Four-ary Trie (k = 2)
A four-ary trie node: next-hop-ptr (if prefix), ptr00, ptr01, ptr10, ptr11.
[Figure: the four-ary trie for P1-P4 (nodes A-H). P1 = 111* is expanded into two 4-bit prefixes, P11 and P12, and P4 = 10101 into two 6-bit prefixes, P41 and P42. The example traces Lookup 10111.]

Prefix Expansion Increases Storage Consumption
• Replication of next-hop ptr
• Greater number of unused (null) pointers in a node
Time ~ W/k
Storage ~ (NW/k) × 2^(k-1)
Optional Exercise: The increase in the number of null pointers in LPM is a worse problem than in exact match. Why?

Generalization: Different Strides at Each Trie Level
• 16-8-8 split
• 4-10-10-8 split
• 24-8 split
• 21-3-8 split
Optional Exercise: Why does this not work well for IPv6?
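The "LPM in IPv4" idea described earlier (one exact-match search per prefix length, with the longest hit winning) can be sketched in software using the forwarding table from the "Routing Lookups with CIDR" slide. This is a hedged sketch, not a hardware design: Python dictionaries stand in for the exact-match units, a /0 table is added for a default route, and lengths are probed sequentially from 32 down instead of in parallel with a priority encoder. `PerLengthLPM` is a hypothetical name; `ipaddress` is Python's standard-library module.

```python
import ipaddress


class PerLengthLPM:
    """LPM built from exact-match tables, one per prefix length 0..32."""

    def __init__(self):
        # tables[l] maps the top l bits of an address (as an int) to a next hop.
        # Length 0 (a default route) is included in addition to lengths 1..32.
        self.tables = [{} for _ in range(33)]

    def add(self, prefix: str, next_hop: str) -> None:
        net = ipaddress.IPv4Network(prefix)  # e.g. "192.2.0.0/22"
        length = net.prefixlen
        # int() of an address is a*2^24 + b*2^16 + c*2^8 + d (the IP number line).
        key = int(net.network_address) >> (32 - length)
        self.tables[length][key] = next_hop

    def lookup(self, address: str):
        addr = int(ipaddress.IPv4Address(address))
        # Hardware would probe all lengths in parallel and priority-encode the
        # results; here we probe from the longest length down and stop at the
        # first exact-match hit, which is the longest matching prefix.
        for length in range(32, -1, -1):
            next_hop = self.tables[length].get(addr >> (32 - length))
            if next_hop is not None:
                return next_hop
        return None


if __name__ == "__main__":
    fib = PerLengthLPM()
    fib.add("192.2.2.0/24", "R3")
    fib.add("192.2.0.0/22", "R2")
    fib.add("200.11.0.0/22", "R4")
    for dst in ("192.2.0.1", "192.2.2.100", "200.11.0.33"):
        print(dst, "->", fib.lookup(dst))
```

Run as a script, it prints R2, R3, and R4 for the three example destinations: 192.2.2.100 hits both 192.2.0/22 and 192.2.2/24, and the /24 is found first. A lookup can cost up to 33 probes in this sequential form, which is why hardware versions search all lengths at once.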
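A binary (radix) trie for the example prefixes P1-P4 can be sketched as follows, assuming prefixes and addresses are given as bit strings ("1010" for 1010*). `TrieNode` and `BinaryTrie` are hypothetical names. The lookup consumes one bit per step and remembers the last prefix node it passed, which is where the O(W) lookup and O(W) update bounds come from.

```python
from typing import Optional


class TrieNode:
    """Binary trie node: left-ptr, right-ptr, and next-hop-ptr (if prefix)."""

    def __init__(self):
        self.children = [None, None]  # children[0] = left-ptr, children[1] = right-ptr
        self.next_hop = None          # set only if a prefix ends at this node


class BinaryTrie:
    def __init__(self):
        self.root = TrieNode()

    def insert(self, prefix: str, next_hop: str) -> None:
        """Insert a prefix given as a bit string, e.g. "1010" for 1010*. O(W)."""
        node = self.root
        for bit in prefix:
            b = int(bit)
            if node.children[b] is None:
                node.children[b] = TrieNode()
            node = node.children[b]
        node.next_hop = next_hop

    def lookup(self, address: str) -> Optional[str]:
        """Follow one bit per step, remembering the last prefix passed. O(W)."""
        node = self.root
        best = node.next_hop
        for bit in address:
            node = node.children[int(bit)]
            if node is None:
                break
            if node.next_hop is not None:
                best = node.next_hop
        return best


if __name__ == "__main__":
    trie = BinaryTrie()
    for prefix, hop in [("111", "H1"), ("10", "H2"), ("1010", "H3"), ("10101", "H4")]:
        trie.insert(prefix, hop)
    print(trie.lookup("10111"))   # H2: P2 = 10* is the longest match
    trie.insert("1110", "H5")     # add P5 = 1110*
    print(trie.lookup("11101"))   # H5
```

The first lookup reproduces the slide's example (10111 → P2, next hop H2); after P5 = 1110* is added, 11101 resolves to H5 instead of H1.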
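Prefix expansion and the four-ary trie (k = 2) can be sketched the same way. The assumptions are illustrative. Addresses are bit strings whose length is a multiple of k (6 bits here, so the slide's lookup key 10111 is extended with a trailing 0). When expanded prefixes collide in one slot, the one derived from the longer original prefix wins, which correct LPM requires. `MultibitNode` and `MultibitTrie` are hypothetical names.

```python
class MultibitNode:
    """Node of a 2^k-ary trie: 2^k child pointers plus an optional next hop."""

    def __init__(self, k: int):
        self.children = [None] * (1 << k)  # ptr00, ptr01, ptr10, ptr11 when k = 2
        self.next_hop = None               # next hop of the expanded prefix ending here
        self.orig_len = -1                 # length of the original prefix it came from


class MultibitTrie:
    def __init__(self, k: int = 2):
        self.k = k
        self.root = MultibitNode(k)

    def expand(self, prefix: str) -> list:
        """Expand a prefix to the next multiple of k bits (at most 2^(k-1) copies)."""
        pad = (-len(prefix)) % self.k
        if pad == 0:
            return [prefix]
        return [prefix + format(i, f"0{pad}b") for i in range(1 << pad)]

    def insert(self, prefix: str, next_hop: str) -> None:
        for p in self.expand(prefix):
            node = self.root
            for i in range(0, len(p), self.k):
                idx = int(p[i:i + self.k], 2)
                if node.children[idx] is None:
                    node.children[idx] = MultibitNode(self.k)
                node = node.children[idx]
            # If expansions collide, the longer original prefix must win.
            if len(prefix) >= node.orig_len:
                node.next_hop, node.orig_len = next_hop, len(prefix)

    def lookup(self, address: str):
        """Consume k bits per step (W/k steps); len(address) must be a multiple of k."""
        node, best = self.root, self.root.next_hop
        for i in range(0, len(address), self.k):
            node = node.children[int(address[i:i + self.k], 2)]
            if node is None:
                break
            if node.next_hop is not None:
                best = node.next_hop
        return best


if __name__ == "__main__":
    trie = MultibitTrie(k=2)
    for prefix, hop in [("111", "H1"), ("10", "H2"), ("1010", "H3"), ("10101", "H4")]:
        trie.insert(prefix, hop)
    print(trie.expand("0"), trie.expand("111"))  # ['00', '01'] ['1110', '1111']
    print(trie.lookup("101110"))                  # H2 (slide's key 10111, padded with a 0)
```

Each lookup step reads one node and consumes k bits, so the depth is W/k. The price is the replicated next hops (P1 and P4 are each stored twice) and the null pointers in sparsely used 4-way nodes.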
Choice of Strides: Controlled Prefix Expansion [Sri98]
Given a forwarding table and a desired number of memory accesses in the worst case (i.e., the maximum trie depth, D), a dynamic programming algorithm computes the optimal sequence of strides that minimizes the storage requirements. It runs in O(W^2 D) time.
Advantages: optimal storage under these constraints
Disadvantages: updates lead to suboptimality anyway; hardware implementation is difficult

Binary Search on Prefix Intervals [Lampson98]
Prefix | Interval
P1 /0 | 0000…1111
P2 00/2 | 0000…0011
P3 1/1 | 1000…1111
P4 1101/4 | 1101…1101
P5 001/3 | 0010…0011
[Figure: the 4-bit number line 0000-1111 is cut at the prefix endpoints into six intervals, each with a single longest matching prefix: I1 = 0000-0001 (P2), I2 = 0010-0011 (P5), I3 = 0100-0111 (P1), I4 = 1000-1100 (P3), I5 = 1101 (P4), I6 = 1110-1111 (P3).]

Alphabetic Tree
[Figure: a binary search tree over the interval endpoints. The root compares the address with 0111; its left subtree compares with 0011 and then 0001, and its right subtree with 1100 and then 1101. The leaves I1-I6 are annotated with access probabilities 1/2, 1/4, 1/8, 1/16, 1/32, 1/32.]

Another Alphabetic Tree
[Figure: a skewed alphabetic tree over the same intervals that compares with 0001, 0011, 0111, 1100, and 1101 in turn, so I1 (probability 1/2) is reached after one comparison, I2 (1/4) after two, and so on.]

Multiway Search on Intervals
• W-bit, N prefixes: O(log N) lookup, O(N) storage
Advantages: storage is linear; can be "balanced"; lookup time is independent of W
Disadvantages: lookup time depends on N; incremental updates are more complex than with tries; each node is big, requiring higher memory bandwidth

Routing Lookups: References
• [lulea98] A. Brodnik, S. Carlsson, M. Degermark, S. Pink. "Small Forwarding Tables for Fast Routing Lookups", Sigcomm 1997, pp. 3-14. [Example of techniques for decreasing storage consumption]
• [gupta98] P. Gupta, S. Lin, N. McKeown. "Routing lookups in hardware at memory access speeds", Infocom 1998, vol. 3, pp. 1241-1248. [Example of a hardware-optimized trie with increased storage consumption]
• P. Gupta, B. Prabhakar, S. Boyd. "Near-optimal routing lookups with bounded worst case performance", Proc. Infocom, March 2000. [Example of deliberately skewing alphabetic trees]
• P. Gupta. "Algorithms for routing lookups and packet classification", PhD Thesis, Ch. 1 and 2, Dec 2000, available at http://yuba.stanford.edu/~pankaj/phd.html [Background and introduction to LPM]
• [lampson98] B. Lampson, V. Srinivasan, G. Varghese. "IP lookups using multiway and multicolumn search", Infocom 1998, vol. 3, pp. 1248-1256. [Multi-way search]
• [PerfHash] Y. Lu, B. Prabhakar, F. Bonomi. "Perfect hashing for network applications", ISIT, July 2006.
• [LC-trie] S. Nilsson, G. Karlsson. "Fast address lookup for Internet routers", IFIP Intl. Conf. on Broadband Communications, Stuttgart, Germany, April 1-3, 1998.
• [sri98] V. Srinivasan, G. Varghese. "Fast IP lookups using controlled prefix expansion", Sigmetrics, June 1998.
• [wald98] M. Waldvogel, G. Varghese, J. Turner, B. Plattner. "Scalable high speed IP routing lookups", Sigcomm 1997, pp. 25-36.