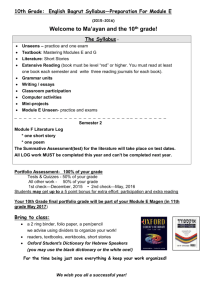

slides - dimva 2014


The Economics and Psychology of Botnets
Ross Anderson
Cambridge
July 10th 2014, DIMVA 2014

Traditional systems engineering
• Build systems for scalability – choose efficient algorithms and data structures
• Once you start to distribute stuff, pay attention to consistency (file locking, fault tolerance etc)
• See security as ‘keeping the bad guys out’ by adding crypto, authentication, filtering
• React to malware by hardening platforms, writing scanners, talking about safe computing …
• But … about 2000, some of us started to realize that this is not enough!

Economics also vital
• Since 2000, we have started to apply economic analysis to security and dependability
• Systems often fail because the folks who guard them, or who could fix them, have insufficient incentives
  – If electricity generation companies don’t have an incentive to provide reserve capacity, there will be blackouts
  – Where banks can dump fraud risk on customers or merchants, fraud increases
  – What about taking an insecure computer online?
• Insecurity and fragility are often an ‘externality’

Information security economics
• In the last 12 years, it’s grown from zero to over 100 active researchers, working on many topics!
• Models of what’s likely to go wrong – perverse incentives, asymmetric information
• Measurements of what is going wrong – patching cycle, malware, fraud
• Recommendations – what actors can likely do what
• Policy recommendations now being adopted in both the USA and Europe (but often twisted by lobbyists)
• Now growing into behavioral economics, psychology

Security economics 101
• High fixed/low marginal costs, network effects and switching costs all tend to lead to dominant-firm markets with big first-mover advantage
• So time-to-market is critical
• Microsoft philosophy of ‘we’ll ship it Tuesday and get it right by version 3’ was quite rational
• This is why platforms have so many bugs!
• Bad guys who are motivated by economics write malware for the dominant platforms
• So buggy dominant platforms get exploited

Who’ll catch the bad guys?
• Suppose you’re the Commissioner of the Metropolitan police
• A bad guy in Moscow sends out 10^6 phish
• London’s 1% of the Internet, so you see 10^4
• Do you say
  – (a) “right, let’s spend £500k trying to identify this villain and extradite him”; or
  – (b) “the FBI will have seen 200,000 of these; let them do the heavy lifting!”

Tragedy of the commons
• Are we heading for a world with global systems and distributed harm, where law enforcement doesn’t give local incremental benefits?
• Who benefits from the systems we are compelled to trust, and who maintains them?
• As for emergent globalised phenomena – who can deal with them, or cares enough to try?
• What sort of institutions are eventually needed? A new feudalism? Reinvention of the state?
• Meantime, how can we minimise losses?

Security economics and policy
• Theory’s all very well, but what about data?
• 2008: ‘Security Economics and the Single Market’ report looked at cybercrime and what governments could do about it
• 2011: ‘Resilience of the Internet Interconnection Ecosystem’ examined critical infrastructure and made recommendations
• 2012: ‘Measuring the Cost of Cybercrime’ sets out to debunk myths and scaremongering

‘Measuring the Cost of Cybercrime’
• Undertaken at request of Sir Mark Welland, then chief scientific adviser at the MoD
• Coauthors: Chris Barton, Rainer Böhme, Richard Clayton, Michel van Eeten, Michael Levi, Tyler Moore, Stefan Savage
• We set out to estimate cybercrime losses from publicly available data
• We use EU definition of cybercrime as
  – Traditional frauds now done by electronic means
  – Uniquely electronic crimes such as DDoS, hacking

Decomposing the cost of cybercrime
• Many existing studies conflate different things. We broke up costs as
  – Criminal revenue (gross crime receipts)
  – Direct losses (losses, damage, suffering)
  – Defence costs
  – Indirect losses (costs in anticipation such as defence; costs in consequence such as opportunity costs)
• We ran a separate account of the costs of common crime infrastructure such as botnets

Cybercrimes we considered
• Online banking fraud
• ‘Stranded traveler’ scams
• Fake antivirus
• Advance fee fraud
• IP-infringing pharmaceuticals
• IP-infringing music, software
• Bank card fraud and forgery
• PABX fraud
• Cyber-espionage and extortion
• Tax and welfare fraud

Categories used for this list: pure cybercrime, transitional crime, traditional crime

Proceeds of pure cybercrime

| Type | UK | Global | Period |
|---|---|---|---|
| Online bank fraud | | | |
| – phishing | $16m | $320m | 2007 |
| – malware | $10m | $370m | 2010 |
| – bank defences | $50m | $1000m | 2010 |
| Fake AV | $5m | $97m | 2010 |
| Infringing software | $1m | $22m | 2010 |
| Infringing music etc | $7m | $150m | 2011 |
| Infringing pharma | $14m | $288m | 2010 |
| Stranded traveler | $1m | $20m | 2011 |
| Fake escrow | $10m | $200m | 2010 |
| Advance fee fraud | $50m | $200m | 2011 |

Costs of transitional cybercrime

| Type | UK | Global | Period |
|---|---|---|---|
| Online card fraud | $210m | $4200m | 2010 |
| Offline card fraud | | | |
| – domestic | $106m | $2100m | 2010 |
| – international | $147m | $2940m | 2010 |
| – bank / merchant defences | $120m | $2400m | 2010 |
| Indirect costs of payment fraud | | | |
| – confidence (consumer) | $700m | $10000m | 2010 |
| – confidence (merchant) | $1600m | $20000m | 2009 |
| PABX fraud | $185m | $4960m | 2011 |

Cost of traditional crime becoming cyber

| Type | UK | Global | Period |
|---|---|---|---|
| Welfare fraud | $1900m | $20000m | 2011 |
| Tax fraud | $12000m | $125000m | 2011 |
| Corruption? … | | | |

The infrastructure supporting cybercrime
• Much of the infrastructure is common to many scams (spam, botnets, …)
• Indirect losses and defence costs are also affected by many scams (loss of trust, antivirus software …)
• To save double counting we measured these separately

Cost of cybercriminal infrastructure

| Type | UK | Global | Period |
|---|---|---|---|
| Antivirus | $170m | $3400m | 2011 |
| Patching cost | $50m | $1000m | 2010 |
| Clean-up (ISPs) | $2m | $40m | 2010 |
| Clean-up (users) | $500m | $10000m | 2011 |
| Defence (firms) | $500m | $10000m | 2010 |
| Policing | $15m | $400m | 2010 |

[NB: most of this is extra IT industry turnover!]
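
The infrastructure figures above can be totalled in a few lines of Python. This is a minimal sketch, not part of the talk: it assumes the six rows can simply be added even though their periods differ (2010 and 2011), and the names `INFRASTRUCTURE_COSTS_M`, `totals` and `policing_share` are illustrative.

```python
# Cost of cybercriminal infrastructure in millions of US dollars,
# copied from the table above as (UK, global) pairs.
# Assumption: rows are treated as additive despite differing periods.
INFRASTRUCTURE_COSTS_M = {
    "Antivirus": (170, 3400),
    "Patching cost": (50, 1000),
    "Clean-up (ISPs)": (2, 40),
    "Clean-up (users)": (500, 10000),
    "Defence (firms)": (500, 10000),
    "Policing": (15, 400),
}


def totals(costs):
    """Return (UK total, global total) in millions of dollars."""
    uk = sum(uk_cost for uk_cost, _ in costs.values())
    world = sum(global_cost for _, global_cost in costs.values())
    return uk, world


def policing_share(costs):
    """Return policing as a fraction of the global infrastructure total."""
    _, world = totals(costs)
    return costs["Policing"][1] / world


if __name__ == "__main__":
    uk_total, global_total = totals(INFRASTRUCTURE_COSTS_M)
    print(f"UK: ${uk_total}m, global: ${global_total}m")
    share = policing_share(INFRASTRUCTURE_COSTS_M)
    print(f"Policing share of global total: {share:.1%}")
```

On these figures the totals come to $1237m for the UK and $24840m globally, with policing under 2% of the global total.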

Lessons learned – costs by category
• Traditional frauds such as tax and welfare fraud cost each citizen a few hundred pounds/euros/dollars a year
• Transitional frauds such as bank and payment fraud cost each citizen a few tens of pounds/euros/dollars a year
• New cyber frauds such as fake antivirus: a few tens of pounds/euros/dollars a year, but almost all of these are indirect and defence costs

Direct versus indirect costs
• Traditional crimes like tax fraud: the losses are most of it
• Genuine cybercrimes earn criminals little (tens of cents per citizen per category) but impose huge indirect defence and opportunity costs
• E.g. in 2010 the Rustock botnet earned its operators $3.5m via fake pharma, but sent a third of all spam – which cost about $1bn to deal with

Policy lessons
• One conclusion is that we don’t spend anything like the optimal amount on policing!
• The USA does most of the heavy lifting:
  – $100m Federally (FBI, Secret Service, NCFTA)
  – $100m at state and local level
• Other countries largely free-ride
• Some firms spend lots (Google, MS maybe $100m each) but it’s targeted on their concerns; some vendors are net beneficiaries!

Policy lessons (2)
• A second lesson is that infection rates vary hugely across ISPs (two orders of magnitude)
• Big ISPs are generally worse (small ISPs’ peering is at risk if they emit too much spam)
• But still there are large variations within each category of ISP
• The crucial factor is the cost of cleanup
• In Europe, it’s hard to bully users as they just switch. We need better ways to persuade …

Browser warnings
• Browsers throw up warnings we ignore, e.g. if a web page contains malware, or is a phishing site, or has an invalid certificate
• How can we get users to pay attention, for the cases where it actually matters?
• Can we use words, or do we need a face?
• Big experiment at Google (Adrienne Porter Felt): faces in this context don’t seem to help!

Browser warnings (2)
• With David Modic, tested response to
  – Appeal to authority
  – Social compliance
  – Concrete vs vague threats
• Based on much research on psychology of persuasion, and on scam compliance
• We’re also investigating who turned off their browser phishing warnings (or would have had they known how)

Who turns off the warnings?
• Of 496 mTurkers, 17 (3%) turned warnings off, and a further 34 would have if they could
• Reasons given (descending order)
  – False positives
  – Prefers to make own decisions
  – Don’t like the hassle
  – Don’t understand them
• Same rank ordering between those who did turn them off, and those who would have

Who turns off the warnings (2)?
• Some interesting correlations turned up which explain most of the tendency to turn off warnings:
  – Desire for autonomy
  – Trust (in real-world and Facebook friends)
  – Confidence in IT competence
  – Lack of trust in authority (companies too)
  – Not using Windows
  – Gender
• These explain over 80% of the effect!

What stops people clicking?
• The most significant effect was giving concrete warnings as opposed to vague ones
• Some way behind was appeal to authority
• Factors other than our treatments: trust in the browser vendor was strongest, then mistrust of authority
• All factors together explain 60% of the effect
• A strong status quo bias emerged …

Why this might be useful
• Almost all warnings are designed to benefit the warner, not the recipient
• The lawyers will game anything they can
• Then people will learn to screen it out
• Highly specific warnings are the exception, as they are intrinsically hard to game!
• Compare: Google search ads work much better than general display ads

Conclusions
• Malware matters!
Cyber-criminal infrastructure is a serious global public-goods problem
• Rather like environmental degradation…
• While some players have incentives to do some work on the problem (some vendors, ISPs, AV firms, the FBI, academics …), all our efforts combined are less than socially optimal
• Economics & psychology can help understand why, and help us find better ways to cope