
SPOKEN DIALOG SYSTEM FOR

INTELLIGENT SERVICE ROBOTS

Intelligent Software Lab. POSTECH

Prof. Gary Geunbae Lee

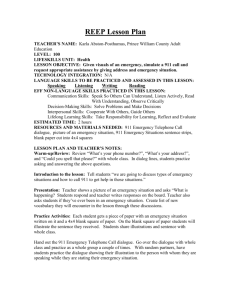

This Tutorial

Introduction to Spoken Dialog System (SDS)

for Human-Robot Interaction (HRI)

Language processing oriented

Brief introduction to SDS

But not signal processing oriented

Mainly based on papers at

ACL, NAACL, HLT, ICASSP, INTERSPEECH, ASRU,

SLT, SIGDIAL, CSL, SPECOM, IEEE TASLP


OUTLINES

INTRODUCTION

AUTOMATIC SPEECH RECOGNITION

SPOKEN LANGUAGE UNDERSTANDING

DIALOG MANAGEMENT

CHALLENGES & ISSUES

MULTI-MODAL DIALOG SYSTEM

DIALOG SIMULATOR

DEMOS

REFERENCES

INTRODUCTION

Human-Robot Interaction (in Movie)

Human-Robot Interaction (in Real World)

What is HRI?

Wikipedia

(http://en.wikipedia.org/wiki/Human_robot_interaction)

Human-robot interaction (HRI) is the study of interactions

between people and robots. HRI is multidisciplinary with

contributions from the fields of human-computer

interaction, artificial intelligence, robotics, natural language

understanding, and social science.

The basic goal of HRI is to develop principles and algorithms

to allow more natural and effective communication and

interaction between humans and robots.

Area of HRI

Vision

Learning

Emotion

Speech

• Signal Processing

• Speech Recognition

• Speech Understanding

• Dialog Management

• Speech Synthesis

Haptics

SPOKEN DIALOG SYSTEM (SDS)

SDS APPLICATIONS

Car-navigation

Tele-service

Home networking

Robot interface

Talk, Listen and Interact

AUTOMATIC SPEECH

RECOGNITION

SCIENCE FICTION

Eagle Eye (2008, D.J. Caruso)

AUTOMATIC SPEECH RECOGNITION

[Figure: a learning algorithm trained on examples (x, y) maps the input x (speech) to the output y (words)]

A process by which an acoustic speech

signal is converted into a set of words

[Rabiner et al., 1993]

NOISY CHANNEL MODEL

GOAL

Find the most likely sequence w of “words” in

language L given the sequence of acoustic

observation vectors O

Treat acoustic input O as sequence of individual observations

O = o1,o2,o3,…,ot

Define a sentence as a sequence of words:

W = w1,w2,w3,…,wn

$$\hat{W} = \arg\max_{W \in L} P(W \mid O)$$

Bayes rule:

$$\hat{W} = \arg\max_{W \in L} \frac{P(O \mid W)\, P(W)}{P(O)}$$

Golden rule:

$$\hat{W} = \arg\max_{W \in L} P(O \mid W)\, P(W)$$
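The decision rule above can be sketched in a few lines. This is only an illustration: the candidate sentences and their scores are made up, and the names `acoustic_logp` and `lm_logp` are hypothetical stand-ins for log P(O|W) and log P(W). Because P(O) is the same for every candidate, it is left out of the comparison.

```python
import math

# Hypothetical scores for three candidate word sequences W given one observation O.
# acoustic_logp stands for log P(O | W); lm_logp stands for log P(W).
candidates = {
    "recognize speech": {"acoustic_logp": -12.0, "lm_logp": math.log(0.010)},
    "wreck a nice beach": {"acoustic_logp": -11.5, "lm_logp": math.log(0.0001)},
    "recognized peach": {"acoustic_logp": -14.0, "lm_logp": math.log(0.001)},
}

def decode(cands):
    """Return argmax_W [log P(O|W) + log P(W)]; the constant P(O) is dropped."""
    return max(cands, key=lambda w: cands[w]["acoustic_logp"] + cands[w]["lm_logp"])

best = decode(candidates)
```

Working in log space turns the product P(O|W) P(W) into a sum, which avoids numerical underflow on long utterances.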

TRADITIONAL ARCHITECTURE

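[Figure: speech signals O → Feature Extraction → Decoding → word sequence W, e.g. "버스 정류장이 어디에 있나요?" ("Where is the bus stop?"). Decoding solves $\hat{W} = \arg\max_{W \in L} P(O \mid W)\, P(W)$ over a network constructed from three models: the Acoustic Model (HMM estimation from a speech DB), the Pronunciation Model (G2P), and the Language Model (LM estimation from text corpora)]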

TRADITIONAL PROCESSES

FEATURE EXTRACTION

Mel-frequency cepstral coefficients (MFCCs) are a popular choice [Paliwal, 1992]

X(n) → Pre-emphasis / Hamming window → FFT (Fast Fourier Transform) → Mel-scale filter bank → log|.| → DCT (Discrete Cosine Transform) → MFCC (12 dimensions)

Frame size: 25 ms / Frame shift: 10 ms

[Figure: overlapping 25 ms analysis windows advanced every 10 ms, producing feature vectors a1, a2, a3, ...]

39 features per 10 ms frame

Absolute: log frame energy (1) and MFCCs (12)

Delta: first-order derivatives of the 13 absolute coefficients

Delta-Delta: second-order derivatives of the 13 absolute coefficients
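How 13 static coefficients become a 39-dimensional vector can be sketched as follows. This assumes a simple central difference for the derivatives (real front ends usually use a regression window instead), and the input values are made up; `deltas`, `stack_features`, and `num_frames` are hypothetical helper names.

```python
def deltas(frames):
    """First-order difference along time: d[t] = (c[t+1] - c[t-1]) / 2, edges clamped."""
    n = len(frames)
    out = []
    for t in range(n):
        prev = frames[max(t - 1, 0)]
        nxt = frames[min(t + 1, n - 1)]
        out.append([(b - a) / 2.0 for a, b in zip(prev, nxt)])
    return out

def stack_features(static):
    """Append delta and delta-delta to each 13-dim static vector, giving 39 dims."""
    d1 = deltas(static)
    d2 = deltas(d1)
    return [s + a + b for s, a, b in zip(static, d1, d2)]

def num_frames(duration_ms, size_ms=25, shift_ms=10):
    """Number of full 25 ms frames in a signal when the window advances by 10 ms."""
    if duration_ms < size_ms:
        return 0
    return 1 + (duration_ms - size_ms) // shift_ms

# Toy input: 5 frames of 13 static coefficients (log energy + 12 MFCCs), values made up.
static = [[float(t + k) for k in range(13)] for t in range(5)]
features = stack_features(static)
```

With these settings, one second of speech yields `num_frames(1000)` frames, each carrying 39 features.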

ACOUSTIC MODEL

Provide P(O|Q) = P(features|phone)

Modeling Units [Bahl et al., 1986]

Context-independent : Phoneme

Context-dependent : Diphone, Triphone, Quinphone

pL-p+pR : left-right context triphone

Typical acoustic model

[Juang et al., 1986]

Continuous-density Hidden Markov Model $\lambda = (A, B, \pi)$

Distribution: Gaussian mixture, $b_j(x_t) = \sum_{k=1}^{K} c_{jk}\, \mathcal{N}(x_t; \mu_{jk}, \Sigma_{jk})$

HMM topology: 3-state left-to-right model for each phone, 1 state for silence or pause

[Figure: output distribution b_j(x) and codebook]

PRONUNCIATION MODEL

Provide P(Q|W) = P(phone|word)

Word Lexicon [Hazen et al., 2002]

Map legal phone sequences into words according to phonotactic

rules

G2P (Grapheme to phoneme) : Generate a word lexicon

automatically

A word may have multiple pronunciations

Example

[Figure: pronunciation network for "tomato": [t] → [ow] (0.2) or [ah] (0.8) → [m] → [ey] (0.5) or [aa] (0.5) → [t] → [ow]; all other arcs have probability 1.0]

P([towmeytow]|tomato) = P([towmaatow]|tomato) = 0.1

P([tahmeytow]|tomato) = P([tahmaatow]|tomato) = 0.4
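The four pronunciation probabilities above follow from multiplying arc probabilities along each path through the network. A minimal sketch, assuming the arc layout implied by those numbers (0.2/0.8 on the first vowel, 0.5/0.5 on the second); `network` and `pronunciations` are hypothetical names:

```python
from itertools import product

# Pronunciation network for "tomato": each position lists (phone, arc probability).
network = [
    [("t", 1.0)],
    [("ow", 0.2), ("ah", 0.8)],
    [("m", 1.0)],
    [("ey", 0.5), ("aa", 0.5)],
    [("t", 1.0)],
    [("ow", 1.0)],
]

def pronunciations(net):
    """Enumerate every path and multiply the arc probabilities along it."""
    result = {}
    for path in product(*net):
        phones = "".join(p for p, _ in path)
        prob = 1.0
        for _, p in path:
            prob *= p
        result[phones] = prob
    return result

table = pronunciations(network)
```

The four path probabilities sum to one, so the network defines a proper distribution P(Q|W) for this word.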

LANGUAGE MODEL

Provide P(W), the probability of the sentence [Beaujard et al., 1999]

We saw this was also used in the decoding process as the

probability of transitioning from one word to another.

Word sequence : W = w1,w2,w3,…,wn

$$P(w_1 \ldots w_n) = \prod_{i=1}^{n} P(w_i \mid w_1 \ldots w_{i-1})$$

The problem is that we cannot reliably estimate the conditional word probabilities $P(w_i \mid w_1 \ldots w_{i-1})$ for all words and all sequence lengths in a given language

n-gram Language Model

n-gram language models use the previous n-1 words to

represent the history

$$P(w_i \mid w_1 \ldots w_{i-1}) \approx P(w_i \mid w_{i-(n-1)} \ldots w_{i-1})$$

Bigrams are easily incorporated in a Viterbi search
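A bigram model (n = 2) can be estimated by counting, as in the sketch below. It assumes maximum-likelihood estimates without smoothing, so any unseen bigram drives the sentence probability to zero; the toy corpus and the function names are illustrative only.

```python
from collections import Counter

def train_bigram(sentences):
    """Maximum-likelihood bigram estimates: P(w | prev) = count(prev, w) / count(prev)."""
    unigrams, bigrams = Counter(), Counter()
    for sent in sentences:
        words = ["<s>"] + sent.split() + ["</s>"]
        for prev, w in zip(words, words[1:]):
            unigrams[prev] += 1
            bigrams[(prev, w)] += 1
    return {pair: c / unigrams[pair[0]] for pair, c in bigrams.items()}

def sentence_prob(model, sentence):
    """Chain rule with a one-word history; unseen bigrams get probability 0."""
    words = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, w in zip(words, words[1:]):
        prob *= model.get((prev, w), 0.0)
    return prob

corpus = ["show me flights", "show me fares", "list flights"]
model = train_bigram(corpus)
p = sentence_prob(model, "show me flights")
```

In practice some form of smoothing or back-off is added so that unseen word pairs keep a small non-zero probability.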

LANGUAGE MODEL

Example

Finite State Network (FSN)

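[Figure: word network over Korean tokens: city (서울 Seoul | 부산 Busan | 대구 Daegu | 대전 Daejeon), 에서 (from), time (세시 three o'clock | 네시 four o'clock), 출발 (departing), 도착 (arriving), 하는 (that is), transport (기차 train | 버스 bus)]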

Context Free Grammar (CFG)

$time = 세시|네시;

$city = 서울|부산|대구|대전;

$trans = 기차|버스;

$sent = $city (에서 $time 출발 | 출발 $city 도착) 하는 $trans

Bigram

P(에서|서울)=0.2 P(세시|에서)=0.5

P(출발|세시)=1.0 P(하는|출발)=0.5

P(출발|서울)=0.5 P(도착|대구)=0.9

…

NETWORK CONSTRUCTION

Expanding every word to state level, we get a

search network [Demuynck et al., 1997]

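[Figure: search network for the Korean digit words 일 (one), 이 (two), 삼 (three), 사 (four). The pronunciation model expands each word into phones (I L, I, S A M, S A) and the acoustic model expands each phone into HMM states. Intra-word transitions connect the states within a word; word transitions enter a word from the start node, where the language model probability P(word | x) is applied; between-word transitions connect the end of one word to the start of the next]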

DECODING

Find $\hat{W} = \arg\max_{W \in L} P(W \mid O)$

Viterbi Search : Dynamic Programming

Token Passing Algorithm [Young et al., 1989]

• Initialize every state with a token that has a null history and the likelihood that the state is a start state

• For each frame a_k

– For each token t in state s with probability P(t) and history H

– For each state r

– Add a new token to r with probability P(t) · P(s,r) · P_r(a_k) and history s.H

• In each state, keep only the most likely token
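The token passing steps can be sketched as a small Viterbi decoder. This is a toy illustration in log space over a two-state HMM with made-up probabilities, not the HTK implementation; each state holds exactly one token, a pair of (log probability, state history).

```python
import math

def viterbi(obs, states, log_start, log_trans, log_emit):
    """Return (best_log_prob, best_state_path) for an observation sequence."""
    # One token per state: (log probability, history of visited states).
    tokens = {s: (log_start[s] + log_emit[s][obs[0]], [s]) for s in states}
    for o in obs[1:]:
        new_tokens = {}
        for r in states:
            # Pass every token into r and keep only the most likely one.
            best_s = max(states, key=lambda s: tokens[s][0] + log_trans[s][r])
            score = tokens[best_s][0] + log_trans[best_s][r] + log_emit[r][o]
            new_tokens[r] = (score, tokens[best_s][1] + [r])
        tokens = new_tokens
    return max(tokens.values(), key=lambda t: t[0])

def logs(table):
    """Convert a dict of probabilities to log probabilities."""
    return {k: math.log(v) for k, v in table.items()}

states = ["A", "B"]
log_start = logs({"A": 0.6, "B": 0.4})
log_trans = {"A": logs({"A": 0.7, "B": 0.3}), "B": logs({"A": 0.4, "B": 0.6})}
log_emit = {"A": logs({"x": 0.9, "y": 0.1}), "B": logs({"x": 0.2, "y": 0.8})}

score, path = viterbi(["x", "x", "y"], states, log_start, log_trans, log_emit)
```

Keeping a single token per state is what makes the search dynamic programming: the cost grows linearly with the number of frames rather than exponentially with the number of paths.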

HTK

Hidden Markov Model Toolkit (HTK)

A portable toolkit for building and manipulating

hidden Markov models [Young et al., 1996]

- HShell : User I/O & interaction with OS

- HLabel : Label files

- HLM : Language model

- HNet : Network and lattices

- HDict : Dictionaries

- HVQ : VQ codebooks

- HModel : HMM definitions

- HMem : Memory management

- HGraf : Graphics

- HAdapt : Adaptation

- HRec : Main recognition processing functions

SUMMARY

[Figure: summary of ASR. A learning algorithm trained on examples (x, y) estimates the acoustic, pronunciation, and language models; search network construction combines them; decoding maps the input x (speech) to the output y (words)]

Speech Understanding

= Spoken Language Understanding (SLU)

SPEECH UNDERSTANDING (in general)

Speech segment → meaning representation → computer program

Speaker ID / Language ID: Dave / English

Named Entity / Relation: LOC = pod bay, OBJ = door

Syntactic / Semantic Role: Open=Verb, the=Det. ...

Topic / Intent: Control the Spaceship

Summary: Open the doors.

Sentiment / Opinion: Nervous

SQL: select * from DOORS where ...

SPEECH UNDERSTANDING (in SDS)

[Figure: a learning algorithm trained on examples (x, y) maps the input x (speech or words) to the output y (intentions)]

A process by which natural language speech is mapped to a frame structure encoding its meaning [Mori et al., 2008]

LANGUAGE UNDERSTANDING

What is the difference between NLU and SLU?

Robustness; noise and ungrammatical spoken language

Domain-dependent; further deep-level semantics (e.g. Person vs. Cast)

Dialog; dependent on dialog history, with utterance-by-utterance analysis

Traditional approaches; natural language to SQL conversion

[Figure: Speech → ASR → Text → SLU → Semantic Frame → SQL Generation → SQL → Database → Response]

A typical ATIS system (from [Wang et al., 2005])

REPRESENTATION

Semantic frame (slot/value structure)

[Gildea and Jurafsky, 2002]

An intermediate semantic representation to serve as the

interface between user and dialog system

Each frame contains several typed components called slots. The

type of a slot specifies what kind of fillers it is expecting.

“Show me flights from Seattle to Boston”

ShowFlight

<frame name='ShowFlight' type='void'>
  <slot type='Subject'>FLIGHT</slot>
  <slot type='Flight'>
    <slot type='DCity'>SEA</slot>
    <slot type='ACity'>BOS</slot>
  </slot>
</frame>

[Figure: hierarchical representation: ShowFlight → Subject (FLIGHT) and Flight → Departure_City (SEA), Arrival_City (BOS)]

Semantic representation on ATIS task; XML format (left) and hierarchical representation (right) [Wang et al., 2005]

SEMANTIC FRAME

Meaning Representations for Spoken Dialog

System

Slot type 1: Intent, Subject Goal, Dialog Act (DA)

The meaning (intention) of an utterance at the discourse level

Slot type 2: Component Slot, Named Entity (NE)

The identifier of entity such as person, location, organization,

or time. In SLU, it represents domain-specific meaning of a

word (or word group).

Ex) Find Korean restaurants in Daeyidong, Pohang

<frame domain='RestaurantGuide'>
  <slot type='DA' name='SEARCH_RESTAURANT'/>
  <slot type='NE' name='CITY'>Pohang</slot>
  <slot type='NE' name='ADDRESS'>Daeyidong</slot>
  <slot type='NE' name='FOOD_TYPE'>Korean</slot>
</frame>

HOW TO SOLVE

Two Classification Problems

Dialog Act Identification

Input: Find Korean restaurants in Daeyidong, Pohang

Output: SEARCH_RESTAURANT

Named Entity Recognition

Input: Find Korean restaurants in Daeyidong, Pohang

Output: Korean = FOOD_TYPE, Daeyidong = ADDRESS, Pohang = CITY

PROBLEM FORMALIZATION

Encoding:

x:  Find  Korean       restaurants  in  Daeyidong  ,  Pohang  .
y:  O     FOOD_TYPE-B  O            O   ADDRESS-B  O  CITY-B  O
z:  SEARCH_RESTAURANT

x is an input (word), y is an output (NE), and z is another

output (DA).

Vector x = {x1, x2, x3, …, xT}

Vector y = {y1, y2, y3, …, yT}

Scalar z

Goal: modeling the functions y=f(x) and z=g(x)
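Once y and z have been predicted, they can be turned back into a semantic frame. The sketch below assumes the NAME-B tag style used in the encoding, plus a NAME-I continuation tag for multi-word entities, which is an assumption not shown in this example; `decode_slots` is a hypothetical helper.

```python
def decode_slots(words, tags):
    """Collect named-entity slots from per-word tags of the form NAME-B / NAME-I / O."""
    slots, current_name, current_words = [], None, []
    for word, tag in zip(words, tags):
        name, _, position = tag.rpartition("-")
        if tag != "O" and position == "I" and name == current_name:
            # Continuation of the entity that is currently open.
            current_words.append(word)
            continue
        if current_name is not None:
            # Any other tag closes the open entity.
            slots.append((current_name, " ".join(current_words)))
            current_name, current_words = None, []
        if tag != "O" and position == "B":
            current_name, current_words = name, [word]
    if current_name is not None:
        slots.append((current_name, " ".join(current_words)))
    return slots

x = ["Find", "Korean", "restaurants", "in", "Daeyidong", ",", "Pohang", "."]
y = ["O", "FOOD_TYPE-B", "O", "O", "ADDRESS-B", "O", "CITY-B", "O"]
z = "SEARCH_RESTAURANT"

frame = {"DA": z, "NE": dict(decode_slots(x, y))}
```

The resulting `frame` carries the same information as the XML frame for the restaurant example: one dialog act and three named-entity slots.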

CASCADE APPROACH I

Named Entity → Dialog Act

[Figure: Automatic Speech Recognition → (x) → Sequential Labeling (Named Entity / Frame Slot), using a sequential labeling model (e.g. HMM, CRFs) → (x, y) → Classification (Dialog Act / Intent), using a classification model (e.g. MaxEnt, SVM) → (x, y, z) → Dialog Management]

CASCADE APPROACH II

Dialog Act → Named Entity

[Figure: Automatic Speech Recognition → (x) → Classification (Dialog Act / Intent), using a classification model (e.g. MaxEnt, SVM) → (x, z) → Sequential Labeling (Named Entity / Frame Slot), using multiple sequential models selected by z (e.g. intent-dependent) → (x, y, z) → Dialog Management]

Improves NE, but not DA.

JOINT APPROACH

Named Entity ↔ Dialog Act

[Figure: Automatic Speech Recognition → (x) → Joint Inference over Sequential Labeling (Named Entity / Frame Slot) and Classification (Dialog Act / Intent), using a joint model (e.g. TriCRFs) [Jeong and Lee, 2006] → (x, y, z) → Dialog Management]

MACHINE LEARNING FOR SLU

Relational Learning (RL) or Structured Prediction (SP)

[Dietterich, 2002; Lafferty et al., 2001; Sutton and McCallum, 2006]

Structured or relational patterns are important because they can be exploited to improve the prediction accuracy of our classifier

Argmax search (e.g. Sum-Max, Belief propagation, Viterbi

etc)

For language processing, RL typically uses a left-to-right structure (a.k.a. linear-chain or sequence structure)

Algorithms: CRFs, Max-Margin Markov Networks (M3N), SVM for Interdependent and Structured Output (SVM-ISO), Structured Perceptron, etc.

MACHINE LEARNING FOR SLU

Background: Maximum Entropy (a.k.a logistic regression)

Conditional and discriminative manner

Unstructured! (no dependency in y)

[Figure: dialog act classification problem: the label z is connected to the input x through feature functions h_k]

Conditional Random Fields [Lafferty et al. 2001]

Structured versions of MaxEnt (argmax search in inference)

Undirected graphical models

Popular in language and text processing

Linear-chain structure for practical implementation

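[Figure: named entity recognition problem: a linear chain of labels y_{t-1}, y_t, y_{t+1} over inputs x_{t-1}, x_t, x_{t+1}, with feature functions f_k on neighboring labels and g_k between each label and its input]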
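For the unstructured case, a MaxEnt classifier scores each dialog act z with a weighted sum of feature functions and normalizes with a softmax. The sketch below uses hand-set weights and a hypothetical second label `ASK_WEATHER` purely for illustration; in a real system the weights are learned from training examples.

```python
import math

def maxent_probs(features, weights, labels):
    """P(z | x) = exp(sum_k w_k h_k(x, z)) / Z(x), with binary features keyed by (feature, label)."""
    scores = {z: sum(weights.get((f, z), 0.0) for f in features) for z in labels}
    m = max(scores.values())  # subtract the maximum for numerical stability
    exp_scores = {z: math.exp(s - m) for z, s in scores.items()}
    total = sum(exp_scores.values())
    return {z: e / total for z, e in exp_scores.items()}

labels = ["SEARCH_RESTAURANT", "ASK_WEATHER"]  # ASK_WEATHER is a made-up label
weights = {
    ("word=restaurants", "SEARCH_RESTAURANT"): 2.0,
    ("word=Find", "SEARCH_RESTAURANT"): 0.5,
    ("word=weather", "ASK_WEATHER"): 2.0,
}
features = ["word=Find", "word=Korean", "word=restaurants"]

probs = maxent_probs(features, weights, labels)
best = max(probs, key=probs.get)
```

A linear-chain CRF uses the same exponential form, but normalizes over whole label sequences and therefore needs an argmax search such as Viterbi at inference time.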

SUMMARY

Dialog Act Identification: solved by an isolated (independent) classifier such as Naïve Bayes or MaxEnt

Input: Find Korean restaurants in Daeyidong, Pohang

Output: SEARCH_RESTAURANT

Named Entity Recognition: solved by a structured (relational) classifier such as HMM or CRFs

Input: Find Korean restaurants in Daeyidong, Pohang

Output: Korean = FOOD_TYPE, Daeyidong = ADDRESS, Pohang = CITY

Coffee Break

DIALOG MANAGEMENT

DIALOG MANAGEMENT

[Figure: a learning algorithm trained on examples (x, y) maps the input x (words or intentions) to the output y (system response)]

A central component of a dialog system

to produce system responses with

external knowledge sources

[McTear, 2004]

DIALOG MANAGEMENT

GOAL

Answer your query (e.g., question and order)

given the task domain

It includes :

Provide query results

Ask for further slot information

Confirm the user utterance

Notify the user of an invalid query

Suggest an alternative

Related to dialog complexity and task complexity.

In practice

Find the best system action a given the dialog state s

DESIGN ISSUES

Task complexity

How hard is the task?

How much domain knowledge does the system have?

[Figure: task complexity scale from simple to complex: Call Routing, Weather Information, Automatic Banking, Conversational English Tutoring, Collaborative Planning]

DESIGN ISSUES

Dialog complexity

What dialog phenomena are allowed

Initiative strategies

e.g., system-initiative vs. user-initiative vs. mixed-initiative

Meta-dialogs; the dialog itself

e.g., Could you hold on for a minute?

Subdialogs; clarification/confirmation

e.g., You selected KE airlines, is it right?

Multiple dialog threads

e.g., domain switching

DIALOG EXAMPLES

Example 1

S: Where are you going?

U: Gangnam, Seoul

S: What kind of food would you like in Gangnam, Seoul?

U: African food, please.

S: Sorry, there are no African restaurants. Please select another food.

System-initiative

Implicit confirmation

Merely informs user of failed query

Rigid dialog flow

DIALOG EXAMPLES

Example 2

S: May I help you?

U: I’d like to have African food in Gangnam, Seoul

S: Sorry, there are no African restaurants.

S: There are Korean, Japanese, Indian, and American

restaurants in Gangnam.

U: Korean restaurants, please.

S: Would you like to find Korean restaurants?

U: Yes, please.

Mixed-initiative

Implicit/Explicit confirmation

Suggests the alternative when query fails

More natural dialog flow

DIALOG EXAMPLES

Example 3

U: I’d like to have African food in Gangnam, Seoul

S: Sorry, there are no African restaurants.

S: How about American restaurants in Gangnam, Seoul?

U: No I don’t like it.

S: What is your favorite food?

U: I like grilled and seasoned beef

S: So, how about Korean restaurants?

U: Good.

Mixed-initiative

Implicit/Explicit confirmation

Recommends the alternative when query fails

Most natural dialog flow

DIALOG CONTROL

Finite-state based approach

Input: single word or phrase

State transition network (or graph)

Allows only the legal dialog flows that are pre-defined in the state diagram

Frame-based approach

Input: natural language with concept spotting

Form-filling tasks to access an information source

The questions do not have to be asked in a predetermined sequence

Plan-based approach

Input: unrestricted natural language

Models dialog as collaboration between intelligent agents to solve some problem or task

For more complex tasks, such as negotiation and problem solving
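The frame-based approach can be sketched as a form that accepts slots in any order and asks only for what is still missing. The slot names come from the restaurant example; the slot order in `REQUIRED_SLOTS` and the action tuples are assumptions made for illustration.

```python
REQUIRED_SLOTS = ["CITY", "ADDRESS", "FOOD_TYPE"]  # hypothetical form definition

def update(frame, user_slots):
    """Merge whatever slots the user supplied, in any order."""
    merged = dict(frame)
    merged.update(user_slots)
    return merged

def next_action(frame):
    """Ask for the first unfilled slot; once the form is full, run the query."""
    for slot in REQUIRED_SLOTS:
        if frame.get(slot) is None:
            return ("request", slot)
    return ("query", dict(frame))

frame = {}
a1 = next_action(frame)                                          # nothing known yet
frame = update(frame, {"FOOD_TYPE": "Korean", "CITY": "Pohang"})  # user gives two slots at once
a2 = next_action(frame)
frame = update(frame, {"ADDRESS": "Daeyidong"})
a3 = next_action(frame)
```

A finite-state manager would instead hard-code the question order, so a user who volunteers the food type first could not be handled without extra transitions.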

KNOWLEDGE-BASED DM (KBDM)

Rule-based approaches

Early KBDMs were developed with handcrafted rules (e.g., information state update).

Simple example [Larsson and Traum, 2000]

Agenda-based approaches

Recent KBDMs were developed with domain-specific knowledge and a domain-independent dialog engine.

VoiceXML

What is VoiceXML?

The HTML(XML) of the voice web.

The open standard markup language for voice applications

VoiceXML Resources : http://www.voicexml.org/

Can do

Rapid implementation and management

Integrated with World Wide Web

Mixed-Initiative dialogue

Simple Dialogue implementation solution

VoiceXML EXAMPLE

S: Say one of: Sports scores; Weather information; Log

in.

U: Sports scores

<vxml version="2.0" xmlns="http://www.w3.org/2001/vxml">
  <menu>
    <prompt>Say one of: <enumerate/></prompt>
    <choice next="http://www.example.com/sports.vxml">
      Sports scores
    </choice>
    <choice next="http://www.example.com/weather.vxml">
      Weather information
    </choice>
    <choice next="#login">
      Log in
    </choice>
  </menu>
</vxml>

AGENDA-BASED DM

RavenClaw DM (CMU)

Using Hierarchical Task Decomposition

A set of all possible dialogs in the domain

Tree of dialog agents

Each agent handles the corresponding part of the dialog

task

[Bohus and Rudnicky, 2003]

EXAMPLE-BASED DM (EBDM)

Example-based approaches

Dialog corpus, Turn #1 (Domain = Building_Guidance)

USER: 회의 실 이 어디 지 ? ("Where is the meeting room?")

[Dialog Act = WH-QUESTION]

[Main Goal = SEARCH-LOC]

[ROOM-TYPE = 회의실 (meeting room)]

SYSTEM: 3층에 교수회의실, 2층에 대회의실, 소회의실이 있습니다. ("The faculty meeting room is on the 3rd floor, and the large and small meeting rooms are on the 2nd floor.")

[System Action = inform(Floor)]

Dialog example, indexed by semantic and discourse features

Domain = Building_Guidance

Dialog Act = WH-QUESTION

Main Goal = SEARCH-LOC

ROOM-TYPE=1 (filled), ROOM-NAME=0 (unfilled)

LOC-FLOOR=0, PER-NAME=0, PER-TITLE=0

Previous Dialog Act = <s>, Previous Main Goal = <s>

Discourse History Vector = [1,0,0,0,0]

Lexico-semantic Pattern = ROOM_TYPE 이 어디 지 ? ("Where is ROOM_TYPE?")

System Action = inform(Floor)

Retrieve the example with the most similar state in the dialog state space:

$$e^* = \arg\max_{e_i \in E} S(e_i, h)$$

[Lee et al., 2009]
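The retrieval step e* = argmax S(e_i, h) can be sketched as a filter followed by a similarity ranking. The similarity used here, the fraction of matching entries in the discourse history vector, is only a stand-in assumption and not necessarily the measure of [Lee et al., 2009]; the second and third examples and their actions are made up.

```python
def similarity(example, state):
    """Hypothetical S(e, h): fraction of matching entries in the discourse history vector."""
    a, b = example["history"], state["history"]
    return sum(1 for x, y in zip(a, b) if x == y) / len(a)

def select_example(examples, state):
    """Filter by semantic keys, then return the example with the highest similarity."""
    keys = ("domain", "dialog_act", "main_goal")
    candidates = [e for e in examples if all(e[k] == state[k] for k in keys)]
    if not candidates:
        return None
    return max(candidates, key=lambda e: similarity(e, state))

EXAMPLES = [
    {"domain": "Building_Guidance", "dialog_act": "WH-QUESTION", "main_goal": "SEARCH-LOC",
     "history": [1, 0, 0, 0, 0], "action": "inform(Floor)"},
    {"domain": "Building_Guidance", "dialog_act": "WH-QUESTION", "main_goal": "SEARCH-LOC",
     "history": [1, 1, 0, 0, 0], "action": "inform(RoomLocation)"},  # made-up example
    {"domain": "Building_Guidance", "dialog_act": "REQUEST", "main_goal": "GUIDE-LOC",
     "history": [0, 1, 0, 0, 0], "action": "guide(Room)"},  # made-up example
]

state = {"domain": "Building_Guidance", "dialog_act": "WH-QUESTION",
         "main_goal": "SEARCH-LOC", "history": [1, 0, 0, 0, 0]}
best = select_example(EXAMPLES, state)
```

When no example matches the semantic keys, the function returns `None`, which a full system would handle by relaxing the query or falling back to a default action.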

STOCHASTIC DM

Supervised approaches [Griol et al., 2008]

Find the best system action a that maximizes the conditional probability P(a|s) given the dialog state s

Based on supervised learning algorithms

MDP/POMDP-based approaches [Williams and Young, 2007]

Find the optimal system action that maximizes the reward function R(a|s) given the belief state

Based on reinforcement learning algorithms

In general, the dialog state space is too large, so generalizing the current dialog state is important
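The supervised variant can be sketched as a relative-frequency estimate of P(a|s) followed by an argmax. The state here is a coarse tuple (dialog act, main goal, filled slot), which is one possible way of generalizing the dialog state; the corpus, the action names, and the fallback action are made up for illustration.

```python
from collections import Counter, defaultdict

def train_policy(corpus):
    """Estimate P(a | s) by relative frequency over (state, action) pairs."""
    counts = defaultdict(Counter)
    for state, action in corpus:
        counts[state][action] += 1
    policy = {}
    for state, actions in counts.items():
        total = sum(actions.values())
        policy[state] = {a: c / total for a, c in actions.items()}
    return policy

def best_action(policy, state, fallback="request(rephrase)"):
    """argmax_a P(a | s); unseen states fall back to a fixed action."""
    if state not in policy:
        return fallback
    return max(policy[state], key=policy[state].get)

corpus = [
    (("WH-QUESTION", "SEARCH-LOC", "ROOM-TYPE"), "inform(Floor)"),
    (("WH-QUESTION", "SEARCH-LOC", "ROOM-TYPE"), "inform(Floor)"),
    (("WH-QUESTION", "SEARCH-LOC", "ROOM-TYPE"), "request(ROOM-NAME)"),
    (("REQUEST", "GUIDE-LOC", "ROOM-NAME"), "guide(Room)"),
]
policy = train_policy(corpus)
s = ("WH-QUESTION", "SEARCH-LOC", "ROOM-TYPE")
action = best_action(policy, s)
```

The fallback branch shows why state generalization matters: any state that never occurred in the corpus has no estimate at all.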

Dialog as a Markov Decision Process

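[Figure: the user, with user goal $s_u$ and dialog history $s_d$, produces a user dialog act $a_u$. Speech Understanding passes a noisy estimate $\tilde{a}_u$ to the State Estimator, which maintains the machine state $\tilde{s}_m = (\tilde{a}_u, \tilde{s}_u, \tilde{s}_d)$. The Dialog Policy maps the machine state to a machine dialog act $a_m$, which Speech Generation delivers to the user. Each turn earns a reward $r(s_m, a_m)$, and Reinforcement Learning optimizes the MDP policy for the total reward $R = \sum_k r_k$]

[Williams and Young, 2007]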

SUMMARY

[Figure: input x (words or intentions) → dialog model → output y (system response). The dialog model draws on an external DB and a dialog corpus and can follow an agenda-based, stochastic, or example-based approach]

Demo

Building guidance dialog

TV program guide dialog

Multi-domain dialog with chatting

CHALLENGES & ISSUES

MULTI-MODAL DIALOG SYSTEM

MULTI-MODAL DIALOG SYSTEM

[Figure: a learning algorithm trained on examples (x, y) maps the inputs x (speech, gesture, face) to the output y (system response)]

MULTIMODAL DIALOG SYSTEM

A system which supports human-computer

interaction over multiple different input and/or

output modes.

Input: voice, pen, gesture, facial expression, etc.

Output: voice, graphical output, etc.

Applications

GPS

Information guide system

Smart home control

Etc.

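[Figure: the user says "여기에서 여기로 가는 제일 빠른 길 좀 알려 줘." ("Tell me the fastest way from here to here.") by voice, with accompanying pen input]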

MOTIVATION

Speech: the Ultimate Interface?

(+) Interaction style: natural (use free speech)

(+) Richer channel: conveys the speaker's disposition and emotional state (if systems knew how to deal with that)

(-) Inconsistent input (high error rates), and errors are hard to correct

e.g., we may get a different result each time we speak the same words

Natural repair process for error recovery

(-) Slow (sequential) output style: using TTS (text-to-speech)

How to overcome these weak points?

Multimodal interface!!

ADVANTAGES

Task performance and user preference

Migration of Human-Computer Interaction

away from the desktop

Adaptation to the environment

Error recovery and handling

Special situations where mode choice helps

TASK PERFORMANCE AND USER

PREFERENCE

Task performance and user preference for

multimodal over speech only interfaces [Oviatt et

al., 1997]

10% faster task completion,

23% fewer words, (Shorter and simpler linguistic constructions)

36% fewer task errors,

35% fewer spoken disfluencies,

90-100% user preference to interact this way.

• Speech-only dialog system

Speech: Bring the drink on the table to the side of bed

• Multimodal dialog system

Speech: Bring this to here

Pen gesture: (shown in figure)

Easy, simplified user utterance!

MIGRATION OF HCI AWAY FROM THE

DESKTOP

Small portable computing devices

Such as PDAs, organizers, and smart-phones

Limited screen real estate for graphical output

Limited input: no keyboard or mouse (only arrow keys, thumbwheel)

Complex GUIs not feasible

Augment limited GUI with natural modalities such as speech and

pen

Other devices

Kiosks, car navigation system…

Use less space

Rapid navigation over menu hierarchy

No mouse or keyboard

Speech + pen gesture

ADAPTATION TO THE ENVIRONMENT

Multimodal interfaces enable rapid adaptation to

changes in the environment

Allow user to switch modes

Mobile devices that are used in multiple environments

Environmental conditions can be either physical or

social

Physical

Noise: increases in ambient noise can degrade speech performance → switch to GUI or stylus pen input

Brightness: bright light in outdoor environments can limit the usefulness of a graphical display

Social

Speech may be easiest for passwords, account numbers, etc., but in public places users may be uncomfortable being overheard → switch to GUI or keypad input

ERROR RECOVERY AND HANDLING

Advantages for recovery and reduction of

error:

Users intuitively pick the mode that is less error-prone.

Language is often simplified.

Users intuitively switch modes after an error

The same problem is not repeated.

Multimodal error correction

Cross-mode compensation - complementarity

Combining inputs from multiple modalities can reduce

the overall error rate

Multimodal interface has potentially

SPECIAL SITUATIONS WHERE MODE

CHOICE HELPS

Users with disability

People with a strong accent or a cold

People with RSI

Young children or non-literate users

Other users who have problems handling the standard devices: mouse and keyboard

Multimodal interfaces let people choose their

preferred interaction style depending on the

actual task, the context, and their own

preferences and abilities.

Demo

Multimodal dialog in smart home domain

English teaching dialog

CHALLENGES & ISSUES

DIALOG SIMULATOR

SYSTEM EVALUATION

Real User Evaluation

[Figure: real interaction between a real user and the spoken dialog system]

Reflects the real world (+)

High cost (-)

Human factor: it loses objectivity (-)

SYSTEM EVALUATION

Simulated User Evaluation

[Figure: simulated interaction between a simulated user and the spoken dialog system in a virtual environment]

Low cost (+)

Consistent evaluation: it guarantees objectivity (+)

Not the real world (-)

SYSTEM DEVELOPMENT

Exposing System to Diverse Environment

Spoken Dialog System

•Different users

•Noises

•Unexpected focus shift

USER SIMULATION

System Output

Simulated User Input

Spoken Dialog System

Simulated Users

PROBLEMS

User Simulation for spoken dialog systems

involves four essential problems [Jung et al., 2009]

User Intention Simulation

User Utterance Simulation

Spoken Dialog System

Simulated Users

ASR Channel Simulation

USER INTENTION SIMULATION

Goal

Generating appropriate user intentions given the

current dialog state

P( user_intention | dialog_state)

Example

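U1: Let's go to a Chinese restaurant nearby.

S1: Haengdang-dong has Bukgyeong, Aseowon, and Sion Banjeom; Hongik-dong has Jeonggung Junghwayori; Doseon-dong has Yangjagang.

U2: What is there in Samseong-dong? (삼성동에는 뭐가 있지?)

Semantic Frame (Intention)

Dialog act: WH-QUESTION

Main Goal: SEARCH-LOC

Named Entity: LOC_ADDRESS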
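Intention simulation amounts to sampling from P(user_intention | dialog_state). The sketch below assumes a hand-written table with made-up state names and probabilities; a data-driven simulator would estimate this distribution from a dialog corpus instead. Only the (WH-QUESTION, SEARCH-LOC, LOC_ADDRESS) intention comes from the example above.

```python
import random

# Hypothetical P(user_intention | dialog_state); an intention is (dialog act, main goal, named entity).
INTENTION_MODEL = {
    "system_informed_restaurants": [
        (("WH-QUESTION", "SEARCH-LOC", "LOC_ADDRESS"), 0.6),
        (("REQUEST", "GUIDE-LOC", "LOC_NAME"), 0.4),
    ],
    "dialog_start": [
        (("REQUEST", "SEARCH-LOC", "FOOD_TYPE"), 1.0),
    ],
}

def sample_intention(dialog_state, rng):
    """Draw one user intention according to the conditional distribution for this state."""
    options = INTENTION_MODEL[dialog_state]
    intentions = [intent for intent, _ in options]
    weights = [w for _, w in options]
    return rng.choices(intentions, weights=weights, k=1)[0]

rng = random.Random(7)  # fixed seed so that simulated dialogs are reproducible
samples = [sample_intention("system_informed_restaurants", rng) for _ in range(5)]
```

Seeding the generator is what makes a simulated evaluation repeatable across system versions.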

USER UTTERANCE SIMULATION

Goal

Generating natural languages given the user

intentions

P( user_utterance | user_intention )

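Semantic Frame (Intention)

Dialog act: WH-QUESTION

Main Goal: SEARCH-LOC

Named Entity: LOC_ADDRESS

• 삼성동에는 뭐가 있지? ("What is there in Samseong-dong?")

• 삼성동 쪽에 뭐가 있지? ("What is there around Samseong-dong?")

• 삼성동에 있는 것은 뭐니? ("What are the places in Samseong-dong?")

• …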

ASR CHANNEL SIMULATION

Goal

Generating noisy utterances from a clean

utterance at certain error rates

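P(utter_noisy | utter_clean, error_rate)

Clean utterance

• 삼성동에는 뭐가 있지? ("What is there in Samseong-dong?")

Noisy utterances

• 삼성동에 뭐 있니? (particles dropped or changed)

• 삼정동에는 뭐가 있지? (place name misrecognized as "Samjeong-dong")

• 상성동 뭐 가니? (place name and verb misrecognized)

• 삼성동에는 무엇이 있니? (words substituted, meaning preserved)

• …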
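A very simple ASR channel can be simulated by corrupting each word independently with probability `error_rate`. This is a sketch under strong assumptions: word-level errors only, substitutions and deletions only, and a hand-written confusion table taken from the noisy examples above. It is not the method of [Jung et al., 2009].

```python
import random

# Hypothetical confusion table: clean word -> possible misrecognitions.
CONFUSIONS = {
    "삼성동에는": ["삼정동에는"],
    "뭐가": ["뭐"],
    "있지": ["있니"],
}

def simulate_asr_channel(words, error_rate, confusions, rng):
    """Corrupt each word independently with probability error_rate."""
    noisy = []
    for w in words:
        if rng.random() < error_rate:
            op = rng.choice(["substitute", "delete"])
            if op == "substitute" and w in confusions:
                noisy.append(rng.choice(confusions[w]))
            # A deletion, or a word with no known confusion, emits nothing.
        else:
            noisy.append(w)
    return noisy

clean = ["삼성동에는", "뭐가", "있지"]
noisy = simulate_asr_channel(clean, 0.3, CONFUSIONS, random.Random(1))
```

Sweeping `error_rate` from 0 upward gives a controlled way to test how gracefully the dialog manager degrades as recognition gets worse.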

AUTOMATED DIALOG SYSTEM

EVALUATION

[Jung et al., 2009]

Demo

Self-learned dialog system

Translating dialog system

REFERENCES

REFERENCES

ASR (1/2)

L.R. Rabiner and B.H. Juang, 1993. Fundamentals of Speech Recognition,

Prentice-Hall.

L.R. Bahl, P.F. Brown, P.V. de Souza, and R.L. Mercer, 1986. Maximum

mutual information estimation of hidden Markov model parameters for

speech recognition, Proceedings of 1986 IEEE International Conference

on Acoustics, Speech and Signal Processing, pp.49–52.

K.K. Paliwal, 1992. Dimensionality reduction of the enhanced feature set for the HMM-based speech recognizer, Digital Signal Processing, vol.2, pp.157–173.

B.H. Juang, S.E. Levinson, and M.M. Sondhi, 1986. Maximum likelihood

estimation for multivariate mixture observations of Markov chains, IEEE

Transactions on Information Theory, vol.32, no.2, pp.307–309.

T.J. Hazen, I.L. Hetherington, H. Shu, and K. Livescu, 2002.

Pronunciation modeling using a finite-state transducer representation,

Proceedings of the ISCA Workshop on Pronunciation Modeling and

Lexicon Adaptation, pp.99–104.

REFERENCES

ASR (2/2)

K. Demuynck, J. Duchateau, and D.V. Compernolle, 1997. A static

lexicon network representation for cross-word context dependent

phones, Proceedings of the 5th European Conference on Speech

Communication and Technology, pp.143–146.

S.J. Young, N.H. Russell, and J.H.S Thornton, 1989. Token passing: a

simple conceptual model for connected speech recognition systems.

Technical Report CUED/F-INFENG/TR.38, Cambridge University

Engineering Department.

S. Young, J. Jansen, J. Odell, D. Ollason, and P. Woodland, 1996. The

HTK book. Entropics Cambridge Research Lab., Cambridge, UK.

HTK website: http://htk.eng.cam.ac.uk/

REFERENCES

SLU

R. De Mori et al. Spoken Language Understanding for Conversational

Systems. Signal Processing Magazine. 25(3):50-58. 2008.

Y. Wang, L. Deng, and A. Acero. September 2005. Spoken Language Understanding: An introduction to the statistical framework. IEEE Signal Processing Magazine, 22(5):16-31.

D. Gildea, and D. Jurafsky. 2002. Automatic labeling of semantic roles.

Computational Linguistics, 28(3):245-288.

M. Jeong and G.G. Lee, 2006. Jointly predicting dialog act and named

entity for spoken language understanding, IEEE/ACL workshop on SLT.

T. G. Dietterich, 2002. Machine learning for sequential data: A review.

Caelli(Ed.) Structural, Syntactic, and Statistical Pattern Recognition.

J. Lafferty, A. McCallum, and F. Pereira. 2001. Conditional Random Fields:

Probabilistic models for segmenting and labeling sequence data. ICML.

C. Sutton and A. McCallum, 2006. An introduction to conditional random

fields for relational learning. In Introduction to Statistical Relational

Learning. L. Getoor and B. Taskar, Eds. MIT Press.

REFERENCES

DM

M. F. McTear, Spoken Dialogue Technology - Toward the Conversational

User Interface: Springer Verlag London, 2004.

S. Larsson, and D. R. Traum, “Information state and dialogue management in the TRINDI dialogue move engine toolkit,” Natural Language Engineering, vol. 6, pp. 323-340, 2000.

D. Bohus, and A. Rudnicky, “RavenClaw: Dialog Management Using

Hierarchical Task Decomposition and an Expectation Agenda,” in Proc. of

the European Conference on Speech, Communication and Technology,

2003, pp. 597-600.

D. Griol, L. F. Hurtado, E. Segarra et al., “A statistical approach to

spoken dialog systems design and evaluation,” Speech Communication,

vol. 50, no. 8-9, pp. 666-682, 2008.

J. D. Williams, and S. Young, “Partially observable Markov decision

processes for spoken dialog systems,” Computer Speech and Language,

vol. 21, pp. 393-422, 2007.

C. Lee, S. Jung, S. Kim et al., “Example-based Dialog Modeling for

Practical Multi-domain Dialog System,” Speech Communication, vol. 51,

no. 5, pp. 466-484, 2009.

REFERENCES

MULTI-MODAL DIALOG SYSTEM & DIALOG

SIMULATOR

S. L. Oviatt , A. DeAngeli, and K. Kuhn, 1997, Integration and

synchronization of input modes during multimodal human-computer

interaction. In Proceedings of Conference on Human Factors in

Computing Systems: CHI '97.

R. Lopez-Cozar, A. D. la Torre, J. C. Segura et al., “Assessment of

dialogue systems by means of a new simulation technique,” Speech

Communication, vol. 40, no. 3, pp. 387-407, 2003.

J. Schatzmann, B. Thomson, K. Weilhammer et al., “Agenda-based User

Simulation for Bootstrapping a POMDP Dialogue System,” in Proc. of the

Human Language Technology/North American Chapter of the Association

for Computational Linguistics, 2007, pp. 149-152.

S. Jung, C. Lee, K. Kim et al., “Data-driven user simulation for

automated evaluation of spoken dialog systems,” Computer Speech and

Language, 2009.

Thank You & QA