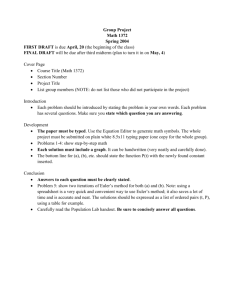

Chapter 6 Numerical Methods for Ordinary Differential Equations

6.1 The Initial Value Problem: Background

Example 6.1

Example 6.2

Example 6.3

Example 6.4

Methods

To solve a differential equation problem: if f is “smooth enough”, then
– a solution will exist and be unique
– we will be able to approximate it accurately with a wide variety of methods

Two ways of expressing “smooth enough”:
– Lipschitz continuity
– Smooth and uniformly monotone decreasing

Definitions 6.1 and 6.2

Example 6.5

Theorem 6.1

Proof: See Goldstine’s book.

6.2 Euler’s Method

We have treated Euler’s method in Chapter 2. There are two main derivations of Euler’s method:
– Geometric derivation
– Analytic derivation

Geometric Derivation

Analytic Derivation

Error Estimation for Euler’s Method

The analytic derivation gives both the residual for Euler’s method and the truncation error.

Example 6.6

6.3 Analysis of Euler’s Method

The error in Euler’s method is O(h).

Proof: pp. 323-324 (you can study it on your own).

Theorem 6.4 (Error Estimate for Euler’s Method, Version II). Let f be smooth and uniformly monotone decreasing in y, and assume that the solution $y \in C^2([t_0, T])$ for some $T > t_0$. Then, for h sufficiently small,

$$\max_{t_k \le T} |y(t_k) - y_k| \le C_0 |y(t_0) - y_0| + C h \|y''\|_{\infty,[t_0,T]},$$

where $C_0 \le 1$, $C_0 \to 0$ as $k \to \infty$, and $C = \frac{1}{2m} + O(h)$.

Proof: pp. 324-325 (you can study it on your own).

Discussion

Both error theorems show that Euler’s method is only first-order accurate (O(h)).
– If f is only Lipschitz continuous, then the constants multiplying the initial error and the mesh parameter can be quite large and can grow rapidly.
– If f is smooth and uniformly monotone decreasing in y, then the constants in the error estimate are bounded for all n.

Discussion

How is the initial error affected by f?
– If f is monotone decreasing in y, then the effect of the initial error decreases rapidly as the computation progresses.
– If f is only Lipschitz continuous, then any initial error that is made could be amplified to something exponentially large.

6.4 Variants of Euler’s Method

Euler’s method is not the only, or even the best, scheme for approximating solutions to initial value problems. Several ideas can be considered based on some simple extensions of one derivation of Euler’s method.

We start with the differential equation $y' = f(t, y)$ and replace the derivative with the simple difference quotient derived in (2.1). What happens if we use other approximations to the derivative?

If we use a different O(h) approximation to the derivative, then we get the backward Euler method (Method 1).

If we use an $O(h^2)$ approximation to the derivative, then we get the midpoint method (Method 2).

If we use the two $O(h^2)$ approximations based on interpolation (Section 4.5), then we get two more numerical methods (Method 3 and Method 4).

By integrating the differential equation we get (6.23). Applying the trapezoid rule, with its $O(h^3)$ error, to (6.23) gives Method 5, equation (6.25).

We can instead use a midpoint rule approximation, also with $O(h^3)$ error, to integrate (6.23) and get Method 6.

Discussion

What about these methods? Are any of them any good?

Observations
– Methods 2, 3, and 4 are all based on derivative approximations that are $O(h^2)$, thus they are more accurate than Euler’s method and Method 1 (both O(h)).
– Similarly, Methods 5 and 6 are also more accurate.
– Methods 2, 3, and 4 are not single-step methods, but multistep methods. They depend on information from more than one previous approximate value of the unknown function.
– Concerning Methods 1, 4, and 5, all of these formulas involve the unknown $y_{n+1}$ on both sides, so we cannot explicitly solve for the new approximate value. Thus these methods are called implicit methods.
– Methods 2 and 3 are called explicit methods.
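The contrast between a first-order explicit method and a second-order implicit one can be sketched in a few lines of Python. The slide equations are not reproduced above, so the formulas below are the standard textbook forms of Euler's method and the trapezoid rule method. The test problem y' = -y, y(0) = 1, the function names, and the number of fixed-point sweeps are illustrative assumptions, not taken from the slides.

```python
import math

def decay(t, y):
    # Hypothetical test problem y' = -y, y(0) = 1; exact solution is exp(-t).
    return -y

def euler(f, t0, y0, h, n):
    """Explicit Euler: y_{n+1} = y_n + h f(t_n, y_n)."""
    t, y = t0, y0
    for _ in range(n):
        y = y + h * f(t, y)
        t += h
    return y

def trapezoid(f, t0, y0, h, n, sweeps=50):
    """Implicit trapezoid rule method:
    y_{n+1} = y_n + (h/2) (f(t_n, y_n) + f(t_{n+1}, y_{n+1})).
    The implicit equation is solved by a simple fixed-point iteration
    (an assumed choice; it converges only when h is small enough).
    """
    t, y = t0, y0
    for _ in range(n):
        fn = f(t, y)
        y_new = y + h * fn  # Euler step as the initial guess
        for _ in range(sweeps):
            y_new = y + 0.5 * h * (fn + f(t + h, y_new))
        y = y_new
        t += h
    return y

exact = math.exp(-1.0)
for h, n in [(0.1, 10), (0.05, 20)]:
    e_euler = abs(euler(decay, 0.0, 1.0, h, n) - exact)
    e_trap = abs(trapezoid(decay, 0.0, 1.0, h, n) - exact)
    print(f"h={h}: Euler error {e_euler:.2e}, trapezoid error {e_trap:.2e}")
```

Halving h should roughly halve the Euler error and roughly quarter the trapezoid error, which is what first-order and second-order accuracy mean in practice.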
6.4.1 The Residual and Truncation Error

Definition 6.3

Example 6.7

Example 6.8

Definition 6.4

6.4.2 Implicit Methods and Predictor-Corrector Schemes

How do we get the value of $y_{n+1}$? By using Newton’s method, the secant method, or a fixed-point iteration. Let $y = y_{n+1}$ and write the implicit recursion as $F(y) = 0$. Newton’s method uses the correction $F(y)/F'(y)$; replacing $F'(y)$ with a difference quotient gives the correction $F(y)\,h/(F(y+h) - F(y))$.

Predictor-corrector idea: can we use a much cruder means of estimating $y_{n+1}$?

Example 6.11

Example 6.12

Discussion

Generally speaking, unless the differential equation is very sensitive to changes in the data, a simple predictor-corrector method will be just as good as the more time-consuming process of solving for the exact value of $y_{n+1}$ that satisfies the implicit recursion.

Discussion

If the differential equation is linear, we can entirely avoid the problem of implicitness by writing out the general linear ODE and solving the recursion for $y_{n+1}$ directly.

6.4.3 Starting Values and Multistep Methods

How do we find the starting values?

Example 6.15

6.4.4 The Midpoint Method and Weak Stability

Discussion

What is going on here?
– It is the weak stability problem.
– The problem is not caused by rounding error.
– The problem is inherent in the midpoint method and would occur in exact arithmetic.
– Why? (pp. 340-342)

6.5 Single-step Methods: Runge-Kutta

The Runge-Kutta family of methods is one of the most popular families of accurate solvers for initial value problems.

Consider the more general method:

Residual

Rewriting the formula for R, we get

Solution 1

Solution 2

Solution 3

Runge-Kutta Method

Example 6.16

One major drawback of the Runge-Kutta methods is that they require more evaluations of the function f than other methods.
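The cost in function evaluations can be made concrete with a short sketch. The code below uses the classical fourth-order Runge-Kutta formula, which is assumed here and may not be the exact variant shown on the slides. The test problem y' = -y, y(0) = 1 and the names `rk4_solve` and `decay` are illustrative.

```python
import math

def rk4_solve(f, t0, y0, h, n):
    """Advance y' = f(t, y) by n steps of size h with classical RK4.

    Returns the final approximation and the number of calls made to f.
    """
    evals = 0
    t, y = t0, y0
    for _ in range(n):
        k1 = f(t, y)
        k2 = f(t + 0.5 * h, y + 0.5 * h * k1)
        k3 = f(t + 0.5 * h, y + 0.5 * h * k2)
        k4 = f(t + h, y + h * k3)
        evals += 4  # four evaluations of f per step
        y += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
        t += h
    return y, evals

def decay(t, y):
    # Hypothetical test problem y' = -y, y(0) = 1; exact solution is exp(-t).
    return -y

y_end, evals = rk4_solve(decay, 0.0, 1.0, 0.1, 10)
print("error:", abs(y_end - math.exp(-1.0)), "f evaluations:", evals)
```

Each step costs four evaluations of f, compared with one per step for Euler's method, so the higher accuracy has to be weighed against the extra work when f is expensive.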
6.6 Multistep Methods

6.6.1 The Adams families

The Adams families include the following most popular ones:
– Explicit methods: Adams-Bashforth families
– Implicit methods: Adams-Moulton families

Adams-Bashforth Methods

Discussion

If we assume a uniform grid with mesh spacing h, then the formulas for the coefficients simplify substantially, and they are routinely tabulated:

Example 6.17

Table 6.6

Adams-Moulton Methods

If $k = -1$, then $t_{n-k} = t_{n+1}$.

Example 6.18

6.6.2 The BDF Family

BDF: backward difference formula

The BDF Method

$k = -1$

6.7 Stability Issues

Definition 6.6

A root having multiplicity n = 1 is called a simple root. For example, $f(z) = (z-1)(z-2)$ has a simple root at $z = 1$, but $g(z) = (z-1)^2$ has a root of multiplicity 2 at $z = 1$, which is therefore not a simple root.

Discussion

Example 6.19
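As a small illustration of the explicit Adams family from Section 6.6.1, here is a sketch of the two-step Adams-Bashforth method on a uniform grid, using the standard tabulated coefficients 3/2 and -1/2. A single Euler step is used to produce the starting value; that starter, the test problem y' = -y, y(0) = 1, and the names `ab2_solve` and `decay` are assumptions made for this example.

```python
import math

def ab2_solve(f, t0, y0, h, n):
    """Two-step Adams-Bashforth on a uniform grid:
    y_{n+1} = y_n + h (3/2 f(t_n, y_n) - 1/2 f(t_{n-1}, y_{n-1})).
    The second starting value y_1 comes from one Euler step
    (an assumed, deliberately crude choice). Takes n steps in total.
    """
    f_prev = f(t0, y0)
    y = y0 + h * f_prev      # Euler starter for y_1
    t = t0 + h
    for _ in range(n - 1):
        f_curr = f(t, y)     # only one new evaluation of f per step
        y = y + h * (1.5 * f_curr - 0.5 * f_prev)
        f_prev = f_curr
        t += h
    return y

def decay(t, y):
    # Hypothetical test problem y' = -y, y(0) = 1; exact solution is exp(-t).
    return -y

exact = math.exp(-1.0)
for h, n in [(0.1, 10), (0.05, 20)]:
    print(f"h={h}: error {abs(ab2_solve(decay, 0.0, 1.0, h, n) - exact):.2e}")
```

The method reuses the previous value of f, so it needs only one new function evaluation per step while still reducing the error by roughly a factor of four when h is halved.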