Unit 5 - PointerLog

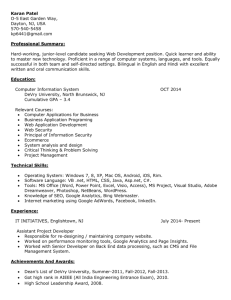


Unit 5

Analytics

• Business Analytics

The use of analytical methods, either

manually or automatically, to derive

relationships from data

CLUSTER ANALYSIS

What is Cluster analysis?

Cluster analysis is a group of multivariate techniques whose

primary purpose is to group objects (e.g., respondents,

products, or other entities) based on the characteristics they

possess.

It is a means of grouping records based upon attributes that

make them similar. If plotted geometrically, the objects within

the clusters will be close together, while the distance between

clusters will be farther apart.

* Cluster Variate

- a mathematical representation of the selected set of variables, used to compare the objects’ similarities.

Cluster Analysis

- grouping is based on the distance (proximity)

-you don’t know who or what belongs to which

group. Not even the number of groups.

Application:

• Field of psychiatry - where the characterization of patients on the basis of clusters of symptoms can be useful in the identification of an appropriate form of therapy.

• Biology - used to find groups of genes that have similar functions.

• Information Retrieval - The World Wide Web consists of billions of Web pages, and a query to a search engine can return thousands of pages. Clustering can be used to group these search results into a small number of clusters, each of which captures a particular aspect of the query. For instance, a query of “movie” might return Web pages grouped into categories such as reviews, trailers, stars and theaters. Each category (cluster) can be broken into subcategories (sub-clusters), producing a hierarchical structure that further assists a user’s exploration of the query results.

• Climate - Understanding the Earth’s climate requires finding patterns in the atmosphere and ocean. To that end, cluster analysis has been applied to find patterns in the atmospheric pressure of polar regions and areas of the ocean that have a significant impact on land climate.

Common Roles Cluster Analysis can play:

• Data Reduction

- A researcher may be faced with a large number of observations that can be meaningless unless classified into manageable groups. CA can perform this data reduction procedure objectively by reducing the information from an entire population or sample to information about specific groups.

• Hypothesis Generation

- Cluster analysis is also useful when a researcher wishes to develop hypotheses concerning the nature of the data or to examine previously stated hypotheses.

Most Common Criticisms of CA

• Cluster analysis is descriptive, atheoretical, and noninferential. Cluster analysis has no statistical basis upon which to draw inferences from a sample to a population. There is no guarantee of unique solutions, because the cluster membership for any number of solutions is dependent upon many elements of the procedure, and many different solutions can be obtained by varying one or more elements.

• Cluster analysis will always create clusters, regardless of the

actual existence of any structure in the data. When using

cluster analysis, the researcher is making an assumption of some

structure among the objects. The researcher should always

remember that just because clusters can be found does not

validate their existence. Only with strong conceptual support

and then validation are the clusters potentially meaningful and

relevant.

Most Common Criticisms of CA

• The cluster solution is not generalizable because it is

totally dependent upon the variables used as the

basis for the similarity measure. This criticism can be

made against any statistical technique, but cluster

analysis is generally considered more dependent on

the measures used to characterize the objects than

other multivariate techniques, with the cluster variate

completely specified by the researcher. As a result, the

researcher must be especially cognizant of the

variables used in the analysis, ensuring that they have

strong conceptual support.

Objectives of cluster analysis

• Cluster analysis is used for:

– Taxonomy Description. Identifying groups within the data.

– Data Simplification. The ability to analyze groups of similar observations instead of all individual observations.

– Relationship Identification. The simplified structure from CA portrays relationships not revealed otherwise.

• Theoretical, conceptual and practical considerations must be observed when selecting clustering variables for CA:

– Only variables that relate specifically to objectives of the CA are included.

– Variables selected characterize the individuals (objects) being clustered.

How does Cluster Analysis work?

The primary objective of cluster analysis is to

define the structure of the data by placing the

most similar observations into groups. To

accomplish this task, we must address three basic

questions:

– How do we measure similarity?

– How do we form clusters?

– How many groups do we form?

Measuring Similarity

• Similarity represents the degree of correspondence among

objects across all of the characteristics used in the analysis. It

is a set of rules that serve as criteria for grouping or

separating items.

– Correlational measures.

- Less frequently used; large values of r indicate similarity.

– Distance Measures.

Most often used as a measure of similarity, with higher

values representing greater dissimilarity (distance

between cases), not similarity.

Similarity Measure

[Figure: two line charts, Graph 1 and Graph 2, each plotted across Category 1 to Category 4 on a vertical scale of 0 to 7. Annotation: Graph 1 represents a higher level of similarity.]

• Both graphs have the same correlation (r = 1), which implies that they follow the same pattern, but the distances (d’s) are not equal.

Distance Measures

- several distance measures are available, each with specific characteristics.

• Euclidean distance. The most commonly recognized measure, often referred to as straight-line distance.

• Squared Euclidean distance. The sum of the squared differences without taking the square root.

• City-block (Manhattan) distance. Uses the sum of the variables’ absolute differences.

• Chebychev distance. The maximum of the absolute differences in the clustering variables’ values. Frequently used when working with metric (or ordinal) data.

• Mahalanobis distance (D²). A generalized distance measure that accounts for the correlations among variables in a way that weights each variable equally.
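The first four distance measures can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the two objects `x` and `y` are hypothetical values, and Mahalanobis distance is left out because it also needs the covariance matrix of the clustering variables.

```python
import math

def euclidean(p, q):
    """Straight-line distance: square root of the summed squared differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def squared_euclidean(p, q):
    """Sum of squared differences, without taking the square root."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def city_block(p, q):
    """Manhattan distance: sum of the absolute differences."""
    return sum(abs(a - b) for a, b in zip(p, q))

def chebychev(p, q):
    """Maximum absolute difference across the clustering variables."""
    return max(abs(a - b) for a, b in zip(p, q))

# Two hypothetical objects measured on two clustering variables.
x = (3, 2)
y = (7, 5)

print(euclidean(x, y))          # 5.0
print(squared_euclidean(x, y))  # 25
print(city_block(x, y))         # 7
print(chebychev(x, y))          # 4
```

The same pair of objects gets a different distance under each measure, which is why the choice of measure can change the resulting clusters.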

Illustration:

Simple Example

• Suppose a marketing researcher wishes to determine market segments in a community based on patterns of loyalty to brands and stores. A small sample of seven respondents is selected as a pilot test of how cluster analysis is applied. Two measures of loyalty, V1 (store loyalty) and V2 (brand loyalty), were measured for each respondent on a 0-10 scale.

Simple Example

How do we measure similarity?

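Proximity Matrix of Euclidean Distance Between Observations

| Observation | A | B | C | D | E | F | G |
|---|---|---|---|---|---|---|---|
| A | --- | | | | | | |
| B | 3.162 | --- | | | | | |
| C | 5.099 | 2.000 | --- | | | | |
| D | 5.099 | 2.828 | 2.000 | --- | | | |
| E | 5.000 | 2.236 | 2.236 | 4.123 | --- | | |
| F | 6.403 | 3.606 | 3.000 | 5.000 | 1.414 | --- | |
| G | 3.606 | 2.236 | 3.606 | 5.000 | 2.000 | 3.162 | --- |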

How do we form clusters?

• SIMPLE RULE:

– Identify the two most similar (closest) observations not already in the

same cluster and combine them.

– We apply this rule repeatedly to generate a number of cluster

solutions, starting with each observation as its own “cluster” and then

combining two clusters at a time until all observations are in a single

cluster. This process is termed a hierarchical procedure because it

moves in a stepwise fashion to form an entire range of cluster

solutions. It is also an agglomerative method because clusters are

formed by combining existing clusters

How do we form clusters?

Columns 1 to 3 describe the AGGLOMERATIVE PROCESS; columns 4 to 6 describe the CLUSTER SOLUTION.

| Step | Minimum Distance, Unclustered Observations | Observation Pair | Cluster Membership | Number of Clusters | Overall Similarity Measure (Average Within-Cluster Distance) |
|---|---|---|---|---|---|
| Initial Solution | | | (A)(B)(C)(D)(E)(F)(G) | 7 | 0 |
| 1 | 1.414 | E-F | (A)(B)(C)(D)(E-F)(G) | 6 | 1.414 |
| 2 | 2.000 | E-G | (A)(B)(C)(D)(E-F-G) | 5 | 2.192 |
| 3 | 2.000 | C-D | (A)(B)(C-D)(E-F-G) | 4 | 2.144 |
| 4 | 2.000 | B-C | (A)(B-C-D)(E-F-G) | 3 | 2.234 |
| 5 | 2.236 | B-E | (A)(B-C-D-E-F-G) | 2 | 2.896 |
| 6 | 3.162 | A-B | (A-B-C-D-E-F-G) | 1 | 3.420 |

In steps 1, 2, 3 and 4, the overall similarity measure (OSM) does not change substantially, which indicates that we are forming other clusters with essentially the same heterogeneity as the existing clusters. (For example, the step 2 value of 2.192 is the average of the E-F, E-G and F-G distances.)

When we get to step 5, we see a large increase. This indicates that joining clusters (B-C-D) and (E-F-G) resulted in a single cluster that was markedly less homogeneous.
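The simple rule can be replayed in code. The sketch below is a minimal illustration that takes its distances from the proximity matrix of the example and repeatedly merges the two clusters containing the closest pair of observations. The function names are made up for this illustration. When several pairs tie at 2.000, the order of merges may differ from the order shown on the slide, but the three-cluster solution is the same.

```python
from itertools import combinations

# Euclidean distances copied from the proximity matrix of the example.
DIST = {
    ("A", "B"): 3.162, ("A", "C"): 5.099, ("A", "D"): 5.099,
    ("A", "E"): 5.000, ("A", "F"): 6.403, ("A", "G"): 3.606,
    ("B", "C"): 2.000, ("B", "D"): 2.828, ("B", "E"): 2.236,
    ("B", "F"): 3.606, ("B", "G"): 2.236,
    ("C", "D"): 2.000, ("C", "E"): 2.236, ("C", "F"): 3.000,
    ("C", "G"): 3.606,
    ("D", "E"): 4.123, ("D", "F"): 5.000, ("D", "G"): 5.000,
    ("E", "F"): 1.414, ("E", "G"): 2.000,
    ("F", "G"): 3.162,
}

def d(a, b):
    """Look up the distance between two observations in either order."""
    return DIST[(a, b)] if (a, b) in DIST else DIST[(b, a)]

def closest_pair_distance(c1, c2):
    """Smallest distance between any member of c1 and any member of c2."""
    return min(d(a, b) for a in c1 for b in c2)

def agglomerate(labels):
    """Merge the two closest clusters until one cluster remains."""
    clusters = [frozenset([x]) for x in labels]
    history = []
    while len(clusters) > 1:
        c1, c2 = min(combinations(clusters, 2),
                     key=lambda pair: closest_pair_distance(*pair))
        dist = closest_pair_distance(c1, c2)
        clusters = [c for c in clusters if c not in (c1, c2)] + [c1 | c2]
        history.append((dist, list(clusters)))
    return history

history = agglomerate("ABCDEFG")
for dist, clusters in history:
    print(dist, ["-".join(sorted(c)) for c in clusters])
```

After the fourth merge the clusters are (A), (B-C-D) and (E-F-G), and the next merge distance jumps from 2.000 to 2.236, matching the table.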

How many groups do we form?

• Therefore, the three-cluster solution of Step 4 seems the most

appropriate for a final cluster solution, with two equally sized

clusters, (B-C-D) and (E-F-G), and a single outlying observation (A).

This approach is particularly useful in identifying outliers, such as

Observation A. It also depicts the relative size of varying clusters,

although it becomes unwieldy when the number of observations

increases.

Graphical Portrayals

• Dendrogram

- Graphical representation (tree graph) of the results of a hierarchical procedure. Starting with each object as a separate cluster, the dendrogram shows graphically how the clusters are combined at each step of the procedure until all are contained in a single cluster.

Outliers: Removed or Retained?

•

Outliers can severely distort the representativeness of the results if they

appear as structure (clusters) inconsistent with the objectives.

– They should be removed if the outliers represent:

• Aberrant observations not representative of the population

• Observations of small or insignificant segments within the population and of no interest to the research objectives

– They should be retained if they reflect undersampling or poor representation of relevant groups in the population; in that case the sample should be augmented to ensure representation of these groups.

Detecting Outliers

• Outliers can be identified based on the

similarity measure by:

– Finding observations with large distances from all other

observations.

– Graphic profile diagrams highlighting outlying cases.

– Their appearance in cluster solutions as single – member or

small clusters.

Sample Size

• The researcher should ensure that the sample size is large enough

to provide sufficient representation of all relevant groups of the

population

• The researcher must therefore be confident that the obtained

sample is representative of the population.

Standardizing the Data

• Clustering variables that have scales using widely differing numbers of scale points or that exhibit large differences in standard deviations should be standardized.

– The most common standardization conversion is the Z score (with a mean of 0 and a standard deviation of 1).
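A Z-score conversion can be sketched as follows. The two variables are hypothetical and were chosen only to show that, after standardization, a variable measured in large units no longer dominates one measured on a 0-10 scale. The sketch uses the population standard deviation; a sample standard deviation (`statistics.stdev`) is an equally reasonable choice.

```python
import statistics

def z_scores(values):
    """Convert raw values to Z scores (mean 0, standard deviation 1)."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)  # population SD, an assumption of this sketch
    return [(v - mean) / sd for v in values]

# Hypothetical clustering variables on very different scales.
income = [20000, 35000, 50000, 65000, 80000]
loyalty = [2, 4, 6, 8, 10]

z_income = z_scores(income)
z_loyalty = z_scores(loyalty)

print([round(z, 3) for z in z_income])
print([round(z, 3) for z in z_loyalty])
```

Both lists print the same standardized values, so a distance computed on them gives each variable equal weight.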

Deriving Clusters

There are a number of different methods that can be used to carry

out a cluster analysis; these methods can be classified as follows:

Hierarchical Cluster Analysis

Nonhierarchical Cluster Analysis

Combination of Both Methods


Hierarchical Cluster Analysis

• The stepwise procedure attempts to identify relatively homogeneous groups of cases based on selected characteristics, using either an agglomerative or a divisive algorithm. The result is a hierarchy or tree-like structure (dendrogram) depicting the formation of clusters. This is one of the most straightforward methods.

• HCA are preferred when:

– The sample size is moderate (under 300 – 400, not exceeding 1000).

Two Basic Types of HCA

Agglomerative Algorithm

Divisive Algorithm

*Algorithm - defines how similarity is measured between multiple-member clusters in the clustering process.

Agglomerative Algorithm

•

Hierarchical procedure that begins with each object or observation in a

separate cluster. In each subsequent step, the two clusters that are most

similar are combined to build a new aggregate cluster. The process is

repeated until all objects are finally combined into a single cluster. From n

clusters to 1.

• Similarity decreases during successive steps. Clusters can’t be split.

Divisive Algorithm

• Begins with all objects in single cluster, which is then divided at each step

into two additional clusters that contain the most dissimilar objects. The

single cluster is divided into two clusters, then one of these clusters is split

for a total of three clusters. This continues until all observations are in

single-member clusters. From 1 cluster to n sub-clusters.

[Figure: dendrogram (tree graph) contrasting the agglomerative method and the divisive method.]

Agglomerative Algorithms

• Among numerous approaches, the five most popular agglomerative algorithms are:

– Single-Linkage

– Complete-Linkage

– Average-Linkage

– Centroid Method

– Ward’s Method

Agglomerative Algorithms

•

Single – Linkage

– Also called the nearest – neighbor method, defines similarity between

clusters as the shortest distance from any object in one cluster to any

object in the other.

Agglomerative Algorithms

• Complete Linkage

– Also known as the farthest – neighbor method.

– The opposite of the single-linkage approach: it assumes that the

distance between two clusters is based on the maximum

distance between any two members in the two clusters.

Agglomerative Algorithms

• Average Linkage

• The distance between two clusters is defined as the average

distance between all pairs of the two clusters’ members

Agglomerative Algorithms

• Centroid Method

– Cluster Centroids

- are the mean values of the observation on the variables of

the cluster.

– The distance between the two clusters equals the distance

between the two centroids.
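The four definitions of between-cluster distance given so far (single linkage, complete linkage, average linkage and the centroid method) can be compared side by side. This is a minimal sketch using Euclidean distance; the two clusters `left` and `right` are hypothetical points chosen so the results are round numbers.

```python
import math

def single_linkage(c1, c2):
    """Nearest neighbor: shortest distance between any two members."""
    return min(math.dist(p, q) for p in c1 for q in c2)

def complete_linkage(c1, c2):
    """Farthest neighbor: maximum distance between any two members."""
    return max(math.dist(p, q) for p in c1 for q in c2)

def average_linkage(c1, c2):
    """Average distance over all pairs of members from the two clusters."""
    total = sum(math.dist(p, q) for p in c1 for q in c2)
    return total / (len(c1) * len(c2))

def centroid(cluster):
    """Mean value of the observations on each variable."""
    return tuple(sum(coords) / len(cluster) for coords in zip(*cluster))

def centroid_distance(c1, c2):
    """Distance between the two cluster centroids."""
    return math.dist(centroid(c1), centroid(c2))

# Two hypothetical clusters with two members each.
left = [(0, 0), (0, 3)]
right = [(4, 0), (4, 3)]

print(single_linkage(left, right))     # 4.0
print(complete_linkage(left, right))   # 5.0
print(average_linkage(left, right))    # 4.5
print(centroid_distance(left, right))  # 4.0
```

The same two clusters are 4.0, 5.0 or 4.5 units apart depending on the algorithm, which is why different algorithms can merge clusters in a different order.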

Agglomerative Algorithms

• Ward’s Method

– The similarity between two clusters is the

sum of squares within the clusters summed

over all variables.

– Ward's method tends to join clusters with a

small number of observations, and it is

strongly biased toward producing clusters

with the same shape and with roughly the

same number of observations.

Hierarchical Cluster Analysis

• The Hierarchical Cluster Analysis provides an excellent framework with

which to compare any set of cluster solutions.

• This method helps in judging how many clusters should be retained or

considered.

• Text Mining

Mining Text Data: An Introduction

Data Mining / Knowledge Discovery can be applied to several kinds of data:

• Structured Data: HomeLoan ( Loanee: Frank Rizzo, Lender: MWF, Agency: Lake View, Amount: $200,000, Term: 15 years )

• Multimedia: Loans($200K,[map],...)

• Free Text: Frank Rizzo bought his home from Lake View Real Estate in 1992. He paid $200,000 under a 15-year loan from MW Financial.

• Hypertext: <a href>Frank Rizzo</a> Bought <a href>this home</a> from <a href>Lake View Real Estate</a> In <b>1992</b>. <p>...

“Search” versus “Discover”

| | Search (goal-oriented) | Discover (opportunistic) |
|---|---|---|
| Structured Data | Data Retrieval | Data Mining |
| Unstructured Data (Text) | Information Retrieval | Text Mining |

© 2002, AvaQuest Inc.

Data Retrieval

• Find records within a structured

database.

Database Type: Structured

Search Mode: Goal-driven

Atomic entity: Data Record

Example Information Need: “Find a Japanese restaurant in Boston that serves vegetarian food.”

Example Query: “SELECT * FROM restaurants WHERE city = 'boston' AND type = 'japanese' AND has_veg = true”


Information Retrieval

• Find relevant information in an

unstructured information source

(usually text)

Database Type: Unstructured

Search Mode: Goal-driven

Atomic entity: Document

Example Information Need: “Find a Japanese restaurant in Boston that serves vegetarian food.”

Example Query: “Japanese restaurant Boston” or Boston->Restaurants->Japanese


Data Mining

• Discover new knowledge

through analysis of data

Database Type: Structured

Search Mode: Opportunistic

Atomic entity: Numbers and Dimensions

Example Information Need: “Show trend over time in # of visits to Japanese restaurants in Boston”

Example Query: “SELECT date, SUM(visits) FROM restaurants WHERE city = 'boston' AND type = 'japanese' GROUP BY date ORDER BY date”
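The difference between the data retrieval query and the data mining query can be tried out with Python's built-in `sqlite3` module. The table layout, restaurant names and visit counts below are all hypothetical; the slides give only the query text, so this is a sketch of how such queries might behave, not the schema the author had in mind.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE restaurants "
    "(name TEXT, city TEXT, type TEXT, has_veg INTEGER, date TEXT, visits INTEGER)"
)
# Hypothetical rows: one row per restaurant per month.
rows = [
    ("Sakura", "boston", "japanese", 1, "2002-01", 120),
    ("Sakura", "boston", "japanese", 1, "2002-02", 150),
    ("Umi", "boston", "japanese", 0, "2002-01", 80),
    ("Umi", "boston", "japanese", 0, "2002-02", 95),
    ("Trattoria", "boston", "italian", 1, "2002-01", 200),
]
conn.executemany("INSERT INTO restaurants VALUES (?, ?, ?, ?, ?, ?)", rows)

# Data retrieval: goal-driven, returns matching records.
found = conn.execute(
    "SELECT DISTINCT name FROM restaurants "
    "WHERE city = ? AND type = ? AND has_veg = 1",
    ("boston", "japanese"),
).fetchall()

# Data mining style: opportunistic, summarizes numbers along a dimension.
trend = conn.execute(
    "SELECT date, SUM(visits) FROM restaurants "
    "WHERE city = ? AND type = ? GROUP BY date ORDER BY date",
    ("boston", "japanese"),
).fetchall()

print(found)  # [('Sakura',)]
print(trend)  # [('2002-01', 200), ('2002-02', 245)]
```

The first query answers a specific question with a record; the second produces a series of totals in which a trend can be looked for.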


Text Mining

• Discover new knowledge

through analysis of text

Database Type: Unstructured

Search Mode: Opportunistic

Atomic entity: Language feature or concept

Example Information Need: “Find the types of food poisoning most often associated with Japanese restaurants”

Example Query: Rank diseases found associated with “Japanese restaurants”


Motivation for Text Mining

• Approximately 90% of the world’s data is held in unstructured formats (source: Oracle Corporation)

• Information intensive business processes demand that we move beyond simple document retrieval to “knowledge” discovery.

[Chart: 10% Structured Numerical or Coded Information; 90% Unstructured or Semi-structured Information]


Challenges of Text Mining

• Very high number of possible “dimensions”

– All possible word and phrase types in the language!!

• Unlike data mining:

– records (= docs) are not structurally identical

– records are not statistically independent

• Complex and subtle relationships between concepts in text

• Ambiguity and context sensitivity

– automobile = car = vehicle = Toyota

– Apple (the company) or apple (the fruit)


Text Processing

• Statistical Analysis

– Quantify text data

• Language or Content Analysis

– Identifying structural elements

– Extracting and codifying meaning

– Reducing the dimensions of text data


Statistical Analysis

• Use statistics to add a numerical

dimension to unstructured text

Term frequency

Document frequency

Term proximity

Document length
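The four statistics listed above can be computed with the standard library alone. The three short documents are hypothetical, tokenization is a plain whitespace split, and `proximity` is a made-up helper that measures the smallest gap, in token positions, between two terms.

```python
from collections import Counter

# Hypothetical mini-collection of documents.
docs = [
    "the customer hates the statement format",
    "the customer wants a daily balance",
    "daily balance shown on the statement",
]

tokenized = [doc.split() for doc in docs]

# Term frequency: how often each term occurs within each document.
term_freq = [Counter(tokens) for tokens in tokenized]

# Document frequency: in how many documents each term occurs.
doc_freq = Counter(term for tokens in tokenized for term in set(tokens))

# Document length: number of tokens per document.
doc_length = [len(tokens) for tokens in tokenized]

def proximity(tokens, a, b):
    """Smallest distance in token positions between terms a and b, or None."""
    pos_a = [i for i, t in enumerate(tokens) if t == a]
    pos_b = [i for i, t in enumerate(tokens) if t == b]
    if not pos_a or not pos_b:
        return None
    return min(abs(i - j) for i in pos_a for j in pos_b)

print(term_freq[0]["the"])                          # 2
print(doc_freq["statement"])                        # 2
print(doc_length)                                   # [6, 6, 6]
print(proximity(tokenized[1], "daily", "balance"))  # 1
```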


Content Analysis

• Lexical and Syntactic Processing

– Recognizing “tokens” (terms)

– Normalizing words

– Language constructs (parts of speech, sentences, paragraphs)

• Semantic Processing

– Extracting meaning

– Named Entity Extraction (People names, Company Names, Locations,

etc…)

• Extra-semantic features

– Identify feelings or sentiment in text

• Goal = Dimension Reduction


Syntactic Processing

• Lexical analysis

– Recognizing word boundaries

– Relatively simple process in English

• Syntactic analysis

– Recognizing larger constructs

– Sentence and Paragraph Recognition

– Parts of speech tagging

– Phrase recognition


Named Entity Extraction

• Identify and type language features

• Examples:

• People names

• Company names

• Geographic location names

• Dates

• Monetary amount

• Others… (domain specific)
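A toy version of entity extraction can be written with regular expressions. The sketch below is only an illustration of the idea: the patterns are simplistic assumptions, real extractors use dictionaries, grammars or trained models, and the capitalized-phrase pattern finds candidate names without deciding whether they are people, companies or places. The text is the free-text loan example used earlier in these slides.

```python
import re

text = ("Frank Rizzo bought his home from Lake View Real Estate in 1992. "
        "He paid $200,000 under a 15-year loan from MW Financial.")

# Monetary amounts: a dollar sign followed by digits and optional commas.
money = re.findall(r"\$\d[\d,]*(?:\.\d+)?", text)

# Dates: four-digit years starting with 19 or 20 (a deliberately narrow pattern).
years = re.findall(r"\b(?:19|20)\d{2}\b", text)

# Candidate names: two or more consecutive capitalized words (untyped).
names = re.findall(r"\b[A-Z][A-Za-z]+(?: [A-Z][A-Za-z]+)+\b", text)

print(money)  # ['$200,000']
print(years)  # ['1992']
print(names)  # ['Frank Rizzo', 'Lake View Real Estate', 'MW Financial']
```

Typing the candidate names (person, company, location) is the harder step and is where domain knowledge comes in.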


Simple Entity Extraction

“The quick brown fox jumps over the lazy dog”

[Figure: two parts of the sentence are each labeled Noun phrase, then Mammal, then Canidae.]


Entity Extraction in Use

• Categorization

– Assign structure to unstructured content to facilitate

retrieval

• Summarization

– Get the “gist” of a document or document collection

• Query expansion

– Expand query terms with related “typed” concepts

• Text Mining

– Find patterns, trends, relationships between concepts in

text


Extra-semantic Information

• Extracting hidden meaning or sentiment based on

use of language.

– Examples:

• “Customer is unhappy with their service!”

• Sentiment = discontent

• Sentiment is:

– Emotions: fear, love, hate, sorrow

– Feelings: warmth, excitement

– Mood, disposition, temperament, …

• Or even (someday)…

– Lies, sarcasm


Text Mining:

General Applications

• Relationship Analysis

– If A is related to B, and B is related to C, there is

potentially a relationship between A and C.

• Trend analysis

– Occurrences of A peak in October.

• Mixed applications

– Co-occurrence of A together with B peak in

November.


Text Mining:

Business Applications

• Ex 1: Decision Support in CRM

- What are customers’ typical complaints?

- What is the trend in the number of satisfied

customers in England?

• Ex 2: Knowledge Management

– People Finder

• Ex 3: Personalization in e-Commerce

- Suggest products that fit a user’s interest profile

(even based on

personality info).


Example 1:

Decision Support using Bank Call Center

Data

• The Needs:

– Analysis of call records as input into the decision-making process of the Bank’s management

– Quick answers to important questions

• Which offices receive the most angry calls?

• What products have the fewest satisfied

customers?

• (“Angry” and “Satisfied” are recognizable

sentiments)

– User friendly interface and visualization tools


Example 1:

Decision Support using Bank Call Center

Data

• The Information Source:

– Call center records

– Example:

AC2G31, 01, 0101, PCC, 021, 0053352,

NEW YORK, NY, H-SUPRVR8, STMT,

“mr stark has been with the company for

about 20 yrs. He hates his stmt format and

wishes that we would show a daily balance

to help him know when he falls below the

required balance on the account.”


Commercial Tools

• IBM Intelligent Miner for Text

• Semio Map

• InXight LinguistX / ThingFinder

• LexiQuest

• ClearForest

• Teragram

• SRA NetOwl Extractor

• Autonomy


User Interfaces for Text Mining

• Need some way to present results of Text

Mining in an intuitive, easy to manage form.

• Options:

– Conventional text “lists” (1D)

– Charts and graphs (2D)

– Advanced visualization tools (3D+)

• Network maps

• Landscapes

• 3d “spaces”


Charts and Graphs

http://www.cognos.com/


Visualization: Network Maps

http://www.lexiquest.com/


Visualization: Landscapes

http://www.aurigin.com/


Basic Measures for Text Retrieval

[Figure: Venn diagram within the set of All Documents, showing the Relevant set, the Retrieved set, and their overlap (Relevant & Retrieved).]

• Precision: the percentage of retrieved documents that are in fact relevant to the query (i.e., “correct” responses)

$$\text{precision} = \frac{|\{\text{Relevant}\} \cap \{\text{Retrieved}\}|}{|\{\text{Retrieved}\}|}$$

• Recall: the percentage of documents that are relevant to the query and were, in fact, retrieved

$$\text{recall} = \frac{|\{\text{Relevant}\} \cap \{\text{Retrieved}\}|}{|\{\text{Relevant}\}|}$$
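Both measures follow directly from set operations. In this minimal sketch the document identifiers are hypothetical, and returning 0.0 for an empty set is a convention chosen here to avoid division by zero.

```python
def precision_recall(relevant, retrieved):
    """Return (precision, recall) for two sets of document identifiers."""
    hits = relevant & retrieved  # documents that are both relevant and retrieved
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    recall = len(hits) / len(relevant) if relevant else 0.0
    return precision, recall

# Hypothetical query: four relevant documents, three retrieved, two in common.
relevant = {"d1", "d2", "d3", "d4"}
retrieved = {"d3", "d4", "d5"}

precision, recall = precision_recall(relevant, retrieved)
print(round(precision, 3))  # 0.667
print(recall)               # 0.5
```

Retrieving more documents tends to raise recall and lower precision, so the two are usually reported together.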

Types of Text Data Mining

• Keyword-based association analysis

• Automatic document classification

• Similarity detection

– Cluster documents by a common author

– Cluster documents containing information from a common

source

• Link analysis: unusual correlation between entities

• Sequence analysis: predicting a recurring event

• Anomaly detection: find information that violates usual

patterns

• Hypertext analysis

– Patterns in anchors/links

• Anchor text correlations with linked objects

Text Categorization

• Pre-given categories and labeled document

examples (Categories may form hierarchy)

• Classify new documents

• A standard classification (supervised learning) problem

[Figure: a Categorization System assigns incoming documents to categories such as Sports, Business, Education, Science, …]

Applications

• News article classification

• Automatic email filtering

• Webpage classification

• Word sense disambiguation

• ……
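Text categorization as supervised learning can be sketched with a small multinomial naive Bayes classifier. Naive Bayes is one common choice and is used here as an assumption; the slides do not name a specific algorithm. The labeled training sentences are hypothetical.

```python
import math
from collections import Counter, defaultdict

def train(labeled_docs):
    """Count words per category from (text, label) examples."""
    word_counts = defaultdict(Counter)
    class_counts = Counter()
    vocab = set()
    for text, label in labeled_docs:
        tokens = text.lower().split()
        word_counts[label].update(tokens)
        class_counts[label] += 1
        vocab.update(tokens)
    return word_counts, class_counts, vocab

def classify(text, model):
    """Return the category with the highest log-probability for the text."""
    word_counts, class_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label in class_counts:
        score = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for token in text.lower().split():
            # Laplace (add-one) smoothing so unseen words do not zero the score.
            count = word_counts[label][token] + 1
            score += math.log(count / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical labeled examples for three pre-given categories.
training = [
    ("team wins the championship game", "Sports"),
    ("player scores in the final match", "Sports"),
    ("company reports quarterly profit", "Business"),
    ("stock market and company earnings", "Business"),
    ("students and teachers at the university", "Education"),
    ("school exam and student grades", "Education"),
]
model = train(training)

print(classify("the team wins the match", model))            # Sports
print(classify("company profit and stock earnings", model))  # Business
```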

In-memory analytics

• Oracle Exalytics hardware is delivered as a server that is

optimally configured for in-memory analytics for business

intelligence workloads.

• Multiple Oracle Exalytics machines can be clustered

together to expand available memory capacity and to

provide high availability.

• Oracle Exalytics includes several terabytes of DRAM,

distributed across multiple processors, with a high-speed

processor interconnect architecture that is designed to

provide a single hop access to all memory.

• This memory is backed up by several terabytes of Flash

storage and hard disks. These stores can be further backed

by network attached storage, extending the capacity of the

machine and providing data high availability.

In-memory analytics

• The memory and storage are matched with the right amount of

compute capacity.

• A high-performance business intelligence system requires fast

connectivity to data warehouses, operational systems and other

data sources.

• Multiple network interfaces are also required for deployments that

demand high availability, load balancing and disaster recovery.

• Oracle Exalytics provides dedicated high-speed InfiniBand ports for

connecting to Oracle Exadata. These operate at quad data rate of

40Gb/s and provide extreme performance for connecting to both

data warehousing and OLTP data sources.

• Several 10Gb/s Ethernet ports are also available for high-speed

connectivity to other data sources and clients. Where legacy

network interconnectivity is desired, multiple 1Gb/s compatible

ports are also available.

• The Oracle Exalytics In-Memory Machine features an

optimized Oracle Business Intelligence Foundation Suite

and Oracle TimesTen In-Memory Database for Exalytics.

• Business Intelligence Foundation takes advantage of large

memory, processors, concurrency, storage, networking,

operating system, kernel, and system configuration of

Oracle Exalytics hardware.

• This optimization results in better query responsiveness and

higher user scalability.

• Oracle TimesTen In-Memory Database for Exalytics is an

optimized in-memory analytic database, with features

exclusively available on Oracle Exalytics platform.

Oracle Exalytics engineers hardware and software

designed to work together seamlessly

Oracle Exalytics Software Overview

• Oracle Exalytics is designed to run the entire stack

of Oracle Business Intelligence and Enterprise

Performance Management applications. The

following software runs on Exalytics:

• 1. Oracle Business Intelligence Foundation Suite,

including Oracle Essbase

• 2. Oracle TimesTen In-Memory Database for

Exalytics

• 3. Oracle Endeca Information Discovery

• 4. Oracle Exalytics In-Memory Software

Oracle Business Intelligence

Foundation Suite

• Delivers the most complete, open, and integrated business

intelligence platform on the market today.

• Provides comprehensive and complete capabilities for

business intelligence, including enterprise reporting,

dashboards, ad hoc analysis, multi-dimensional OLAP,

scorecards, and predictive analytics on an integrated

platform.

• Includes the industry’s best-in-class server technology for

relational and multi-dimensional analysis and delivers rich

end user experience that includes visualization,

collaboration, alerts and notifications, search and mobile

access.

• Includes Oracle Essbase - the industry leading multidimensional OLAP Server for analytic applications.

Oracle TimesTen In-Memory Database

for Exalytics

• Memory-optimized full featured relational

database with persistence.

• TimesTen stores all its data in memory in

optimized data structures and supports query

algorithms specifically designed for in-memory

processing.

• SQL programming interfaces- provides real-time

data management, fast response times, and very

high throughput for a variety of workloads.

• Specifically enhanced for analytical processing at

in-memory speeds.

Oracle TimesTen In-Memory Database

for Exalytics

Columnar Compression: supports columnar

compression that reduces the memory

footprint for in-memory data.

• Compression ratios of 5X are practical and

help expand in-memory capacity.

• Analytic algorithms are designed to operate

directly on compressed data, thus further

speeding up the in-memory analytics queries.
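The idea of operating directly on compressed columnar data can be illustrated with dictionary encoding, a common columnar technique. This is a generic sketch and an assumption of this illustration; it does not describe the actual compression format or algorithms used by Oracle TimesTen. The column values are hypothetical.

```python
def dictionary_encode(column):
    """Replace each value by a small integer code plus a lookup dictionary."""
    dictionary = []  # code -> original value
    index = {}       # original value -> code
    codes = []
    for value in column:
        if value not in index:
            index[value] = len(dictionary)
            dictionary.append(value)
        codes.append(index[value])
    return dictionary, codes

# Hypothetical column with many repeated values.
region = ["East", "West", "East", "East", "North", "West", "East"]
dictionary, codes = dictionary_encode(region)

# Count the rows for "East" by comparing integer codes, without decoding.
east_code = dictionary.index("East")
east_rows = sum(1 for code in codes if code == east_code)

print(dictionary)  # ['East', 'West', 'North']
print(codes)       # [0, 1, 0, 0, 2, 1, 0]
print(east_rows)   # 4
```

Because the filter compares small integers instead of strings, the query never has to expand the column back to its original values.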


Oracle Exalytics In-memory Software

• Collection of in-memory features, optimizations and configuration

• Certified and supported only on Oracle

Exalytics In-Memory Machine.

• Oracle Exalytics In-Memory Software consists

of

1. Oracle OBIEE Accelerator

2. Oracle Essbase Accelerator

3. Oracle In-Memory Data Caching

4. Oracle BI Publisher Accelerator

• Oracle OBIEE In-Memory Accelerator

• Hardware-specific optimizations to Oracle

Business Intelligence Enterprise Edition (included

in Oracle Business Intelligence Foundation Suite).

• Optimizations make OBIEE specifically tuned for

Oracle Exalytics hardware - for example, the processor

architecture, number of cores, the memory

latency and variations.

• Up to 3X improvement in throughput at high

loads and thus can handle 3X more users than

similarly configured commodity hardware.

• Oracle Essbase In-Memory Accelerator

• Leverage processor concurrency, memory latency, memory

distribution, availability of flash storage, and provides

improvements to overall storage layer performance,

enhancements to parallel operations

• Up to 4X faster query execution as well as reduction in writeback and calculation operations, including batch processes.

• Flash as storage provides 25X faster Oracle Essbase data load

performance and 10X faster Oracle Essbase Calculations when

compared to hard disk drives.

• In-Memory Data Caching

• Multiple ways to use the large memory

available with Oracle Exalytics:

• Oracle In-Memory Intelligent Result Cache

• Oracle In-Memory Adaptive Data Mart

• Oracle In-Memory Table Caching

• Oracle In-Memory Intelligent Result Cache

• Completely reusable in-memory cache, populated

with results of previous logical queries generated

by the server.

• Provides data for repeated queries

• For best query acceleration, Oracle Exalytics

provides tools to analyze usage, as well as

identify and automate the pre-seeding of result

caches. The pre-seeding ensures instant

responsiveness for queries at run time.

• Oracle In-Memory Adaptive Data Mart

• Identifying and creating a data mart for the

relevant “hot” data.

• Implementing the in-memory data mart in Oracle

TimesTen for Exalytics provides the most effective

improvement in query responsiveness for large

data sets.

• Tests with customer data have shown a reduction

of query response times by 20X as well as a

throughput increase of 5X.

• Automated Recommendation:

• Creating a data mart for query acceleration has

often required expensive and error prone manual

research to determine what data aggregates or

cubes from subject areas to cache into memory.

• Oracle Exalytics dramatically reduces, and in

many cases eliminates, costs associated with this

manual tuning by providing the necessary tools

that can be used to identify, create and refresh

the best fit in-memory data mart for a specific

business intelligence deployment

• In-Memory Table Caching

• Selective facts and dimensions from analytic data

warehouses may be cached “as is” into Oracle

TimesTen In-Memory Database on Exalytics.

• In many cases, especially with enterprise

business intelligence data warehouses, including

pre-packaged Business Intelligence Applications

provided by Oracle, the entire data warehouse

may be able to fit entirely in memory.

• In such cases, replication tools like Oracle

GoldenGate and ETL tools like Oracle Data

Integrator can be used to implement table

caching mechanisms into Oracle TimesTen

In-Memory Database for Exalytics.

• Once tables are cached, Oracle Business

Intelligence metadata can be configured to route

queries to the TimesTen In-Memory database,

providing faster query responses for the cached

data.

• Oracle BI Publisher In-Memory Accelerator

• Oracle Business Intelligence Publisher features piped

document generation and high volume bursting that can

enable the generation of an extremely large number of

personalized reports and documents for business users in

time periods that were previously not achievable.

• This is enabled via a configuration setting and the use of

Exalytics’ substantial compute, memory, and multithreading capabilities. Performance can be further

optimized by configuring BI Publisher to use memory disks

(for nonclustered environments only) or solid state drives.

In-database analytics

• Refers to the integration of data analytics into

data warehousing functionality.

• Today, many large databases, such as those

used for credit card fraud detection and

investment bank risk management, use this

technology because it provides significant

performance improvements over traditional

methods.

• Traditional approaches to data analysis require data to be

moved out of the database into a separate analytics

environment for processing, and then back to the database.

(SPSS from IBM is an example of a tool that still does this today.)

• Doing the analysis in the database, where the data resides,

eliminates the costs, time and security issues associated with

the old approach by doing the processing in the data

warehouse itself.

• “Big data” is one of the primary reasons it has become

important to collect, process and analyze data efficiently and

accurately.

• The need for in-database processing has become more

pressing as the amount of data available to collect and

analyze continues to grow exponentially (due largely to the

rise of the Internet), from megabytes to gigabytes, terabytes

and petabytes.

• Also, the speed of business has accelerated to the point

where a performance gain of nanoseconds can make a

difference in some industries. As more people and industries

use data to answer important questions, the questions they

ask become more complex, demanding more sophisticated

tools and more precise results.

• All of these factors in combination have

created the need for in-database processing.

The introduction of the column-oriented

database, specifically designed for analytics,

data warehousing and reporting, has helped

make the technology possible.
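The contrast between the traditional approach and in-database processing can be sketched in a few lines. This is only an illustration: it uses Python's built-in sqlite3 module as a stand-in for a data warehouse, and the transactions table and its values are hypothetical. The first function moves every row out of the database before aggregating, while the second lets the database do the aggregation so that only the summary leaves it.

```python
import sqlite3

# Hypothetical in-memory table standing in for a warehouse fact table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions (card_id TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?)",
    [("A", 20.0), ("A", 35.0), ("B", 900.0), ("B", 1100.0), ("C", 15.0)],
)


def totals_outside_database(conn):
    """Traditional approach: pull every row out, then aggregate in the client."""
    totals = {}
    for card_id, amount in conn.execute("SELECT card_id, amount FROM transactions"):
        totals[card_id] = totals.get(card_id, 0.0) + amount
    return totals


def totals_in_database(conn):
    """In-database approach: the engine aggregates; only the summary is returned."""
    rows = conn.execute(
        "SELECT card_id, SUM(amount) FROM transactions GROUP BY card_id"
    )
    return dict(rows)


print(totals_outside_database(conn))
print(totals_in_database(conn))
```

Both functions return the same totals; the difference is how much data has to cross the boundary between the database and the analytics code.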

Types

• There are three main types of in-database

processing:

1. Translating a model into SQL code,

2. Loading C or C++ libraries into the database

process space as a built-in user-defined function

(UDF),

3. Calling out-of-process libraries, typically written in C,

C++ or Java, and registering them in the database

as built-in UDFs callable from a SQL statement.

1. Translating models into SQL code

• In this type of in-database processing, a

predictive model is converted from its source

language into SQL that can run in the database

usually in a stored procedure.

• Many analytic model-building tools have the

ability to export their models in either SQL or

PMML (Predictive Modeling Markup Language).

• Once the SQL is loaded into a stored procedure,

values can be passed in through parameters and

the model is executed natively in the database.

• Tools that can use this approach include SAS,

SPSS, R and KXEN.
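A minimal sketch of the idea, under stated assumptions: the model is a simple linear scoring model whose coefficients are hypothetical, the claims table is made up for illustration, and sqlite3 stands in for the database. SQLite has no stored procedures, so the generated SQL is run as an ordinary query here rather than loaded into a stored procedure.

```python
import sqlite3

# Hypothetical coefficients of a linear scoring model, as a model-building
# tool might export them. Names and values are illustrative only.
INTERCEPT = -1.0
COEFFICIENTS = {"amount": 0.002, "num_prior_disputes": 0.5}


def model_to_sql(intercept, coefficients):
    """Render the linear model as a SQL arithmetic expression."""
    terms = [repr(float(intercept))]
    terms += [f"({weight!r} * {column})" for column, weight in coefficients.items()]
    return " + ".join(terms)


score_sql = model_to_sql(INTERCEPT, COEFFICIENTS)

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE claims (id INTEGER, amount REAL, num_prior_disputes INTEGER)"
)
conn.executemany(
    "INSERT INTO claims VALUES (?, ?, ?)", [(1, 100.0, 0), (2, 1000.0, 2)]
)

# The model now executes inside the database engine, row by row.
scores = dict(conn.execute(f"SELECT id, {score_sql} AS score FROM claims"))
print(score_sql)
print(scores)
```

The scoring logic travels to the data as SQL text, so no rows need to be exported to a separate analytics environment.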

2. Loading C or C++ libraries into the database process

space

• With C or C++ UDF libraries that run in process, the

functions are typically registered as built-in functions

within the database server and called like any other

built-in function in a SQL statement.

• Running in process allows the function to have full

access to the database server’s memory, parallelism

and processing management capabilities. Because of

this, the functions must be well-behaved so as not to

negatively impact the database or the engine. This type

of UDF gives the highest performance out of any

method for OLAP, mathematical, statistical, univariate

distributions and data mining algorithms.
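The register-then-call pattern can be illustrated with Python's sqlite3 module, which lets a function be registered with the database connection and then called from SQL like a built-in. This is an analogy only: the slides describe C or C++ libraries loaded into a database server, whereas this sketch registers a Python function (a hypothetical logistic helper) with an embedded SQLite engine running in the same process.

```python
import math
import sqlite3


def logistic(x):
    """Map a raw score to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


conn = sqlite3.connect(":memory:")
# Register the function under the SQL name "logistic", taking one argument.
conn.create_function("logistic", 1, logistic)

# Once registered, it is called like any other function in a SQL statement.
probability = conn.execute("SELECT logistic(0.0)").fetchone()[0]
print(probability)
```

Because the function runs inside the engine's process, an exception or a slow computation in it directly affects the query that calls it, which is the reason such functions must be well-behaved.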

3. Out-of-process libraries

• Out-of-process UDFs are typically written in C, C++ or

Java.

• Because they run in their own process space with their

own resources, they do not pose the same risk to the

database or the engine.

• In exchange, they are not expected to match the

performance of an in-process UDF.

• They are registered in the database engine and called through

standard SQL, usually in a stored procedure.

• Out-of-process UDFs are a safe way to extend the

capabilities of a database server and are an ideal way

to add custom data mining libraries.

• Uses

• In-database processing makes data analysis more

accessible and relevant for high-throughput, real-time

applications including fraud detection, credit scoring,

risk management, transaction processing, pricing and

margin analysis, usage-based micro-segmenting,

behavioral ad targeting and recommendation engines,

such as those used by customer service organizations

to determine next-best actions.

Google Analytics

• Is a web analytics service offered by Google

that tracks and reports website traffic

• Google launched the service in November

2005.

• Google Analytics is now the most widely used

web analytics service on the Internet.

History

• Google acquired Urchin Software Corp. in April 2005. Google's

service was developed from Urchin on Demand. The system

also brings ideas from Adaptive Path, whose product,

Measure Map, was acquired and used in the redesign of

Google Analytics in 2006. Google continued to sell the

standalone, installable Urchin WebAnalytics Software through

a network of value-added resellers until discontinuation on

March 28, 2012.

• The Google-branded version was rolled out in November 2005

to anyone who wished to sign up. However, due to extremely

high demand for the service, new sign-ups were suspended

only a week later.


• As capacity was added to the system, Google began using a lottery-type

invitation-code model. Prior to August 2006 Google was sending out

batches of invitation codes as server availability permitted; since mid-August 2006 the service has been fully available to all users – whether

they use Google for advertising or not.

• The newer version of Google Analytics tracking code is known as the

asynchronous tracking code, which Google claims is significantly more

sensitive and accurate, and is able to track even very short activities on

the website. The previous version delayed page loading and so, for

performance reasons, it was generally placed just before the closing

</body> HTML tag. The new code can be placed between the

<head>...</head> HTML tags because, once triggered, it runs in

parallel with page loading.


• In April 2011 Google announced the availability of a new

version of Google Analytics featuring multiple dashboards,

more custom report options, and a new interface design.

This version was later updated with some other features

such as real-time analytics and goal flow charts.

• In October 2012 the latest version of Google Analytics was

announced, called 'Universal Analytics'. The key differences

from the previous versions were: cross-platform tracking,

flexible tracking code to collect data from any device, and

the introduction of custom dimensions and custom metrics.

Features

• Because Google Analytics is integrated with AdWords, users can review online campaigns

by tracking landing page quality and conversions (goals). Goals

might include sales, lead generation, viewing a specific page, or

downloading a particular file.

• Google Analytics' approach is to show high-level, dashboard-type

data for the casual user, and more in-depth data further into the

report set. Google Analytics analysis can identify poorly performing

pages with techniques such as funnel visualization, where visitors

came from, how long they stayed and their geographical position. It

also provides more advanced features, including custom visitor

segmentation.

• Google Analytics e-commerce reporting can track sales activity and

performance. The e-commerce reports show a site's transactions,

revenue, and many other commerce-related metrics.

• On September 29, 2011, Google Analytics launched Real Time

analytics.


• AdWords (Google AdWords) is an advertising service

by Google for businesses wanting to display ads on

Google and its advertising network. The AdWords

program enables businesses to set a budget for

advertising and only pay when people click the ads.

The ad service is largely focused on keywords.

• Google Analytics includes Google Website Optimizer,

rebranded as Google Analytics Content Experiments.

• The Google Analytics Cohort analysis feature helps in

understanding the behavior of component groups of users,

separately from the user population as a whole. It is

beneficial to marketers and analysts for the successful

implementation of a marketing strategy.

• Google Analytics is implemented with "page

tags", in this case called the Google Analytics

Tracking Code (GATC), which is a snippet of

JavaScript code that the website owner adds to

every page of the website. The tracking code runs

in the client browser when the client browses the

page (if JavaScript is enabled in the browser) and

collects visitor data and sends it to a Google data

collection server as part of a request for a web

beacon.

• The tracking code loads a larger JavaScript file from the Google webserver

and then sets variables with the user's account number. The larger file

(currently known as ga.js) is typically 18 KB. The file does not usually have

to be loaded, however, due to browser caching. Assuming caching is

enabled in the browser, it downloads ga.js only once at the start of the

visit. As all websites that implement Google Analytics with the ga.js code

use the same master file from Google, a browser that has previously

visited any other website running Google Analytics will already have the

file cached on their machine.

• In addition to transmitting information to a Google server, the tracking

code sets first party cookies (if cookies are enabled in the browser) on

each visitor's computer. These cookies store anonymous information such

as whether the visitor has been to the site before (new or returning

visitor), the timestamp of the current visit, and the referrer site or

campaign that directed the visitor to the page (e.g., search engine,

keywords, banner, or email).

• If the visitor arrived at the site by clicking on a

link tagged with Urchin Traffic Monitor (UTM)

codes such as:

• http://toWebsite.com?utm_source=fromWebsite&utm_medium=bannerAd&utm_campaign=fundraiser2012

the tag values are passed to the database too.
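As a rough illustration of what the tag values in such a link look like once separated out, the following sketch parses the example URL with Python's standard urllib.parse module. The function name extract_utm_tags is hypothetical, and this says nothing about how Google Analytics itself processes the tags; it only shows the key/value pairs that the URL carries.

```python
from urllib.parse import parse_qs, urlsplit


def extract_utm_tags(url):
    """Return the utm_* query parameters of a URL as a plain dict."""
    query = parse_qs(urlsplit(url).query)
    return {
        key: values[0] for key, values in query.items() if key.startswith("utm_")
    }


tags = extract_utm_tags(
    "http://toWebsite.com?utm_source=fromWebsite"
    "&utm_medium=bannerAd&utm_campaign=fundraiser2012"
)
print(tags)
```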

Limitations

• In addition, Google Analytics for Mobile Package allows Google

Analytics to be applied to mobile websites. The Mobile Package

contains server-side tracking codes that use PHP, JavaServer Pages,

ASP.NET, or Perl for its server-side language.[19]

• Many ad filtering programs and extensions (such as Firefox's

Adblock and NoScript) can block the Google Analytics Tracking

Code. This prevents some traffic and users from being tracked, and

leads to holes in the collected data. Also, privacy networks like Tor

will mask the user's actual location and present inaccurate

geographical data. Some users do not have JavaScript-enabled/capable browsers or turn this feature off. However, these

limitations are considered small, affecting only a small percentage

of visits.


• The largest potential impact on data accuracy comes from users deleting

or blocking Google Analytics cookies. Without cookies being set, Google

Analytics cannot collect data. Any individual web user can block or delete

cookies resulting in the data loss of those visits for Google Analytics users.

Website owners can encourage users not to disable cookies, for example,

by making visitors more comfortable using the site through posting a

privacy policy.

• These limitations affect the majority of web analytics tools which use page

tags (usually JavaScript programs) embedded in web pages to collect

visitor data, store it in cookies on the visitor's computer, and transmit it to

a remote database by pretending to load a tiny graphic "beacon".

• Another limitation of Google Analytics for large websites is the use of

sampling in the generation of many of its reports. To reduce the load on

their servers and to provide users with a relatively quick response for their

query, Google Analytics limits reports to 500,000 randomly sampled visits

at the profile level for its calculations. While margins of error are indicated

for the visits metric, margins of error are not provided for any other

metrics in the Google Analytics reports. For small segments of data, the

margin of error can be very large.
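Why small segments suffer most from sampling can be seen with a textbook calculation. The sketch below uses the standard normal-approximation margin of error for a sampled proportion at roughly 95% confidence; the source does not say how Google Analytics computes its own margins, so this formula, the 2% conversion rate and the segment size of 500 visits are all assumptions for illustration.

```python
import math


def margin_of_error(proportion, sample_size, z=1.96):
    """Approximate 95% margin of error for a proportion estimated from a sample."""
    return z * math.sqrt(proportion * (1.0 - proportion) / sample_size)


# Hypothetical 2% conversion rate measured on the full 500,000-visit sample
# versus a small segment that contains only 500 of the sampled visits.
full_sample = margin_of_error(0.02, 500_000)
small_segment = margin_of_error(0.02, 500)

print(f"full sample:   +/- {full_sample:.4%}")
print(f"small segment: +/- {small_segment:.4%}")
```

The margin grows with the inverse square root of the sample size, so a segment 1,000 times smaller has a margin of error about 32 times larger.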

• Performance concerns

• There have been several online discussions

about the impact of Google Analytics on site

performance. However, Google introduced

asynchronous JavaScript code in December

2009 to reduce the risk of slowing the loading

of pages tagged with the ga.js script.

Privacy issues

• Due to its ubiquity, Google Analytics raises some privacy concerns.

Whenever someone visits a web site that uses Google Analytics, if

JavaScript is enabled in the browser, then Google tracks that visit

via the user's IP address in order to determine the user's

approximate geographic location. To meet German legal

requirements, Google Analytics can anonymize the IP address.

• Google has also released a browser plugin that turns off data about

a page visit being sent to Google. Since this plug-in is produced and

distributed by Google itself, it has met much discussion and

criticism. Furthermore, the realisation of Google scripts tracking

user behaviours has spawned the production of multiple, often

open-source, browser plug-ins to reject tracking cookies. These

plug-ins offer the user a choice, whether to allow Google Analytics

(for example) to track his/her activities. However, partially because

of new European privacy laws, most modern browsers allow users

to reject tracking cookies.
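The IP anonymization mentioned above can be pictured as truncating an address so that it identifies a network rather than an individual machine. The sketch below is a generic illustration using Python's standard ipaddress module; the function name anonymize_ip and the prefix lengths (24 bits kept for IPv4, 48 for IPv6) are assumptions made for this example, since the slides do not state how Google Analytics performs the masking.

```python
import ipaddress


def anonymize_ip(address, ipv4_prefix=24, ipv6_prefix=48):
    """Zero the host bits of an IP address, keeping only the network prefix."""
    ip = ipaddress.ip_address(address)
    prefix = ipv4_prefix if ip.version == 4 else ipv6_prefix
    # strict=False allows an address with host bits set to define the network.
    network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
    return str(network.network_address)


print(anonymize_ip("203.0.113.77"))
print(anonymize_ip("2001:db8:1234:5678::1"))
```

A truncated address still supports an approximate geographic lookup, but it no longer points to a single visitor.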


• It has been reported that, behind proxy servers and multiple

firewalls, errors can occur that change time stamps and

register invalid searches.

• Webmasters who seek to mitigate Google Analytics specific

privacy issues can employ a number of alternatives having

their backends hosted on their own machines. Until its

discontinuation, an example of such a product was Urchin

WebAnalytics Software from Google itself.

• On Jan. 20, 2015, the Associated Press reported in an

article titled: "Government health care website quietly

sharing personal data" that HealthCare.gov is providing

access to enrollees' personal data to private companies that

specialize in advertising. Google Analytics was mentioned in

that article.

Assignment / Test

• What is clustering? Explain its types

• What is text mining?

• What is the difference between in-memory

analytics & in-database analytics?