Diagnostics/Prognostics for Health Management

advertisement

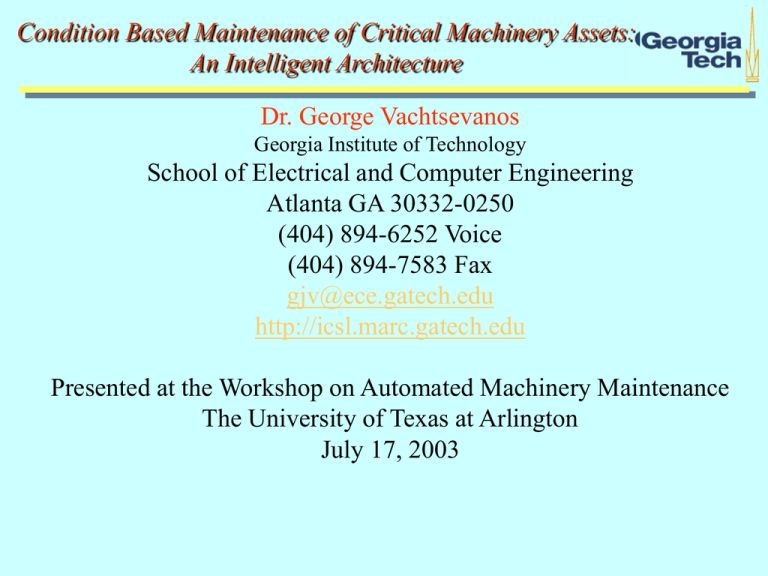

Condition Based Maintenance of Critical Machinery Assets:

An Intelligent Architecture

Dr. George Vachtsevanos

Georgia Institute of Technology

School of Electrical and Computer Engineering

Atlanta GA 30332-0250

(404) 894-6252 Voice

(404) 894-7583 Fax

gjv@ece.gatech.edu

http://icsl.marc.gatech.edu

Presented at the Workshop on Automated Machinery Maintenance

The University of Texas at Arlington

July 17, 2003

Topical Outline

1.

2.

3.

4.

5.

Introduction – What is Condition Based

Maintenance?

Elements of a CBM Architecture

Example Demonstration

An Intelligent Agent Based CBM Paradigm

Future R&D Directions/Concluding

Comments.

Condition-Based Maintenance

The Opportunity

Condition Based

Maintenance (CBM)

promises to deliver

improved maintainability

and operational availability

of naval systems while

reducing life-cycle costs

The Challenge

Prognostics is the Achilles heel of CBM systems - predicting the

time to failure of critical machines requires new and innovative

methodologies that will effectively integrate diagnostic results with

maintenance scheduling practices

Condition Based Maintenance

Objective

– Determine the “optimum” time to perform

maintenance

Problem

Definition

– A scheduling problem – schedule maintenance

timing to meet specified objective criteria under

certain constraints

Condition Based Maintenance

Major

Objective

– Extend system life cycle as much as possible

without endangering its integrity

Enabling Technologies

– Various Optimization Tools

– Genetic Algorithms

– Evolutionary Computing

A Maintenance Management

Architecture

Time-Directed Tasks

• Trend Data

• Logs

Maintenance Schedule

Real-time

Diagnostics /

Prognostics

and

Trend Analysis

• Technical Doc Ref

• Preplanned Work

Corrective Tasks

• Emergent Work

Case Library

Other

Process

Management

Component

(ERP)

Work Order

Backlog

• Material Required

• Labor Required

• Work Procedures

• Actions Taken

• Conditions Found

• Cost Collector

Enabling Technologies

Genetic Algorithms for Optimum Maintenance Scheduling

Case-Based Reasoning and Induction

Cost-Benefit Analysis Studies

CBM Performance Assessment

Objective:

– To assess the technical and economic feasibility of various

prognostic algorithms

Technical Measures:

– Accuracy, Speed, Complexity, Scalability

Overall Performance Measure:

– w1Accuracy + w2Complexity + w3Speed + …

(wi - weighting factors)

PM1

PM2

PM3

Performance

Algorithm #1

*

*

*

Assessment Matrix:

Algorithm #2

*

*

*

Algorithm #3

*

*

*

Prognostics

Objective

– Determine time window over which maintenance

must be performed without compromising the

system’s operational integrity

Prognostics

Enabling Technologies

– Multi-Step Adaptive Kalman Filtering

– Auto-Regressive Moving Average Models

– Stochastic Auto-Regressive Integrated Moving

Average Models (ARIMA)

– Forecasting by Pattern and Cluster Search

– Variants Analysis

– Parameter Estimation Methods

– Others

Prognostics

Enabling Technologies (cont’d)

– AI Techniques

Case-Based Reasoning

Intelligent Decision-Based Models

Min-Max Graphs

– Petri Nets

– Soft Computing Tools

Neural Nets

Fuzzy Systems

Neuro-Fuzzy

PEDS Software System

Architecture (Stand-alone)

Hardware

•Plant

•Sensors

•DAQ

CBM

Causal

factors

Scenario

Generator

main. schedule

FAHP

Causal

Adjustments

1. GUI

2. Data

Preprocessing

3. Mode

Estimator /

Usage Pattern

Identification

Event Dispatch

Central DB

failure

dimension

Database

Management

5. Feature

4. Feature

Extraction

Extraction

5.

Classifier

(Fuzzy)

6.

Virtual

Sensor

(WNN)

DWNN

Classifier

(WNN)

Case-based

Diagnostic

Reasoner

CPNN

The Navy Centrifugal Chiller

Chiller Failure Modes

•Condenser Tube Fouling

•Condenser Water Control Valve Failure

•Tube Leakage

•Decreased Sea Water Flow

•SW in/out temp.

•SW flow

•Cond. press.

•Cond. PD press.

•Cond. liquid out temp.

Compressor

Pre-rotation Vane

•Compressor Stall & Surge

•Shaft Seal Leakage

•Oil Level High/Low

•Aux. Pump Fail

•Oil Cooler Fail

•PRV/VGD Mechanical Failure

•Comp. suct. press./temp.

•Comp. disch. press./temp.

•Comp. oil press./flow (at required points)

•Comp. bearing oil temp

•Comp. suct. super-heat

•Shaft seal interface temp.

•PRV Position

Condenser

Evaporator

•Target Flow Meter Failure

•Decreased Chilled Water Flow

•Evaporator Tube Freezing

•CW in/out temp./flow

•Eva. temp./press.

•Eva. PD press.

•Liquid line temp.

•(Refrigerant weight)

•Non Condensable Gas in Refrigerant

•Contaminated Refrigerant

•Refrigerant Charge High

•Refrigerant Charge Low

Timing sequence(1)-No fault Detected

Start next

cycle

Data

Collection

Mode

Identification

Feature

Extraction

(Extract features

For Diagnostics only)

FuzzyDS

WNN

No Fault Detected

Prognostic routines

will not run

t0

t1

t2

t

1. The timing sequence is managed by the Task Manager

2. Algorithm modules are started by FEATURE READY events

3. Each diagnostic module decides upon the presence or absence of a fault

4. The diagnostic modules report their conclusion to the database.

5. Each diagnostic module runs its routine and responds back to the task manager.

6. Task manager receives the events and decides which module or algorithm should be started.

7. The diagnostic decision (or No fault) is displayed on the GUI;GUI receives result from database.

8. All prognostic routines are initiated when a fault has been detected.

Timing sequence(2)—Fault Confirmed

Start

confirmation

Continue

confirmation

Fault confirmed

Start prognosis

Data

Collection

Mode

Identification

Collect data for

prognosis

Feature

Extraction

Extracts features

for prognosis

FuzzyDS

WNN

DWNN

CPNN

Fault Detected

By FuzzyDS or

WNN

t0

Fault

confirmed

t1

t2

t3

t4

t

Timing sequence(3)—Fault not confirmed

Start

confirmation

Continue

confirmation

Start normal

cycle again

Data

Collection

Feature

Extraction

FuzzyDS

WNN

Fault Detected

By FuzzyDS or

WNN

t0

No fault

detected

here

t1

t2

t3

t4

t

Software Design

Developing platform: Microsoft Visual C++ ,

Visual Basic, SQL server 2000, Access 2000.

Software running under Windows NT platform

Component based open system architecture. All

the system components are implemented as

Microsoft COM objects

Event-based distributed communication. Capable

of transmitting events at different priorities.

Provides database for storage of collected

data,configuration information, diagnostic and

prognostic results.

Conceptual Model of PEDS — 3-Tier Client-Server Architecture

User Services

Prognostic Services

Persistent Services

Diagnostics

Operator User

Interface

ICAS Database

Interface

Feature

Extraction

Administrator

User Interface

Tier 1

Prognostics

Tier 2

Features

Repository

Interface

Tier 3

Software Diagram

Data sampling module

Task Manager

Time Out?

Start Sampling Data

Sampling

Data

Event

N

Enough Data?

y

Save to

Database

Diagnosis

Start Feature Extractor

N

Wait for

event

Feature extractor

Get Data

From

Database

Events

Feature Ready?

y

Event

Prognosis

Wait for

event

Wait for

event

Get Features

From

Database

Get Features

From

Database

Do

Diagnosis

Do

Prognosis

Save results

to Database

Save results

to Database

N

Time Out?

y

Do

Extraction

Start Diagnosing

Save Features

to Database

Start Prognosing

Database relationships

Mode Diagram

Example Testbed: AC-Plant

Mode Identification

• Modes are characterized by the dynamics, set-points, and

controller.

• Modes switch due to events.

Fuzzy Petri Net

(Mode Changes Due to Events)

Mode

Decision

Sensors

Dynamics

Classifier

(Mode Due to Dynamics)

Current

Mode

Fuzzy Petri Net

other modes

Normal Mode

Overload

Mode

other modes

Events Characterized by Membership Functions

53

44

If Chilled Water Inlet Temperature is above 53 degrees

and

Chilled Water Outlet Temperature is above 44 degrees

then

Switch to Overload Mode

Fuzzy Petri Net Simulation

Sensors

Fuzzy Petri net marking

mode

marking

SENSORS

MODE CHANGES

Pre-rotation vane position

Chilled water inlet temperature

Chilled water outlet temperature

Normal Load Mode

Full Load Mode

Overload Mode

Feature Selection and Extraction

Sensors

PreProcessing

Data

Feature

Extraction

Highpass Filtered horizontal and vertical scans - absolute value

Windowed highpass filtered results - absolute value

10

10

8

8

6

6

4

4

2

2

50

100

150

200

250

300

2.5

0

50

100

150

200

250

300

2.5

2

2

1.5

1.5

1

1

0.5

0.5

0

Prediction

of Fault

Evolution

Fault

Classification

20

40

60

80

100

120

140

160

180

0

20

40

60

80

100

120

140

160

180

Motivation: Data driven

diagnostic/prognostic

algorithms require for fault

detection and classification a

feature vector whose

elements comprise the “best”

fault signature indicators

Intelligent distinguishability

and identifiability metrics

must be defined for selecting

the best features

Time and frequency domain

analysis techniques must be

employed to extract the

selected features

Overall Procedures for Feature Selection

and Diagnostic Rule Generation

PEDS Database

Raw

Database

Featurebase

Feature

Vector Table

Diagnostic

Rulebase

Feature Preparation

Preprocessing

Feature

Extraction

Rough Set*

Feature

Selection

Rough Set

Rule

Generation

* Rough set theory is a popular data mining methodology, which provides mathematical methods to remove

redundancies and to discover hidden relationships in a database.

Feature Preparation

Heuristic Feature Pre-selection

Fault Symptoms

from ONR Report

Featurebase

Available Measurements

from York Test

Fault Mode1

Time Feature 1 Feature 2

York Test

Database

Raw

Data

Preprocessing*

Feature

Extraction

Feature

Candidates

Decision

t1

20.5

11.5

0

t2

23.5

9.5

1

* Preprocessing includes removing unreasonable objects from the database and assigning the operational status.

Feature Extraction Architecture

Maintainance

Actions

Rough Set Data MIner

Feature

Selector

Feature

Vectors

Rule

Generator

Historical

FeatureValues

Featurebase

Feature

Vector Table

Feature

Table

Diagnostic

Rules

Diagnostic

Rule Table

Classifier

Designer

Operator

Sensor Suite

Feature

Vectors

Raw

data

Data Calibration

Preprocessing

Classifier

Parameters

Diagnostic

Rules

Feature

Extractor

On-line

Feature Values

Diagnostic

Results

Alarms,/

Reports

Diagnostor

GUI

Database

Prognostor

Historical

Feature Value

Diagnostic/Prognostic

Module

Prognostic

Results

Figure 1-2. Industrial Diagnostic/Prognostic Framework based on Data Mining Feature Selection.

The Diagnostic Module

2.5

2

1.5

High-frequency failure modes

(engine stall, etc.): The Wavelet

Neural Net Approach

1

0.5

0

-0.5

-1

-1.5

-2

-2.5

A Two-Prong

Approach

0

100

200

300

400

500

13.8

13.6

13.4

Low-frequency events

(Temperature, RPM sensor, etc.):

The Fuzzy Logic Approach

13.2

13

12.8

12.6

12.4

12.2

0

50

100

150

200

250

Sensor Data

Features

raw data from sensor

Denoised signal

13.8

3

13.6

13.4

normalized level

2

12.6

Preprocessing and

Feature Extraction

0

-1

13

12.8

12.4

12.2

0

50

100

150

200

250

300

400

500

time

0.2

0.15

0.1

-2

slope

normalized level

1

13.2

0.05

-3

0

0

100

200

300

400

500

time

-0.05

0

100

200

time

Failure

Templates

Fuzzify Features

Fuzzy Rule Base

(1) If symptom A is high & symptom B is low

then failure mode is F1

(2) ...

Inference Engine

(Defuzzify)

Failure Mode

Fuzzy Logic Diagnostic

Architecture

Features

Fuzzy Logic Classification

Dempster-Shafer

Theory of Evidence

Construct Mass

Functions from

Possibilities

Combine

Evidence

Calculate

Degree of Certainty

Degree of Certainty

Fuzzification

Rulebase

Fuzzy

Inference

Engine

Defuzzification

Threshold

for Fault

Declaration

Fault Declaration

Prognostics

y

y

Two Prognostic

Scenarios:

Unsupervised

Two Prognostic

Supervised

t

Value-At-Any Time

t

t

y

y

Outcomes:

Time-To-Failure

t

Prognostics

Supervised

F(n)

Fault Dimensions

Failure Rates

Trending Information

Temperature

Predictor

DWNN

Fault Growth

(DWNN)

F(n+h)

U(n)

Component ID

Unsupervised

~

F (n h ) ( F (n ), U ( n ))

Delay h

F(n)

F (n h ) DWNN ( F (n ),

F (n ) F (n T1 ) F (n 1) F ( n T2 )

,

,U (n ))

T1

T2

DWNN

U(n)

F(n+h)

The Prognostic Module

QUESTION: Once an impending failure is detected and identified, how can

we predict the time window during which maintenance must be performed?

Sensor

Data

Prognostic

Module

Diagnostic

Module

T

t

CBM

(T=?)

APPROACH: • Employ a recurrent neuro-fuzzy model to predict time window T

• Update prediction continuously as more information becomes

available from the diagnostic module

Wavelet Neural Network (WNN)

Hidden

Layer

1

x1

y1

2

x2

cjk

1

M-1

xN

Input

Layer

M

yK

Output

Layer

clin1

clin

K

Direct

Link

y [ A1 ,b1 ( x) A2 ,b2 ( x) AM ,bM ( x)]C [ x1 ]Clin

Dynamic Wavelet Neural

Network (DWNN)

Y(t)

Y(t-1)

Y(t-M)

U(t)

z-1

z-2

z-M

WNN

Y(t+1)

U(t-1)

U(t-N)

Y(t+1) = WNN(Y(t), …,Y(t-M), U(t), …, U(t-n))

On Virtual Sensors

Many failure modes are difficult or impossible to monitor

Question: How do we build a “fault meter”?

Answer: Virtual Sensor

The Notion: Use available sensor data to map known

measurements to a “fault measurement”

Potential Problem Areas: How do we train the neural net?

Laboratory or controlled experiments required

Dynamic Virtual Sensor

Vibration Signals

Power Spectrum Data

Acoustic Data

Dynamic

Virtual Sensor

Temperature

Component ID

(DWNN)

Fault Growth

(fault dimensions

location, etc)

Implementation of The

Prognostic System

Hardware (on-line)

O

b

j

e

c

t

Raw

Signal

tri-axial

vibrometer

Signal

Acquisition

Hardware

CPU or DSP

Processor

Software (on-line and off-line)

Signal

Preprocessing

Feature

Extraction

WNN

DWNN

Prognosticator

R

e

s

u

l

t

Application Examples

A defective

bearing with a crack causes the

machine to vibrate abnormally

Vibrations can be caught with accelerometers

which translate mechanical movement into

electrical signals

Bearing crack faults may be prognosed by

examining and predicting their vibration

signals

An Experimental Setup

- Cracks on the races or balls

- Particles in the lubricant

- Gaps in between moving parts, etc

A Sample Database

An exemplary database for training the prognostic predictor

Signals

Features

Fault Dimensions

Time Stamps

Stext1.dat

10.25

102.05

12:23:48

Stext2.dat

15.63

115.21

12:26:23

Stext3.dat

24.84

152.34

12:29:55

Stext4.dat

35.76

190.45

12:32:61

Bearing Fault Diagnosis

Time Domain - defective bearing

5

0.3

4

0.2

3

0.1

2

Amplitude

Amplitude

Time Domain - good bearing

0.4

0

-0.1

0

-1

-0.3

-2

-0.4

-3

0

200

400

600

800

-4

1000

Time

features = [0.3960 0.1348]

1

-0.2

-0.5

For the good bearing,

For the defective bearing,

0

200

400

600

800

1000

Time

Spectrum Domain - good bearing

Spectrum Domain - defective bearing

0.14

10

[0 1] = WNN([0.3960 0.1348])

===> The bearing is good!

9

0.12

8

7

0.08

Amplitude

Amplitude

0.1

0.06

0.04

6

5

[1 0] = WNN([4.9120 9.2182])

===> The bearing is defective!

4

3

2

0.02

1

0

features = [4.9120 9.2182]

0

20

40

60

80

Spectrum

100

120

140

0

0

20

40

60

80

Spectrum

100

120

140

Bearing Fault Prognosis

12

Power Spectrum Area

10

Failure Condition

8

6

4

TTF

2

0

0

20

40

60

Time Window

80

6

Power Spectrum Area

5

Original

4

TTF = 19 time units

DWNN Output

3

2

1

0

0

20

40

60

Time Window

80

100

100

120

Current time

6

WNN Output

3

2

1

Prediction Time

0

20

40

60

Time Window

80

Prediction up to 98 time windows using

the trained WNN

100

Predicted time to

failure

Failure

Condition

10

Real Data

4

0

12

Power Spectrum Area

Power Spectrum Area

5

Current time

Finish

time

8

6

Time-to-failure

4

2

0

0

20

40

60

Time Window

80

100

Prediction of time-to-failure using

the trained WNN

time-to-failure = 38 time windows

120

Prognosis in the frequency

domain:

Prognosis using the trained DWNN

Training of the DWNN with the PSD growth data

450

350

400

300

Original

350

DWNN Output

300

200

Power Spectra

Power Spectra

250

150

100

250

200

150

100

50

50

0

0

-50

0

100

200

300

400

500

Frequency Window

600

700

800

-50

0

200

400

600

800

Frequency Window

1000

1200

Vibration Data with Growth and Its max PSDs

Training of DWNN Using GA

Gene tic Optimiza tio n

0.0 3 8

0.0 3 6

C o st functio na l

0.0 3 4

0.0 3 2

0 .0 3

0.0 2 8

0.0 2 6

0.0 2 4

0

50

1 00

Gene ratio n

1 50

20 0

DWNN-Produced MaxPsd profiles

Original MaxPSD profiles for the three axes

500

500

450

400

400

350

300

300

250

200

200

100

150

100

0

50

0

0

20

40

60

80

100

-100

0

20

40

60

80

100

DWNN-Predicted defect sizes

500

2000

400

1500

Width

Width

Original and DWNN-Produced defect sizes

300

200

500

100

0

0

20

40

60

80

100

300

1500

200

1000

Depth

Depth

0

1000

100

20

40

60

80

100

120

140

160

0

20

40

60

80

100

120

140

160

500

0

0

-100

0

0

20

40

60

80

100

-500

Mounting Bolt Faults

Original Vibration Signal

0.4

0.2

Voltage (mv)

0

-0.2

-0.4

-0.6

-0.8

-1

0

200

400

600

800

1000

Time (ms x 10)

1200

1400

1600

PSD Peaks of Windowed Vibration Signal

0.35

0.3

PSD Peak Values

0.25

0.2

0.15

0.1

0.05

0

0

20

40

60

Window Tags

80

100

Problem Definition

Challenges

in “Technology Prediction”

– Long term prediction (i.e. 30 years) is facing

growing uncertainty

– Current trends can be misleading

– External causal factors are numerous and difficult

to quantify

– Data is sparse, difficult to extract a trend

Confidence Prediction Neural Network

Time Series Prediction

Architecture

multi-step prediction using

a novel neural network with a

probability distribution function

estimator technique

time-series data

Data Pre-Processing

filtering, buffering,

etc.

(optional)

iterated forecasting

1 Forecasting with

prediction

uncertainty

representation

3

Causal Adjustment

impacts of

•-budgets

•-economic factors

•-political factors

•-etc

2

Uncertainty

management via

learning

reduce uncertainty

in the prediction

by eliminating

unlikely outputs

4

simulated major events

that might occur along

prediction horizon

Scenario Construction

The Confidence Prediction

Neural Network (CPNN)

For CPNN, each node assigns a

weight (degree of confidence)

for an input X and a candidate

output Yi.

Final output is the weighted sum

of all candidate outputs.

In addition to the final output, the

confidence distribution of that

output

Numerator

Denominator

Confidence

distribution

approximator

Summation

layer

Pattern

layer

output can be computed as

Input layer

CD( X , Y )

(Y Yi )

1

1

C ( X , Yi ) exp[

]

2

(2 ) CD l i 1

2 CD

l

2

CPNN

Prognostic Results

Without reinforcement

learning

6

5

4

historical data

prediction

3

real failure time

2

0.9

0.8

0.7

0.6

0.5

0.4

1

0.3

0.2

0.1

0

95

96

97

98

99

100

101

dist of prognostic failure time

0

0

20

40

60

80

100

120

Prognostic Results

With reinforcement

learning

6

5

4

3

2

0.9

0.8

0.7

0.6

0.5

1

0.4

0.3

0.2

0.1

0

0

0

20

40

60

80

96

100

97

98

99

100

120

101

New Challenges for CBM/PHM Systems

Diagnostic and prognostic systems have so far been

– designed in an ad hoc manner

– static - in terms of performance

– passive - in that they only respond to events and never initiate

actions

– centralized - so that either the knowledge-base, control, or

model is centralized even for distributed frameworks

– tightly coupled - resulting in poor error-tolerant frameworks

– non-scalable

– non-portable - since they are very system specific, and

– require expert personnel to upgrade/update

Centralized Control & KB Architectures

Sensors

UUT

Events

Preprocessing

Knowledge

Base

Diagnostic

Algorithm

Sensors

UUT

Data-mining

Events

Sensors

UUT

Events

Feature

Extraction

Control

Prognostic

Algorithm

A Generic Central Control and Knowledge Base Framework

Diagnosis

Prognosis

Distributed Control & KB Architectures

Sensors

Knowledge

Fusion

UUT

Events

Diagnostic Algorithms

Central

Control

Diagnosis

PrognosticAlgorithms

Prognosis

Central

Knowledge

Base

Distributed Control and Knowledge

Base Framework

Hardwa

Hardware

r

e

Model-Based Software Architecture

System

(chiller, gas turbine,

etc.)

DAQ/CPU

ICAS

Shipboard

Systems /

Components

Preliminary Diagnostics

Intelligent Selection

Layer

Diagnostic Algorithms

Decision Support

Layer

Prognostic Algorithms

Online

Static and Dynamic

Case Library

Offline

Interface

Layer

Multiagent System

Intelligent Agent

Object Oriented Hybrid

System Models

Software Repository

Sensors

Designer

Statistics

Optimization

Performance

Assessment

Module

Offline

Prescription

Maintenance

Plan

Online

Online

Maintainer

Community

of Agents –

Multiagent

System

The System Modeling Approach

I. Concept of Hybrid System

The System Modeling Approach

(continued…)

II. The Object-Oriented Hybrid Model Architecture

Object-Oriented Modeling - PhysicsBased or Physical Modeling

System Concept - An Example JSF Propulsion System

Components

• Components

• Interconnections/ Couplings

Clutch

Lock

Actuator

#6 GB Brg

& Spanner

Nut

Geardrive

Shaft

Clutch

Pack

Actuator

1

2

#3

#4

#6

Semi-empirical models

Deterministic-stochastic models

Finite-element models

Carbon

Plates

Lock

Clutch Spline

Output

Shaft

Clutch

Input

Shaft

Drive

shaft

Interface

Physical Modeling in Prognosis

The concept of “Virtual Sensor”:

Measurable

Quantities

Problem:

Solution:

Physical

Model

Fault

Dimensions

Difficult or impossible to monitor in an

operational state fault dimensions.

Train a physical model (semi-empirical,

physics-based) to map measurable

quantities (vibration, temperature, etc.) into

fault dimensions (crack length, wear etc.

Control Architecture for Intelligent

Agent Paradigm

level 2

Interface

Control

level 1

Decision Support

Control

level 0

Intelligent Selection

Control

Figure 2: Subsumption architecture for Intelligent Agent.

Important Features of Agenthood

Cooperation

Autonomy

Adaptation

• Adaptation:

• Learning, Knowledge-Discovery

• Communication

• Self-Organization

• Cooperation:

• Agent Communication Language

• Cooperate with other Software

Agents/User Agents

• Form Agent Communities - MAS

• Autonomy:

• Proactive and Reactive

• Goal-directed

• Multi-threaded

The IA Paradigm: Agent-Oriented Programming (AOP), Agent-Oriented Design (AOD),

Agent-Oriented Software Engineering (AOSE)

Intelligence of Intelligent Agents

A simple reflex agent

A reflex agent with

internal states

Sensors

What actions I

should do now

Effectors

State

An agent with explicit

goals

Sensors

How the world

evolves

What the

world is like

What my

actions do

What actions I

should do now

State

How the world

evolves

What the

world is like

What my

actions do

What the world

will be after I do

action A

Effectors

What actions I

should do now

An agent with utility

State

How the world

evolves

What my

actions do

Varying degrees of intelligence

Recognize goals and intentions

React to unexpected situations in a robust manner

Focus of Research: Concepts of Learning, SelfOrganization, and Active Diagnosis

Effectors

Utility

Sensors

What the

world is like

What the world

will be after I do

action A

How happy I will

be in this state

What actions I

should do now

Effectors

ENVIRONMENT

Goals

Sensors

ENVIRONMENT

Conditionaction rules

ENVIRONMENT

Conditionaction rules

ENVIRONMENT

What the

world is like

Learning Issues & Approaches

Issues in Learning:

Incremental Learning Ability

Order Independence

Unknown Attribute Handling

Noisy Data Tolerance

Source Combination

Open Questions:

– What should be learned?

– When to learn?

– How to learn?

Sensors

Critic

feedback

changes

Learning

Element

Performance

Element

knowledge

Learning

goals

Problem

Generator

Effectors

Figure 5.1 A general model of learning agent [50].

Possible Solutions:

– Learn diagnostic rules via Episodic Information (Experiences)

– Learn when Episodic Information is not enough to handle current

scenario

– Aggregate learning episodes using some concept learning technique

ENVIRONMENT

–

–

–

–

–

Performance

Standard

Case-Based Reasoning & Learning

CBR - an episodic memory of past experiences

CBR - initial cases by examples

CBR Methodology:

Indexing (generate indices for classification and categorization)

Retrieval (retrieve the best past cases from the memory)

Adaptation (modify old solution to conform to new situation)

Testing (did the proposed solution work)

Learning (explain failed & store successful solutions)

Case Library

Failure Mode i

Case #

…

1

2

3

S1

Symptoms

S2 … Sm

Tests

Prescription

The Dynamic Case-Based Reasoning

Architecture

Sensory data

Feature interpretation

(static, dynamic, composite)

Case indexing

AS path

Analytical Models and algorithms

Indexing path selection

Indexing rules

PD path

Phase matching evaluator

Case retrieval

Case similarity calculation

Case memory

inactive

active

Propagation evaluator

Case adaptation

Model-based reasoner

New case constructor

Test/evaluation

Remembrance calculation

Failure driven learning

Model base

Structure of Static and Dynamic Case

Library

tfailure

Tests

Case #j

Time to Failure

TF1

TF2

TF3

…

…

Dynamic Case Library

Failure Mode i

Conditions

time

y1 y 2 … y m

t1

t2

t3

0

Case Library

Prescription

Tests

…

Static Case Library

Failure Mode i

Symptoms

Case #

S1 S2 … Sm

1

2

3

Concept Learning

Example 1

Has_A, 1/3

Has_C, 1/3

Has_B, 1/3

attrB: m

attrA: a

Has_A1, 1/2

Object A

attrC: 16

Has_A2, 1/2

attrA2: 2

attrA1: a

Example 2

Object A

Memory Aggregate (MA) approach:

– systematic learning from examples and

counter-examples

– incremental and order independent

– combines sources

– counter examples handled similarly by

building an MA called C_MA

MA based on Examples

1&2

Object A

Has_A, 1/2

Has_B, 2/5

Has_B, 1/2

attrA: a

Has_A1, 1/2

attrA1:b

Has_C, 1/5

Has_A, 2/5

attrB: m

Has_A2, 1/2

attrA2: 2

Has_B1, 1/1

attrB1: y

attrA: a,2/2 attrB: m2/2 attrC: 16,1/1

Has_A1, 2/4

attrA1:b,1/2

a, 1/2

Has_A2, 1/2

Has_B1, 1/1

attrA2: 2/2 attrB1: y,1/1

Concept CBR

Learning ‘Concepts’ as Case Library

– Storing Memory Aggregates that are sufficiently different

from each other as unique cases

Memory Aggregates are also used for Case Retrieval,

Adaptation, Testing, and Learning

For Diagnostic Framework, Concept CBR can learn:

– concept of “Normal Mode(s)” of operation given the sensor

and event readings as attributes

– concept of unique “Fault Modes” given that the modes

differ sufficiently in their signatures

Multiagent Systems (MAS)

An MAS is a loosely coupled network of problem

solvers that interact to solve problems that are

beyond the individual capabilities or knowledge.

Characteristics of MAS:

– each agent has incomplete information;

– there is no system global control;

– data is decentralized; and

– computation is asynchronous.

Multiagent Software Engineering (MaSE)

A Multiagent Diagnostic & Prognostic Framework

Global Perspective:

• Analysis and knowledge-distribution at

multiple levels of abstraction.

• The MAS framework becomes part of a

Decision Support System.

Levels

Of Abstraction

Decision Support

Framework

Inventory

Control Framework

Multiagent Diagnostic

and Prognostic System

Decision

Support

Inventory

Control

Sub-Domain Level:

Multiagent Diagnostic

and Prognostic System

Decision

Support

Inventory

Control

System Level:

Multiagent Diagnostic

and Prognostic System

Sub-system Level:

Multiagent Diagnostic

and Prognostic System

Component Level:

Multiagent Diagnostic

and Prognostic System

Domain Level:

Abstraction

Multiagent Diagnostic

& Prognostic Framework

Decision

Support

Decision

Support

Inventory

Control

Engineering a Multiagent System - I

1.1 Generate the best and safe Health

Estimate of the Domain Layer

Goal Hierarchy Diagram

– to specify goals and sub-goals

2.1 Get failures from local

knowledge

Sequence Diagram

– to establish possible roles and how

communicating agents will achieve them

3.1 Perform local diagnostic

and prognostic tests

2.2 Explain failures

of other agents that

are related

3.2 Ask others to help

explain local failures

Induced Agent Classes

– mapping from roles to agent

definitions

– aggregation of roles/agent classes

according to available resources

and similarity of roles

2.3 Ascertain risk and

reliability associated w

current diagnosis

4.1 Organize postings for

failures

ExplanationSeeker(k)

MessageOrganizer(i)

5.1 Send messagesPostMsg(src)

5.2 Receive and

to agents

organize messages

[Explain(src,,failure,time,

del_t)]

5.3 Remove

messages

ExplanationProvider(m)

5.4 Update

messages

5.5 Priori

according

PostMsgConfirmation

[Explain(src,tag,failure,time,del_t)]

FindAndCopyMsgs(src)

6.1 Tag messages to

make them unique

[Explain(*,*,failures…, ,)]

6.2 Search messages

PostMsg(src) …

[Explain(src,tag,failure,time,del_t)]

[SelfCheck(time, del_t)

Figure 3.5 Goal Hierarchy Diagram for a Diagnostic and Prognostic

RemoveMsg

[Explain(,tag, , ,)]

UpdateMsg

[Explain(,tag,failure…,,)]

FindAndCopyM

[Explain(,,*,,)]

PostMsg(src)…

Engineering Multiagent Systems - II

Mapping Roles to Agent Classes

– Diagnostic Agents

– Sensor Agents

– Black Board Agents

– Risk & Reliability

Assessment Agents

Active Diagnosis

Extends the offline ideas of “Probing” or “Testing”

It is biased to monitor normal conditions

Active Diagnosis Monitors consistency among data

Active Diagnosis of DES - A Design Time Approach

– the system itself is not diagnosable

– design a controller called “Diagnostic Controller” that will

make the system diagnosable

Active Diagnosis Possibilities:

– Inline with Intelligent Agent paradigm

– Collaboration in Multiagent Systems can be directed to

achieve Active Diagnosis

Active vs. Passive Diagnosis

Passive Diagnosis:

Diagnoser FSM that monitors events and

sensors to generate diagnosis.

A Diagnosable Plant generates a language

from which unobservable failure

conditions can be uniquely inferred by

the Diagnoser FSM.

Unobservable Failures

Plant

(Chiller/Pump & Valve)

Controller

Sensors

Diagnoser FSM

Observable

Events

Design-Time Active Diagnosis:

Observable

State

Diagnosis

Unobservable Failures

Design a controller that will make an

otherwise

“non-diagnosable”

plant

generate a language that is diagnosable.

Plant

(Chiller/Pump & Valve)

Controller

Diagnose

r

Diagnoser FSM

Observable

Events

Diagnosis

Sensors

Observable

State

Active Diagnosis - Agent Perspective

Given an anomalous situation,

Diagnostic Agent Plans, Learns, and

Coordinates.

– Learning takes place between

distributed agents that share their

Observable

Events

experiences

Network of

– Coordination helps search, retrieval, Diagnostic

Agents

adapting activities

– Planning is required to determine if

learning and coordination is possible in

the given expected time-to-failure

condition

“Run-time” Active Diagnosis

– non-intrusive

– autonomous and rational

Unobservable Failures

Plant

(Chiller/Pump & Valve)

Controller

Diagnostic

Agent

Diagnosis

Alarms/DB

Diagnostic Agent

Planning

Coordination

Learning

Sensor

Sensor

Agents

Agents

Observable

State

Performance Measures

(How to Compare

and

)

Measures

Precision for Prognosis

a measure of the narrowness of an interval in which the remaining life

falls

Reliability

how the system responds to individual component failures

Extensibility or Scalability

how the system can be extended if new components are added

Robustness

how the system tolerates uncertainty

Reuse or Portability

how easy or hard it is to use this system in another problem domain

Accuracy

how an agent improves true positives and true negatives as a result of

learning, self-organization, and active diagnosis

Entropy

a measure of how the system learns and organizes over time.

Decreasing entropy signifies increasing order in a multi-agent system,

resulting in more accurate and timely diagnoses

Network Activity

how much network related activity results if the framework is

implemented for distributed systems

Innovative Thrusts:

Intelligent Agent-based Distributed PHM Architecture

Error-tolerant, flexible, and scalable diagnostic/prognostic framework

Automated Prescription:

– What is wrong and how to I fix it?

– When do I fix it?

– How much confidence do I have that the system will not fail during the

execution of a mission?

Object-oriented modeling framework/physics-based models

Case-based reasoning paradigm - archive case studies - reason about

new situations

Open Systems Architecture - OSA CBM and ICAS compatible

Active Diagnosis / Prognosis notions

Performance Metrics / Performance based PHM modules

The Candidate Application

Domains

Shipboard

Processes - gas turbines, AC plants,

elevators, main fire systems, etc.

Propulsion / Drivetrain Components - clutch,

Seals, pumps, bearings, blades, etc.

Power Systems - generation, shipboard

distribution

Radar tracing and other communicationrelated systems

Other

Contributions

Development

of a new methodology for the

design of a diagnostic and prognostic

framework for large-scale distributed systems

Development of Run-time Active Diagnosis

approach / extension to Prognosis

Development of Learning Strategies for

diagnostic / prognostic problem domain

Development of performance measures for the

comparison of centralized vs. distributed

diagnostic and prognostic frameworks

Implementation Issues

Embedded Distributed Diagnostic Platform (EDDP)

Hardware:

– Modular I/O (e.g. NI’s FieldPoint System, or

MAX-IO).

– Embedded PC (e.g. MPC - Matchbox PC of

TIQIT or MAX-PC of Strategic-Test).

– Network (e.g. Ethernet, PROFIBUS, CAN).

Software:

– Windows CE, Linux, QNX, VxWorks, or OsX

operating systems.

– Embedded databases (like Polyhedra).

A Possible Agent Node

An Operator Interface

(LCD Display)

A Small PC

(MPC, MAX-PC)

Network (Ethernet, CAN, Profibus)

Distributed I/O System

(FieldPoint)

Sensors

Sensors

Sensors

CBM Performance Assessment

Objective:

– To assess the technical and economic feasibility of

various prognostic algorithms

Technical

Measures:

– Accuracy, Speed, Complexity, Scalability

Overall

Performance Measure:

– w1Accuracy + w2Complexity + w3Speed + …

(wi - weighting factors)

PM1

PM2

PM3

Algorithm #1

*

*

*

Algorithm #2

*

*

*

Algorithm #3

*

*

*

Performance

Assessment Matrix:

CBM Performance Assessment

Target

Measure:

PM yr (n f ) y p (n f ) yr (ns ) y p (ns )

Output y(n)

Real yr(n)

Behavior

Measure:

Predicted yp(n)

nf

PM w(i) yr (i) y p (i)

i ns

tpf

Discrete time n

Mean

E{t pf

and Variance Measures:

1

1

V {t } [t

} t (i )

N

N

N

N

i 1

pf

pf

i 1

2

(

i

)

E

{

t

}]

pf

pf

Complexity/Cost-benefit

Analysis

Complexity

Measure

t p td

computation time

complexity E

E

t

time to failure

pf

Cost/Benefit Analysis

–

–

–

–

frequency of maintenance

downtime for maintenance

dollar cost

etc.

Overall

Performance

Overall Performance = w1accuracy + w2complexity + w3cost + ….

Cost/Benefit Analysis

Establish

Baseline Condition - estimate cost of

breakdown or time-based preventive

maintenance from maintenance logs

A good percentage of Breakdown

Maintenance costs may be counted as CBM

benefits

If preventive maintenance is practiced,

estimate how many of these maintenance

events may be avoided.

The cost of such avoided maintenance events

is counted as benefit to CBM.

Cost/Benefit Analysis (cont’d)

Intangible

benefits - Assign severity index to

impact of BM on system operations

Estimate the projected cost of CBM, i.e. $ cost

of instrumentation, computing, etc.

Aggregate life-cycle costs and benefits from

the information detailed above

CINCLANTFLT Study

Question: “What is the value of prognostics?”

Summary of findings:

(1) Notional Development and Implementation for Predictive CBM Based on

CINFCLANTFLT I&D Maintenance Cost Savings

(2) Assumptions

–

–

–

–

–

CINCLANTFLT Annual $2.6B [FY96$] I&D Maintenance Cost

Fully Integrated CBM yields 30% reduction

Full Realization Occurs in 2017, S&T sunk cost included

Full Implementation Costs 1% of Asset Acquisition Cost

IT 21 or Equivalent in place Prior to CBM Technology

(3) Financial Factors

– Inflation rate:

– Investment Hurdle Rate:

– Technology Maintenance Cost:

(4) Financial Metrics:

4%

10%

10% Installed Cost

15 year 20 year

Concluding Remarks

CBM/PHM

are relatively new technologies sufficient historical data are not available

CBM benefits currently based on avoided

costs

Cost of on-board embedded diagnostics

primarily associated with computing

requirements

Advances in prognostic technologies

(embedded diagnostics, distributed

architectures, etc.) and lower hardware costs

(sensors, computing, interfacing, etc.) promise