Introduction, Syllabus and Prelims

CSCI-6964: High Performance Parallel & Distributed Computing (HPDC)

AE 216, Mon/Thurs 2-3:20 p.m.

Introduction, Syllabus & Prelims

Prof. Chris Carothers

Computer Science Department

Lally 306 [Office Hrs: Wed, 11 a.m. – 1 p.m.] chrisc@cs.rpi.edu

www.cs.rpi.edu/~chrisc/COURSES/HPDC/SPRING-2008

HPDC Spring 2008 - Intro, Syllabus & Prelims 1

Course Prereqs…

• Some programming experience in Fortran, C, C++…

– Java is great but not for HPC…

– You’ll have a choice to do your assignments in C, C++ or Fortran… subject to the language support of the programming paradigm.

• Assume you’ve never touched a parallel or distributed computer…

– If you have MPI experience, great… it will help you, but it is not necessary.

• If you love to write software…

– Both practice and theory are presented but there is a strong focus on getting your programs to work…


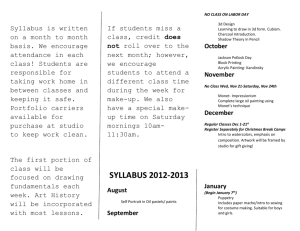

Course Textbook

• Introduction to Parallel Computing, by Grama, Gupta, Karypis and Kumar

• Make sure you have the 2nd edition!

• Available either online through the Pearson/Addison-Wesley publisher or the RPI campus bookstore.

Course Topics

• Prelims & Motivation

– Memory Hierarchy

– CPU Organization

• Parallel Architectures (Ch. 2, papers)

– Message Passing/SMP

– Communications Networks

• Basic Communications Operations (Ch 3)

• MPI Programming (Ch 6)

• Principles of Parallel Algorithm Design (Ch 4)

• Thread Programming (Ch 7)

– Pthreads

– OpenMP

Course Topics (cont.)

• Analytical Modeling of Parallel Programs (Ch 5)

– LogP Model (paper)

• Parallel Algorithms (Mix of Ch 8 – 11)

– Matrix Algorithms

– Sorting Algorithms

– Graph Algorithms

– Search Algorithms

• MapReduce Programming Paradigm (papers)

• Applications (Guest Lectures)

– Computational Fluid Dynamics

– Mesh Adaptivity

– Parallel Discrete-Event Simulation


Course Grading Criteria

• You must read ahead (lecture, textbook and papers)

– FOR EACH CLASS… you will write a 1-page paper that summarizes what you read and states any questions you might have for my benefit.

– In the case of guest lectures, the report is due the next class.

– What’s it worth…

• 1 grade point per class up to 25 points total

• There are 27 lectures, so you can pick 3 to miss…

• 4 programming assignments worth 10 pts each

– MPI, Pthreads, OpenMP, MapReduce

• Parallel Computing Research Project worth 35 pts

• Yes, that’s right: no mid-term or final exam…

– May sound good, but when it’s 4 a.m., your parallel program doesn’t work, and you’ve spent the past 30 hours debugging it, an exam doesn’t sound so bad…

– For a course like this, you’ll need to manage your time well: do a little each day and don’t get behind on the assignments or projects!

To Make A Fast Parallel Computer You Need a Faster Serial Computer… well, sorta…

• Review of…

– Instructions…

– Instruction processing…

• Put it together… why the heck do we care about or need a parallel computer?

– i.e., they are really cool pieces of technology, but can they really do anything useful besides compute Pi to a few billion more digits…

Processor Instruction Sets

• In general, a computer needs a few different kinds of instructions:

– mathematical and logical operations

– data movement (access memory)

– jumping to new places in memory

• if the right conditions hold.

– I/O (sometimes treated as data movement)

• All these instructions involve using registers to store data as close as possible to the CPU

– E.g., $t0, $s0 in MIPS or %eax, %ebx in x86

Example: a = (b+c) - (d+e);   (a in $s0, b in $s1, c in $s2, d in $s3, e in $s4)

add $t0, $s1, $s2   # $t0 = b + c
add $t1, $s3, $s4   # $t1 = d + e
sub $s0, $t0, $t1   # a = $t0 - $t1

lw destreg, const(addrreg)   “Load Word”

– destreg: name of register to put value in

– const: a number

– addrreg: name of register to get base address from

– address = (contents of addrreg) + const

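Array Example:

a = b + c[8];   (a in $s0, b in $s1, base address of c in $s2)

lw  $t0, 8($s2)     # $t0 = c[8]
add $s0, $s1, $t0   # $s0 = $s1 + $t0

(yeah, this is not quite right… MIPS memory is byte-addressed and each word is 4 bytes, so c[8] is at offset 8 × 4 = 32, i.e., lw $t0, 32($s2))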
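To see where the factor of 4 comes from, here is a small C sketch that computes the byte offset a compiler would place in the lw for c[8]. It assumes, as on MIPS, 4-byte words and byte addressing; the helper names word_offset and b_plus_c8 are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Byte offset of element i in an array of 32-bit words.
   MIPS memory is byte-addressed, so this is the constant lw needs. */
size_t word_offset(size_t i) {
    return i * sizeof(int32_t);
}

/* a = b + c[8], spelling out the address arithmetic the compiler does:
   address = (base address of c, i.e. $s2) + word_offset(8). */
int32_t b_plus_c8(int32_t b, const int32_t *c) {
    const unsigned char *base = (const unsigned char *)c;
    const int32_t *elem = (const int32_t *)(base + word_offset(8));
    assert(elem == &c[8]);  /* same element the array index names */
    return b + *elem;
}
```

word_offset(8) evaluates to 32, which is the constant in lw $t0, 32($s2).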


sw srcreg, const(addrreg)   “Store Word”

– srcreg: name of register to get value from

– const: a number

– addrreg: name of register to get base address from

– address = (contents of addrreg) + const

Example:

sw $s0, 4($s3)

If $s3 has the value 100, this will copy the word in register $s0 to memory location 104.

Memory[104] <- $s0
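The shared address rule for lw and sw can be sketched in C as follows. This is a minimal model, assuming a hypothetical 256-byte memory array and host byte order (real MIPS implementations may be big- or little-endian). The names memory, effective_address, lw and sw are illustrative, not a real API.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define MEM_BYTES 256u  /* hypothetical tiny byte-addressed memory */

static uint8_t memory[MEM_BYTES];

/* Effective address = (contents of addrreg) + const, checked for
   word alignment (MIPS requires it) and bounds. */
static uint32_t effective_address(uint32_t addrreg, int32_t offset) {
    uint32_t addr = addrreg + (uint32_t)offset;  /* wraps like 32-bit hardware */
    assert(addr % 4u == 0 && addr <= MEM_BYTES - 4u);
    return addr;
}

/* lw destreg, const(addrreg): the returned value goes into destreg. */
uint32_t lw(uint32_t addrreg, int32_t offset) {
    uint32_t value;
    memcpy(&value, &memory[effective_address(addrreg, offset)], sizeof value);
    return value;  /* host byte order in this sketch */
}

/* sw srcreg, const(addrreg): Memory[address] <- srcreg. */
void sw(uint32_t srcreg, uint32_t addrreg, int32_t offset) {
    memcpy(&memory[effective_address(addrreg, offset)], &srcreg, sizeof srcreg);
}
```

With $s3 = 100, sw(value, 100, 4) writes Memory[104], and lw(104, 0) or lw(108, -4) reads the same word back.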


Instruction formats

op     | rs     | rt     | rd     | shamt  | funct
6 bits | 5 bits | 5 bits | 5 bits | 5 bits | 6 bits   (32 bits total)

This format (the R-type format) is used for many MIPS instructions that involve calculations on values already in registers.

E.g., add $t0, $s0, $s1
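As a check on the field layout, here is a C sketch that packs the six fields into one 32-bit word. Register numbers follow the usual MIPS convention ($t0 = 8, $s0 = 16, $s1 = 17), and add uses op = 0 and funct = 0x20. The function and constant names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Pack the six R-type fields: op(6) rs(5) rt(5) rd(5) shamt(5) funct(6). */
uint32_t encode_rtype(unsigned op, unsigned rs, unsigned rt,
                      unsigned rd, unsigned shamt, unsigned funct) {
    assert(op < 64 && rs < 32 && rt < 32 && rd < 32 && shamt < 32 && funct < 64);
    return ((uint32_t)op << 26) | ((uint32_t)rs << 21) | ((uint32_t)rt << 16) |
           ((uint32_t)rd << 11) | ((uint32_t)shamt << 6) | (uint32_t)funct;
}

/* Register numbers from the standard MIPS register convention. */
enum { REG_T0 = 8, REG_S0 = 16, REG_S1 = 17 };
/* Opcode and function code for add. */
enum { OP_RTYPE = 0, FUNCT_ADD = 0x20 };
```

For add $t0, $s0, $s1, encode_rtype(OP_RTYPE, REG_S0, REG_S1, REG_T0, 0, FUNCT_ADD) gives 0x02114020. Note that rd is written first in the assembly syntax but occupies the fourth field of the encoding.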

How are instructions processed?

• In the simple case…

– Fetch instruction from memory

– Decode it (read the op code, then read the registers that instruction uses)

– Execute the instruction

– Write back any results to register or memory

• Complex case…

– Pipeline – overlap instruction processing…

– Superscalar – multi-instruction issue per clock cycle…
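The four simple-case steps can be sketched as a tiny interpreter loop in C. This is only an illustration under strong assumptions: it handles just R-type add and sub (funct values 0x20 and 0x22), runs straight-line code with no branches or memory access, and RTYPE and run are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: build an R-type word with op = 0 and shamt = 0. */
#define RTYPE(rs, rt, rd, funct) \
    (((uint32_t)(rs) << 21) | ((uint32_t)(rt) << 16) | \
     ((uint32_t)(rd) << 11) | (uint32_t)(funct))

enum { FUNCT_ADD = 0x20, FUNCT_SUB = 0x22 };

/* Run a straight-line program of R-type add/sub instructions.
   Unsigned arithmetic wraps like addu/subu; real add/sub trap on overflow. */
void run(const uint32_t *imem, size_t n_instr, uint32_t regs[32]) {
    for (size_t pc = 0; pc < n_instr; pc++) {
        uint32_t instr = imem[pc];               /* 1. fetch */
        unsigned op    = instr >> 26;            /* 2. decode the fields... */
        unsigned rs    = (instr >> 21) & 0x1F;
        unsigned rt    = (instr >> 16) & 0x1F;
        unsigned rd    = (instr >> 11) & 0x1F;
        unsigned funct = instr & 0x3F;
        assert(op == 0);                         /* only R-type in this sketch */
        uint32_t a = regs[rs], b = regs[rt];     /* ...and read the registers */
        uint32_t result;
        switch (funct) {                         /* 3. execute */
        case FUNCT_ADD: result = a + b; break;
        case FUNCT_SUB: result = a - b; break;
        default: assert(!"unsupported funct"); return;
        }
        if (rd != 0) regs[rd] = result;          /* 4. write back ($zero stays 0) */
    }
}
```

Encoding the three instructions of the earlier a = (b+c) - (d+e) example with RTYPE and passing them to run leaves b + c in register 8 ($t0), d + e in register 9 ($t1), and their difference in register 16 ($s0).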

Simple (relative term) CPU

Multicycle Datapath & Control

Simple (yeah right!) Instruction Processing FSM!

Pipeline Processing w/ Laundry

• While the first load is drying, put the second load in the washing machine.

• When the first load is being folded and the second load is in the dryer, put the third load in the washing machine.

• Admittedly unrealistic scenario for CS students, as most only own 1 load of clothes…


[Figure: two timelines of laundry loads A–D (task order) from 6 PM to 2 AM: sequential vs. pipelined]
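The time savings in the laundry picture can be worked out with a short C sketch. It assumes equal-length stages. The example numbers are also assumptions: 4 loads and 4 stages of 30 minutes each (wash, dry, fold, plus a hypothetical "put away" step), chosen because they fit the 6 PM to 2 AM span of the timelines.

```c
#include <assert.h>

/* n tasks, k stages, every stage takes t minutes (equal-length stages assumed). */

/* Without pipelining, each task goes through all k stages before the next starts. */
unsigned sequential_time(unsigned n, unsigned k, unsigned t) {
    return n * k * t;
}

/* With pipelining, the first task takes k stage-times to fill the pipe;
   after that, one more task finishes every stage-time. */
unsigned pipelined_time(unsigned n, unsigned k, unsigned t) {
    assert(n > 0 && k > 0);
    return (k + n - 1) * t;
}
```

With these assumed numbers, sequential_time(4, 4, 30) is 480 minutes (8 hours) and pipelined_time(4, 4, 30) is 210 minutes (3.5 hours). For many tasks, the speedup approaches the number of stages, k.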

Pipelined Datapath w/ Control Signals

Pipelined Instructions… But wait, we’ve got dependencies!

Pipeline w/ Forwarding Values


Where Forwarding Fails…must stall


How Stalls Are Inserted
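The classic load-use check can be sketched in C. This is a simplified model, not a real datapath: a hypothetical Instr struct holds register numbers, and the sketch assumes full forwarding, so only a load followed immediately by an instruction that reads its result needs a stall.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical, minimal view of a decoded instruction.
   Unused source fields are set to 0 ($zero). */
typedef struct {
    bool mem_read;   /* true for a load (lw) */
    unsigned dest;   /* destination register number */
    unsigned src1;   /* first source register (rs) */
    unsigned src2;   /* second source register (rt), or 0 if unused */
} Instr;

/* Load-use check: with forwarding, an ALU result reaches the next instruction
   in time, but a loaded value is only available after the MEM stage, so an
   instruction that reads it right after the lw must stall one cycle. */
bool needs_stall(const Instr *prev, const Instr *cur) {
    assert(prev->dest < 32 && cur->src1 < 32 && cur->src2 < 32);
    return prev->mem_read && prev->dest != 0 &&
           (prev->dest == cur->src1 || prev->dest == cur->src2);
}
```

For example, lw $t0, 0($s1) followed by add $t1, $t0, $s2 needs a stall. The same add after add $t0, $s1, $s2 does not, because forwarding covers it.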


What about those crazy branches?

Problem: if the branch is taken, the PC goes to address 72, but we don’t know that until after 3 other instructions have been processed

Dynamic Branch Prediction

• The phrase “There is no such thing as a typical program” implies that programs branch in different ways, so there is no “one size fits all” branch prediction algorithm.

• Alternative approach: keep a history (1 bit) for each branch instruction recording whether it was last taken or not.

• Implementation: branch prediction buffer or branch history table.

– Index based on lower part of branch address

– Single bit indicates if branch at address was last taken or not. (1 or 0)

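– But single-bit predictors tend to lack sufficient history… e.g., a loop-closing branch is mispredicted twice per loop execution: once on the last iteration and again on the first iteration of the next execution.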

Solution: 2-bit Branch Predictor

Must be wrong twice before changing prediction

Learns if the branch is more biased towards “taken” or “not taken”
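A small C simulation can compare the two schemes. In this sketch, the 2-bit version is implemented as a saturating counter, which is one common way to realize "must be wrong twice". The workload is a hypothetical loop branch that is taken 9 times and then falls through, with the whole loop executed 3 times. All function names are illustrative.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* 1-bit predictor: predict whatever the branch did last time. */
size_t mispredicts_1bit(const bool *taken, size_t n, bool initial) {
    bool last = initial;
    size_t misses = 0;
    for (size_t i = 0; i < n; i++) {
        if (last != taken[i]) misses++;
        last = taken[i];
    }
    return misses;
}

/* 2-bit saturating counter: states 0,1 predict not taken; 2,3 predict taken. */
size_t mispredicts_2bit(const bool *taken, size_t n, unsigned initial) {
    unsigned c = initial;
    size_t misses = 0;
    for (size_t i = 0; i < n; i++) {
        bool predict_taken = (c >= 2);
        if (predict_taken != taken[i]) misses++;
        if (taken[i]) { if (c < 3) c++; }
        else          { if (c > 0) c--; }
    }
    return misses;
}

/* Hypothetical workload: `runs` executions of a loop whose closing branch is
   taken (iters - 1) times, then not taken once. Returns entries written. */
size_t loop_pattern(bool *out, size_t cap, size_t runs, size_t iters) {
    assert(runs * iters <= cap);
    size_t k = 0;
    for (size_t r = 0; r < runs; r++)
        for (size_t i = 0; i < iters; i++)
            out[k++] = (i + 1 < iters);
    return k;
}
```

When both predictors start out predicting "taken", this workload produces 5 mispredictions for the 1-bit predictor and 3 for the 2-bit counter. After warm-up, the 1-bit scheme misses twice per loop execution and the 2-bit scheme misses once.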


Even more performance…

• Ultimately we want greater and greater Instruction Level Parallelism (ILP)

• How?

• Multiple instruction issue.

– Results in CPIs less than one.

– Here, instructions are grouped into “issue slots”.

– So, we usually talk about IPC (instructions per cycle)

– Static: uses the compiler to assist with grouping instructions and hazard resolution. Compiler MUST remove ALL hazards.

– Dynamic: (i.e., superscalar) hardware creates the instruction schedule based on dynamically detected hazards

Example Static 2-issue Datapath

Additions:

• 32 more bits from instruction memory (two instructions per fetch)

• 2 more read ports and 1 more write port on the register file

• 1 more ALU (the top one handles address calculation)

Ex. 2-Issue Code Schedule

Loop: lw   $t0, 0($s1)       # $t0 = array element
      addu $t0, $t0, $s2     # add scalar in $s2
      sw   $t0, 0($s1)       # store result
      addi $s1, $s1, -4      # decrement pointer
      bne  $s1, $zero, Loop  # branch if $s1 != 0

Cycle  ALU/Branch               Data Xfer
1      Loop: (empty)            lw $t0, 0($s1)
2      addi $s1, $s1, -4        (empty)
3      addu $t0, $t0, $s2       (empty)
4      bne $s1, $zero, Loop     sw $t0, 4($s1)

(The sw offset becomes 4 because addi has already decremented $s1 by 4.)

It takes 4 clock cycles for 5 instructions, or an IPC of 1.25.

More Performance: Loop Unrolling

• Technique where multiple copies of the loop body are made.

• Make more ILP available by removing dependencies.

• How? The compiler introduces additional registers via “register renaming”.

• This removes “name” or “anti” dependences

– where the instruction order is purely a consequence of the reuse of a register and not a real data dependence.

– No data values flow between one pair and the next pair

– Let’s assume we unroll a block of 4 iterations of the loop…

Loop Unrolling Schedule

Cycle  ALU/Branch                  Data Xfer
1      Loop: addi $s1, $s1, -16    lw $t0, 0($s1)
2      (empty)                     lw $t1, 12($s1)
3      addu $t0, $t0, $s2          lw $t2, 8($s1)
4      addu $t1, $t1, $s2          lw $t3, 4($s1)
5      addu $t2, $t2, $s2          sw $t0, 16($s1)
6      addu $t3, $t3, $s2          sw $t1, 12($s1)
7      (empty)                     sw $t2, 8($s1)
8      bne $s1, $zero, Loop        sw $t3, 4($s1)

Now, it takes 8 clock cycles for 14 instructions, or an IPC of 1.75 (14 / 8).
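In C, the rolled and unrolled loops correspond roughly to the following sketch. As in the example, it assumes the number of elements is a multiple of 4, and the function names are hypothetical. The distinct temporaries t0..t3 play the role of the renamed registers $t0..$t3. A real compiler's output and schedule may differ.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Rolled loop: x[i] += s, walking from the end of the array toward the start
   (mirroring the decrementing $s1 pointer). */
void add_scalar(int32_t *x, size_t n, int32_t s) {
    for (size_t i = n; i-- > 0; )
        x[i] += s;
}

/* Unrolled by 4 with distinct temporaries ("register renaming"): the four
   load/add/store chains share no registers, so they can be interleaved. */
void add_scalar_unrolled4(int32_t *x, size_t n, int32_t s) {
    assert(n % 4 == 0);  /* trip count assumed to be a multiple of 4 */
    for (size_t i = n; i >= 4; i -= 4) {
        int32_t t0 = x[i - 1], t1 = x[i - 2], t2 = x[i - 3], t3 = x[i - 4];
        t0 += s; t1 += s; t2 += s; t3 += s;
        x[i - 1] = t0; x[i - 2] = t1; x[i - 3] = t2; x[i - 4] = t3;
    }
}
```

Both functions compute the same result. The unrolled version pays the loop overhead (pointer update and branch) once per 4 elements instead of once per element.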

Dynamically Scheduled Pipeline

Intel P4 Dynamic Pipeline: looks like a cluster… just much, much smaller…

Summary of Pipeline Technology

We’ve exhausted this!!

IPC just won’t go much higher…

Why??


More Speed til it Hertz!

• So, if no more ILP is available, why not increase the clock frequency?

– E.g., why don’t we have 100 GHz processors today?

• ANSWER: POWER & HEAT!!

– With current CMOS technology, power needs increase polynomially (or worse) with a linear increase in clock speed.

– Power leads to heat, which will ultimately turn your CPU into a heap of melted silicon!
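One common first-order way to see this (a textbook approximation, not a figure from these slides): the dynamic switching power of CMOS logic is roughly

$$P_{\text{dyn}} \approx \alpha \, C \, V^{2} f$$

where α is the fraction of gates switching per cycle, C the switched capacitance, V the supply voltage and f the clock frequency. Higher clock rates generally need a higher supply voltage. Under the simplifying assumption that V grows roughly in proportion to f,

$$P_{\text{dyn}} \propto f \cdot f^{2} = f^{3}$$

So doubling the clock would cost on the order of 2³ = 8× the dynamic power, before counting leakage.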

CPU Power Consumption…

Typically, 100 watts is the magic limit…

Where do we go from here?

(actually, we’ve arrived @ “here”!)

• Current Industry Trend: Multi-core CPUs

– Typically lower clock rate (e.g., < 3 GHz)

– 2, 4 and now 8 cores in a single “socket” package

– Smaller VLSI design processes (e.g., ≤ 45 nm) help reduce power & heat…

• Potential for large, lucrative contracts in turning old, dusty sequential codes into multi-core capable ones

– Salesman: here’s your new $200 CPU, & oh, BTW, you’ll need this million-dollar consulting contract to port your code to take advantage of those extra cores!

• Best business model since the mainframe!

– More cores require greater and greater exploitation of the available parallelism in an application, which gets harder and harder as you scale to more processors…

• Due to cost, this will force in-house development of a talent pool…

– You could be that talent pool…

Examples: Multicore CPUs

• Brief listing of recently released 45 nm processors (based on the Intel site):

(Processor Model - Cache - Clock Speed - Front Side Bus)

• Desktop Dual Core:

– E8500 - 6 MB L2 - 3.16 GHz - 1333 MHz

– E8400 - 6 MB L2 - 3.00 GHz - 1333 MHz

– E8300 - 6 MB L2 - 2.66 GHz - 1333 MHz

• Laptop Dual Core:

– T9500 - 6 MB L2 - 2.60 GHz - 800 MHz

– T9300 - 6 MB L2 - 2.50 GHz - 800 MHz

– T8300 - 3 MB L2 - 2.40 GHz - 800 MHz

– T8100 - 3 MB L2 - 2.10 GHz - 800 MHz

• Desktop Quad Core:

– Q9550 - 12 MB L2 - 2.83 GHz - 1333 MHz

– Q9450 - 12 MB L2 - 2.66 GHz - 1333 MHz

– Q9300 - 6 MB L2 - 2.50 GHz - 1333 MHz

• Desktop Extreme Series:

– QX9650 - 12 MB L2 - 3 GHz - 1333 MHz

• Note: Intel's new 45 nm Penryn-based Core 2 Duo and Core 2 Extreme processors were released on January 6, 2008. The new processors launch within a 35 W thermal envelope.

These are becoming the building blocks of today’s supercomputers (SCs).

Getting large amounts of speed requires lots of processors…

Nov. 2007 TOP 12 Supercomputers (www.top500.org)

1. DOE/LLNL, US: Blue Gene/L: 212,992 processors: 478 Tflops!

2. FZJ, Germany: Blue Gene/L: 64K processors

3. NMCAC, US: SGI Altix: 14336 processors

4. CRL, India: HP Xeon Cluster: 14240 processors

5. Sweden Gov.: HP Xeon Cluster: 13768 processors

6. RedStorm Sandia, US: Cray/Opteron: 26569 processors

7. Oak Ridge Nat. Lab., US: Cray XT4: 23016 processors

8. IBM TJ Watson, NY: Blue Gene/L: 40960 processors (20 racks)

9. NERSC/LBNL, US: Cray XT4: 19320 processors

10. Stony Brook/BNL, NY: Blue Gene/L: 36864 processors (18 racks)

11. DOE/LLNL, US: IBM pSeries cluster: 12208 processors

12. RPI, NY: Blue Gene/L: 32768 processors (16 racks)

If all the NY State TOP 500 Blue Genes were interconnected (40,960 + 36,864 + 32,768 = 110,592 processors), we’d have an SC resource well above #2 in the world!

Soon-To-Be Fastest Supercomputer…

• Ranger @ Texas Advanced Computing Center (TACC)

– Sun is the lead designer/integrator

– Peak Performance: 504 TFlops

• This is the theoretical peak (cores × peak Gflops per core); measured Linpack performance will be lower

– 62,976 processor cores

• 3936 nodes with 4 quad-core AMD Phenom processors each

• 8 Gflops per core is peak performance…

– 123 TBytes of RAM

– 1.73 PBytes of disk

– 7-stage InfiniBand interconnect

• 2.1 µs latency
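The headline numbers on this slide are consistent with each other. As a quick check, using only the figures given above:

$$3936 \text{ nodes} \times 4 \text{ processors/node} \times 4 \text{ cores/processor} = 62{,}976 \text{ cores}$$

$$62{,}976 \text{ cores} \times 8 \text{ Gflops/core} = 503{,}808 \text{ Gflops} \approx 504 \text{ TFlops}$$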

What are SC’s used for??

• Can you say “fever for the flavor”…

• Yes, Pringles used an SC to model the airflow of chips as they entered “The Can”…

• Improved the overall yield of “good” chips in “The Can”, with fewer chips on the floor…

Patient Specific Vascular Surgical Planning

– Virtual flow facility for patient specific surgical planning

– High quality patient specific flow simulations needed quickly

– Image patient, create model, run adaptive flow simulation

– Simulation on massively parallel computers

– Cost only $600 on 32K Blue Gene/L vs. $50K for a repeat open heart surgery…

Summary

• Current uni-core speed has peaked

– No more ILP to exploit

– Can’t make CPU cores any faster w/ current CMOS technology

– Must go massively parallel in order to increase IPC (# instructions per clock cycle).

• The only way for large applications to go really fast is to use lots and lots of processors…

– Today’s systems have 10’s of thousands of processors

– By 2010 systems will emerge w/ > 1 million processors! (e.g., Blue Waters @ UIUC)

Reading/Paper Summary Assignments!

• At the next lecture, 2 summary assignments are due:

– 1 for this lecture

• Covers notes plus Chapter 1 thru 2.1

– 1 for next lecture

• Covers slides plus Chapter 6.1 thru 6.5

• Note: the next lecture is not until Thursday, Jan. 24th

– Jan. 17th lecture cancelled due to the CS External Review event

– Jan. 21st lecture cancelled due to MLK Day