STUDIES IN REGULATION

On the discovery and assessment of economic evidence in competition law

Chris Decker and George Yarrow

NS 1.1 2011

REGULATORY POLICY INSTITUTE

Studies in Regulation, New Series, Vol. 1, No. 1

Published by the Regulatory Policy Institute

2 Market Street, Oxford OX1 3ET, UK www.rpieurope.org

First published December 2011

© Christopher Decker and George Yarrow

Economic evidence in competition law

1. Introduction

Assessment of economic data/information/evidence in more or less any context can raise difficult issues. Compared with the testing of hypotheses and theories in the physical sciences, there is much less ability to make use of controlled, repeated/repeatable experiments; and the resulting limitations are often reinforced by the complexity of the relationships and interactions that are involved in the determination of economic outcomes.

This complexity necessarily leads to high degrees of uncertainty, particularly (though by no means exclusively) uncertainty about the future.

In the context of the application of public policy, the difficulties are multiplied by the inherent limitations of governmental processes for discovering and assessing economic information for decision-making purposes. Such limitations derive ultimately from two, interlinked, ‘monopolistic’ characteristics of government: a highly constrained ability to handle large quantities of potentially relevant information, and a lack of incentives to ‘get it right’.

It seems sensible, therefore, to entertain only relatively modest ambitions in relation to the potential for improved policy performance consequential on improved economic assessments.

Yet even if the bar is set at a modest height, experience in the enforcement of competition law suggests a recurrent failure to rise to the challenge.

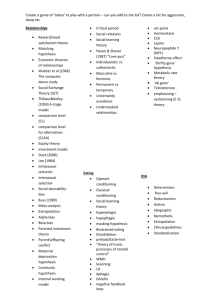

In what follows, we will seek first to understand why this is the case, and then to make some suggestions that might help mitigate the problems. No very comprehensive ‘solution’ can be expected, for reasons that are explored in section 2 below, which considers the nature of assessment problems. However, by focusing on a particular issue, ‘confirmation bias’ (in section 3), and on a particular area, competition law enforcement (in section 4), it is possible to identify some specific manifestations of the general problem with a degree of precision that opens up the possibility of remedial measures.

It can be noted at this point that the decision to focus on economic assessments made in the context of the enforcement of competition law should not be interpreted to imply that the problems under discussion are abnormally severe in this particular area of government activity. In fact, our view is almost the opposite: competition law enforcement appears to us to be where some of the highest quality (micro-)economic assessment work has been done within government, an outcome that we would attribute in part to the appeals mechanisms that have been established in this area, which serve to ensure that economic reasoning is subject to ‘competitive’ challenge. If, then, there are significant weaknesses in economic assessment procedures in competition law enforcement, we invite the inference that it is likely that substantially greater problems are to be found in other parts of government activity.

In any given context not all types of evidence and information will have equal value for the purposes at hand. We therefore explain and discuss the crucially important notion of the diagnosticity of evidence in section 5, where we seek to outline a framework for thinking about strategies for information discovery and assessment. The utility of this framework is explored in section 6, which illustrates its capabilities by using it to identify and explain two assessment failures and one assessment success in three past competition policy cases.

Section 7 contains some brief, concluding comments.

2. The nature of the problems

Key to the understanding of what economic analysis can (and can’t) contribute to public policy development is recognition that what usually stands to be assessed is the operation of complex, adaptive systems of social interactions. 1

A market is an example of such a system, although, in practice, it is better viewed as a particular sub-system within a wider system of interacting markets.

The ‘systems’ focus is particularly clear at the beginnings of political economy, even where it takes rather different forms, as in Quesnay’s Tableau Économique 2 or Smith’s Wealth of Nations. It is reflected, for example, in the emphasis on unintended consequences (the Invisible Hand metaphor being an example) and on the indirect or unseen effects of public policy, as opposed to the direct or more visible impacts. 3

The systems focus is retained in modern economic analysis in the form of ‘models’ – which are built up from sets of propositions, hypotheses and assumptions about agents and their interactions – that are simplified representations of the system of interest (although this later, modern analysis tends, all too often, toward over-abstraction/over-simplification in general, and toward abstraction from the adaptive characteristics of actual economic systems in particular, usually in the name of mathematical tractability). 4

1 A system refers to a set of interacting agents, in which the structure and nature of the connections/ relationships between agents is important in determining behaviour and outcomes. Adaptation refers to the capability of a system to change (e.g. in its structure, and hence in its behaviour and performance) in response to external perturbations or shocks, and to internally generated changes, particularly the discovery of new information. Complexity is used to denote a situation in which the information determining how the system behaves and evolves is beyond the comprehension of any single agent (whether inside or outside the system).

2 François Quesnay (1759), Tableau Économique.

3 See also Frédéric Bastiat (1850), What is seen and what is not seen.

4 The dominant aesthetic in economics is to prefer precise and definite answers to questions posed, even where they are wrong, to answers that are more nuanced and roughly right.

Notwithstanding the contributions of econometrics to the development of techniques aimed at addressing some of these limitations, the wide variations in economic contexts across markets, across locations, and over time, tend to imply that detailed, case-study approaches – such as are to be found in the best work of competition authorities – generally offer the most promising approach to disentangling and understanding those systems of interactions that attract the label ‘economic’. At a more general level of understanding – for example, of what Adam Smith called the ‘connecting principles’ of political economy – this process is aided by the building up of case studies conducted in a wide variety of different contexts. As Smith’s friend and colleague David Hume put it:

“Mankind are so much the same, in all times and places, that history informs us of nothing new or strange in this particular. Its chief use is only to discover the constant and universal principles of human nature, by showing men in all varieties of circumstances and situations, and furnishing us with materials from which we may form our observations and become acquainted with the regular springs of human action and behaviour. These records of wars, intrigues, factions, and revolutions, are so many collections of experiments, by which the politician or moral philosopher fixes the principles of his science, in the same manner as the physician or natural philosopher becomes acquainted with the nature of plants, minerals, and other external objects, by the experiments which he forms concerning them.” 5

Case study work is much more easily described than accomplished, however. What typically faces the analyst is a complex, factual reality, coupled with relatively disordered sets and sources of information relating to that reality. Given this, it can be asked: what principles should guide the collection and assessment of economic evidence? For example, in relation to information gathering there are various questions about the types of information to be sought, and about when to stop the information search process (when has sufficient information been collected for the purposes at hand?). In relation to subsequent assessment of the available information, there are potential questions about: the type and number of hypotheses to be developed and explored; whether the available information is such that classical statistical techniques can reasonably be used; whether the specific and/or limited nature of the evidence calls for Bayesian methods of evaluation; and so on. 6
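By way of illustration only, the Bayesian revision of beliefs referred to above can be written in odds form. The symbols H (a hypothesis under assessment, such as a particular theory of harm) and E (a new item of evidence), and the numbers in the worked example, are assumptions introduced here for exposition and are not drawn from any case.

```latex
% Bayes' rule in odds form: posterior odds = likelihood ratio x prior odds
\[
\frac{P(H \mid E)}{P(\neg H \mid E)}
  = \frac{P(E \mid H)}{P(E \mid \neg H)} \times \frac{P(H)}{P(\neg H)}
\]
% Worked example (assumed numbers): prior P(H) = 0.5, so prior odds = 1.
% If E is three times as likely under H as under not-H, the likelihood ratio is 3,
% the posterior odds are 3 x 1 = 3, and P(H | E) = 3 / (1 + 3) = 0.75.
```

On this reading, evidence that is about equally likely whether or not H is true has a likelihood ratio close to 1 and leaves beliefs almost unchanged, however much of it is collected.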

2.1 Sources of systematic errors (biases)

It should be clear from these and similar questions that there can be substantial scope for discretionary choices in the approach to evidence in particular cases, and further that these discretionary choices open up the possibility of approaches that may be influenced by a range of factors other than a disinterested search for truth, or for best possible understandings of the matters at hand within the relevant resource limits (search typically requires time and money). Such influences include individual psychological factors, organisational and social pressures, and material interests in outcomes that might be affected by the results of assessments. A brief outline of some of these sources of potential bias illuminates the depth of the problems to be faced.

5 D Hume (1748), An Enquiry Concerning Human Understanding ,

6 Bayesian methods provide an inductive framework for revising prior beliefs in response to new, relevant information.

Individual psychology

The study of individual decision making has identified what has become a rather long list of possible cognitive biases, although we note that the significance of these for the effectiveness of decision making is a matter of dispute; for example, bias per se may not be a problem if it is relatively small (choices are approximately right), if it is associated with quick, low-cost assessment processes, or if unbiased alternatives might be expected to lead to larger errors on average (i.e. they exhibit more variability). Perhaps the most salient contribution is the distinction between simple, fast heuristics and slower, more reflective approaches to assessment – what Kahneman has called ‘System 1’ and ‘System 2’ processes. This distinction has analogues at the social/institutional level which we believe might be helpful in thinking about policy making processes (see further below). 7
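The point that a biased procedure can outperform an unbiased but more variable one can be made concrete with the standard decomposition of mean squared error. The notation (an assessment procedure producing an estimate \hat{\theta} of a true quantity \theta) and the numbers are illustrative assumptions only.

```latex
% Mean squared error = squared bias + variance
\[
\mathbb{E}\big[(\hat{\theta} - \theta)^2\big]
  = \big(\mathbb{E}[\hat{\theta}] - \theta\big)^2 + \operatorname{Var}(\hat{\theta})
\]
% Assumed example: a fast heuristic with bias 1 and variance 1 has
% mean squared error 1^2 + 1 = 2, whereas an unbiased procedure with
% variance 4 has mean squared error 0 + 4 = 4.
```

In this assumed example the biased heuristic is, on average, closer to the truth than the unbiased alternative.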

In the psychological and behavioural economics research, fast (System 1) heuristics are found to exhibit systematic assessment errors in particular circumstances. This is hardly surprising: the relevant approaches have evolved or developed to allow for appropriate responses in particular situations/circumstances, and it is not to be expected that they will necessarily work well in other situations/circumstances. When new and difficult problems arise, the human mind tends to resort to slower, more reflective ways of responding to the problems posed (System 2), but this too has its limitations. More specifically, this type of assessment requires attention, which appears, on the evidence, to be rather limited in supply, and hence, in economic terms, costly. Speaking roughly, attention appears to be both restricted in its field of vision and difficult/costly to sustain.

The implications of this research for assessments of complex economic systems are obvious.

The behaviour of systems is a function of a large number of factors. Fast heuristics tend to focus on easily visible, simple, direct connections between specific, often localised, actions and easily visible, often localised, consequences of those actions. That is, in Bastiat’s terminology, they focus on ‘what can be seen’ (quickly); and systematic error can be expected as a result.

Slower, more reflective thinking is required to address the less direct, more diffuse, less visible (often unintended) consequences of human conduct. However, in a complex situation, attention devoted to particular aspects of the situation can easily lead to neglect of information concerning other aspects of the situation that may be of critical importance for understanding the behaviour of the system as a whole. 8

7 D Kahneman (2011) Thinking, Fast and Slow (Penguin, London).

In practice, the problems here are compounded by the tendency of much economic theorising to rely heavily on systematic abstraction (i.e. to neglect large numbers of potentially relevant factors in the name of building solvable models). This gives rise to what might be called the ‘Quine problem’, which might be exemplified by considering the assessment of a hypothetical ‘theory of harm’ in a competition law case.

Quine 9 , following Duhem 10 , argued that tests of theories are, in effect, joint tests of the propositions that, taken together, make up the theories: they are not tests of individual propositions or of sub-sets of the propositions. If a particular theory is found to be at odds with reality, in the sense that its implications are found to be inconsistent with available evidence, it is not possible to assign that failure to the failure of a particular proposition within it. Thus, a component proposition might be true, yet the theory may fail as an explanation of what has happened; or a component proposition might be false, yet the theory as a whole can still work.
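The logical structure of this argument can be sketched as follows. The symbols P_1, ..., P_n (the component propositions of a theory) and O (an observable implication) are notation assumed here for illustration.

```latex
% A theory is a conjunction of propositions that jointly imply an observation O
\[
(P_1 \land P_2 \land \dots \land P_n) \Rightarrow O
\]
% If O is contradicted by the evidence, only the conjunction is refuted:
\[
\neg O \;\Rightarrow\; \neg (P_1 \land \dots \land P_n)
  \;\equiv\; \neg P_1 \lor \neg P_2 \lor \dots \lor \neg P_n
\]
% The evidence shows that at least one P_i is false, but not which one.
```

Conversely, observing O does not establish that every P_i is true, since a false component can sit within a theory whose implications happen to match the evidence.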

This is, in effect, an argument against reductionism – the approach of breaking things down into components and assessing those components one by one – which fits easily with a similar argument that appears in the analysis of complex, adaptive systems, to the effect that, to understand behaviour, systems must be analysed as a whole. Unfortunately, even with slow thinking, there are challenging problems in ensuring that, under the required, holistic approach, all the factors that may be material for the purposes at hand have been given an adequate allocation of (scarce) attention – and attention here refers to something much more than simply listing a particular feature or aspect of the situation that has been noticed and recorded.

If, then, what is actually assessed is a joint hypothesis or theory, made up of several (potentially many) elements or components, there is necessarily scope for holding on to favoured, component propositions, even when the theory has been found to be inconsistent with available evidence. This can be accomplished by changing other components of the relevant theory in ways that, combined with the retained, favoured proposition, move the implications of the theory toward a closer relationship with the evidence. Just as data can be mined to produce a desired outcome, so the component elements of theories can be mined to ‘protect’ a favoured implication.

8 In Thinking, Fast and Slow, Kahneman gives the example of an experiment by Christopher Chabris and Daniel Simons, who made a short film of two teams passing basketballs, one team in white the other in black. Viewers were asked to count the number of passes made by members wearing white shirts, and to ignore the other players. Halfway through the video, a woman wearing a gorilla suit appears for about nine seconds, crossing the court, thumping her chest, and then moving on. About half the people who view the video report, on completion, that they have seen nothing unusual. The implication is that in focusing attention on one aspect of what is happening the viewer may be blind to other things.

9 W.V.O. Quine (1951) “Two dogmas of empiricism”, The Philosophical Review, Vol 60, pages 20-43.

10 P Duhem (1954) The Aim and Structure of Physical Theory, trans. Philip P. Wiener (Princeton, NJ: Princeton University Press).

Thus, although a holistic approach can be said to rest on sophisticated, reflective (System 2) thinking, it too can be associated with considerable inertia in conclusions in the face of challenging evidence. In economics, if there is a ‘demand’ for a bias in interpretation of evidence, or for putting a favoured implication, inference or belief less at risk from the evidence, there are typically many ways in which that demand can be met, as anyone familiar with the use of cost-benefit analysis in such circumstances will likely have found. 11

Organisational influences

Where the relevant assessments are made within an organisational context – which is the case for those issues in competition law that are decided by the administrative agencies of UK/EU competition law/policy – approaches taken to economic evidence can also be expected to reflect features of the relevant bodies. Public organisations have their own agendas and priorities; and they develop cultures in response to challenges of adapting to their own, changing social/political environments and of internally integrating the individuals and subgroups who make up the organisation.

Most regulatory assessments are undertaken in organisations that are strongly influenced by political and administrative/bureaucratic cultures, which may not be conducive to approaches to economic assessment aimed at understanding how markets actually work and how market behaviour might change when subject to perturbations. Administrative/bureaucratic cultures may give undue attention to internal processes (e.g. production of documents is seen as the priority) relative to external matters (e.g. how markets are changing) – other than those ‘semi-external’ processes (external to the organisation but internal to government) that determine the responsibilities, powers and budgets of different departments and agencies. In contrast, political cultures tend nowadays to be heavily preoccupied with that which can be seen, and hence easily explained to the media and public (e.g. on a System 1 basis), to the neglect of the diffuse, unseen effects of decisions.

Private interests

In addition to the influence of organisational interests in survival and expansion and of the cultures that develop to promote such outcomes, public decisions can also be affected by the private interests of those participating in the decision making process and of those likely to be affected by decisions. A well known example from the extensive historical literature on regulation is the influence that is referred to as ‘regulatory capture’. Thus, regulators seeking ‘rewards’ in the form, say, of remunerative employment following the relinquishing of their public duties, or seeking political honours or political recognition, may be inappropriately influenced by agendas other than that of impartially evaluating evidence and reaching appropriate conclusions.

11 Quine adopts the metaphor of a ‘web of belief’, where strands of the web are the individual elements or components, and where the strands connecting with the structure to which the web is attached are the most basic statements of fact.

Such effects can occur at varying levels of an organisation, and are by no means confined to the senior executive level. Thus, the life of a hard-pressed middle-ranking official can be made easier by the co-operation of companies providing information and evidence. The moral sentiment of reciprocity can then lead to undue weight/attention being given to the submitted evidence which, in all likelihood, will be partial to at least some degree; and, in simply describing this kind of situation, it is almost self-evident that it is extremely difficult to eliminate the tendency completely.

2.2 Starting from the beginning

The surge of interest in the study of heuristics and biases over recent decades, now imported into economic thinking in the form of what is known as behavioural economics, is, in at least one sense, a return to the roots of the subject. Smith’s first major work was The Theory of Moral Sentiments (1759) – a book that can be characterised as a work of ‘philosophical psychology’ – which opens with, and is founded on, the notion of sympathy, hypothesised to be a universal aspect of human nature.

Critical to the reasoning in the TMS is the notion of the impartial spectator, who acts as a touchstone for the refinement of moral sentiments and the moderation of emotions, providing a guide as to what are and what are not appropriate sentiments in the various circumstances in which we may find ourselves. This notion has also been the subject of a recent revival, appearing as an important concept in Amartya Sen’s The Idea of Justice.

The basic idea is that accurate adjudication requires stepping away from one’s own material and emotional interests, and adopting a position that allows for the evaluation of as much contextual information as possible; recognising, at the same time, that this notion of objectivity should not be taken so far as to suggest an endorsement of some Archimedean point that is free of all biases.

Interestingly, and a point that is crucial for the later development of our arguments, in Smith’s thinking the recommended attitude with which the impartial spectator should approach judgments about human conduct is not one of general scepticism, which tends to be incompatible with sympathy. In the dialogues between actors and spectators (who will make the adjudications) 12, the spectator is expected to do what he/she can to understand possible arguments about the conduct in question. At the same time there is a reciprocal responsibility on the actor, which is to provide relevant information as clearly as possible, and not to obfuscate or mislead. These mutual obligations imply a rudimentary community of inquiry 13, and an associated culture of inquiry. Using this terminology, it can be said that, in this paper, one of our central concerns is with factors that tend to undermine the development and evolution of such communities/cultures of inquiry, and with ways of thinking about economic evidence that might help to foster such communities/cultures.

Since, consistent with Hume, the understanding of conduct requires careful attention to variations in context, accurate adjudication will generally only be possible on the basis of detailed information about the relevant circumstances. This puts a premium on information discovery, and it is a factor that favours sympathy over scepticism in approaches to context, at least in the earlier stages of enquiry (scepticism is less effective in teasing out the information on which later, effective adjudication will depend). It also points to another tendency that can lead to biases associated with failures in information gathering and analysis: excessive haste in preliminary adjudication.

Such excessive haste goes under many names. Psychologists refer to premature closure – a failure to consider, or to continue considering, reasonable alternatives after an initial view has been reached – which is, for example, recognised to be a major cognitive problem in medical diagnosis. More everyday labels include ‘jumping to conclusions’ and prejudice. Whatever the name, however, the point is that a sympathetic impartial assessor may be better at avoiding the bias than a more sceptical assessor.

At the end stages of an enquiry, of course, rejection of at least some propositions is to be expected. Indeed evidence kills theories (which economics spawns in great abundance), and stronger evidence will have more killing power than weaker. It is at these later stages that a more sceptical approach on the part of the assessor will tend to become warranted. First, however, the evidence has to be discovered and examined, and premature closure gets in the way of that.

The role of the impartial spectator in the TMS is chiefly to make evaluations of conduct in everyday situations. Moreover, since Smith placed emphasis on the motivations for conduct, rather than the effects of conduct, no great analytical capacity is typically required to reach conclusions. When evaluating the behaviour of complex systems, however, something more than this is required. Specifically, the evaluator needs to be skilled, as well as impartial; and also to be engaged, in the sense of being willing to bear the costs of the attention that will be required to perform a demanding task. Further, because attention may tend to focus on a limited number of aspects of a complex system and thereby lead to the neglect of other, relevant factors, effective economic assessment may often require the combined attention of several spectators/evaluators.

12 The actor and the spectator can be the same person.

13 This is a term first used by C.S. Peirce to refer to the way in which people create knowledge in collaboration with others, and later taken up and generalised in the theory of education. Here the usage is much closer to the narrower, original conception of a group of collaborating investigators/scientists/scholars sharing similar values concerning the construction of knowledge.

In administrative processes, the skilled, engaged, impartial assessor can be interpreted as an ‘idealised’ civil servant or regulatory official, or a group of such officials; in judicial processes, the relevant assessors are judges or tribunals. These (administrative and judicial assessment procedures) are different ways of doing things, and the different institutional structures can be expected to have different effects. In examining these matters, we suggest that the notions of an effective community/culture of inquiry can be of considerable assistance.

A central aspect of our general argument is that failures to create such communities/cultures – failures that manifest themselves in an excessive propensity to neglect, dismiss, discount, or not use reasonable endeavours to discover, relevant information – are a central weakness of many current institutions of competition law and regulation; but that the extent of the failure varies among types of institution. Perfection is impossible, but some forms of arrangements are less bad than others; and, by studying and understanding the source of the resistances to consideration of relevant evidence, we will be in a better position to develop and sustain the desirable communities and cultures of inquiry.

With these points in mind, we will examine, in the later parts of this paper, some of the issues that have arisen in EU and UK competition law over recent years in connection with the way in which competition authorities have gone about assessing economic evidence. In doing so, it is perhaps worth re-stating our view that this is unlikely to be the area of public policy where the effects of biases in the assessment of evidence are greatest. It does, however, have the advantage of being a policy area where some of the underlying issues with which we are concerned have been recognised and discussed, including by thoughtful senior public officials participating in the relevant assessment processes.

3. A core problem: confirmation bias

As already indicated, there has been increasing interest in recent years in the implications of psychological research on decision making for the conduct of public policy. The ‘headline’ stories concern the interest of politicians and policy makers in using the insights of this work to modify or affect the behaviour of the public in various areas of social and economic life.

In contrast, our interest is in the potential use of the research to modify or affect the behaviour of decision-makers so as to mitigate some of the most egregious, systematic errors/biases in decision-making. More specifically, in relation to the enforcement of competition law, our focus is on biases in judgment in circumstances where the investigative, assessment and decision-making functions are all performed by the same organisation. This focus leads fairly directly to consideration of the notion of confirmation bias, a concept that has a reasonably long pedigree in psychological literature, and which has been used extensively in contexts where different pieces of information/data are used to build or test a case or hypothesis (such as in medical diagnosis).

3.1 What is confirmation bias?

The term ‘confirmation bias’ has been used in slightly different ways in different parts of the relevant literature, but it generally refers to an approach to information gathering and decision making in conditions of uncertainty which is based on the inappropriate bolstering of particular hypotheses or beliefs. As Nickerson observes:

“People tend to seek information that they consider supportive of favored hypotheses or existing beliefs and to interpret information in ways that are partial to those hypotheses or beliefs. Conversely, they tend not to seek and perhaps even to avoid information that would be considered counterindicative with respect to those hypotheses or beliefs and supportive of alternative possibilities.” 14

Put slightly differently, the bias can be said to arise through “unwitting selectivity in the acquisition and use of evidence” in assessment. Such selectivity ‘protects’ favoured or privileged hypotheses and beliefs from the threat of being undermined by new information/evidence. Given that we are here focusing on confirmation bias in a competition policy context, it might also be said that the tendency to selectivity in the approach to evidence ‘distorts competition’ among competing, alternative hypotheses; and this alternative language can be helpful when it comes to designing mitigation strategies.
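The effect of such selectivity on conclusions can be illustrated with a small numerical sketch. Everything in it is an assumption made for exposition: the function names `update` and `assess`, the six likelihood ratios, and the prior of 0.5 are hypothetical and are not taken from Nickerson or from any competition case. The sketch compares an assessor who updates on all the evidence with one who, unwittingly or otherwise, attends only to evidence favouring the hypothesis.

```python
def update(prior, likelihood_ratio):
    """One Bayesian update in odds form; returns the posterior probability."""
    odds = prior / (1.0 - prior) * likelihood_ratio
    return odds / (1.0 + odds)


def assess(prior, evidence, keep):
    """Update sequentially, but only on items that pass the `keep` filter."""
    belief = prior
    for lr in evidence:
        if keep(lr):
            belief = update(belief, lr)
    return belief


# Hypothetical likelihood ratios P(E | H) / P(E | not H) for six items of
# evidence: values above 1 favour the hypothesis H, values below 1 count against.
EVIDENCE = [2.0, 0.5, 2.0, 0.25, 0.5, 2.0]
PRIOR = 0.5  # assumed starting belief in H

# An impartial assessor uses every item of evidence.
impartial = assess(PRIOR, EVIDENCE, keep=lambda lr: True)

# A selective assessor attends only to items that support H.
selective = assess(PRIOR, EVIDENCE, keep=lambda lr: lr > 1.0)

print(f"impartial posterior: {impartial:.3f}")  # 0.333
print(f"selective posterior: {selective:.3f}")  # 0.889
```

With these assumed numbers the full body of evidence tells mildly against the hypothesis, yet the selective assessor ends up close to certain that it is true. The filter stands in for the ‘distortion of competition’ among hypotheses described above.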

There are a number of distinctions to be borne in mind when considering use of the notion of confirmation bias. First, at least at the level of the individual decision-maker, the concept, as developed in psychology, is generally not intended to refer to the purposive, deliberate or conscious decision to be selective in the use of evidence. Rather, the notion is defined broadly to capture a less conscious, less deliberate and in some cases unintentional approach to evidence and information gathering and assessment, which is nevertheless ‘one-sided’ in its outlook.

Second, this boundary between purposeful and unwitting behaviour becomes much fuzzier when dealing with organisational assessment and decision making, and confirmation bias then tends to become part of a wider pathology of organisational behaviours. Thus, from our own experience, there is often an ‘appreciation’ of the existence of bias and selectivity on the part of at least some parts of decision making teams, which is nevertheless suppressed for reasons that are sometimes conscious and sometimes not. This may be why some of those who have been most vociferous in expressing concerns about the existence and effects of the bias in the competition policy area have often been public officials with direct experience of the relevant decision making processes.

14 R.S. Nickerson (1998) ‘Confirmation Bias: A Ubiquitous Phenomenon in Many Guises’, Review of General Psychology, vol. 2, number 2, 175-220.

Third, and different from the purposeful/unwitting distinction, is the distinction between interested and disinterested approaches to evidence, and this takes us back to the ideal of the skilled, engaged, impartial assessor. As discussed above, individuals and organisations can have material stakes in the outcomes of assessments, or can have particular sets of beliefs or ways of doing things (i.e. aspects of organisational cultures) that can become sources of discomfort when disturbed or challenged. Under the influence of such ‘partial’ interests, there can therefore be tendencies, on the parts of both individuals and groups, to believe what is convenient or useful to believe and to see what is convenient or useful to see – a sort of ‘partial’ or ‘private’ pragmatism (“this idea is acceptable on account of its manifest utility” (to the private or partial interest)), which may be compared with the ‘public’ pragmatism of the scholars of that particular philosophical school. 15

Of significance in the case for the use of the concept of the impartial spectator is the implicit assumption that partiality is a major, distorting factor in evaluation of evidence; although that doesn’t mean that it is the only significant factor. There is a range of decision-making contexts where an individual has no obvious, material, personal interest in a particular outcome or in maintaining a particular hypothesis – medical diagnosis and judicial decision-making might be given as examples – but in which systematic biases in assessment have been shown, in some circumstances, to exist. According to Nickerson, there is a ‘great deal’ of empirical evidence that supports the claim that confirmation bias is ubiquitous in a range of different decision making contexts, and that it appears in many guises. He cites areas such as government policy-making, 16 medical diagnosis, and science as examples of where confirmation bias has been shown to exist, and to influence behaviour in terms of information gathering, analysis and decision-making.

15 C.S. Peirce, who introduced the notion of a community of inquiry, was one of the founders of Pragmatism as a modern philosophical school. The development of the notion may therefore have been a response to a perceived danger that a pragmatic approach to things could, under the influence of private/partial interests, tend to lead to biased discovery processes and distorted conclusions. If so, our arguments in this paper are following a partly beaten track.

16 Nickerson cites Barbara Tuchman: “Wooden headedness, the source of self deception, is a factor that plays a remarkably large role in government. It consists in assessing a situation in terms of preconceived fixed notions while ignoring or rejecting any contrary signs. It is acting according to wish while not allowing oneself to be deflected by the facts. It is epitomized in a historian's statement about Philip II of Spain, the surpassing wooden head of all sovereigns: ‘no experience of the failure of his policy could shake his belief in its essential excellence.’” Tuchman, B. W. (1984). The march of folly: From Troy to Vietnam. New York: Ballantine Books.


Of course, there is a possible argument that, in fact, there is no such thing as the disinterested assessor, and that, for example, the evidence of confirmation bias in medical diagnostics is a reflection of the influence of the private interests of the doctors concerned. Thus, even in health systems where doctors have no direct financial interest in the outcomes of diagnosis, the process of diagnosis involves resource allocation decisions to be made by the assessor, whose own resources are limited. At what point, for example, should the doctor stop asking questions and searching for further information before settling on a view about the likelihoods of alternative causes of symptoms? It is not difficult to imagine circumstances in which such a decision might be influenced by ‘private (to the assessors) agendas’.

Similar points apply to assessors dealing with competition and regulatory matters. There may be no financial interest at stake, but the exploration of alternative competing hypotheses requires time and effort. Hicks said that ‘the best form of monopoly profit is a quiet life’, and a similar remark might be applied to the ‘perks’ (rather than profits) of administrators and adjudicators operating within systems that are, after all, typically highly monopolistic.

It can be easy, therefore, for busy assessors to be over-dismissive of inconvenient hypotheses, arguments and information; and this can result in a bias in administrative systems towards the reaching of premature judgments. This is premature closure; and subsequent confirmation bias might be seen as a way of sustaining such premature judgments: "Once a policy has been adopted and implemented, all subsequent activity becomes an effort to justify it." 17

Given that judgments have to be made in conditions of limited resources, including limited time, early judgments can, of course, be ‘justified’ in terms of ‘administrative convenience or necessity’. Indeed, for many decisions, the justification will be convincing: this is not dissimilar to the System 1 and System 2 distinction in the psychological research, in that many simple decisions can effectively be taken without much effort. Here, however, we are concerned with assessments of complex systems.

It is perhaps ironic that one of the most common ways in which confirmation bias is sustained is by the dismissal of evidence on the basis that the relevant information has been provided by an interested party; a case of the pot calling the kettle black. This tends to be a feature of administrative/investigative/inquisitorial processes, but not of adversarial processes, which rely heavily on ‘competition’ as a discovery procedure: “Truth reveals itself in the crucible of vigorous exchanges among those with competing perspectives.” 18

As noted in passing earlier, and at least for practical purposes, impartiality is not an all-or-nothing matter. In the words of an Old Bailey 19 Judge: “We at the old Bailey play it straight down the middle, not too partial – not too impartial either”. 20

17 Tuchman, ibid.

18 W.W. Park (2010), “Arbitrators and Accuracy”, Journal of International Dispute Settlement, Vol 1, No 1.

19 The Central Criminal Court of England and Wales.

If the influence of partial and private interests on assessments can be reduced, then we might expect that bias will be reduced. However, it cannot be concluded that confirmation bias would necessarily tend to zero: it could still be the case that bias remains, consequential on other factors, such as the way in which the human brain processes information.

3.2 Private and partial interests

Developing social systems that are populated with individuals and organisations motivated by ‘partial and private interests’ but which operate in ways that are effective in serving the general/public interest has been a central concern of economics since its first emergence as a field of study. Smith summarised the issue in his invisible hand metaphor, when referring to a situation in which the unintended consequences of the pursuit of partial/private interests are favourable to general/public interests. Like Hume and other contemporaries, however, he was conscious of the fragility of such systems, and of the constant threat of unravelling posed by the unchecked pursuit of private and partial interests of varying forms.

As already indicated, the concept of partial and private interests is to be construed widely, and not confined to simple and direct financial or material interests in the outcomes of decisions. In relation to our current focus of attention, for example, it seems clear from both experience and more systematic research that the individual career motivations of practitioners – both lawyers and economists – can be important determinants of which competition law cases are pursued, and how they are prosecuted. Weaver, for example, in her study of the US Department of Justice Antitrust Division, found that a significant number of attorneys joined the Department because they wanted to ‘bring cases and win them’, and that, when a case does emerge, the “staff will be energetic in seeking to develop it into an actual case, and it will be quite unabashedly partisan in its use of arguments”. 21

Katzmann observed a similar trend in his study of the Federal Trade Commission, concluding that choices as to which cases to pursue were, in part, the result of a struggle to pursue actions in the public interest while at the same time reflecting the ‘decision making calculus’ of commission staff, including the need to maintain staff morale and to satisfy the professional objectives of staff attorneys (in terms of future job prospects). 22

20 Cited by Christopher Bellamy (2006) “The Competition Appeal Tribunal – five years on”, in Colin Robinson (ed.), Regulating Utilities and Promoting Competition: Lessons for the Future, Edward Elgar Publishing.

21 S Weaver (1977) Decision to prosecute: Organization and Public Policy in the Antitrust Division (The MIT Press Cambridge Mass.) pages 8 and 9.

22 RA Katzmann (1980) Regulatory Bureaucracy; The Federal Trade Commission and Antitrust Policy (The MIT Press Cambridge Mass.), pages 84 and 184-185.

Finally, and more recently, Leaver examines the behaviour of US Public Utility Commission officials and finds that reputational and career concerns – particularly a desire to avoid attracting criticism or exciting interest groups – can bias bureaucratic decision-making. Leaver argues that an implication of this finding is that the appointment of academics, for example, to senior posts within an agency on a short-term basis may not bring the desired benefit of independence and neutrality, as the academics may “also be driven by a strong desire to maintain peer respect, not to mention future jobs.” 23

3.3 The ‘scientific method’ and falsificationist approaches

The question of how individuals collect and use information to form judgements in the context of uncertainty and imperfect information is one that has long occupied the minds of philosophers 24 and psychologists. 25 It has been an issue of particular interest to those concerned with the history and philosophy of science, including the social sciences.

An important proposition that has been explored, and empirically tested, in some of this work is the extent to which individuals tend to adopt a so-called ‘scientific approach’ to information gathering and analysis, particularly in contexts where they are acting dispassionately and independently. This approach, which is often associated with the work of the philosopher Karl Popper, is based on the argument that the only way to establish the truthfulness, or accuracy, of a proposition or hypothesis is to seek out information which could falsify the proposition or hypothesis.

Popper’s falsificationist position has been challenged by other philosophers, but of greater relevance here are the numerous experimental and empirical studies that have shown that this is not generally how decision makers – including professional investigators such as scientists and doctors – tend to approach evidence gathering and hypothesis testing. Thus, in line with what has been said above, a much more frequently adopted approach appears to be biased toward seeking out information that tends to confirm a ‘privileged’ rule or hypothesis and to not examine information that may challenge or contradict a prevailing or favoured hypothesis. As Oswald and Grosjean observe: “To be blunt… humans do not try at all to test their hypotheses critically but rather to confirm them.” 26

23 C Leaver (2007) ‘Bureaucratic minimal squawk behavior: Theory and Evidence from regulatory agencies’ Oxford University Department of Economics Working Paper number 344, August 2007.

24 Francis Bacon, Novum organum, reprinted in Burtt, E. A. (Ed.), The English philosophers from Bacon to Mill (pp. 24-123). New York: Random House.

25 Thurstone, L. L. (1924). The nature of intelligence. London: Routledge & Kegan Paul (page 101).

26 M.E. Oswald and S. Grosjean (2004), Confirmation bias, in Cognitive illusions: A handbook on fallacies and biases in thinking, judgement and memory (pp. 79–96). Hove, UK: Psychology Press (citing Wason, P. C. (1960). ‘On the failure to eliminate hypotheses in a conceptual task’ Quarterly Journal of Experimental Psychology, 12, 129-140).

This, however, may be too bleak a view of things. One possible response is to treat Popper’s approach as normative, as a standard to be aspired to, rather than as a description of practice, even among professional adjudicators; although we note that this does not deal with what many, since Hume, have regarded as the unavoidable, practical case for inductive reasoning (drawing necessarily uncertain conclusions from relatively limited information), which exists notwithstanding the logical arguments for scepticism. Another is to recognise that, when there are competing hypotheses, the confirmation of one is logically connected to the rejection of others. That is, the issue is less one of setting out to confirm or reject a particular hypothesis than one of recognising the set of linked relationships between evidence and alternative, competing hypotheses and of approaching assessment in a way that reflects these links. We will argue below that Bayesian inference, which can be regarded as a form of inductive logic, provides an appropriate framework for empirical assessment.
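The logical link between competing hypotheses noted above can be sketched in standard Bayesian notation. The symbols are illustrative and are not taken from the sources cited: suppose that $H_1, \dots, H_n$ are mutually exclusive and jointly exhaustive hypotheses, and that $E$ is a piece of evidence. Bayes' rule then gives:

```latex
P(H_i \mid E) \;=\; \frac{P(E \mid H_i)\,P(H_i)}{\sum_{j=1}^{n} P(E \mid H_j)\,P(H_j)},
\qquad
\sum_{i=1}^{n} P(H_i \mid E) \;=\; 1 .
```

Because the posterior probabilities must sum to one, evidence that raises the probability attached to one hypothesis necessarily lowers the combined probability attached to its rivals. Under the stated assumption of an exhaustive set of hypotheses, this is one way of reading the claim that the confirmation of one hypothesis is connected to the rejection of others.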

3.4 A positive test strategy approach

At this point in the discussion, and again to suggest that the Oswald and Grosjean view may be too pessimistic, it is useful to introduce a further distinction between the notions of confirmation bias and what is called a positive test strategy (PTS). Klayman and Ha have defined a PTS as an approach to hypothesis testing which examines only:

(1) instances in which a property or event is expected to be observed (to test whether it does occur); or

(2) instances in which it is known to have occurred (to see whether the hypothesised conditions prevail). 27

In short, a PTS seeks to provide a heuristic that narrows and restricts the information space to instances or examples that have some prior probability of being relevant.

28

The point here is that, in circumstances where it can be expected that there will be a significant number of instances/observations that are potentially capable of falsifying hypotheses, a more efficient approach to information gathering is to restrict the field of inquiry (whilst taking care to ensure that the restriction does not unduly limit the number of falsification opportunities). Oswald and Grosjean describe the advantage of this approach as follows: “A PTS would enable us to find the ‘needle’ much more easily since we would not have to search the whole ‘haystack’”. 29

27 J Klayman and YW Ha (1987) ‘Confirmation, Disconfirmation and Information in Hypothesis Testing’ Psychological Review, vol 94, page 212.

28 M.E. Oswald and S. Grosjean (2004), Confirmation bias, in Cognitive illusions: A handbook on fallacies and biases in thinking, judgement and memory (pp. 79–96). Hove, UK: Psychology Press, pages 81-82.

29 Ibid, page 82.

A PTS, therefore, is something different from an attempt to confirm hypotheses or immunise them against rejection. A PTS consciously approaches information gathering and hypothesis testing by examining only information or applying only tests that are capable of confirming the hypothesis (but simultaneously capable of rejecting it). It is necessarily, and deliberately, restrictive in its focus. This differs from an approach where no prior decision is made to restrict the information collected or tested to assess the validity of a hypothesis, but where the information that is collected during the inquiry tends to have the characteristic that it will likely confirm the desired hypothesis, and/or will be unlikely to be inconsistent with that hypothesis (i.e. confirmation bias).

While there is debate as to the precise nature of the distinction between a PTS and confirmation bias, in our view, the critical difference appears to be that a PTS approach involves a conscious and deliberate decision to narrow the information examined when testing a hypothesis, whilst taking care to preserve the possibility of observations that would imply rejection of the hypothesis; whereas approaches manifesting confirmation bias do not consciously adopt such a strategy a priori. Rather, as noted above, confirmation bias is more of a subconscious, non-deliberate cognitive strategy, based on seeking out only information consistent with a preferred hypothesis, or on discounting or neglecting information that may challenge the preferred hypothesis.
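The caveat about preserving the possibility of rejection can be illustrated with a small sketch in the spirit of the number-triple task used in the Wason study cited earlier. Everything in it is a hypothetical construction for illustration: the rules, the function names and the search grid are assumptions, and are not taken from Klayman and Ha.

```python
from itertools import product

# Hypothetical "true" rule governing the data: any strictly increasing triple.
def true_rule(t):
    a, b, c = t
    return a < b < c

# Hypothesis 1 (narrower than the true rule): numbers rise in steps of two.
def steps_of_two(t):
    a, b, c = t
    return b - a == 2 and c - b == 2

# Hypothesis 2 (only partly overlapping the true rule): all numbers are even.
def all_even(t):
    return all(n % 2 == 0 for n in t)

def falsifying_positive_tests(hypothesis, candidates):
    """Positive tests (instances the hypothesis predicts will fit)
    that the true rule in fact rejects."""
    return [t for t in candidates if hypothesis(t) and not true_rule(t)]

# Assumed search grid: all triples of integers from 1 to 8.
candidates = list(product(range(1, 9), repeat=3))

narrow_hits = falsifying_positive_tests(steps_of_two, candidates)
overlap_hits = falsifying_positive_tests(all_even, candidates)
```

Under these assumptions a positive test strategy can never reject the narrow hypothesis, since every instance it examines also satisfies the true rule, whereas for the partly overlapping hypothesis the same strategy retains many opportunities for rejection (for example, the triple 2, 2, 2). This is one way of reading the requirement that the narrowing of the information examined should not remove falsification opportunities.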

In section 5 below, we develop further our own general position on these points, which is one that is distinct from advocating either a strict falsificationist approach or, alternatively, a positive testing strategy. Rather, our suggested approach embraces the notion of diagnosticity, 30 and therefore adopts an approach to information gathering that seeks to differentiate between a number of plausible, competing hypotheses. This leads to a focus on tests that provide the maximum information gain or impact with respect to beliefs (see the discussion of diagnosticity below), rather than simply trying to falsify or confirm a particular hypothesis. The key idea is that a given piece of evidence can have simultaneous implications for a number of different hypotheses, and that this (obvious) point should be borne constantly in mind in the processes of discovering and evaluating information.
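One way of making the idea of 'maximum information gain' concrete is the following minimal sketch. It assumes, purely for illustration, three competing hypotheses, two candidate tests with made-up likelihoods, and the expected reduction in Shannon entropy as the measure of information gain. None of the names or numbers comes from the cases discussed in this paper, and the measure is an assumption rather than a definition drawn from the text.

```python
import math

def posterior(prior, likelihood):
    """Bayes' rule over mutually exclusive, exhaustive hypotheses."""
    joint = [p * l for p, l in zip(prior, likelihood)]
    total = sum(joint)
    return [j / total for j in joint]

def entropy(dist):
    """Shannon entropy in bits: uncertainty across the hypotheses."""
    return -sum(p * math.log2(p) for p in dist if p > 0)

def expected_information_gain(prior, p_positive):
    """Expected entropy reduction from a test with a yes/no outcome.

    p_positive[i] is the assumed probability of a positive result
    if hypothesis i is true.
    """
    gain = entropy(prior)
    for outcome_lik in (p_positive, [1 - p for p in p_positive]):
        p_outcome = sum(p * l for p, l in zip(prior, outcome_lik))
        if p_outcome > 0:
            gain -= p_outcome * entropy(posterior(prior, outcome_lik))
    return gain

# Hypothetical prior beliefs over three competing hypotheses H1, H2, H3.
prior = [0.5, 0.3, 0.2]

# Test A: a positive result is equally likely under every hypothesis,
# so it is "consistent with" H1 but does not discriminate between rivals.
test_a = [0.9, 0.9, 0.9]
# Test B: a positive result is likely under H1 but unlikely under H2, H3.
test_b = [0.9, 0.2, 0.1]

gain_a = expected_information_gain(prior, test_a)
gain_b = expected_information_gain(prior, test_b)
post_b = posterior(prior, test_b)  # beliefs after a positive result on B
```

In this sketch test A has an expected information gain of zero, because its result is equally likely whichever hypothesis is true, whereas test B has a positive expected gain and a positive result on it shifts belief towards H1 and away from both rivals at once. Evidence of the first kind can be accumulated indefinitely in apparent support of a favoured hypothesis without discriminating it from the alternatives.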

4. Confirmation bias and competition law enforcement

The discussion in the preceding section has centred on the issue of confirmation bias in a general sense, noting that the issue can arise in a range of different contexts and settings where there may be a cognitive or organisational tendency to build a case to justify a particular conclusion or to sustain a specific hypothesis. Returning to the central focus of this study, however, it is also clear that the issue of confirmation bias is one that is potentially of major significance in the specific context of the enforcement of competition law.

4.1 Economic evidence and competition law enforcement

30 A notion that has similarities with Popper’s notion of the severity of a test of a hypothesis. See F.H. Poletiek (2009), “Popper’s Severity of Test as an intuitive probabilistic model of hypothesis testing”, Behavioral and Brain Sciences, 32: pp 99-100.

Recent decades have seen a significant shift in the role and prominence given to the assessment of economic evidence, information and data in competition law investigations in the European Union. An important aspect of this general shift has been a movement away from what has been described as a formalistic, per se type approach to competition enforcement and towards a so-called ‘more economic’ approach, centred around ‘effects-based’ analysis. 31

While generally heralded as a positive development by economists and other commentators, this shift in enforcement practice has brought with it a set of practical complications. In particular, it has created the challenge of developing an appropriate framework for identifying, collecting and assessing relevant economic evidence in competition law proceedings. Among other things, this has involved asking questions such as: What are the relevant substantive economic questions/issues that should be asked/addressed in a particular case? What economic evidence is relevant to an assessment of those questions? How, practically, should assessors go about analysing and sifting through the different pieces of economic evidence (including data) submitted? How should assessors respond to different pieces of evidence that appear to be of equal probative weight/value but that present conflicting signs/indications of whether there is a competition problem?

As already implied, these issues are not unique to competition law enforcement. Similar sorts of questions arise in many other areas of diagnostic activity where a decision-maker is required to identify, collect and impartially analyse different pieces of information, and then to assess the implications of such information for various alternative or competing hypotheses or propositions (medicine is an obvious example, but areas such as intelligence gathering are also comparable).

31 For example see the roundtable discussion in ‘Economics Experts before Authorities and Courts Roundtable’ in B Hawk (ed) International Antitrust Law & Policy: Fordham Corporate Law 2004 (Juris Publishing New York 2006) 621-622; N Kroes ‘Preliminary Thoughts on Policy Review of Article 82’ (Speech at the Fordham Corporate Law Institute New York, 23rd September 2005); Commission of the European Communities Antitrust: consumer welfare at heart of Commission fight against abuses by dominant undertakings Press Release IP/08/1877 (3 December 2008).

Recent judgments of the European Court have served to highlight some of the problems associated with the use of economic evidence in practice. One problem identified is that the enforcement agency (in this case the European Commission) is being confronted with ever-increasing amounts of economic and/or econometric evidence during the administrative procedure. This raises the very practical issue of how to sift through the evidence presented and decide the relative weights to be given to different pieces of evidence (such as potentially conflicting economic/econometric analysis). This specific issue was raised in GlaxoSmithKline Services Unlimited v Commission (GSK), an action under Article 81 (now 101) of the EC Treaty, where six separate expert reports were submitted to the Commission during the administrative procedure.

On appeal the Court of First Instance (CFI) was critical of the Commission’s handling of this evidence, concluding that the decision was “vitiated by a failure to carry out a proper examination, as the Commission did not validly take into account all the factual arguments and the evidence pertinently submitted by GSK, did not refute certain of those arguments even though they were sufficiently relevant and substantiated to require a response, and did not substantiate to the requisite legal standard its conclusion that it was not proved.”

32

Whereas GSK highlights a recurring criticism of administrative authorities to the effect that they have failed to take relevant factors into account (i.e. there have been errors of omission), another area of difficulty has been the sufficiency of the reasoning presented by the Commission as to why it has chosen to rely on one piece of economic evidence or information in preference to another. This has led to the claim that the Commission has been somewhat selective (either consciously or unconsciously) in its treatment of economic evidence. For example, in reviewing the Commission’s decision to prohibit the Airtours/Firstchoice merger, the CFI was critical of the Commission’s interpretation of specific pieces of economic data in relation to demand growth, observing that this was “inaccurate in its disregard” for evidence which showed considerable market growth, and that the Commission “failed to produce any more specific evidence”, and as such “was not entitled to conclude that market development was characterised by low growth”.

A similar issue arose in Commission v Tetra Laval BV (Tetra Laval) where the CFI concluded that the “evidence produced by the Commission was unfounded” and “was unconvincing” and that the Commission’s forecast “was inconsistent with the undisputed figures … contained in the other reports”. 33 On appeal, the European Court of Justice (ECJ) affirmed the CFI’s judgement, noting that the Commission’s conclusions “seemed to it to be inaccurate in that they were based on insufficient, incomplete, insignificant and inconsistent evidence”. 34

32 Case T-168/01 GlaxoSmithKline Services Unlimited v Commission of the European Communities [2006] ECR II-02969, para [303].

33 Case C-12/03 Commission of the European Communities v Tetra Laval BV [2005] ECR I-00987, para [46].

34 Ibid, para [48].

A related question that has faced the Commission concerns the scope and scale of the evidence that it might reasonably be expected or required to collect and assess in any investigation, which is a matter of some importance given the significant constraints on the resources that are available for enforcement. In recent decisions, the European Court appears to have established a relatively high standard in respect of: (1) the scope of evidence gathering that should be undertaken, and (2) how the Commission should then analyse and assess that evidence once collected. The ECJ has noted, for example, that in complex and difficult merger cases, the Commission’s approach requires “a particularly close examination of the circumstances which are relevant for an assessment of that effect on the conditions of competition in the reference market”. Furthermore, the ECJ noted that in order to adduce proof of anti-competitive effects in such complex cases the Commission needed to undertake “a precise examination, supported by convincing evidence, of the circumstances which allegedly produce those effects”. 35

In other decisions, the European Court has stressed that the Commission needs to assess whether the factual arguments, and evidence upon which they are based, are “relevant, reliable and credible”, 36 and that the relevant standard is that the Commission adduce “precise and coherent evidence demonstrating convincingly the existence of the facts constituting those infringements”. 37

More specifically, the Court has noted that in the context of both mergers and investigations under Article 102 the evidence needs to be “factually accurate, reliable and consistent” and that the “evidence contains all the information which must be taken into account in order to assess a complex situation and whether it is capable of substantiating the conclusions drawn from it.” 38

We note at this point that, although some have argued that the Court is now setting an unduly high standard, this criticism is, in our view, unconvincing. The ECJ’s judgments reflect nothing more than what Smith and Hume would have regarded as the minimum necessary attention to detailed, contextual information if an economic approach is to have any value at all in the enforcement of competition law. Particularly given the propensity of many economists to engage in over-abstract analysis, strict requirements that attention be paid to factual detail and to all significant factors are essential if sound conclusions are to be drawn in circumstances where the effects of business conduct – which is what the economic assessments are mostly concerned with – tend to be so heavily influenced by contextual factors.

35 Ibid, para [24].

36 Case T-168/01 GlaxoSmithKline Services Unlimited v Commission of the European Communities [2006] ECR II-02969, para [263].

37 Ibid, para [7].

38 Case C-12/03 Commission v Tetra Laval [2005] ECR I-987, para [39]; Case T-201/04 Microsoft v Commission [2007] ECR II-3601, para [89].

We also note that the problems are by no means confined to the European Commission. UK enforcement agencies, for example, have been similarly chided in recent years by the Competition Appeal Tribunal; but the underlying problems – and, in particular, the tendency of the administrative agencies to over-economise on the use of contextual evidence – have been around for a long time. Thus, in perhaps the most famous minority report of its history, following an inquiry into The Supply of Beer, a Member of the Monopolies and Mergers Commission, Mr Leif Mills, said of the conclusions and proposals of his colleagues on that case that:

“Of course, there is a theoretical attraction in making major changes to the tied estate and the loan tie. However, the attraction smacks a little of the academic question 'the brewing industry may well work in practice, but does it work in theory?'. The proposals seem designed to fit the structure of the industry into some sort of theoretical Procrustean bed.”

39

As might be hoped, and possibly even expected, these and other criticisms have stimulated responses from the authorities. The assessment procedures of the UK Competition Commission (the successor to the MMC) have been radically overhauled since the time of the Beer investigation (1986-9), and there have been a number of changes at the European Commission aimed at improving the Commission’s handling of economic evidence and information. Among the more significant changes was the establishment of the Office of the Chief Economist, and the appointment of a Chief Economist. In addition the Commission has published a ‘best practice’ guideline for dealing with economic evidence in competition law matters. 40 An important component of this guidance is the need for a coherent and ‘testable hypothesis’ (or more broadly a ‘case theory’) as part of any enforcement strategy. This at least encourages the explicit checking of the theory against available data and information, although it falls somewhat short of addressing the kinds of issues identified by Quine.

4.2 The potential for confirmation bias in competition law enforcement

Enforcement of EC competition law is based on an administrative/inquisitorial model where the same body/organisation is responsible for functions such as the decision to ‘open’ a case, the investigation of that case, and reaching a final decision on the evidence (which then forms the basis for any recommendation to the College of Commissioners). In these circumstances, where various functions are bundled or combined within a single organisation – and in some cases undertaken by the same team of individuals – there would appear to be few checks and balances on the development of cognitive and organisational biases (either intentional or unintentional); biases that are capable of affecting the evidence that is collected and its evaluation.

39 Monopolies and Mergers Commission (1989) The Supply of Beer – A report on the supply of beer for retail sale in the United Kingdom, para [43].

40 European Commission (2010) Best practices for the submission of economic evidence and data collection in cases concerning the application of Articles 101 and 102 TFEU and in merger cases, Directorate General of Competition. See also the guidelines of the UK Competition Commission (2009) Suggested best practice for submissions of technical economic analysis from parties to the Competition Commission.

That, given the administrative model, confirmation bias is likely to be a particular problem in EC competition law enforcement has been recognised by current and former senior staff members of the European Commission. Wils, for example, identified confirmation bias as one of the possible causes of a prosecutorial bias in EC antitrust enforcement, noting that: 41

“If such confirmation bias is indeed a general tendency of human reasoning, there is no obvious reason why the persons within the European Commission dealing with an antitrust case would be immune from it. The question is however why they would start from an initial belief that there is an antitrust violation. In merger cases, there is no obvious reason why they would do so. … The situation may be different with regard to Articles 81 and 82 EC where, certainly after the abolition of the notification system by Regulation No 1/2003, an investigation will normally be started only if the officials from DG Competition hold the initial belief that an antitrust violation is likely to be found.”

Neven also discusses the potential for a prosecutorial bias in EC competition law enforcement, and notes a tendency for selectivity in the Commission’s processes:

“The observations that the Commission may decide early on cases and search for selective evidence or that theories are neglected are consistent with the incentives generated by the inquisitorial regime with a prosecutorial bias implemented by the EU.” 42

Moreover, he concludes that this tendency “to focus on one side of the evidence” might be exacerbated by various institutional factors in the European context:

“The prosecutorial bias and the intrinsic features of an inquisitorial procedure would appear to reinforce each other; in particular the conclusion that an inquisitor might not invest in seeking evidence towards both sides of the argument (when evidence is hard to manipulate) or might suppress conflicting evidence will be reinforced in the presence of a hindsight bias. The tendency towards extremism in the EU is also probably reinforced by the inconsistency between the standard of proof and the scope of the decisions mentioned above, at least with respect to the implementation of the merger regulation. Indeed, when evidence is not very conclusive, the Commission cannot meet the required standard of proof with either decision. In those circumstances, it will have a further incentive to shift towards extreme outcomes (by suppressing evidence or failing to fully consider some alternatives).” 43

The issues are not restricted to Brussels, however. Laura Carstensen, a Deputy Chairman of the UK Competition Commission, raised similar concerns. Speaking of the proposed merger between the Competition Commission and the Office of Fair Trading in the UK, she has said:

41 W Wils (2004) ‘The Combination of the Investigative and Prosecutorial Function and the Adjudicative Function in EC Antitrust Enforcement: A Legal and Economic Analysis’ World Competition, vol 27, p 216. Wils was at the time a member of the European Commission Legal Service. The article was, however, written in a private capacity.

42 D J Neven (2006) ‘Competition economics and antitrust in Europe’ Economic Policy, p 32. Professor Neven has been the Chief Economist at DG Competition, and we note that his reference to ‘early’ decisions/judgments in cases accords with our own experience, in regulatory evaluations as well as in competition law cases.

43 Ibid, page 30.


“And there are of course obvious potential disadvantages to merger, predominantly concerned with the possible impact on fairness from confirmation bias when the whole decision-making pathway in a case—from receipt of complaint to opening an initial investigation to choice of ‘tool’ to phase 1 decision to phase 2 decision and finally to remedies decision—takes place within a single body.” 44

More generally still, the UK National Audit Office, in its work on the evaluation of the quality of regulatory impact assessments, 45 has been strongly critical of government departments’ use of these assessments to ‘justify’ decisions that have already been taken or judgments that have already been made, rather than as exercises in information discovery and analysis, the results of which are intended to inform the decision makers concerned. The motivation to ‘justify’ therefore turns the (costly) assessments into exercises in confirmation bias, particularly when there is an injunction to make the notional assessments ‘evidence based’. In practice, this simply means that the authors of the documents search for confirmatory evidence, adding costs without in any way affecting decision-making outcomes.

4.3 Examples of possible confirmation bias from recent competition cases

The general concerns about confirmation bias in EC competition law enforcement expressed above do not appear to be simply theoretical or academic speculation. For example, there appear to be a number of cases where the investigative and decision-making processes of the Commission have been shown to be vulnerable to patterns which – to an outside observer – closely resemble or approximate confirmation bias, and which therefore indicate something beyond the occurrence of random administrative errors and failures (such as might be said to have occurred in at least some of the cases noted in section 4.1 above). Recent cases that have been appealed to the European Court provide support for this position.

The European Commission’s Airtours decision is perhaps the most obvious, first example. In that case, the Commission was found on appeal to have:

selectively omitted certain elements among the facts available to it (such as evidence provided on the Form CO relating to the rate of demand growth); 46

claimed reliance on a market study which, upon investigation by the Court, turned out to be reliance on a one-page extract; 47 and

44 L Carstensen (2011) ‘Keynote speech by Laura Carstensen, Deputy Chairman of the Competition Commission, to the Association of Corporate Counsel Europe Seminar’, 9 March 2011.

45 We note that there is a strong similarity between regulatory impact assessment and the evaluation of ‘remedies’ in some competition policy cases.

46 M de la Mano (2008) ‘A theory on the use of economic evidence in competition policy cases’ Presentation at the Association of Competition Economists, Budapest, 28 November 2008.

47 Case T-342/99 Airtours plc v Commission of the European Communities [2002] ECR II-02585 para [128].


failed to produce an econometric study upon which it alleged it had relied in its decision. 48

In another decision, Impala v Commission, the CFI was highly critical of the Commission’s approach to the economic evidence, describing the economic assessment in that case as ‘succinct’, ‘superficial’, ‘unsubstantiated’ and ‘purely formal’, noting at one point that the Commission’s treatment of campaign discounts (an important element of the case) was “imprecise, unsupported, and indeed contradicted by other observations in the Decision”. In addition, the CFI was highly critical of the Commission’s interpretation of the responses it received to questionnaires sent out to retailers, concluding that the Commission had misrepresented the number of responses received, and further that the statements did not support the conclusion the Commission drew from them. 49

More recently, and in the context of an Article 82 (102) investigation, Intel complained to the European Ombudsman regarding a number of alleged procedural and administrative errors committed by the Commission during the process of its investigations. In this matter, it was alleged that the Commission had failed to consider and take adequate account of potentially exculpatory information, specifically notes of a meeting between Commission officials and an important customer of Intel (Dell). In his decision of July 2009, the European Ombudsman first noted the following standard for information gathering and how this interacts with the margin of appreciation/discretion afforded the Commission in complex cases:

“While the Commission has a reasonable margin of discretion as regards its evaluation of what constitutes a relevant fact, the Commission, when seeking to ascertain relevant facts, should not make a distinction between evidence which may indicate that an undertaking has infringed Article 81 EC or Article 82 EC (inculpatory evidence) and evidence which may indicate that an undertaking has not infringed Article 81 EC or Article 82 EC (exculpatory evidence). In sum, the Commission has a duty to remain independent, objective and impartial when gathering relevant information in the context of the exercise of its investigatory powers pursuant to Article 81 EC and 82 EC.” 50

On the facts in this case, the Ombudsman concluded that the Commission had committed an instance of maladministration in failing to make a proper record of a meeting, which could have concerned evidence that was potentially exculpatory of Intel.

In sum then, it would appear that issues relating to confirmation bias are very real in the European context, and we will give further, illustrative examples in section 6 below. This view is shared by Neven, who has observed that: “Overall, it would thus appear that the self-confirming biases that may be induced by the prosecutorial role that the Commission assumes cannot be dismissed as insignificant”.

48 Ibid para [132].

49 Case T-464/04 Independent Music Publishers and Labels Association (Impala, International Association) v Commission of the European Communities [2006] ECR II-02289, paras [385]-[387].

50 P Nikiforos Diamandouros (2009) ‘Decision of the European Ombudsman closing his inquiry into complaint 1935/2008/FOR against the European Commission’. 14 July 2009, para [82].

We repeat that the problems are not confined to the EU level (we could equally well cite cases from UK domestic competition law), to administrative/inquisitorial arrangements (the difficulties are more general than that), or to competition law (we have seen much more severe problems in regulatory impact assessments).

4.4 Procedural suggestions for dealing with confirmation bias

Various proposals and suggestions have been presented as to how to address concerns regarding potential confirmation bias in the context of EC competition law enforcement.

Wils, for example, suggests that the risks of confirmation bias could be reduced if there were better internal checks and balances, such as a second team of officials within the Commission to review the work of the first team of case handlers (e.g. a peer review team). Neven lists a range of other possibilities including a shift to a more adversarial system, which he argues would open up economic arguments to more detailed scrutiny, and would address incentives in inquisitorial systems to suppress economic evidence 51 and to fail to consider alternative interpretations of economic evidence. 52 Alongside this proposal he suggests increasing resources for the Commission to allow it to properly validate economic evidence, and changing the standard of review within the inquisitorial procedure, to improve decision-making. In the UK, Carstensen has suggested that institutionally separating the choice of cases, including initial investigatory work necessarily connected with that task, from the function of deciding cases can eliminate one of the major sources of confirmation bias. 53

These are all suggestions worthy of more detailed evaluation. The common themes are familiar ones, concerning the importance of checks and balances, and of competition or contestability in processes for discovering what is or has been the case. They also resonate both with wider liberal political values and with the economic notion of competition as a discovery process. It is encouraging that these ideas are being promulgated by people with first-hand experience of the problems of assessment, and, on the basis of our own experience, there can be little doubt that recognition of the existence of these problems is far more advanced in competition law enforcement than it is in other areas (such as regulatory impact assessments), with the possible exception of issues surrounding the evaluation of remedies in a sub-set of competition policy cases, most notably market investigation cases in the UK, where remedies have sometimes been highly intrusive and, in effect, have become a form of

51 D J Neven (2006) ‘Competition economics and antitrust in Europe’, Economic Policy, pp 5-6, 30.

52 Neven notes for example that: ‘Evidence which is not subject to rigorous scrutiny can be easily abused: key assumptions in theoretical reasoning can be disguised as innocuous and empirical results that are not robust can be disguised as such’. Ibid, page 31.

53 L Carstensen (2011) ‘Keynote speech by Laura Carstensen, Deputy Chairman of the Competition Commission, to the Association of Corporate Counsel Europe Seminar’, 9 March 2011.


regulation (a reality that tends frequently to be denied, not least because, since much economic regulation is anti-competitive in its effects, acknowledgement of it implies recognition of the fact that competition authorities are, in practice, themselves capable of acting in anti-competitive ways).

Notwithstanding these positive developments, we think that there are more questions to be resolved about the wellsprings of biases in assessment processes before some of the more detailed aspects of such prospective reforms can themselves be assessed (i.e. there is a risk of ‘premature closure’/confirmation bias in the process of evaluating alternative assessment and decision processes). For example, one very specific ‘device’ that is sometimes used in group decision processes is that of the devil’s advocate. Prima facie, such a procedure appears meritorious as a method of countering obvious biases, including confirmation bias and groupthink, but in practice the results from its use have often been disappointing. The question then is: why?

Possible explanatory factors have been discussed, in a preliminary way, earlier on in this study: the relative merits of scepticism and sympathy on the part of skilled, impartial assessors (scepticism, such as may be embodied in a devil’s advocate, may be unwarranted until the end stages); and the merits of developing communities of inquiry