Hash-based Indexing


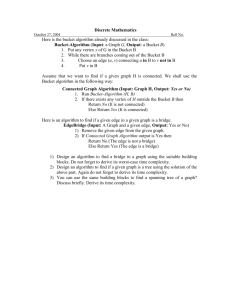

Database Systems (資料庫系統)
November 28, 2005
Lecture #9

Announcement
• Next week's reading: Chapter 12
• Pick up your midterm exams at the end of the class.
• Pick up your assignments #1~3 outside the TA office (336/338).
• Assignment #4 & Practicum #2 are due in one week.
  – Significant amount of coding, so start now

Interesting Talk
• Rachel Kern, “From Cell Phones To Monkeys: Research Projects in the Speech Interface Group at the M.I.T. Media Lab”, CSIE 102, Friday 2:20 ~ 3:30

Midterm Exam Score Distribution
[Figure: histogram of midterm exam scores in ten-point bins from 0~10 to 90~102 (legend: Series 1); vertical axis from 0 to 40.]

Ubicomp project of the week
• From Pervasive to Persuasive Computing
• Pervasive Computing (smart objects)
  – Designed to be aware of people’s behaviors
    • Examples: smart dining table, smart chair, smart wardrobe, smart mirror, smart shoes, smart spoon, …
• Persuasive Computing
  – Designed to change people’s behaviors

Baby Think It Over

Smart Device: Credit Card Barbie Doll (from Accenture)
• Barbie gets a wireless implant of chip and sensors and becomes a decision-making object.
• When one Barbie meets another Barbie …
  – Detect the presence of clothing of the other Barbie.
  – If she does not have it … she can automatically send an online order through the wireless connection!
  – You can give her a credit card limit.
• Good that this is just a concept toy.
• It illustrates the concept of an autonomous purchasing object: car, home, refrigerator, …

Hash-Based Indexing
Chapter 11

Introduction
• Hash-based indexes are best for equality selections. They cannot support range searches.
  – Equality selections are useful for join operations.
• Static and dynamic hashing techniques; trade-offs similar to ISAM vs. B+ trees.
  – Static hashing technique
  – Two dynamic hashing techniques
    • Extendible Hashing
    • Linear Hashing

Static Hashing
• # primary pages fixed, allocated sequentially, never de-allocated; overflow pages if needed.
• h(k) mod N = bucket to which data entry with key k belongs. (N = # of buckets)
[Figure: a key is passed through h; h(key) mod N selects one of the primary bucket pages 0 .. N-1, each of which may have a chain of overflow pages.]

Static Hashing (Contd.)
• Buckets contain data entries.
• Hash function works on search key field of record r. Must distribute values over range 0 ... N-1.
  – h(key) = (a * key + b) usually works well.
  – a and b are constants; lots known about how to tune h.
• Cost for insertion/delete/search: 2/2/1 disk page I/Os (no overflow chains).
• Long overflow chains can develop and degrade performance.
  – Why poor performance? Scan through overflow chains linearly.
  – Extendible and Linear Hashing: Dynamic techniques to fix this problem.

Extendible Hashing
• Simple Solution (no overflow chain):
  – When bucket (primary page) becomes full, ...
  – Re-organize file by doubling # of buckets.
• Cost concern?
  – High cost: rehash all entries; reading and writing all pages is expensive!
• How to reduce high cost?
  – Use directory of pointers to buckets, double # of buckets by doubling the directory, splitting just the bucket that overflowed!
  – Directory much smaller than file, so doubling much cheaper. Only one page of data entries is split.
  – How to adjust the hash function? Before doubling directory, h(r) → 0 .. N-1 buckets. After doubling directory, h(r) → 0 .. 2N-1.

Example
• Directory is array of size 4.
• To find bucket for r, take the last (global depth) # bits of h(r);
  – Example: If h(r) = 5, 5’s binary is 101, so it is in the bucket pointed to by 01.
• Global depth: # of bits used for hashing directory entries.
• Local depth of a bucket: # bits for hashing a bucket.
• When can global depth be different from local depth?
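The directory scheme described above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not the textbook's implementation: the names `Bucket`, `ExtendibleHash`, `capacity`, and `_slot` are assumptions made for this sketch, h(key) is taken to be the integer key itself, and keys are assumed to be distinct integers (with duplicate keys a full bucket could never be split apart).

```python
class Bucket:
    """One data page: a local depth plus the entries it holds."""
    def __init__(self, local_depth):
        self.local_depth = local_depth
        self.keys = []


class ExtendibleHash:
    """Hypothetical sketch; assumes h(key) == key and distinct integer keys."""
    def __init__(self, capacity=4, global_depth=2):
        self.capacity = capacity            # entries per bucket (assumed)
        self.global_depth = global_depth
        self.directory = [Bucket(global_depth) for _ in range(1 << global_depth)]

    def _slot(self, key):
        # Directory index = last `global_depth` bits of h(key).
        return key & ((1 << self.global_depth) - 1)

    def search(self, key):
        return key in self.directory[self._slot(key)].keys

    def insert(self, key):
        while True:
            bucket = self.directory[self._slot(key)]
            if len(bucket.keys) < self.capacity:
                bucket.keys.append(key)
                return
            self._split(bucket)             # full: split, then retry

    def _split(self, bucket):
        if bucket.local_depth == self.global_depth:
            # Double the directory by copying it (works because the
            # least significant bits are used as the index).
            self.directory = self.directory + self.directory
            self.global_depth += 1
        bit = 1 << bucket.local_depth       # the newly examined bit
        bucket.local_depth += 1
        image = Bucket(bucket.local_depth)  # the split image
        old_keys, bucket.keys = bucket.keys, []
        for k in old_keys:                  # rehash only this bucket
            (image if k & bit else bucket).keys.append(k)
        for i, b in enumerate(self.directory):
            if b is bucket and i & bit:     # fix pointers to the split image
                self.directory[i] = image


eh = ExtendibleHash(capacity=4, global_depth=2)
for k in [4, 12, 32, 16, 1, 5, 21, 13, 10, 15, 7, 19]:
    eh.insert(k)
eh.insert(20)                               # bucket 00 is full and local == global
print(eh.global_depth)                      # prints 3
print(eh.directory[0b100].keys)             # prints [4, 12, 20]
```

Only the overflowing bucket is rehashed; every other directory entry in the copied half keeps pointing at its old bucket, which is why those buckets end up with a local depth smaller than the global depth.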
[Figure: directory with global depth 2 and four data pages, each with local depth 2.]
  00 → Bucket A: 4*, 12*, 32*, 16*
  01 → Bucket B: 1*, 5*, 21*, 13*
  10 → Bucket C: 10*
  11 → Bucket D: 15*, 7*, 19*

Insert 20 = 10100 (Causes Doubling)
• Double directory:
  – Increment global depth
  – Rehash bucket A
  – Increment local depth (why track local depth?)
[Figure: after the insert, global depth 3.]
  000 → Bucket A (local depth 3): 32*, 16*
  001, 101 → Bucket B (local depth 2): 1*, 5*, 21*, 13*
  010, 110 → Bucket C (local depth 2): 10*
  011, 111 → Bucket D (local depth 2): 15*, 7*, 19*
  100 → Bucket A2 (local depth 3, `split image' of Bucket A): 4*, 12*, 20*

Insert 9 = 1001 (No Doubling)
• Only split bucket:
  – Rehash bucket B
  – Increment local depth
[Figure: after the insert, global depth 3.]
  000 → Bucket A (local depth 3): 32*, 16*
  001 → Bucket B (local depth 3): 1*, 9*
  010, 110 → Bucket C (local depth 2): 10*
  011, 111 → Bucket D (local depth 2): 15*, 7*, 19*
  100 → Bucket A2 (local depth 3): 4*, 12*, 20*
  101 → Bucket B2 (local depth 3, split image of Bucket B): 5*, 21*, 13*

Points to Note
• Global depth of directory: Max # of bits needed to tell which bucket an entry belongs to.
• Local depth of a bucket: # of bits used to determine if an entry belongs to this bucket.
• When does bucket split cause directory doubling?
  – Before insert, bucket is full & local depth = global depth.
• Directory is doubled by copying it over and `fixing’ pointer to split image page.
  – You can do this only by using the least significant bits in the directory.

Directory Doubling
• Why use least significant bits in directory? Allows for doubling via copying!
[Figure: a directory of global depth 2 (00, 01, 10, 11) doubled to global depth 3 (000 .. 111), shown once with least significant bits and once with most significant bits.]

Comments on Extendible Hashing
• If directory fits in memory, equality search answered with one disk access; else two.
• Problem with extendible hashing:
  – If the distribution of hash values is skewed (concentrates on a few buckets), directory can grow large.
  – Can you come up with one insertion leading to multiple splits?
• Delete: If removal of data entry makes bucket empty, can be merged with `split image’. If each directory element points to same bucket as its split image, can halve directory.

Skewed data distribution (multiple splits)
• Assume each bucket holds one data entry
• Insert 2 (binary 10): how many splits?
• Insert 16 (binary 10000): how many splits?
[Figure: directory with global depth 1 (entries 0, 1) and buckets of local depth 1; data entries shown: 0*, 8*.]

Delete 10*
[Figure: before the delete, global depth 2.]
  Bucket A (local depth 2): 4*, 12*, 32*, 16*
  Bucket B (local depth 2): 1*, 5*, 21*, 13*
  Bucket C (local depth 2): 10*
  Bucket D (local depth 2): 15*, 7*, 19*
[Figure: after the delete, global depth 2.]
  Bucket A (local depth 1): 4*, 12*, 32*, 16*
  Bucket B (local depth 2): 1*, 5*, 21*, 13*
  Bucket B2 (local depth 2): 15*, 7*, 19*

Delete 15*, 7*, 19*
[Figure: before the deletes, global depth 2.]
  Bucket A (local depth 1): 4*, 12*, 32*, 16*
  Bucket B (local depth 2): 1*, 5*, 21*, 13*
  Bucket B2 (local depth 2): 15*, 7*, 19*
[Figure: after the deletes, global depth 2.]
  Bucket A (local depth 1): 4*, 12*, 32*, 16*
  Bucket B (local depth 1): 1*, 5*, 21*, 13*
[Figure: after halving the directory, global depth 1.]
  Bucket A (local depth 1): 4*, 12*, 32*, 16*
  Bucket B (local depth 1): 1*, 5*, 21*, 13*

Linear Hashing (LH)
• This is another dynamic hashing scheme, an alternative to Extendible Hashing.
  – LH fixes the problem of long overflow chains (in static hashing) without using a directory (in extendible hashing).
• Basic Idea: Use a family of hash functions h0, h1, h2, ...
  – Each function’s range is twice that of its predecessor.
  – Pages are split when overflows occur, but not necessarily the page with the overflow.
  – Splitting occurs in turn, in a round robin fashion.
  – When all the pages at one level (the current hash function) have been split, a new level is applied.
  – Splitting occurs gradually
  – Primary pages are allocated consecutively.

Levels of Linear Hashing
• Initial Stage.
  – The initial level distributes entries into N0 buckets.
  – Call the hash function that performs this h0.
• Splitting buckets.
  – If a bucket overflows, its primary page is chained to an overflow page (same as in static hashing).
  – Also, when a bucket overflows, some bucket is split.
    • The first bucket to be split is the first bucket in the file (not necessarily the bucket that overflows).
    • The next bucket to be split is the second bucket in the file … and so on until the Nth has been split.
    • When buckets are split, their entries (including those in overflow pages) are distributed using h1.
  – To access split buckets, the next level hash function (h1) is applied.
  – h1 maps entries to 2N0 (or N1) buckets.

Levels of Linear Hashing (Cnt)
• Level progression:
  – Once all Ni buckets of the current level (i) are split, the hash function hi is replaced by hi+1.
  – The splitting process starts again at the first bucket, and hi+2 is applied to find entries in split buckets.

Linear Hashing Example
[Figure: four buckets addressed by h0; next points to bucket 00.]
  00: 64, 36
  01: 1, 17, 5
  10: 6
  11: 31, 15
• Initially, the index level equals 0 and N0 equals 4 (three entries fit on a page).
• h0 maps index entries to one of four buckets.
• h0 is used and no buckets have been split.
• Now consider what happens when 9 (1001) is inserted (which will not fit in the second bucket).
• Note that next indicates which bucket is to split next. (Round Robin)

Linear Hashing Example 2
[Figure: five buckets after inserting 9; next points to the second bucket.]
  Bucket 0 (h1): 64
  Bucket 1 (h0): 1, 17, 5; overflow page: 9
  Bucket 2 (h0): 6
  Bucket 3 (h0): 31, 15
  Bucket 4 (h1): 36
• The page indicated by next is split (the first one).
• Next is incremented.
• An overflow page is chained to the primary page to contain the inserted value.
• Note that the split page is not necessarily the page that overflowed (round robin).
• If h0 maps a value to a bucket from zero to next – 1 (just the first page in this case), h1 must be used to insert the new entry.
• Note how the new page falls naturally into the sequence as the fifth page.

Linear Hashing
• Assume inserts of 8, 7, 18, 14, 111, 32, 162, 10, 13, 233
• After the 2nd. split the base level is 1 (N1 = 8), use h1.
• Subsequent splits will use h2 for inserts between the first bucket and next-1.
[Figure: bucket contents after the inserts, showing the successive positions of next (next1, next2, next3) and the hash function (h0 or h1) that applies to each bucket.]

LH Described as a Variant of EH
• Two schemes are similar:
  – Begin with an EH index where directory has N elements.
  – Use overflow pages, split buckets round-robin.
  – First split is at bucket 0. (Imagine directory being doubled at this point.) But elements <1,N+1>, <2,N+2>, ... are the same. So, need only create directory element N, which differs from 0, now.
    • When bucket 1 splits, create directory element N+1, etc.
• So, directory can double gradually. Also, primary bucket pages are created in order. If they are allocated in sequence too (so that finding i’th is easy), we actually don’t need a directory! Voila, LH.
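The round-robin splitting described in the Linear Hashing slides can be sketched as follows. This is a minimal in-memory illustration under stated assumptions: the names `LinearHash`, `n0`, `capacity`, and `_bucket_of` are hypothetical, h_i(key) is taken to be key mod (N0 · 2^i), each bucket is a single Python list standing in for a primary page plus its overflow chain, and a split is triggered by every insert that lands in an overflow page (other trigger policies are possible).

```python
class LinearHash:
    """Hypothetical sketch; assumes h_i(key) = key mod (n0 * 2**i)."""
    def __init__(self, n0=4, capacity=3):
        self.n0 = n0                # N0: number of buckets at level 0
        self.capacity = capacity    # entries that fit on a primary page
        self.level = 0              # current level i
        self.next = 0               # bucket to be split next
        # One list per bucket: primary page plus overflow entries.
        self.buckets = [[] for _ in range(n0)]

    def _h(self, level, key):
        return key % (self.n0 << level)

    def _bucket_of(self, key):
        b = self._h(self.level, key)
        if b < self.next:           # already split in this round:
            b = self._h(self.level + 1, key)   # use the next-level function
        return b

    def search(self, key):
        return key in self.buckets[self._bucket_of(key)]

    def insert(self, key):
        bucket = self.buckets[self._bucket_of(key)]
        bucket.append(key)
        if len(bucket) > self.capacity:   # entry went to an overflow page
            self._split()                 # split bucket `next`, not this one

    def _split(self):
        old = self.buckets[self.next]
        self.buckets[self.next] = []
        self.buckets.append([])     # new page lands at index next + n0 * 2**level
        for k in old:               # redistribute with the next-level function
            self.buckets[self._h(self.level + 1, k)].append(k)
        self.next += 1
        if self.next == self.n0 << self.level:  # every bucket of this level split
            self.level += 1
            self.next = 0


lh = LinearHash(n0=4, capacity=3)
for k in [64, 36, 1, 17, 5, 6, 31, 15]:
    lh.insert(k)
lh.insert(9)                        # overflows bucket 1, splits bucket 0
print(lh.buckets)                   # prints [[64], [1, 17, 5, 9], [6], [31, 15], [36]]
print(lh.next)                      # prints 1
```

Because new pages are always appended at the end of the file, the bucket number alone locates a page, so no directory is needed.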