Regression I

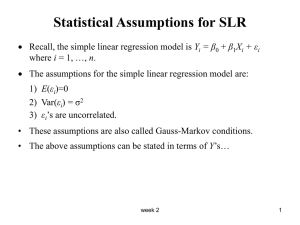

Regression I, Lecture 10

Both X and Y random (p. 66). Note that all formulas come out the same in the end. The only real difference is a philosophical one: correlation makes sense in this case. Correlation is an association between two random variables. This does not stop people from calculating it in other cases, though.

- Previously our x's were fixed.
- Correlation is, technically, an association between 2 random variables.
- Technically, a correlation between x and y when x is fixed is not strictly correct.

Multiple regression: the linear model in matrix notation (p. 82 ff.)

- Same matrix model as before.
- Population: $Y = X\beta + \varepsilon$
- Sample: $y = Xb + e$
- $b = (X'X)^{-1}X'y$
- What changes? X and b. With two predictors $x_1 = (x_{11}, \ldots, x_{1n})$ and $x_2 = (x_{21}, \ldots, x_{2n})$:

$$
y = \begin{pmatrix} y_1 \\ \vdots \\ y_n \end{pmatrix}, \quad
X = \begin{pmatrix} 1 & x_{11} & x_{21} \\ 1 & x_{12} & x_{22} \\ \vdots & \vdots & \vdots \\ 1 & x_{1n} & x_{2n} \end{pmatrix}, \quad
b = \begin{pmatrix} b_0 \\ b_1 \\ b_2 \end{pmatrix}
$$

- The cloud of data is in 3 dimensions.
- We cannot fit a line through 3 dimensions, but we can fit a plane.
- We talk about how far the points are from the fitted plane.
- There is an overall mean plane.
- SSE: how far the points are from the fitted plane.
- When you add more variables, you can't really graph past 3 dimensions.
- The plane is referred to as a hyperplane in higher dimensions.

What is not a linear model?

Interpretation of parameters (partial slopes, partial derivatives), three-space and more.

- $b_0$: where the plane intercepts the y axis.
- $b_1$: slope of the plane in the direction of the $x_1$ axis.
- Fix a value of $x_2$ and see how much y changes when you increase $x_1$ by one unit (for a flat surface).
- $b_1$ is a partial slope for $x_1$.
- $b_2$ is a partial slope for $x_2$.
- This is different for curved surfaces.
- We deal with partial derivatives then.

Some of the material in Chapter 3 can now be read as a review of what has already been discussed in lecture.

$R = (X'X)^{-1}X'$ (mistake in the book on page 91)

- $b = Ry$

Gauss-Markov Theorem: Given a linear model $Y = X\beta + \varepsilon$, if $E(\varepsilon) = 0$ and $\mathrm{Var}(\varepsilon) = \sigma^2 I$, then b is the Best Linear Unbiased Estimator (BLUE) of β, where "best" means that it has the minimum variance in the class of all linear unbiased estimators.
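
The "unbiased" half of this statement, and the variance that "best" refers to, can be worked out in two lines from b = Ry. This is a sketch, not the lecture's derivation: it assumes X is treated as fixed (or that everything is conditional on X) and that X'X is invertible.

$$
\begin{aligned}
E(b) &= E(Ry) = R\,E(X\beta + \varepsilon) = RX\beta + R\,E(\varepsilon) = (X'X)^{-1}X'X\beta = \beta, \\
\mathrm{Var}(b) &= R\,\mathrm{Var}(y)\,R' = R\,(\sigma^2 I)\,R' = \sigma^2 (X'X)^{-1}X'X(X'X)^{-1} = \sigma^2 (X'X)^{-1}.
\end{aligned}
$$

Any other linear unbiased estimator can be written as Cy with CX = I, and its variance is σ²CC'. The theorem says that CC' − (X'X)⁻¹ is positive semidefinite, so no such estimator has a smaller variance than b.
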
- Most famous theorem in regression.
- Gauss: famous mathematician.
- Associated with the normal distribution, which is also called the Gaussian distribution.
- $\mathrm{Var}(\varepsilon) = \sigma^2 I$: on the diagonal, all of the errors have the same variance.
- Off the diagonal we have the covariances. Since they are zero, the errors are uncorrelated. The errors are treated as iid (independent and identically distributed).
- ε ~ iid(0, σ²), not assuming any distribution.
- E(b) = β: the unbiased part of BLUE.
- Best means it has the minimum variance.
- b is the solution we get by applying least squares, but other methods can be applied to obtain an estimate of β.
- Why is it a good thing to have minimum variance? The smaller the variance, the more often you are close to the truth.

UMVUE Theorem: If, in addition to the conditions of the Gauss-Markov Theorem, the errors are normally distributed, then b is the Uniformly Minimum Variance Unbiased Estimator (UMVUE) of β, which means it has the minimum variance in the class of all unbiased estimators.

- Similar to Gauss-Markov (same conditions).
- Add the condition that the errors are normally distributed: ε ~ iid N(0, σ²).
- b is the uniformly minimum variance unbiased estimator of β.
- This theorem takes out "linear" compared to Gauss-Markov.
- Uniformly means it has the lowest variance among all unbiased estimators, no matter what function of the data they are, and this holds whatever the true values of β and σ² are.
- Gauss-Markov only compares b against linear estimators.
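
The matrix formulas in these notes can be checked numerically. The following is a minimal sketch and not part of the lecture: the data, the weights, and the helper names `least_squares` and `estimator_matrix` are hypothetical, chosen only for illustration. It computes b = (X'X)⁻¹X'y for two predictors, reads b1 as a partial slope, and compares the variance of b with that of one other linear unbiased estimator (a weighted least squares fit with arbitrary weights) under Var(ε) = σ²I.

```python
import numpy as np


def least_squares(X, y):
    """Return b = (X'X)^{-1} X'y by solving the normal equations."""
    return np.linalg.solve(X.T @ X, X.T @ y)


def estimator_matrix(X, weights=None):
    """Return the matrix M for which the estimate of beta is M @ y.

    weights=None gives R = (X'X)^{-1} X' (ordinary least squares).
    A weight vector w gives (X'WX)^{-1} X'W with W = diag(w), which is
    another linear unbiased estimator, used here only for comparison.
    """
    if weights is None:
        return np.linalg.solve(X.T @ X, X.T)
    XtW = X.T * weights  # scales column i of X' by w_i, i.e. X' @ diag(w)
    return np.linalg.solve(XtW @ X, XtW)


# Hypothetical data: n = 6 observations on two predictors.
x1 = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
x2 = np.array([2.0, 1.0, 4.0, 3.0, 6.0, 5.0])
X = np.column_stack([np.ones_like(x1), x1, x2])  # columns: 1, x1, x2

# Noise-free responses lying exactly on the plane y = 1 + 2*x1 - 0.5*x2,
# so least squares should recover these coefficients up to rounding.
beta = np.array([1.0, 2.0, -0.5])
y = X @ beta

b = least_squares(X, y)
R = estimator_matrix(X)  # b can also be written as R @ y

# Partial slope: hold x2 fixed at 2 and raise x1 from 3 to 4.
change_in_fit = np.array([1.0, 4.0, 2.0]) @ b - np.array([1.0, 3.0, 2.0]) @ b

# A competing linear unbiased estimator with arbitrary (assumed) weights.
weights = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
R_w = estimator_matrix(X, weights)

# With Var(eps) = sigma^2 I, an estimator M @ y has variance sigma^2 M M'.
# Taking sigma^2 = 1, compare the two variance matrices.
var_ols = R @ R.T
var_w = R_w @ R_w.T
smallest_eig = np.linalg.eigvalsh(var_w - var_ols).min()

print("b =", b)
print("R @ y =", R @ y)
print("change in fit for +1 in x1:", change_in_fit)
print("OLS variances:     ", np.diag(var_ols))
print("weighted variances:", np.diag(var_w))
print("smallest eigenvalue of difference:", smallest_eig)
```

Both estimator matrices satisfy MX = I, which is what makes them unbiased. The difference `var_w - var_ols` should have no negative eigenvalues beyond rounding error, which is the Gauss-Markov statement for this one competitor. It is an illustration with a single alternative estimator, not a proof.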