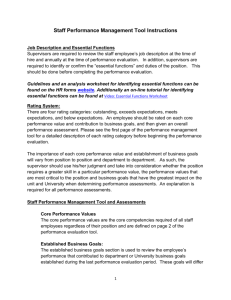

Teacher Evaluation & Music Education


+ Strategies for Assessing Student Growth in the Ensemble Setting
Teacher Evaluation & Music Education: What You Need to Know

+ Introduction
Phillip Hash, Calvin College

+ Session Overview
• Michigan Laws
• Measurement Tools
• Scheduling & Implementation
• Questions

+ Legislative Review: Talking Points
• All Teachers Evaluated Annually
• Percentage of Evaluation to Relate to Student Growth
• National, State, and Local Assessments
• Evaluations vs. Seniority in Personnel Decisions
• Michigan Council on Educator Effectiveness

+ Pilot Programs
• 2012-13 Pilot
  • 14 districts
  • 4 evaluation models
  • Standardized tests
  • Local measures for non-tested subjects
• Recommendations by 2013-14 school year
• Urgency for Measures of Student Growth

+ Frameworks, Methods, Systems Used as part of Local Evaluation
[Bar chart: Evaluation Methods]

+ Effectiveness Ratings & Percentage of Student Growth
[Bar chart: % of Growth in Local Evaluation Systems, by Effectiveness Ratings]

+ Current Trends: Effectiveness Ratings for 2011-12
Effectiveness Rating:
• Ineffective: 1%
• Minimally Effective: 2%
• Effective: 74%
• Highly Effective: 23%

+ Teacher Ratings & Student Growth
[Bar chart: Teacher Ratings & Student Growth. Categories: Ineffective, Minimally Effective, Effective, Highly Effective. Series: < 10%, 11-40%, > 40%]

+ Strategies
Mitch Robinson, Michigan State University

+ Forms of Alternative Assessment
• Performance-Based Assessment
• Student Auditions
• Solo/Ensemble Festivals
• Critiques of Student Compositions
• Coaching Jazz Improvisation
• Playing Checks
• Student Writing

+ Rating Scales & Rubrics
Rating Scales (Criteria-Specific)
Two Types
• Continuous Rating Scales
• Additive Rating Scales
Rubrics*
Should include:
• Points that are equidistant
• Four or more rating points
• Descriptors that are valid and reliable
*From: K. Dirth, Instituting Portfolio Assessment in Performing Ensembles, NYSSMA Winter Conference, Dec. 3, 1997.

+ Rating Scales
Should be:
• Criteria-specific
• Objective
• Easy to use
• Clear

+ Sample Rating Scale
National Standard #7: Evaluating music and music performances.

+ What Does A Rubric Look Like?
• Beginning: Breathy; Unclear; Lacks focus; Unsupported
• Basic: Inconsistent; Beginning to be centered and clear; Breath support needs improvement
• Proficient: Consistent breath support; Centered and clear; Beginning to be resonant
• Advanced: Resonant; Centered; Vibrant; Projecting
Features:
• Scale includes (preferably) 4 rating points
• Points of the scale are equidistant on a continuum
• Highest point represents exemplary performance
• Descriptors are provided for each level of student performance
Adapted from: K. Dirth, Instituting Portfolio Assessment in Performing Ensembles, NYSSMA Winter Conference, Dec. 2, 1997.

+ Rubrics (cont.)
• Types include:
  • Holistic (overall performance)
  • Analytic (specific dimensions of performance)
  • Both necessary for student assessment
• Descriptors must be valid (meaningful)
• Scores
  • Must be reliable (consistent)
  • Should relate to actual levels of student learning
• Can be used by students for self-assessment and to assess the performance of other students

+ Creating a Rubric – Why Bother?
• Helps plan activities
• Focuses your objectives
• Aids in evaluation and grading
• Improves instruction
• Provides specific feedback to students

+ The Morning After...
• Focuses student listening
• Guides students to attend to musical aspects of performance
• Can be done in groups
• Encourages comparison and contrast judgments

+ Journal Keeping
• Stenographer’s notebooks work best
• Younger students need more directed writing assignments
• Try to avoid the “pizza & pop” syndrome
• Teacher feedback is essential

+ Implementation
Abby Butler, Wayne State University

+ Planning to Assess
• What aspects of student learning do you want to measure?*
  • Skills
  • Knowledge
  • Understanding
• Decide which measurement tools are best suited for the outcomes to be measured
• Obtain or develop measurement tools
*Consult the Michigan Merit Curriculum, available online at MDOE.

+ Planning for Assessment
• Build assessment into rehearsals
  • Develop activities as a context for measuring skills or knowledge
  • Include these activities in your lesson plans
• Develop and use simple procedures for recording assessments
  • Laminated seating charts
  • Electronic gadgets (iPads, tablets, smart phones, desk computer)
  • Plan ahead where and how this data will be stored (filing system)

+ Schedule Assessments
• Measure each of the identified goals several times throughout the year
  • Baseline measurements
  • Intermediate measurements (formative)
  • Measurement at the end
• Make the following decisions before the school year begins:
  • Who will be assessed
  • How often assessments will occur
  • When assessments will occur
• Build these assessments into your year-long plan

+ Working with your Data
• Decide how assessments will be recorded
  • Numbers?
  • Descriptive words?
• Decide how you will report the results
  • Percentage scores with differences between beginning and end of year assessments?
  • Percentage of students moving from one competency level to the next?
  • Graphic charts, spreadsheets?
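
One of the reporting options above, the percentage of students moving from one competency level to the next, can be worked out with a few lines of code once ratings are recorded per student. The following is only a rough sketch under stated assumptions: the student IDs and ratings are made up for illustration, the level names are borrowed from the rubric example earlier in this deck, and the function names `level_distribution` and `percent_moved_up` are hypothetical rather than part of any tool the presenters mention.

```python
from collections import Counter

# Ordered competency levels (assumed; taken from the rubric example in this deck).
LEVELS = ["Beginning", "Basic", "Proficient", "Advanced"]


def level_distribution(ratings):
    """Return the percentage of students at each level, rounded to one decimal."""
    ratings = list(ratings)
    counts = Counter(ratings)
    total = len(ratings)
    return {level: round(100 * counts[level] / total, 1) for level in LEVELS}


def percent_moved_up(first, last):
    """Percentage of students whose last rating is above their first rating."""
    moved = sum(
        1
        for student in first
        if LEVELS.index(last[student]) > LEVELS.index(first[student])
    )
    return round(100 * moved / len(first), 1)


# Hypothetical ratings for five students, keyed by made-up IDs.
first_period = {
    "s1": "Beginning",
    "s2": "Beginning",
    "s3": "Basic",
    "s4": "Proficient",
    "s5": "Basic",
}
last_period = {
    "s1": "Basic",
    "s2": "Beginning",
    "s3": "Proficient",
    "s4": "Proficient",
    "s5": "Advanced",
}

print(level_distribution(first_period.values()))
print(level_distribution(last_period.values()))
print(percent_moved_up(first_period, last_period))  # 60.0 for this made-up data
```

The same per-level percentages could just as easily be kept in a spreadsheet; the point is only that recording one rating per student per marking period is enough to produce both kinds of report.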

+ Ex: Comparison of Sight Reading Competency by Ensemble

Ensemble       Marking Period    B      D      P      A
Beginning      First             55%    30%    15%    0%
Beginning      Last              30%    50%    15%    5%
Intermediate   First             25%    50%    15%    10%
Intermediate   Last              15%    40%    30%    15%
Advanced       First             0%     20%    65%    15%
Advanced       Last              0%     10%    70%    20%

Key: B (Basic) – D (Developing) – P (Proficient) – A (Advanced)

+ Tips for Starting Out
• Develop measurement tools over the summer
• Start small by limiting
  • The number of students, grade levels, or ensembles assessed
  • The number & frequency of assessments
• Build assessment into your lesson activities
• Simplify recording tasks
• Choose a system for assigning scores that is easy to average

+ Conclusion
Phillip Hash, Calvin College

+ Resources
• MCEE website: http://www.mcede.org/
• www.pmhmusic.weebly.com
  • Legislative Summary
  • Policy Briefs
  • NAfME and MISMTE position statements
  • MI GLCE - Music
  • Sample assessments in use today
  • This PPT
• Please send examples of your assessments to pmh3@calvin.edu
• Ottawa ISD – Feb. 14, 3:30pm

+ Responsibilities & Considerations
• Design, administer, and evaluate assessments
  • Must be Quantitative
  • Rubistar4teachers.org
  • Same or very similar for every music teacher
  • Valid
  • Reliable (consistent)
• Integrity of Process
  • Transparency
  • Record performance tests (Vocaroo.com)
• Regular music staff meetings
  • Review assessments
  • Discuss/resolve issues

+ Questions for the Panel