14_Scaleabilty_WICS

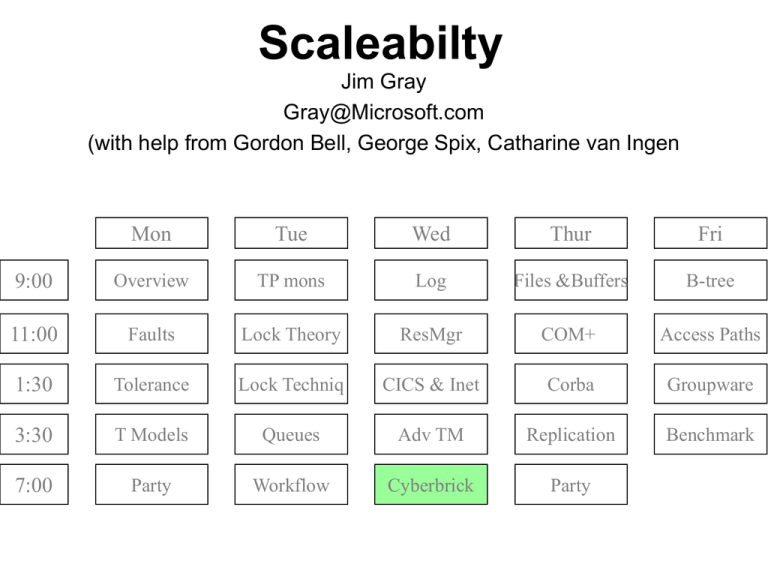

Scaleability
Jim Gray
Gray@Microsoft.com
(with help from Gordon Bell, George Spix, Catharine van Ingen)

        Mon        Tue           Wed          Thur             Fri
9:00    Overview   TP mons       Log          Files & Buffers  B-tree
11:00   Faults     Lock Theory   ResMgr       COM+             Access Paths
1:30    Tolerance  Lock Techniq  CICS & Inet  Corba            Groupware
3:30    T Models   Queues        Adv TM       Replication      Benchmark
7:00    Party      Workflow      Cyberbrick   Party

A peta-op business app?
• P&G and friends pay for the web (like they paid for broadcast television) – no new money, but given Moore's law, traditional advertising revenues can pay for all of our connectivity: voice, video, data… (presuming we figure out how to, and allow them to, brand the experience.)
• Advertisers pay for impressions and the ability to analyze same.
• A terabyte sort a minute – to one a second.
• Bisection bw of ~20 GBytes/s – to ~200 GBytes/s.
• Really a tera-op business app (today’s portals)

Scaleability: Scale Up and Scale Out
• Grow up with SMP: 4xP6 is now standard
• Grow out with cluster: cluster has inexpensive parts
[Diagram labels: Personal System, Departmental Server, SMP Super Server, Cluster of PCs]

There'll Be Billions (Trillions) of Clients
• Every device will be “intelligent”
• Doors, rooms, cars…
• Computing will be ubiquitous

Billions (Trillions) of Clients Need Millions (Billions) of Servers
• All clients networked to servers
– May be nomadic or on-demand
• Fast clients want faster servers
• Servers provide
– Shared data
– Control
– Coordination
– Communication
[Diagram labels: Clients (mobile clients, fixed clients); Servers (server, super server)]

Thesis: Many little beat few big
[Figure: "Smoking, hairy golf ball". Price axis: $1 million, $100 K, $10 K. Machine classes: Mainframe, Mini, Micro, Nano, Pico Processor (1 mm³). Disc form factors: 14", 9", 5.25", 3.5", 2.5", 1.8". Storage levels: 10 pico-second ram (1 MB), 10 nano-second ram (100 MB), 10 microsecond ram (10 GB), 10 millisecond disc (1 TB), 10 second tape archive (100 TB).]
• How to connect the many little parts?
• How to program the many little parts?
• Fault tolerance & management?
• 1 M SPECmarks, 1 TFLOP
• 10^6 clocks to bulk ram
• Event-horizon on chip
• VM reincarnated
• Multi-program cache, on-chip SMP

4 B PC’s (1 Bips, .1 GB dram, 10 GB disk, 1 Gbps net, B = G): The Bricks of Cyberspace
• Cost 1,000 $
• Come with
– NT
– DBMS
– High speed Net
– System management
– GUI / OOUI
– Tools
• Compatible with everyone else
• CyberBricks

Computers shrink to a point
[Scale: Kilo, Mega, Giga, Tera, Peta, Exa, Zetta, Yotta]
• Disks 100x in 10 years: 2 TB 3.5” drive
• Shrink to 1” is 200 GB
• Disk is super computer!
• This is already true of printers and “terminals”

Super Server: 4T Machine
• Array of 1,000 4B machines
– 1 Bips processors
– 1 BB DRAM
– 10 BB disks
– 1 Bbps comm lines
– 1 TB tape robot
• A few megabucks
• Challenge:
– Manageability
– Programmability
– Security
– Availability
– Scaleability
– Affordability
• As easy as a single system
[Diagram: Cyber Brick, a 4B machine: CPU, 50 GB disc, 5 GB RAM]
Future servers are CLUSTERS of processors, discs. Distributed database techniques make clusters work.

Cluster Vision: Buying Computers by the Slice
• Rack & Stack
– Mail-order components
– Plug them into the cluster
• Modular growth without limits
– Grow by adding small modules
• Fault tolerance:
– Spare modules mask failures
• Parallel execution & data search
– Use multiple processors and disks
• Clients and servers made from the same stuff
– Inexpensive: built with commodity CyberBricks

Systems 30 Years Ago
• MegaBuck per Mega Instruction Per Second (mips)
• MegaBuck per MegaByte
• Sys Admin & Data Admin per MegaBuck

Disks of 30 Years Ago
• 10 MB
• Failed every few weeks

1988: IBM DB2 + CICS Mainframe, 65 tps
• IBM 4391
• Simulated network of 800 clients
• 2 M$ computer
• Staff of 6 to do benchmark
[Picture labels: 2 x 3725 network controllers; refrigerator-sized CPU; 16 GB disk farm (4 x 8 x .5 GB)]

1987: Tandem Mini @ 256 tps
• 14 M$ computer (Tandem)
• A dozen people (1.8 M$/y)
• False floor, 2 rooms of machines
[Picture labels: 32 node processor array; simulate 25,600 clients; 40 GB disk array (80 drives). Staff: admin expert, performance expert, hardware experts, network expert, DB expert, OS expert, auditor, manager]

1997: 9 years later, 1 Person and 1 box = 1250 tps
• 1 Breadbox ~ 5x 1987 machine room
• 23 GB is hand-held
• One person does all the work
• Cost/tps is 100,000x less: 5 micro dollars per transaction
[Picture labels: one person is hardware expert, OS expert, net expert, DB expert, app expert. Box: 4 x 200 MHz cpu, 1/2 GB DRAM, 12 x 4 GB disk, 3 x 7 x 4 GB disk arrays]

What Happened? Where did the 100,000x come from?
• Moore’s law: 100X (at most)
• Software improvements: 10X (at most)
• Commodity Pricing: 100X (at least)
• Total 100,000X
• 100x from commodity
– DBMS was 100 K$ to start: now 1 k$ to start
– IBM 390 MIPS is 7.5 K$ today
– Intel MIPS is 10$ today
– Commodity disk is 50$/GB vs 1,500$/GB
– ...

Web & server farms, server consolidation / sqft
http://www.exodus.com (charges by mbps times sqft)

Per sqft    SGI O2K   UE10K   DELL 6350   Cray T3E   IBM SP2   PoPC
cpus        2.1       4.7     7.0         4.7        5.0       13.3
specint     29.0      60.5    132.7       79.3       72.3      253.3
ram         4.1       4.7     7.0         0.6        5.0       6.8
disks       1.3       0.5     5.2         0.0        2.5       13.3

• Standard package, full height, fully populated, 3.5” disks
• HP, DELL, Compaq are trading places wrt rack mount lead
• PoPC – Celeron NLX shoeboxes – 1000 nodes in 48 (24x2) sq ft. $650K from Arrow (3yr warranty!) on chip at speed L2 gb

Application Taxonomy
• Technical
– General purpose, non-parallelizable codes: PCs have it!
– Vectorizable
– Vectorizable & //able (Supers & small DSMs)
– Hand tuned, one-of
– MPP coarse grain
– MPP embarrassingly // (Clusters of PCs)
• Commercial: if central control & rich then IBM or large SMPs, else PC Clusters
– Database
– Database/TP
– Web Host
– Stream Audio/Video

10x every 5 years, 100x every 10 (1000x in 20 if SC)
Except: memory & IO bandwidth

Peta scale w/ traditional balance
                               2000                      2010
1 PIPS processors (10^15 ips)  10^6 cpus @ 10^9 ips      10^4 cpus @ 10^11 ips
10 PB of DRAM                  10^8 chips @ 10^7 bytes   10^6 chips @ 10^9 bytes
10 PBps memory bandwidth
1 PBps IO bandwidth            10^8 disks @ 10^7 Bps     10^7 disks @ 10^8 Bps
100 PB of disk storage         10^5 disks @ 10^10 B      10^3 disks @ 10^12 B
10 EB of tape storage          10^7 tapes @ 10^10 B      10^5 tapes @ 10^12 B

“I think there is a world market for maybe five computers.”
Thomas Watson Senior, Chairman of IBM, 1943

Microsoft.com: ~150x4 nodes: a crowd
[Network diagram of the Microsoft.com server farm. Sites: Building 11, MOSWest, Japan Data Center, European Data Center. Networks: FDDI rings MIS1 to MIS4, primary and secondary Gigaswitch, switched Ethernet, routers, 13 DS3 (45 Mb/sec each), 2 OC3, 2 Ethernet (100 Mb/sec each). Server groups, with node counts per ring: www, home, premium, register, search, support, cdm, activex and FTP at microsoft.com; msid.msn.com and register.msn.com; HTTP and FTP download servers; live SQL servers, SQL consolidators, SQL reporting, SQLNet feeder LAN, admin LAN; internal WWW, replication, DMZ and IDC staging servers. Typical configuration: 4xP6 (some 4xP5), 256 or 512 RAM (one group 1 GB RAM), 12 to 160 GB HD. Average cost per node: $25K to $83K.]

HotMail (a year ago): ~400 Computers Crowd (now 2x bigger)

DB Clusters (crowds)
• 16-node Cluster
– 64 cpus
– 2 TB of disk
– Decision support
• 45-node Cluster
– 140 cpus
– 14 GB DRAM
– 4 TB RAID disk
– OLTP (Debit Credit)
• 1 B tpd (14 k tps)

The Microsoft TerraServer Hardware
• Compaq AlphaServer 8400
• 8 x 400 MHz Alpha cpus
• 10 GB DRAM
• 324 9.2 GB StorageWorks Disks
– 3 TB raw, 2.4 TB of RAID5
• STK 9710 tape robot (4 TB)
• WindowsNT 4 EE, SQL Server 7.0

TerraServer: Lots of Web Hits

             Total     Average   Peak
Hits         1,065 m   8.1 m     29 m
Queries      877 m     6.7 m     18 m
Images       742 m     5.6 m     15 m
Page Views   170 m     1.3 m     6.6 m
Users        6.4 m     48 k      76 k
Sessions     10 m      77 k      125 k

[Chart: count vs date; series Sessions, Hit, Page View, DB Query, Image]
• A billion web hits!
• 1 TB, largest SQL DB on the Web
• 100 Qps average, 1,000 Qps peak
• 877 M SQL queries so far

TerraServer Availability
• Operating for 13 months
• Unscheduled outage: 2.9 hrs
• Scheduled outage: 2.0 hrs (software upgrades)
• Availability: 99.93% overall up
• No NT failures (ever)
• One SQL7 Beta2 bug
• One major operator-assisted outage

Backup / Restore
• Configuration
– StorageTek TimberWolf 9710
– DEC StorageWorks UltraSCSI Raid-5 Array
– Legato Networker PowerEdition 4.4a
– Windows NT Server Enterprise Edition 4.0
• Performance

Data Bytes Backed Up            1.2 TB
Total Time                      7.25 Hours
Number of Tapes Consumed        27 tapes
Total Tape Drives               10 drives
Data Throughput                 168 GB/Hour
Average Throughput Per Device   16.8 GB/Hour
Average Throughput Per Device   4.97 MB/Sec
NTFS Logical Volumes            2

Windows NT Versus UNIX: Best Results on an SMP
• SemiLog plot shows 3x (~2 year) lead by UNIX
• Does not show Oracle/Alpha Cluster at 100,000 tpmC
• All these numbers are off-scale huge (40,000 active users?)
[Charts: tpmC vs time, Jan-95 to Jan-00, on linear and semi-log scales; series Unix and NT]

TPC C Improvements (MS SQL)
• 250%/year on price
• 100%/year on performance
• Bottleneck is 3 GB address space
• 40% hardware, 100% software, 100% PC technology
[Charts: $/tpmC vs time and tpmC vs time, Jan-94 to Dec-98, log scale]

UNIX (dis) Economy Of Scale
• Two different pricing regimes
• These are late 1998 prices
[Chart: "Bang for the Buck", tpmC/k$ vs tpmC (0 to 60,000); series MS SQL Server, Sybase, Oracle, Informix]

TPC Price/tpmC
• Sequent/Oracle: 89 k tpmC @ 170$/tpmC
• Sun Oracle: 52 k tpmC @ 134$/tpmC
• HP+NT4+MS SQL: 16.2 k tpmC @ 33$/tpmC
[Bar chart: price per tpmC for the three systems, broken down into processor, disk, software, net, and total/10]

Storage Latency: How far away is the data?

Level                 Clock ticks   How far away   Time
Registers             1             My Head        1 min
On Chip Cache         2             This Room
On Board Cache        10            This Resort    10 min
Memory                100           Los Angeles    1.5 hr
Disk                  10^6          Pluto          2 Years
Tape /Optical Robot   10^9          Andromeda      2,000 Years

Thesis: Performance = Storage Accesses, not Instructions Executed
• In the “old days” we counted instructions and IO’s
• Now we count memory references
• Processors wait most of the time
[Chart: "Where the time goes: clock ticks used by AlphaSort components"; segments Sort, Disc Wait, OS, Memory Wait, B-Cache Data Miss, I-Cache Miss, D-Cache Miss]

Storage Hierarchy (10 levels)
• Registers, Cache L1, L2
• Main (1, 2, 3 if NUMA).
• Disk (1 (cached), 2)
• Tape (1 (mounted), 2)

Today’s Storage Hierarchy: Speed & Capacity vs Cost Tradeoffs
[Charts: "Size vs Speed" (typical system bytes vs access time in seconds) and "Price vs Speed" ($/MB vs access time in seconds); points for Cache, Main, Secondary (Disc), Online Tape, Nearline Tape, Offline Tape]

Meta-Message: Technology Ratios Are Important
• If everything gets faster & cheaper at the same rate THEN nothing really changes.
• Things getting MUCH BETTER:
– communication speed & cost 1,000x
– processor speed & cost 100x
– storage size & cost 100x
• Things staying about the same
– speed of light (more or less constant)
– people (10x more expensive)
– storage speed (only 10x better)

Storage Ratios Changed
• 10x better access time
• 10x more bandwidth
• 4,000x lower media price
• DRAM/DISK 100:1 to 10:10 to 50:1
[Charts, 1980 to 2000: "Disk Performance vs Time" (access time in ms, bandwidth in MB/s); "Disk Performance vs Time (accesses/second & capacity)" (accesses per second, disk capacity in GB); "Storage Price vs Time" ($/MB)]

The Pico Processor
• 1 mm³
• 1 M SPECmarks
[Figure: Pico Processor storage levels: 10 pico-second ram (megabyte), 10 nano-second ram (10 gigabyte), 10 microsecond ram (1 terabyte), 10 millisecond disc (100 terabyte), 10 second tape archive (100 petabyte)]
• Terror Bytes!
• 10^6 clocks/fault to bulk ram
• Event-horizon on chip.
• VM reincarnated
• Multi-program cache

Bottleneck Analysis
• Drawn to linear scale
[Diagram labels: Theoretical Bus Bandwidth 422 MBps = 66 MHz x 64 bits; Memory Read/Write ~150 MBps; MemCopy ~50 MBps; Disk R/W ~9 MBps]

Bottleneck Analysis
• NTFS Read/Write
• 18 Ultra 3 SCSI on 4 strings (2x4 and 2x5), 3 PCI 64
• ~155 MBps unbuffered read (175 raw)
• ~95 MBps unbuffered write
• Good, but 10x down from our UNIX brethren (SGI, SUN)
[Diagram labels: Memory Read/Write ~250 MBps; PCI ~110 MBps; Adapter ~70 MBps]

PennySort
• Hardware
– 266 MHz Intel PPro
– 64 MB SDRAM (10 ns)
– Dual Fujitsu DMA 3.2 GB EIDE disks
• Software
– NT workstation 4.3
– NT 5 sort
• Performance
– sort 15 M 100-byte records (~1.5 GB)
– Disk to disk
– elapsed time 820 sec
• cpu time = 404 sec
[Pie chart: PennySort Machine (1107$): cpu 32%, Disk 25%, board 13%, Memory 8%, Other 22% (Network, Video, floppy 9%; Cabinet + Assembly 7%; Software 6%)]

Penny Sort Ground Rules
http://research.microsoft.com/barc/SortBenchmark
• How much can you sort for a penny.
– Hardware and Software cost
– Depreciated over 3 years
– 1 M$ system gets about 1 second,
– 1 K$ system gets about 1,000 seconds.
– Time (seconds) = 946,080 / SystemPrice ($)
• Input and output are disk resident
• Input is
– 100-byte records (random data)
– key is first 10 bytes.
• Must create output file and fill with sorted version of input file.
• Daytona (product) and Indy (special) categories

How Good is NT5 Sort?
• CPU and IO not overlapped.
• System should be able to sort 2x more
• RAM has spare capacity
• Disk is space saturated (1.5 GB in, 1.5 GB out on 3 GB drive.) Need an extra 3 GB drive or a >6 GB drive
[Chart labels: Fixed, CPU, Disk, ram]

Sandia/Compaq/ServerNet/NT Sort
• Sort 1.1 Terabyte (13 Billion records) in 47 minutes
• 68 nodes (dual 450 MHz processors), 543 disks, 1.5 M$
• 1.2 GBps network rap (2.8 GBps pap)
• 5.2 GBps of disk rap (same as pap)
• (rap = real application performance, pap = peak advertised performance)
[Diagram: each node is a Compaq Proliant 1850R Server with 2 400 MHz CPUs, 512 MB SDRAM, PCI bus, ServerNet I dual-ported PCI NIC, and 4 SCSI busses, each with 2 data disks; it connects to the X fabric and to the Y fabric through 6-port ServerNet I crossbar switches. "The 72-Node 48-Switch ServerNet-I Topology Deployed at Sandia National Labs": X fabric has 10 bidirectional bisection links, Y fabric has 14; each switch on the bisection line adds 3 links to bisection width.]

SP sort
• 2 – 4 GBps!
[Chart: GB/s (0 to 4.0) vs elapsed time (0 to 900 seconds); series GPFS read, GPFS write, Local read, Local write]
• 488 nodes, 55 racks: 432 compute nodes in 37 racks, 56 storage nodes in 18 racks
• 1952 processors, 732 GB RAM, 2168 disks
• 56 storage nodes manage 1680 4 GB disks: 336 4+P twin tail RAID5 arrays (30/node)
• Compute rack: 16 nodes, each has 4 x 332 MHz PowerPC604e, 1.5 GB RAM, 1 32x33 PCI bus, 9 GB scsi disk, 150 MBps full duplex SP switch
• Storage rack: 8 nodes, each has 4 x 332 MHz PowerPC604e, 1.5 GB RAM, 3 32x33 PCI bus, 30 x 4 GB scsi disk (4+1 RAID5), 150 MBps full duplex SP switch

Progress on Sorting: NT now leads both price and performance
• Speedup comes from Moore’s law: 40%/year
• Processor/Disk/Network arrays: 60%/year (this is a software speedup).
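
Two pieces of arithmetic from the sorting slides above can be checked in a few lines. This is only a sketch: the function names are made up for illustration, and the only inputs are the figures stated on the slides (3-year depreciation, the 1107$ PennySort machine, 40%/year and 60%/year speedups).

```python
SECONDS_IN_3_YEARS = 3 * 365 * 24 * 60 * 60  # 94,608,000 seconds


def penny_seconds(system_price_dollars: float) -> float:
    """Seconds of machine time that one cent buys under 3-year depreciation."""
    price_in_cents = system_price_dollars * 100
    return SECONDS_IN_3_YEARS / price_in_cents  # = 946,080 / price in dollars


def combined_yearly_speedup(*yearly_gains: float) -> float:
    """Multiply independent yearly gains, e.g. 0.40 and 0.60 -> 1.4 * 1.6."""
    factor = 1.0
    for gain in yearly_gains:
        factor *= 1.0 + gain
    return factor


if __name__ == "__main__":
    for price in (1_000_000, 1_000, 1_107):
        print(f"{price:>9,}$ system: {penny_seconds(price):8.1f} s per penny")
    print(f"hardware x software: {combined_yearly_speedup(0.40, 0.60):.2f}x per year")
```

Under these rules the 1107$ machine gets roughly 855 seconds, so the 820 second sort fits inside one penny, and 1.4 x 1.6 = 2.24 is in line with throughput doubling every year.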
[Charts, 1985 to 2000, log scale: "Sort Records/second vs Time" (records sorted per second doubles every year) and "GB Sorted per Dollar" (doubles every year). Labeled points: Bitton M68000, Tandem, Kitsuregawa Hardware Sorter, Intel HyperCube, Sequent, Cray YMP, IBM 3090, IBM RS6000, Alpha, NOW, Ordinal+SGI, Compaq/NT, NT/PennySort, Penny NT sort, Sandia/Compaq/NT, SPsort, SPsort/IB]

Recent Results
• NOW Sort: 9 GB on a cluster of 100 UltraSparcs in 1 minute
• MilleniumSort: 16x Dell NT cluster: 100 MB in 1.18 Sec (Datamation)
• Tandem/Sandia Sort: 68 CPU ServerNet, 1 TB in 47 minutes
• IBM SPsort: 488 nodes, 1952 cpu, 2168 disks, 17.6 minutes = 1057 sec (all for 1/3 of 94 M$; slice price is 64 k$ for 4 cpu, 2 GB ram, 6 9 GB disks + interconnect)

Data Gravity: Processing Moves to Transducers
• Move Processing to data sources
• Move to where the power (and sheet metal) is
• Processor in
– Modem
– Display
– Microphones (speech recognition) & cameras (vision)
– Storage: Data storage and analysis
• System is “distributed” (a cluster/mob)

SAN: Standard Interconnect
[Diagram labels: Gbps SAN: 110 MBps; PCI: 70 MBps; UW scsi: 40 MBps; FW scsi: 20 MBps; scsi: 5 MBps]
• LAN faster than memory bus?
• 1 GBps links in lab.
• 100$ port cost soon
• Port is computer
• Winsock: 110 MBps (10% cpu utilization at each end)

Disk = Node
• has magnetic storage (100 GB?)
• has processor & DRAM
• has SAN attachment
• has execution environment
[Stack diagram: Applications; Services; DBMS; RPC, ...; File System; SAN driver; Disk driver; OS Kernel]

Standard Storage Metrics
• Capacity:
– RAM: MB and $/MB: today at 10 MB & 100$/MB
– Disk: GB and $/GB: today at 10 GB and 200$/GB
– Tape: TB and $/TB: today at .1 TB and 25 k$/TB (nearline)
• Access time (latency)
– RAM: 100 ns
– Disk: 10 ms
– Tape: 30 second pick, 30 second position
• Transfer rate

SCAN?
• Kaps: How many KB objects served per second
– The file server, transaction processing metric
– This is the OLD metric.
• Maps: How many MB objects served per second
– The Multi-Media metric
• SCAN: How long to scan all the data
– The data mining and utility metric

(good 1998 devices packaged in system)
http://www.tpc.org/results/individual_results/Dell/dell.6100.9801.es.pdf

                     DRAM     DISK     TAPE robot
Unit capacity (GB)   1        18       35 X 14
Unit price $         4000     500      10000
$/GB                 4000     28       20
Latency (s)          1.E-7    1.E-2    3.E+1
Bandwidth (Mbps)     500      15       7
Kaps                 5.E+5    1.E+2    3.E-2
Maps                 5.E+2    13.04    3.E-2
Scan time (s/TB)     2        1200     70000
$/Kaps               9.E-11   5.E-8    3.E-3
$/Maps               8.E-8    4.E-7    3.E-3
$/TBscan             $0.08    $0.35    $211

[Chart: the same metrics for DRAM, DISK, and TAPE robot plotted on a log scale]

Maps, SCANs
• parallelism: use many little devices in parallel
• At 10 MB/s: 1.2 days to scan 1 Terabyte
• 1,000 x parallel: 100 second SCAN.
• Parallelism: divide a big problem into many smaller ones to be solved in parallel.

The 1 TB disc card
• An array of discs
• Can be used as
– 100 discs
– 1 striped disc
– 10 Fault Tolerant discs
– ....etc
• LOTS of accesses/second and bandwidth
[Figure label: 14"]
• Life is cheap, it's the accessories that cost ya.
• Processors are cheap, it’s the peripherals that cost ya (a 10 k$ disc card).

Not Mainframe Silos
• 10 K$ robot: 14 tapes, 500 GB, 5 MB/s, 20$/GB, 30 Maps
• 100 robots: 1 M$, 50 TB, 50$/GB, 3K Maps, 27 hr Scan
• Scan in 27 hours.
• many independent tape robots (like a disc farm)

Myth
• Optical is cheap: 200 $/platter, 2 GB/platter => 100$/GB (2x cheaper than disc)
• Tape is cheap: 30 $/tape, 20 GB/tape => 1.5 $/GB (100x cheaper than disc).

Cost
• Tape needs a robot (10 k$ ... 3 M$), 10 ... 1000 tapes (at 20 GB each) => 20$/GB ... 200$/GB (1x…10x cheaper than disc)
• Optical needs a robot (100 k$), 100 platters = 200 GB (TODAY) => 400 $/GB (more expensive than mag disc)
• Robots have poor access times
• Not good for Library of Congress (25 TB)
• Data motel: data checks in but it never checks out!

The Access Time Myth
• The Myth: seek or pick time dominates
• The reality:
(1) Queuing dominates
(2) Transfer dominates BLOBs
(3) Disk seeks often short
• Implication: many cheap servers better than one fast expensive server
[Diagram: access time split into Wait, Transfer, Rotate, Seek]

What To Do About HIGH Availability
• Need remote MIRRORED site to tolerate environmental failures (power, net, fire, flood) and operations failures
• Replicate changes across the net
• Failover servers across the net (some distance)
• Allows: software upgrades, site moves, fires, ...
• Tolerates: operations errors, heisenbugs, ...
[Diagram: client, server, state changes sent to a second server >100 feet or >100 miles away]

Scaleup Has Limits
(chart courtesy of Catharine Van Ingen)
• Vector Supers ~ 10x supers
– ~3 Gflops/cpu
– bus/memory ~ 20 GBps
– IO ~ 1 GBps
• Supers ~ 10x PCs
– 300 Mflops/cpu
– bus/memory ~ 2 GBps
– IO ~ 1 GBps
• PCs are slow
– ~30 Mflops/cpu
– and bus/memory ~ 200 MBps
– and IO ~ 100 MBps
[Chart: Mflop/s/$K vs Mflop/s, log scale; points LANL Loki P6 Linux, NAS Expanded Linux Cluster, Cray T3E, IBM SP, SGI Origin 2000-195, Sun Ultra Enterprise 4000, UCB NOW]

TOP500 Systems by Vendor
(courtesy of Larry Smarr NCSA)
[Chart: number of systems (0 to 500) by vendor, Jun-93 to Jun-98; vendors CRI, SGI, IBM, Convex, HP, Sun, TMC, Intel, DEC, Japanese Vector Machines, Other]
TOP500 Reports: http://www.netlib.org/benchmark/top500.html

NCSA Super Cluster
http://access.ncsa.uiuc.edu/CoverStories/SuperCluster/super.html
• National Center for Supercomputing Applications, University of Illinois @ Urbana
• 512 Pentium II cpus, 2,096 disks, SAN
• Compaq + HP + Myricom + WindowsNT
• A Super Computer for 3 M$
• Classic Fortran/MPI programming
• DCOM programming model

Avalon: Alpha Clusters for Science
http://cnls.lanl.gov/avalon/
• 140 Alpha Processors (533 MHz) x 256 MB + 3 GB disk
• Fast Ethernet switches
• = 45 Gbytes RAM, 550 GB disk
• + Linux
• = 10 real Gflops for $313,000 => 34 real Mflops/k$ on 150 benchmark Mflops/k$
• Beowulf project is Parent: http://www.cacr.caltech.edu/beowulf/naegling.html
– 114 nodes, 2 k$/node
• Scientists want cheap mips.

Your Tax Dollars At Work: ASCI for Stockpile Stewardship
• Intel/Sandia: 9000x1 node Ppro
• LLNL/IBM: 512x8 PowerPC (SP2)
• LANL/Cray: ?
• Maui Supercomputer Center
– 512x1 SP2

Observations
• Uniprocessor RAP << PAP
– real app performance << peak advertised performance
• Growth has slowed (Bell Prize)
– 1987: 0.5 GFLOPS
– 1988: 1.0 GFLOPS (1 year)
– 1990: 14 GFLOPS (2 years)
– 1994: 140 GFLOPS (4 years)
– 1997: 604 GFLOPS
– 1998: 1600 G__OPS (4 years)

Two Generic Kinds of computing
• Many little
– embarrassingly parallel
– Fit RPC model
– Fit partitioned data and computation model
– Random works OK
– OLTP, File Server, Email, Web, …
• Few big
– sometimes not obviously parallel
– Do not fit RPC model (BIG rpcs)
– Scientific, simulation, data mining, ...

Many Little Programming Model
• many small requests
• route requests to data
• encapsulate data with procedures (objects)
• three-tier computing
• RPC is a convenient/appropriate model
• Transactions are a big help in error handling
• Auto partition (e.g. hash data and computation)
• Works fine.
• Software CyberBricks

Object Oriented Programming: Parallelism From Many Little Jobs
• Gives location transparency
• ORB/web/tpmon multiplexes clients to servers
• Enables distribution
• Exploits embarrassingly parallel apps (transactions)
• HTTP and RPC (dcom, corba, rmi, iiop, …) are basis
[Diagram label: TP mon / ORB / web server]

Few Big Programming Model
• Finding parallelism is hard
– Pipelines are short (3x … 6x speedup)
• Spreading objects/data is easy, but getting locality is HARD
• Mapping big job onto cluster is hard
• Scheduling is hard
– coarse grained (job) and fine grain (co-schedule)
• Fault tolerance is hard

Kinds of Parallel Execution
• Pipeline
• Partition: outputs split N ways, inputs merge M ways
[Diagram: boxes labeled "Any Sequential Program" connected as a pipeline and as partitions]

Why Parallel Access To Data?
• At 10 MB/s: 1.2 days to scan 1 Terabyte
• 1,000 x parallel: 100 second SCAN.
• Parallelism: divide a big problem into many smaller ones to be solved in parallel.

Why are Relational Operators Successful for Parallelism?
• Relational data model: uniform operators on uniform data stream
• Closed under composition
• Each operator consumes 1 or 2 input streams
• Each stream is a uniform collection of data
• Sequential data in and out: pure dataflow
• Partitioning some operators (e.g. aggregates, non-equi-join, sort, ...) requires innovation
• AUTOMATIC PARALLELISM

Database Systems “Hide” Parallelism
• Automate system management via tools
– data placement
– data organization (indexing)
– periodic tasks (dump / recover / reorganize)
• Automatic fault tolerance
– duplex & failover
– transactions
• Automatic parallelism
– among transactions (locking)
– within a transaction (parallel execution)

SQL: a Non-Procedural Programming Language
• SQL: functional programming language describes answer set.
• Optimizer picks best execution plan
– Picks data flow web (pipeline),
– degree of parallelism (partitioning)
– other execution parameters (process placement, memory, ...)
[Diagram labels: GUI, Schema, Optimizer, Plan, Execution Planning, Monitor, Executors, Rivers]

Partitioned Execution
• Spreads computation and IO among processors
• Partitioned data gives NATURAL parallelism
[Diagram: a table partitioned A...E, F...J, K...N, O...S, T...Z, with one Count operator per partition feeding a final Count]

N x M way Parallelism
• N inputs, M outputs, no bottlenecks.
• Partitioned data
• Partitioned and pipelined data flows
[Diagram: partitions A...E, F...J, K...N, O...S, T...Z each feed a Join and a Sort; three Merge operators combine the sorted streams]

Automatic Parallel Object Relational DB

    Select image
    from landsat
    where date between 1970 and 1990
      and overlaps(location, :Rockies)
      and snow_cover(image) > .7;

[Diagram: Landsat table with columns date, loc, image (rows from 1/2/72 to 4/8/95, locations such as 33N 120W and 34N 120W); temporal, spatial, and image tests on date, location, & image; answer image]
• Assign one process per processor/disk:
– find images with right date & location
– analyze image, if 70% snow, return it

Data Rivers: Split + Merge Streams
[Diagram: N producers, N x M data streams (the river), M consumers]
• Producers add records to the river, consumers consume records from the river
• Purely sequential programming.
• River does flow control and buffering
• River does partition and merge of data records
• River = Split/Merge in Gamma = Exchange operator in Volcano / SQL Server.
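
The river idea above can be sketched in one process with threads and bounded queues. This is an illustrative toy, not the Gamma or Volcano implementation: `run_river`, `count_by_key`, and the CRC-based hash split are all assumptions made for the example. Producers and consumers stay purely sequential; the queues do the buffering, flow control, split, and merge.

```python
import queue
import threading
import zlib

DONE = object()  # end-of-stream marker; each producer sends one to every consumer


def run_river(producer_inputs, n_consumers, consume, buffer_size=64):
    """Connect N producers to M consumers through a hash-partitioned river.

    producer_inputs: list of N iterables of (key, value) records.
    consume: a sequential function that drains an iterator of records
             and returns a result (it must read its stream to the end).
    Returns the list of M consumer results.
    """
    n_producers = len(producer_inputs)
    # One bounded queue per consumer: a blocking put() is the flow control.
    streams = [queue.Queue(maxsize=buffer_size) for _ in range(n_consumers)]
    results = [None] * n_consumers

    def producer(records):
        for key, value in records:
            target = zlib.crc32(key.encode("utf-8")) % n_consumers  # split
            streams[target].put((key, value))
        for stream in streams:
            stream.put(DONE)

    def merged(i):
        # Merge: yield records from all producers until each has signalled DONE.
        remaining = n_producers
        while remaining:
            item = streams[i].get()
            if item is DONE:
                remaining -= 1
            else:
                yield item

    def consumer(i):
        results[i] = consume(merged(i))

    threads = [threading.Thread(target=producer, args=(recs,)) for recs in producer_inputs]
    threads += [threading.Thread(target=consumer, args=(i,)) for i in range(n_consumers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


def count_by_key(records):
    """An ordinary sequential program: count the records seen per key."""
    counts = {}
    for key, _value in records:
        counts[key] = counts.get(key, 0) + 1
    return counts


if __name__ == "__main__":
    inputs = [[("A", 1), ("B", 2)], [("A", 3), ("C", 4)], [("B", 5)]]
    print(run_river(inputs, n_consumers=2, consume=count_by_key))
```

Because the split is by key hash, all records for one key reach the same consumer, so each consumer can aggregate its partition without coordinating with the others.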
Generalization: Object-oriented Rivers
• Rivers transport sub-class of record-set (= stream of objects)
– record type and partitioning are part of subclass
• Node transformers are data pumps
– an object with river inputs and outputs
– do late-binding to record-type
• Programming becomes data flow programming
– specify the pipelines
• Compiler/Scheduler does data partitioning and “transformer” placement

NT Cluster Sort as a Prototype
• Using
– data generation and
– sort
as a prototypical app
• “Hello world” of distributed processing
• goal: easy install & execute

Remote Install
• Add Registry entry to each remote node.
– RegConnectRegistry()
– RegCreateKeyEx()

Cluster Startup / Execution
• Setup:
– MULTI_QI struct
– COSERVERINFO struct
• CoCreateInstanceEx()
• Retrieve remote object handle from MULTI_QI struct
• Invoke methods as usual
[Diagram: MULTI_QI and COSERVERINFO yield one HANDLE per remote node; Sort() is invoked on each handle]

Cluster Sort: Conceptual Model
• Multiple Data Sources
• Multiple Data Destinations
• Multiple nodes
• Disks -> Sockets -> Disk -> Disk
[Diagram: each source node holds mixed records (AAA BBB CCC); destination node A receives all AAA records, node B all BBB records, node C all CCC records]

How Do They Talk to Each Other?
• Each node has an OS
• Each node has local resources: A federation.
• Each node does not completely trust the others.
• Nodes use RPC to talk to each other
– CORBA? DCOM? IIOP? RMI?
– One or all of the above.
• Huge leverage in high-level interfaces.
• Same old distributed system story.
[Diagram: two node stacks, each with Applications on top of RPC / streams / datagrams (marked "?"), on top of VIAL/VIPL, joined by Wire(s)]
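
The Cluster Sort conceptual model above (every source scatters records by key range, every destination sorts what it receives) can be sketched in a single process. This is a toy under stated assumptions: lists stand in for disks and sockets, the split points are picked by hand, and `cluster_sort`, `record_key`, and `make_records` are hypothetical names. The record layout (100-byte records, 10-byte key) is taken from the sort ground rules earlier in the deck.

```python
import bisect
import random

KEY_BYTES = 10      # key is the first 10 bytes of each record
RECORD_BYTES = 100  # 100-byte records


def record_key(record: bytes) -> bytes:
    return record[:KEY_BYTES]


def cluster_sort(sources, split_points):
    """Range-partition records from every source, then sort each partition.

    sources: list of record lists, one per source node.
    split_points: ascending keys dividing the key space; node i receives
        the keys that fall between split_points[i-1] and split_points[i].
    Returns one sorted list per destination node.
    """
    inboxes = [[] for _ in range(len(split_points) + 1)]
    for records in sources:                    # each source scans its own data
        for record in records:
            dest = bisect.bisect_right(split_points, record_key(record))
            inboxes[dest].append(record)       # stands in for a socket send
    # Each destination sorts locally; reading node 0, 1, 2, ... in order
    # then gives a globally sorted output.
    return [sorted(inbox, key=record_key) for inbox in inboxes]


def make_records(count, seed):
    """Generate random 100-byte records (illustrative data only)."""
    rng = random.Random(seed)
    return [bytes(rng.getrandbits(8) for _ in range(RECORD_BYTES)) for _ in range(count)]


if __name__ == "__main__":
    sources = [make_records(1000, seed) for seed in range(3)]
    partitions = cluster_sort(sources, split_points=[b"\x55", b"\xaa"])
    keys = [record_key(r) for part in partitions for r in part]
    print([len(p) for p in partitions], "sorted:", keys == sorted(keys))
```

A real cluster sort would also have to choose split points that balance the partitions, typically by sampling the input; that step is left out here.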