heteroscedastic

advertisement

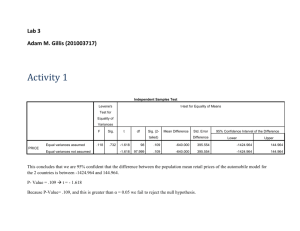

Advanced topics in regression
Tron Anders Moger
18.10.2006

Last time:
• Had the model death rate per 1000 = a + b*car age + c*prop. light trucks, with this (annotated) SPSS output:

Model Summary
Model  R     R Square  Adjusted R Square  Std. Error of the Estimate
1      .768  .590      .572               .03871
Predictors: (Constant), lghttrks, carage

ANOVA (dependent variable: deaths)
            Sum of Squares  df  Mean Square  F       Sig.
Regression  .097            2   .049         32.402  .000
Residual    .067            45  .001
Total       .165            47

Coefficients (dependent variable: deaths)
            B      Std. Error  Beta   t       Sig.  95% CI for B
(Constant)  2.668  .895               2.981   .005  .865 to 4.470
carage      -.037  .013        -.295  -2.930  .005  -.063 to -.012
lghttrks    .006   .001        .622   6.181   .000  .004 to .009

Annotations on the output:
• K = number of independent variables, n = number of observations
• SSR (Regression): d.f.(SSR) = K, MSR = SSR/K
• SSE (Residual): d.f.(SSE) = n-K-1, MSE = $s_e^2 = \hat{\sigma}^2$ = SSE/(n-K-1)
• SST (Total): d.f.(SST) = n-1
• R² = 1-SSE/SST, Adj R² = 1-(SSE/(n-K-1))/(SST/(n-1)), R = √R² (equal to Pearson's r when K = 1)
• Std. Error of the Estimate = $s_e$ = √MSE
• F test-statistic = MSR/MSE; its Sig. is the P-value for the test all β's = 0 vs at least one β not 0
• T test-statistic = β/SE(β); its Sig. is the P-value for the test β = 0 vs β not 0
• The last two columns give the 95% CI for β

Why did we remove car weight and percentage imported cars from the model?
• They did not show a significant relationship with the dependent variable (β not significantly different from 0)
• Unless the independent variables are completely uncorrelated, you will get different b's when including several variables in your model than when including just one (collinearity)
• Hence, we would like to remove variables that have nothing to do with the dependent variable but still influence the estimated effects of the important independent variables

Relationship R² and b
[Figure: two scatterplots; left panel: high R², low b (with narrow CI); right panel: low R², high b (with wide CI)]
• Which result would make you most happy?
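The annotations above tie every number in the Model Summary and ANOVA tables to the sums of squares. As a quick check, the sketch below recomputes them in plain Python; `regression_summary` is a hypothetical helper written for this note, not an SPSS feature. With the printed traffic-death values SSE = .067, SST = .165 and n = 48 (since d.f.(SST) = 47), it gives R² ≈ .594, adjusted R² ≈ .576 and F ≈ 32.9. These are close to, but not exactly, the reported .590, .572 and 32.402, because SPSS prints the sums of squares rounded to three decimals.

```python
from math import sqrt


def regression_summary(sse, sst, n, k):
    """Recompute Model Summary / ANOVA quantities from SSE and SST.

    n: number of observations, k: number of independent variables (K).
    """
    ssr = sst - sse                      # SST = SSR + SSE
    df_reg, df_res = k, n - k - 1        # d.f.(SSR) = K, d.f.(SSE) = n-K-1
    msr = ssr / df_reg                   # MSR = SSR/K
    mse = sse / df_res                   # MSE = s_e^2 = SSE/(n-K-1)
    r2 = 1 - sse / sst
    return {
        "R2": r2,
        "R": sqrt(r2),
        "adj_R2": 1 - (sse / df_res) / (sst / (n - 1)),
        "MSR": msr,
        "MSE": mse,
        "s_e": sqrt(mse),                # Std. Error of the Estimate
        "F": msr / mse,                  # F test-statistic = MSR/MSE
    }


if __name__ == "__main__":
    # Traffic-death model: SSE = .067, SST = .165, n = 48, K = 2 (rounded SPSS values)
    for name, value in regression_summary(0.067, 0.165, 48, 2).items():
        print(f"{name:7s} {value:.4f}")
```

The smoking model's sums of squares are printed in full, so `regression_summary(93191828, 99917053, 189, 2)` reproduces its reported R² = .067 and F = 6.711.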
Centered variables
• Remember, we found the model Birth weight=2369.672+4.429*mother's weight
• Hence, the constant has no useful interpretation (it is the predicted birth weight for a mother weighing 0 lbs)
• Construct mother's weight 2 = mother's weight - 130 lbs, where 130 lbs ≈ mean(mother's weight)
• Get

Coefficients (dependent variable: birthweight)
            B         Std. Error  Beta  t       Sig.  95% CI for B
(Constant)  2944.656  52.244            56.363  .000  2841.592 to 3047.720
lwt2        4.429     1.713       .186  2.586   .010  1.050 to 7.809

• And the model Birth weight=2944.656+4.429*mother's weight 2
• The slope is unchanged; the constant is now the predicted birth weight for a 130 lbs mother

Indicator variables
• Binary variables (yes/no, male/female, …) can be represented as 1/0 and used as independent variables
• Also called dummy variables in the book
• When used directly, they influence only the constant term of the regression
• It is also possible to use a binary variable so that it changes both the constant term and the slope of the regression

Example: Regression of birth weight with mother's weight and smoking status as independent variables

Model Summary
Model  R     R Square  Adjusted R Square  Std. Error of the Estimate
1      .259  .067      .057               707.83567
Predictors: (Constant), smoking status, weight in pounds

ANOVA (dependent variable: birthweight)
            Sum of Squares  df   Mean Square  F      Sig.
Regression  6725224         2    3362612.165  6.711  .002
Residual    93191828        186  501031.335
Total       99917053        188

Coefficients (dependent variable: birthweight)
                  B         Std. Error  Beta   t       Sig.  95% CI for B
(Constant)        2500.174  230.833            10.831  .000  2044.787 to 2955.561
weight in pounds  4.238     1.690       .178   2.508   .013  .905 to 7.572
smoking status    -270.013  105.590     -.181  -2.557  .011  -478.321 to -61.705

Interpretation:
• Have fitted the model Birth weight=2500.174+4.238*mother's weight-270.013*smoking status
• If the mother starts to smoke (and her weight remains constant), what is the predicted influence on the infant's birth weight? -270.013*1 = -270 grams
• What is the predicted weight of the child of a 150 pound, smoking woman? 2500.174+4.238*150-270.013*1 = 2866 grams

Will R² automatically be low for indicator variables?
[Figure: plot of the dependent variable against an indicator variable taking the values 0 and 1]

What if a categorical variable has more than two values?
• Example: Ethnicity; black, white, other
• For categorical variables with m possible values, use m-1 indicators
• Important: A model with two indicator variables will assume that the effect of one indicator adds to the effect of the other
• If this may be unsuitable, use an additional interaction variable (product of indicators)

Model birth weight as a function of ethnicity
• Have constructed variables black=0 or 1 and other=0 or 1 (white is the reference category)
• Model: Birth weight=a+b*black+c*other
• Get

Coefficients (dependent variable: birthweight)
            B         Std. Error  Beta   t       Sig.  95% CI for B
(Constant)  3103.740  72.882             42.586  .000  2959.959 to 3247.521
black       -384.047  157.874     -.182  -2.433  .016  -695.502 to -72.593
other       -299.725  113.678     -.197  -2.637  .009  -523.988 to -75.462
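The centered, smoking and ethnicity models above can all be checked from their printed coefficients. The plain-Python sketch below uses hypothetical helper names (`simple_ols`, `center`, `predict`, `ethnicity_indicators`), and the small weight/birth-weight lists are made-up toy numbers used only to show that centering leaves the slope unchanged and turns the constant into the prediction at the mean. It then reproduces the 2866 g prediction for a 150 lb smoking mother and the predicted birth weight for each ethnic group, with white as the reference category (m = 3 levels, so m-1 = 2 indicators).

```python
def simple_ols(x, y):
    """Least-squares intercept a and slope b for y = a + b*x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    return my - b * mx, b


def center(x):
    """Subtract the sample mean, like mother's weight 2 = mother's weight - mean."""
    m = sum(x) / len(x)
    return [xi - m for xi in x]


def predict(coefs, **x):
    """Evaluate const + sum(b_j * x_j) for a dict of fitted coefficients."""
    return coefs["const"] + sum(coefs[name] * value for name, value in x.items())


def ethnicity_indicators(group):
    """m = 3 categories -> m-1 = 2 indicators; 'white' is the reference level."""
    if group not in ("white", "black", "other"):
        raise ValueError(f"unknown group: {group!r}")
    return {"black": int(group == "black"), "other": int(group == "other")}


# Coefficients as printed in the SPSS output above
SMOKING_MODEL = {"const": 2500.174, "mwt": 4.238, "smoking": -270.013}
ETHNICITY_MODEL = {"const": 3103.740, "black": -384.047, "other": -299.725}

if __name__ == "__main__":
    # Toy data (illustrative only, not the birth-weight data set)
    wt = [100, 120, 130, 150, 170]
    bw = [2700, 2900, 2850, 3100, 3200]
    a, b = simple_ols(wt, bw)
    a_c, b_c = simple_ols(center(wt), bw)
    print(f"raw:      a={a:.1f}, b={b:.3f}")
    print(f"centered: a={a_c:.1f}, b={b_c:.3f}")  # same b; a = mean of bw

    print(round(predict(SMOKING_MODEL, mwt=150, smoking=1)))  # 2866
    for g in ("white", "black", "other"):
        print(g, round(predict(ETHNICITY_MODEL, **ethnicity_indicators(g))))
    # white 3104, black 2720, other 2804
```

The indicator coding follows the slides in using white as the baseline; picking another reference level would change the constant and the indicator coefficients, but not the predicted value for any group.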
• Hence, in the ethnicity model, predicted birth weight is 384 grams lower for blacks and 300 grams lower for others, compared with whites
• Predicted birth weight for whites is 3104 grams

Interaction:
• Sometimes the effect (on y) of one independent variable (x1) depends on the value of another independent variable (x2)
• This means that you get, e.g., different slopes for x1 at different values of x2
• Usually modelled by constructing the product of the two variables and including it in the model
• Example: bwt=a+b*mwt+c*smoking+d*mwt*smoking=a+(b+d*smoking)*mwt+c*smoking

Get SPSS to do the estimation:
• Get bwt=2347+5.41*mwt+47.87*smoking-2.46*mwt*smoking (smkwht = weight in pounds × smoking status)

Coefficients (dependent variable: birthweight)
                  B         Std. Error  Beta   t      Sig.  95% CI for B
(Constant)        2347.507  312.717            7.507  .000  1730.557 to 2964.457
weight in pounds  5.405     2.335       .227   2.315  .022  .798 to 10.012
smoking status    47.867    451.163     .032   .106   .916  -842.220 to 937.953
smkwht            -2.456    3.388       -.223  -.725  .470  -9.140 to 4.229

• Mwt=100 lbs vs mwt=200 lbs for non-smokers: bwt=2888g and bwt=3428g, difference=540g
• Mwt=100 lbs vs mwt=200 lbs for smokers: bwt=2690g and bwt=2985g, difference=295g

What does this mean?
[Figure: scatterplot of birthweight against weight in pounds by smoking status (0, 1), with a separate linear fit for each group; R Sq Linear = 0.042 and 0.023]
• Mother's weight has a greater impact on birth weight for nonsmokers than for smokers (equivalently, the effect of smoking depends on the mother's weight)

What does this mean cont'd?
• We see that the slope is steeper for nonsmokers
• In fact, a model with mwt and mwt*smoking fits better than the model with mwt and smoking (R² = .070 vs .067):

Model Summary
Model  R     R Square  Adjusted R Square  Std. Error of the Estimate
1      .264  .070      .060               706.85442
Predictors: (Constant), smkwht, weight in pounds

Coefficients (dependent variable: birthweight)
                  B         Std. Error  Beta   t       Sig.  95% CI for B
(Constant)        2370.504  224.809            10.545  .000  1927.000 to 2814.007
weight in pounds  5.237     1.713       .220   3.057   .003  1.857 to 8.616
smkwht            -2.106    .792        -.191  -2.660  .009  -3.668 to -.544

Should you always look at all possible interactions?
• No.
• The example shows an interaction between an indicator and a continuous variable, which is fairly easy to interpret
• Interaction between two continuous variables: slightly more complicated
• Interaction between three or more variables: difficult to interpret
• It doesn't matter if you have a good model if you can't interpret it
• Usually you are interested in interactions you have reason to believe are there before you do the study

Multicollinearity
• Means that two or more independent variables are closely correlated
• To discover it, make plots and compute correlations (or make a regression of one independent variable on the others)
• To deal with it:
– Remove unnecessary variables
– Define and compute an "index"
– If the variables are kept, the model can still be used for prediction

Example: Traffic deaths
• Recall: Used four variables to predict traffic deaths in the U.S.
• Among them: Average car weight and prop. imported cars
• However, the correlation between these two variables is pretty high

Correlation car weight vs imported cars
• Pearson r is 0.94:
[Figure: scatterplot of vehwt (car weight) against impcars]
Correlations (SPSS): carage vs impcars, Pearson Correlation = .011, Sig. (2-tailed) = .943, N = 49
• Problematic to use both of these as independents in a regression

Choice of variables
• Include variables which you believe have a clear influence on the dependent variable, even if the variable is "uninteresting": This helps find the true relationship between the "interesting" variables and the dependent variable.
• Avoid including a pair (or a set) of variables whose values are clearly linearly related

Choice of values
• Should have a good spread: Again, avoid collinearity
• Should cover the range for which the model will be used
• For categorical variables, one may choose to combine levels in a systematic way

Specification bias
• Unless two independent variables are uncorrelated, the estimation of one will influence the estimation of the other
• Leaving out one variable biases the estimation of the other
• Thus, one should be humble when interpreting regression results: There are probably always variables one could have added

Heteroscedasticity – what is it?
• In the standard regression model
$y_i = \beta_0 + \beta_1 x_{1i} + \beta_2 x_{2i} + \dots + \beta_K x_{Ki} + \varepsilon_i$
it is assumed that all $\varepsilon_i$ have the same variance.
• If the variance varies with the independent variables or the dependent variable, the model is heteroscedastic.
• Sometimes, it is clear that the data exhibit such properties.

Heteroscedasticity – why does it matter?
• Our standard methods for estimation, confidence intervals, and hypothesis testing assume equal variances.
• If we go on and use these methods anyway, our answers might be quite wrong!

Heteroscedasticity – how to detect it?
• Fit a regression model, and study the residuals
– make a plot of them against the independent variables
– make a plot of them against the predicted values of the dependent variable
• Possibility: Test for heteroscedasticity by doing a regression of the squared residuals on the predicted values.

Example: The model traffic deaths=a+b*car age+c*light trucks
• Does not look too bad
[Figure: scatterplot of regression standardized residual against regression standardized predicted value; dependent variable: deaths]

What is bad?
• This, and this:
[Figure: two plots of residuals ε against predicted values ŷ]

Heteroscedasticity – what to do about it?
• Using a transformation of the dependent variable
– log-linear models
• If the standard deviation of the errors appears to be proportional to the predicted values, a two-stage regression analysis is a possibility

Dependence over time
• Sometimes, $y_1, y_2, \dots, y_n$ are not completely independent observations (given the independent variables)
– Lagged values: $y_t$ may depend on $y_{t-1}$ in addition to its independent variables
– Autocorrelated errors: The errors $\varepsilon_t$ are correlated
• Often relevant for time-series data

Lagged values
• In this case, we may run a multiple regression just as before, but include the previous value of the dependent variable, $y_{t-1}$, as a predictor variable for $y_t$
• Use the model $y_t = \beta_0 + \beta_1 x_{1t} + \gamma y_{t-1} + \varepsilon_t$
• A 1-unit increase in $x_1$ in the first time period yields an expected increase in y of $\beta_1$, an increase of $\beta_1\gamma$ in the second period, $\beta_1\gamma^2$ in the third period and so on
• The total expected increase over all future periods is $\beta_1/(1-\gamma)$ (provided $|\gamma| < 1$)

Example: Pension funds from textbook CD
• Want to use the market return for stocks (say, in million $) as a predictor for the percentage of pension fund portfolios at market value (y) at the end of the year
• Have data for 25 yrs -> 24 observations (one year is lost when lagging y)

Model Summary
Model  R     R Square  Adjusted R Square  Std. Error of the Estimate  Durbin-Watson
1      .980  .961      .957               2.288                       1.008
Predictors: (Constant), lag, return; dependent variable: stocks

Coefficients (dependent variable: stocks)
            B      Std. Error  Beta   t       Sig.  95% CI for B
(Constant)  1.397  2.359              .592    .560  -3.509 to 6.303
return      .235   .030        .359   7.836   .000  .172 to .297
lag         .954   .042        1.041  22.690  .000  .867 to 1.042

Get the model:
• $y_t$ = 1.397 + 0.235*stock return + 0.954*$y_{t-1}$
• A one million $ increase in stock return in one year yields a 0.235 (≈0.24) percentage point increase in pension fund portfolios at market value that year
• For the next year: 0.235*0.954 = 0.22 percentage points
• And the third year: 0.235*0.954² = 0.21 percentage points
• For all future years: 0.235/(1-0.954) = 5.1 percentage points
• What if you have a 2 million $ increase?

Autocorrelated errors
• In the standard regression model, the errors are independent.
• Using the standard regression formulas anyway when the errors are autocorrelated can lead to errors: Typically, the uncertainty in the result is underestimated.

Autocorrelation – how to detect?
• Plot the residuals against time! (Option in SPSS)
• The Durbin-Watson test compares the possibility of independent errors with a first-order autoregressive model:
$\varepsilon_t = \rho\,\varepsilon_{t-1} + u_t$
• Test statistic:
$d = \dfrac{\sum_{t=2}^{n} (e_t - e_{t-1})^2}{\sum_{t=1}^{n} e_t^2}$
• Test H0: ρ=0 vs H1: ρ>0
– Reject H0 if d<dL
– Do not reject H0 if d>dU
– Inconclusive if dL<d<dU
• The critical values depend on K (no. of independent variables), n (no. of observations) and the significance level α

Example: Pension funds
• Want to test ρ=0 at the 5% level
• Test statistic d=1.008 (from the model summary above)
• Have one independent variable (K=1 in table 12 on p. 876) and n=24
• Find critical values dL=1.27 and dU=1.45
• Since d=1.008 < dL=1.27, reject H0

Autocorrelation – what to do?
• It is possible to use a two-stage regression procedure:
– If a first-order autoregressive model with parameter ρ is appropriate, the model
$y_t - \rho y_{t-1} = \beta_0(1-\rho) + \beta_1(x_{1t} - \rho x_{1,t-1}) + \dots + \beta_K(x_{Kt} - \rho x_{K,t-1}) + (\varepsilon_t - \rho\,\varepsilon_{t-1})$
will have uncorrelated errors $\varepsilon_t - \rho\,\varepsilon_{t-1}$
• Estimate ρ from the Durbin-Watson statistic (a common approximation is $\hat{\rho} \approx 1 - d/2$), and estimate the β's from the model above

Next time:
• What if the assumption of normality for your data is invalid?
• You have to forget all you have learnt so far, and do something else
• Non-parametric statistics
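Several calculations on the heteroscedasticity, lagged-values and autocorrelation slides need only the fitted values and residuals of an existing regression: the check that regresses squared residuals on predicted values, the Durbin-Watson statistic d with the approximation ρ̂ ≈ 1 - d/2, and the lag multipliers β1γ^k with total effect β1/(1-γ). The plain-Python sketch below assumes those residuals and fitted values are already available; all function names are hypothetical and none of them is an SPSS procedure. It reproduces the pension-fund numbers (0.235, 0.224, 0.214 percentage points; total 5.1; ρ̂ ≈ 0.496 from d = 1.008).

```python
def simple_ols(x, y):
    """Least-squares fit of y = a + b*x; returns (a, b, R^2). y must not be constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    a = my - b * mx
    sst = sum((yi - my) ** 2 for yi in y)
    sse = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))
    return a, b, 1 - sse / sst


def variance_trend(fitted, residuals):
    """Heteroscedasticity check: regress squared residuals on predicted values.

    A clearly positive slope suggests the error variance grows with y-hat.
    """
    return simple_ols(fitted, [e ** 2 for e in residuals])


def durbin_watson(residuals):
    """d = sum_{t=2}^n (e_t - e_{t-1})^2 / sum_{t=1}^n e_t^2."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals)))
    return num / sum(e ** 2 for e in residuals)


def rho_from_dw(d):
    """Approximate first-order autocorrelation of the errors: rho-hat = 1 - d/2."""
    return 1 - d / 2


def lag_multipliers(beta1, gamma, periods):
    """Expected increase in y in periods 1, 2, ... after a one-unit increase in x1
    in the first period, for y_t = b0 + beta1*x1t + gamma*y_{t-1} + eps_t."""
    return [beta1 * gamma ** k for k in range(periods)]


def total_effect(beta1, gamma):
    """Sum of all future increases, beta1 / (1 - gamma); needs |gamma| < 1."""
    if abs(gamma) >= 1:
        raise ValueError("the geometric series diverges for |gamma| >= 1")
    return beta1 / (1 - gamma)


if __name__ == "__main__":
    # Pension-fund estimates from the slides: beta1 = 0.235, gamma = 0.954, d = 1.008
    print([round(m, 3) for m in lag_multipliers(0.235, 0.954, 3)])  # [0.235, 0.224, 0.214]
    print(round(total_effect(0.235, 0.954), 1))                      # 5.1
    print(round(rho_from_dw(1.008), 3))                              # 0.496
```

The value ρ̂ ≈ 0.5 is only the rough approximation from d; in the two-stage procedure it is the ρ used to form $y_t - \rho y_{t-1}$ and $x_{jt} - \rho x_{j,t-1}$ before refitting.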