Slaughter the PIGs - Risk Body of Knowledge


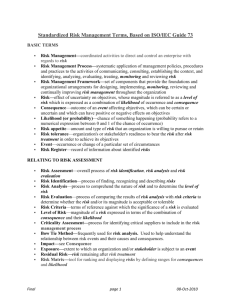

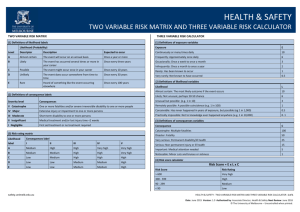

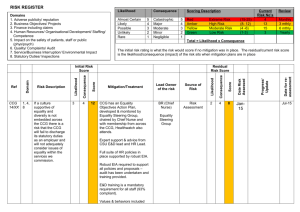

LEADING PIGS TO SLAUGHTER

Risk matrices and/or Probability x Impact Grids (PIGs), which some call ‘heat maps’, have been the subject of considerable debate in professional discussions and in a few academic studies (Cox, 2008). They have their advocates, mostly on the grounds of ease of understanding and traditional use, but they are usually ill-formed and ill-used. This paper proposes that their use should be stopped immediately and replaced with simple, yet logically and mathematically valid, estimates of the extent of consequences of risk events.

Contents

- What are PIGs?
- Why Should We Use Them?
- Why Should We Not Use Them?
- What Should We Do Instead: turning PIGs into BACON
- References

What are PIGs?

There are many versions of PIGs. The one that appeared in Appendix E of AS 4360: 1999 Risk management (Standards Australia, 1999), given in Figure 1, seems to be the precursor to most uses of this method for representing the “severity” or level of risk.

Figure 1. Risk matrix from AS 4360: 1999

There are many examples of “qualitative” scales for the likelihood of the risks (using the terms as in 1999) or the extent of their consequences. Some of these scales have three intervals; others have as many as nine. There are also many variants for combining these scales to represent the need for managerial action: in some, the scale intervals are multiplied; in others, they are added. A few examples use scales that represent both negative and positive consequences. In some cases, as advocated in HB 436: 2004 (Standards Australia, 2004), the range of outcomes or the range of likelihoods for an event is considered, but usually an event is expected to sit at one point on each of the scales.

Why Should We Use Them?

Julian Talbot provides the best summary of the arguments for, and against, PIGs in his blog post What’s Right with Risk Matrices (Talbot, 2013), as set out in Table 1.

Table 1. Strengths and Weaknesses of PIGs (Talbot, 2013), emphasis added

X (weaknesses): risk matrices can

1. compare only a small fraction of randomly selected pairs of hazards
2. mistakenly assign identical ratings to quantitatively different risks
3. mistakenly assign higher qualitative ratings to quantitatively smaller risks and lead to worse-than-random decisions
4. mistakenly allocate resources, as effective allocation of resources to risk treatments cannot be based on the categories provided by risk matrices
5. (and) categorizations of severity cannot be made objectively for uncertain consequences

√ (strengths):

1. risk matrices are still one of the best practical tools that we have: widespread (and convenient)
2. promote robust discussion (the discussion often being more useful than the actual rating)
3. provide some consistency to prioritizing risks
4. help keep participants in workshops on track
5. focus decision makers on the highest priority risks
6. present complex risk data in a concise visual fashion
7. prioritizing the allocation of resources is not the role of the risk matrix; that role belongs to the selection of risk treatments
8. any risk assessment tool can assign identical ratings to quantitatively different risks
9. no tool can consistently, correctly and unambiguously compare more than a small fraction of randomly selected pairs of hazards
10. if a risk is in the ‘High’ or the ‘Top 10’ list it requires attention, and whether it is third or fourth on the list is not likely to be significant
11. subjective decision making will always be a part of the risk assessment process no matter what tool is used
12. risk matrices are a tool which supports risk-informed decisions, not a tool for making decisions
13. last but not least, most of the flaws listed above only exist if risk matrices are used in isolation, which is rarely the case

[I am not sure about this last point. I have seen many cases when everything hung on the presentation of a PIG to support a recommendation to take action.]
This summary brings out the main point about PIGs: they have been used extensively for many years, appearing in several standards and textbooks about good risk management practice, but they are often (if not always) misused.

There have been (very) few academic studies of the value or the application of PIGs. Tony Cox is probably the researcher who has done the most to study how risk matrices are used. His now somewhat classic analysis of the design of matrices (“What’s wrong with risk matrices?”) was based on their description in AS 4360: 1999 (not even the 2004 version; ISO 31000 was published just after the article). His conclusions, however, are still valid and appear throughout this paper.

Why Should We Not Use Them?

In my view, using a PIG is like giving a loaded revolver to a child. To put my views another way: a fool with a tool is still a fool, and PIGs are a foolish tool. The problems with PIGs, which justify their slaughter, are:

- Wrong scales
- Wrong combination
- Wrong use

Wrong scales

The probability (or likelihood, or even plausibility) and the impact (or consequence) scales are often expressed in words, using what the CIA has called “estimative words”. Unfortunately, the words representing different points on the probability scale can mean different things to different people, which leads to different interpretations of the level of risk. The CIA has been struggling with the use of words such as ‘could’, ‘might’ or ‘virtually certain’ in its intelligence summaries since the 1950s. Of course, it does not help that they do not speak English well (they have ‘may’ and ‘might’ as points on the same scale, whereas we all know that ‘might’ refers to probability and ‘may’ refers to permission). Figure 2 shows one of their recent attempts to come up with a consistent use of such words.

Figure 2. CIA Estimative Words Scale

There have been several academic studies, some over 60 years old, of how people interpret adjectives describing probability or extent. One example is Johnson (1973), shown in Figure 3. Note the range of meanings assigned to phrases such as “Highly Probable”.

Figure 3. Verbal expressions of uncertainty (Johnson, 1973)

Similarly, Mosteller and Youtz (1990) summarized over 20 studies of the probabilities assigned to qualitative expressions. They found that the numerical equivalents assigned to some expressions varied widely. For example, “sometimes” varied from about .30 to .60, and “possible” had a median probability of .47 and a mean of .55, but a range of .40 from highest to lowest.

Table 2. Example of Likelihood Scale

| Likelihood | Description |
|---|---|
| Almost Certain | Confident that it will occur at least once during the activity and has occurred on a regular basis previously. |
| Likely/ Probable | Plausible that it may occur at least once during the activity. It has occurred previously, but is not certain to occur. |
| Occasional/ Possible | There is potential that it may happen during the activity. Is sporadic but not uncommon. |
| Rare/ Unlikely | Out of the ordinary. It may occur at some time during the activity. Is uncommon but could occur at some time. |
| Highly improbable/ Doubtful | Not likely to occur at any time during the activity, but is not impossible. |

Given this sort of debate, does the scale in Table 2 mean the same thing to every user? How are the phrases used to describe likelihood converted into numbers so that they can be combined with the consequence scale? (Actually, this example comes from a source that shall remain nameless to protect them from well-deserved ridicule. There, the phrases are not combined in any logical way but are just used as labels for cells in a matrix.)
If the scales are fully ‘anchored’ by descriptive phrases and explicitly associated with probabilities or plausibilities, they could be used more reliably. Usually, however, they are expected to stand alone, and so they are subject to variable interpretation.

Another difficulty with scales is the choice of how many points they contain. There is considerable psychometric research into the number of levels that are reliable yet precise enough to discriminate between different levels of performance; the consensus is about seven. Cox (2008) makes this point when he discusses the need for enough scale points to allow at least three ‘colours’ in the PIG. The risk level results are ‘weakly consistent’ if, and only if, no ‘red’ cell shares an edge with a ‘green’ cell. There is sufficient ‘betweenness’ (discrimination) if it is possible to pass through an intermediate colour between ‘red’ and ‘green’.

The key aspect of a scale is its resolving power. You cannot tell one level of risk from another if the impact scale is too coarse. Table 3 gives an example of a set of consequence scales from the same unnamed organization. How much difference is there between ‘multiple fatalities’ and ‘fatality or permanent disability’? The financial bands go from $5M to $50M to $100M to $200M in large, uneven jumps. As a result, $6M is as ‘bad’ as $49M, and $51M is as ‘bad’ as $99M but ‘much worse’ than $49M. If the scale is too coarse, it is not possible to discriminate between desirable and undesirable levels of performance. Such coarse scales often make it impossible to tell whether a suggested risk response has actually reduced the level of risk. For the scale in Table 3, an ‘improvement’ in cost from $90M to $60M still results in a rating of ‘Serious’.

The third problem with scales is that they should be measurable, and at least more than nominal. If they are nominal (only labels), then no mathematical combination is possible.
Even if the labels appear numeric, like the numbers on the backs of footballers, they cannot be combined. There is no meaningful mean footballer number, and the numbers cannot be added to give anything meaningful. In Table 3, does ‘Temporary disability >28 days’ mean 28 days for one person or 1 day for 28 people? What is the difference between ‘long term loss’ and ‘temporary loss’? How do you measure ‘medium term damage’ compared with ‘short term damage’, and how much ‘worse’ is it than ‘damage at regional level’?

Table 3. Example of Consequence Scales

| Category | Catastrophic | Critical | Serious | Disruptive | Minor |
|---|---|---|---|---|---|
| Objectives/ mission | Failure to achieve an essential strategic objective. | Failure to achieve essential objective with significant strategic implications. | Failure to achieve objective. | Failure to achieve milestone with implications for business objectives. | Minimal impact on objective. |
| Personnel/ HR capability (lack of key workforce capability) | Multiple fatalities. Impacts on critical business continuity requiring immediate significant HR restructure. | Fatality or permanent disability. Impacts on business continuity requiring immediate HR restructure. | Temporary disability > 28 days. Causes long term loss of critical skills, knowledge and productivity. | Temporary disability < 28 days; emergency treatment; admission to hospital. Causes temporary loss of critical skills, knowledge and productivity. | Temporary injury/ illness requiring non-emergency treatment at a medical or first aid facility. Causes minimal disruption to productivity. |
| Resources/ capability (damage or loss of major resources that:) | Significantly reduces sustainability of corporate business. Affects > 50% of resource allocation, or > $200M. | Prevents delivery of an outcome for a protracted period. Impacts between 30% and 50% of resource allocation but < $200M. | Requires amendments to training regimes. Disrupts outcomes. Impacts between 10% and 30% of resource allocation but < $100M. | Disrupts Unit level training. Results in manageable delays in objectives. Impacts between 5% and 10% of resource allocation, < $50M. | Can be resolved through Unit action but results in insignificant delays in organizational objectives. Impacts < 5% of resource allocation but < $5M. |
| Reputation | Long term damage to Org or Group reputation. | Medium term damage to Org or Group reputation. | Short term damage to Org or Group reputation. | Damage at regional level with isolated reports in regional and local media. | Damage at local and/or Unit level with isolated media reports. |
| Environment and heritage | Damage may be irreparable or take more than two (2) years to remediate. | Damage can only be remediated over an extended period of time, between 6 and 24 months, at significant cost. | Damage requiring significant remediation during a period of between 3 and 6 months at a high cost. | Damage requiring remediation during a period of between 1 and 3 months and at moderate cost. | Damage can be repaired by natural action within one year. |

As advocated in HB 436 Appendix C, a scale for consequences should:

- Have end points that cover the requisite variety (well, it does not actually use that cybernetic term). That is, the scale should cover the extremes of possible outcomes, from upper extremes that are “remarkable” to lower extremes that are “trivial” or very poor.
- Cover measures that are ‘objective’ and tangible.
- Avoid relative measures, such as percentages. (I am not sure about this, as sometimes the percentage shift in performance is the most meaningful measure. Still, you can see the point: relative measures can hide whether performance is good enough or not.)
- Have a number of levels with the necessary resolution, even grouping more tightly around the points where concerns most lie.
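The banding problem in the financial row of Table 3 can be made concrete with a short sketch. It is written in Python and applies only the dollar criterion, although the table also uses percentages of resource allocation. The function name `financial_band` is hypothetical.

```python
import bisect

# Upper edges (in $M) of the financial bands read from the
# "Resources/ capability" row of Table 3: < $5M Minor, < $50M Disruptive,
# < $100M Serious, < $200M Critical, otherwise Catastrophic.
# Assumption: only the dollar criterion is applied; percentages are ignored.
BAND_EDGES = [5, 50, 100, 200]
BAND_NAMES = ["Minor", "Disruptive", "Serious", "Critical", "Catastrophic"]


def financial_band(loss_millions: float) -> str:
    """Return the Table 3 band for a loss expressed in $M."""
    # bisect_right sends a value equal to an edge into the higher band,
    # matching the strict '<' comparisons used in the table.
    return BAND_NAMES[bisect.bisect_right(BAND_EDGES, loss_millions)]


if __name__ == "__main__":
    # Pairs discussed in the text: very different losses, same band,
    # or nearly equal losses, different bands.
    for a, b in [(6, 49), (51, 99), (49, 51), (90, 60)]:
        print(f"${a}M -> {financial_band(a)}, ${b}M -> {financial_band(b)}")
```

Every pair except $49M/$51M falls into a single band. The $49M/$51M pair is split across two bands even though the losses differ by only $2M, which is the resolution problem described above.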
If these scales are logarithmic, with each level a factor of two or ten times the previous one, then they need to be precise near the most likely values. At the extremes, however, they can become too spread out to provide any effective resolution between risks.

Wrong combination

Most of the errors occur when the likelihood and consequence scales are combined to assess the level of risk. There are various ‘usual’ ways of combining point estimates of likelihood and point estimates of consequence into an estimate of risk extent, exposure or level. All of them have a strong chance of producing misleading results.

The most common combination multiplies the likelihood scale by the consequence scale. This combination is, in effect, an approximation of the ‘expected value’ of the level of consequence. Often the words used to express the scale levels show that the levels are distributed exponentially. It is a mistake to treat these scales as if they had equal-appearing intervals and to multiply them to determine the risk level result, as shown in Table 4. The results can be unbalanced:

- unlikely x moderate is 2 x 2 on the scales but $1,000 in effect;
- certain x low is 4 x 1 on the scales but $10,000 in effect;
- rare x critical is 1 x 4 on the scales but also $10,000 in effect.

All three have the same scale result. When they are translated into the ‘extent of required managerial action’ (shown by the colours), they can lie in different bands when absolute numbers are used but in the same band when the scales are used, depending upon where the cut-offs lie.

Table 4. PIG using Absolute Numbers

| Consequence (rating, $) | Rare (1, 0.0001) | Unlikely (2, 0.001) | Likely (3, 0.01) | Certain (4, 0.1) |
|---|---|---|---|---|
| Critical (4, $100,000,000) | 10,000 | 100,000 | 1,000,000 | 10,000,000 |
| Severe (3, $10,000,000) | 1,000 | 10,000 | 100,000 | 1,000,000 |
| Moderate (2, $1,000,000) | 100 | 1,000 | 10,000 | 100,000 |
| Low (1, $100,000) | 10 | 100 | 1,000 | 10,000 |

What should be done is to add the log of the likelihood to the log of the consequence and then take the anti-log of the sum to determine the risk level result.
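The sketch below contrasts the two combinations, using the scale ratings and absolute values from Table 4. The dictionaries and function names are illustrative only. Adding the base-10 logs and taking the anti-log reproduces the product of the absolute values, and that product is what separates the three ‘equal’ cells discussed above.

```python
import math

# Ordinal rating and underlying absolute value for each scale level (Table 4).
LIKELIHOOD = {"rare": (1, 0.0001), "unlikely": (2, 0.001),
              "likely": (3, 0.01), "certain": (4, 0.1)}
CONSEQUENCE = {"low": (1, 1e5), "moderate": (2, 1e6),
               "severe": (3, 1e7), "critical": (4, 1e8)}


def rating_product(lik: str, con: str) -> int:
    """Multiply the ordinal scale ratings (the practice criticised in the text)."""
    return LIKELIHOOD[lik][0] * CONSEQUENCE[con][0]


def log_combination(lik: str, con: str) -> float:
    """Add log10(likelihood) and log10(consequence), then take the anti-log."""
    log_sum = math.log10(LIKELIHOOD[lik][1]) + math.log10(CONSEQUENCE[con][1])
    return 10 ** log_sum


if __name__ == "__main__":
    for lik, con in [("unlikely", "moderate"), ("certain", "low"), ("rare", "critical")]:
        print(lik, "x", con, rating_product(lik, con), round(log_combination(lik, con)))
```

With these helpers, all three pairs give a rating product of 4. The log combination gives about 1,000 for unlikely x moderate and about 10,000 for the other two.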
Of course, these calculations are not actually made, but the look-up table, as shown in Table 5, should reflect these effects.

Table 5. PIG using Logarithmic Combination of Ratings

| Consequence (rating, $) | Rare (1, 0.0001) | Unlikely (2, 0.001) | Likely (3, 0.01) | Certain (4, 0.1) |
|---|---|---|---|---|
| Critical (4, $100,000,000) | 4 | 8 | 12 | 16 |
| Severe (3, $10,000,000) | 3 | 6 | 9 | 12 |
| Moderate (2, $1,000,000) | 2 | 4 | 6 | 8 |
| Low (1, $100,000) | 1 | 2 | 3 | 4 |

The second way of combining the scales is the most common when using the so-called quantitative approach. All you do is multiply likelihood by consequence on an interval scale, such as 0–10, yielding a result between 0 and 100. Alternatively, you multiply the likelihood (0–1) by the dollar value of the consequence to give a dollar amount. In both cases, you compare the result against a limit established by the risk criteria process, reflecting the risk culture or attitude of the enterprise. This result is influenced by the errors underlying the estimates and by the assumptions about their distribution.

Such operations are equivalent to a single point estimate of the extent of the consequence. They are the same as a decision tree with two branches. One branch, with probability equal to the likelihood, has the consequence as its outcome. The other branch, with probability (1 − likelihood), has an outcome of 0. The expected value is therefore likelihood x consequence + (1 − likelihood) x 0.

This combination actually ‘degrades’ the extent of the consequence. Suppose the scale point for consequence covers $1,000–2,000 and the likelihood is ‘unlikely’, say 0.1–0.2 on that scale point. The combination is then equivalent to an “expected value” of $100–400. If the consequence scale point were to cover $4,000–5,000 and the likelihood interval were ‘very likely’ (0.9–1), then the combination would be equivalent to $3,600–5,000. It is far from certain that the managers setting the ‘acceptance’ levels are aware of the effect of this multiplication.

Some practitioners estimate likelihood and impact both on a 0–1 scale and then combine them with the formula likelihood + impact − likelihood x impact. This is the probability that at least one of two independent events occurs.
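The interval arithmetic behind the ‘degrading’ examples, and the identity behind the formula just mentioned, can be checked with a few lines of Python. This is a sketch. It assumes that both likelihood and consequence are non-negative intervals, so the bounds of their product come from the endpoints. The helper names are hypothetical.

```python
from typing import Tuple

Interval = Tuple[float, float]


def expected_value_range(likelihood: Interval, consequence: Interval) -> Interval:
    """Range of likelihood x consequence + (1 - likelihood) x 0.

    Assumes both intervals are non-negative, so the lower bound is the
    product of the lower endpoints and the upper bound is the product
    of the upper endpoints.
    """
    (p_lo, p_hi), (c_lo, c_hi) = likelihood, consequence
    return (p_lo * c_lo, p_hi * c_hi)


def union_formula(likelihood: float, impact: float) -> float:
    """likelihood + impact - likelihood x impact.

    For independent events A and B this is P(A or B), which also
    equals 1 - (1 - likelihood) x (1 - impact).
    """
    return likelihood + impact - likelihood * impact


if __name__ == "__main__":
    print(expected_value_range((0.1, 0.2), (1000, 2000)))
    print(expected_value_range((0.9, 1.0), (4000, 5000)))
    print(union_formula(0.3, 0.4))
```

The first two calls reproduce the $100–400 and $3,600–5,000 ranges in the text. The last one shows that the combined ‘score’ behaves like the chance of either of two events occurring, rather than a measure of the size of a consequence.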
In this case, they are treating the likelihood as if it represented both the chance that an event will happen and the chance that the consequence is at the given, fixed level. Again, it comes back to the difficulty of using point estimates to represent what is really a range of estimates. If the true underlying distributions are not symmetrical, or not normal, the combination can be misleading.

There are examples where the combinations have no mathematical basis at all. Table 6 comes from the same organization as before (where so many sins have been confounded). Its cells are numbered sequentially, from 1 for the cell with the highest pair to 25 for the cell with the lowest pair. The colours representing the risk levels then seem to be arbitrarily assigned to the cells.

Table 6. Example of Labelled Cells in a PIG

| Likelihood / Impact | Catastrophic | Critical | Serious | Disruptive | Minor |
|---|---|---|---|---|---|
| Almost certain | 1 - Extreme | 2 - Extreme | 5 - High | 9 - Substantial | 16 - Medium |
| Likely/ probable | 3 - High | 4 - High | 8 - Substantial | 14 - Medium | 21 - Low |
| Occasional/ possible | 6 - Substantial | 7 - Substantial | 12 - Medium | 15 - Medium | 23 - Low |
| Rare/ unlikely | 10 - Medium | 11 - Medium | 13 - Medium | 20 - Low | 24 - Low |
| Highly improbable/ doubtful | 17 - Low | 18 - Low | 19 - Low | 22 - Low | 25 - Low |

As acknowledged in Appendix C of HB 436: 2013, it is acceptable to use ‘skewed’ risk matrices, in which either likelihood or, preferably, impact is emphasized in the combination. If an organization (or its senior decision-makers) is risk averse, almost every cell in the ‘catastrophic’ or ‘critical’ columns could be coloured red to indicate the need for immediate managerial action. This combination appears to be multiplicative, with a weighting to the impact scale.

Julian Talbot presents an example of a PIG that illustrates another point about how to represent the combination of likelihood and consequence or impact.
Although Figure 4 is not very clear, it does show that each consequence scale is associated with its own likelihood scale. That is, the wording used for the probability of reaching a level of consequence for people differs from the wording used for the impact on financial or reputation objectives.

Figure 4. Risk Matrix with multiple scales

Wrong use

Even if PIGs are properly formed, in both their scales and their combination, they can still be misused. Cox (2008) notes that, apart from the problems of insufficient resolution, there are other errors. These include assigning “… higher qualitative ratings to quantitatively smaller risks …”, suboptimal resource allocation, and ambiguous inputs and outputs.

Misuse also includes confusion about which likelihood is being assessed. In the earliest versions of PIGs, it was the likelihood of the risk event. In more recent assessments, it is the likelihood of the level of consequence arising from an event. Of course, this estimate can be influenced by the likelihood of the events that contribute to the consequence, but it is the result of the event that is assessed rather than the event itself.

Even if the combination is validly formed, such as by adding logarithmic scales, the lack of precision in the scales can cause Type II errors: concluding that a difference does not exist when it actually does. As a result, risks that warrant attention can be missed.

What Should We Do Instead: turning PIGs into BACON

All that we are interested in is, first, determining whether action should be taken in response to the evaluated level of risk and, later, deciding which of the alternative responses should be used. PIGs should not be used to make the first decision and must never be used for the second.

What should be used? The first decision could be as simple as just guessing whether there is a ‘strong enough’ chance of exceeding a threshold of concern.
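This section goes on to propose a threshold test for that first decision, based on a Pearson-Tukey three-point estimate of the mean. A minimal Python sketch follows. It assumes the weights (3, 10, 3)/16 applied to the judged 5%, 50% and 95% points (E5, E50, E95), and it reads ‘significantly’ as a margin of (E95 − E5)/4. The function names and the injury-day figures in the example are hypothetical.

```python
def pearson_tukey_mean(e5: float, e50: float, e95: float) -> float:
    """Three-point estimate of the mean: (3*E5 + 10*E50 + 3*E95) / 16."""
    if not e5 <= e50 <= e95:
        raise ValueError("expected E5 <= E50 <= E95")
    return (3 * e5 + 10 * e50 + 3 * e95) / 16


def should_act(threshold: float, e5: float, e50: float, e95: float) -> bool:
    """True if the estimated mean exceeds the threshold of concern by more
    than (E95 - E5)/4, the rough margin for 'significantly' used here."""
    margin = (e95 - e5) / 4
    return pearson_tukey_mean(e5, e50, e95) > threshold + margin


if __name__ == "__main__":
    # Hypothetical judgements, in injury-days over the period of interest.
    e5, e50, e95 = 10, 40, 150
    print(pearson_tukey_mean(e5, e50, e95))  # estimated mean
    for threshold in (15, 25):
        print(threshold, should_act(threshold, e5, e50, e95))
```

With these figures the estimated mean is 55 injury-days and the margin is 35. A threshold of 15 therefore triggers action (55 > 50), while a threshold of 25 does not (55 < 60).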
That is, a point is set on the consequence scale that, if exceeded, should trigger action. It is then estimated whether this point will be exceeded, given the conditions (the set of events) that could occur in the time frame of interest.

More precisely, concern should be measured on a suitable interval scale, such as the number of injury-days, the number of adverse reports in the media, or dollars of financial gain. The ‘chance’ should then be based on the Pearson-Tukey estimate of the expected performance on this scale. This estimate uses three points on the distribution of values: the 5% point (E5), the 50% point (E50) and the 95% point (E95). These points are combined into an estimate of the mean by the formula (3 x E5 + 10 x E50 + 3 x E95)/16. If this mean estimate exceeds the threshold ‘significantly’, say by about (E95 − E5)/4, then the indicated action should be taken.

The second decision involves judging the level of risk after responses have been implemented, balanced against the costs of implementing them. The level of risk is a measure of the ‘benefits’ of the risk responses, so this decision involves a form of cost-benefit analysis. The level can be estimated as before, using the Pearson-Tukey calculation of the mean value on the consequence metric. However, further steps are needed to turn this value into a dollar value that can be included in the cost-benefit result. How to carry out this conversion is the subject of another paper.

References

Central Intelligence Agency (1993), Words of Estimative Probability, https://www.cia.gov/library/center-for-the-study-of-intelligence/kentcsi/vol8no4/html/v08i4a06p_0001.htm (cited 4 Aug 15)

Cox, L. (2008), What’s wrong with risk matrices?, Risk Analysis, 28(2), 497–512

Johnson, E. (1973), Numerical encoding of qualitative expressions of uncertainty, Report AD 780 814, Army Research Institute for the Behavioral and Social Sciences, Arlington, VA

Mosteller, F. and Youtz, C. (1990), Quantifying Probabilistic Expressions, Statistical Science, 5(1), 2–34

Standards Australia (1999), AS 4360: 1999 Risk Management, Standards Australia, Sydney, Australia

Standards Australia (2013), HB 436: 2013 Companion to AS/NZS ISO 31000: 2009 Risk Management – Guidelines and Principles, Standards Australia, Sydney, Australia

Talbot, J. (2013), What’s right with risk matrices?, http://www.jakeman.com.au/media/knowledge-bank/whats-right-with-risk-matrices (cited 30 Aug 13)