Document

advertisement

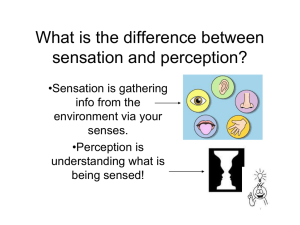

Yiannis: Our project envisions an agent capable of performing actions after recognising the objects in the scene. Tobi: What is the difference of your approach compared to the surveillance systems for example, that do well on recognising humans, monitor and react on their actions? Yiannis: Current state-of-the-art approaches in these systems apply machine learning techniques on large databases of collected data, mostly involving human-centric specific actions, without object manipulation. Moreover, these systems are very good at identifying already seen actions, but fail at recognising new ones. This is the main difference of our approach, that uses multimodal perception coupled with liguistic, cognitive knowledge to predict and recognise previously unseen actions. Andreas: In our project we are thinking of incorporating auditory perception as an additional modality. Actually parse the scene, both visual and auditory and discover the structure of what we parse as we perceive auditory signals, much in the same way as we do in the visual space. We want to extract the "structural descriptions" of a scene where for example we hear a man tapping on the table or opening a window. Malcom: You stated that you believe that our perception comes from understanding of physics. Andreas: I believe that we understand what we perceive. At some point, what we all have is perception and we use a system like language to organise knowledge. Avis: But, as many argue, it is possible to think without language. We can act without having a language. Andreas: I respectfully disagree. We all use a system like language to organise knowledge. So depending on how one defines "language", this symbolic system is essential to our understanding and organising of what we perceive. Malcom: Do you think that we have words in the brain? Yiannis: What is a word? Andreas: I am going to be very provocative, but there is a belief that the way we speak is related to our motor system of auditory tract. It is what the motor theory is all about. We acquire knowledge by incorporating the motor system. Yiannis: We can not only do actions, but we can also imagine them. There are human action representations in the brain without being actually realised. Malcom: Vision complements audio understanding. There were experiments where the lips were saying one thing but the sound was different. People were very confused by it. Mounya: There is not enough proof that we use motoric representation to help us understand speech. There might be other mechanism to disambiguate speech sounds. Some features of the sound itself. Andreas: I don’t want to build a big SVN. We want to find the optimal tradeoff between the discriminative and generative. It’s neither. It’s the balance. Motoric representation gives us an additional constraint on the speech. But there are small children who do not talk, but understand their parents. There are mute people who understand what people say. Dave: You can represent anything with grammar. But there may be better representations for some processes. Yiannis: I think the meeting point of the different perceptual systems is syntax. And we borrow the structure and the principles of the syntax of language. Yiannis: There is the language that reasons and asks vision/audition for more sensory input. If there is a conflict i.e. the language expects a knife and the vision sees a teddy bear, the system will decide that it is confused and will have to move to a different viewpoint to get more/better sensory input and disambiguate the scene. That is exactly why we need the robot. Active perception.