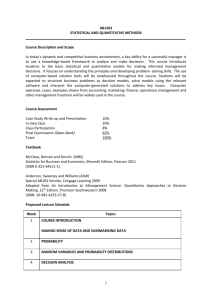

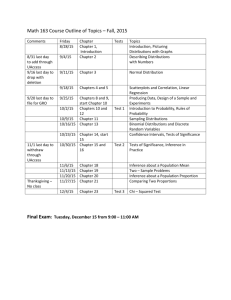

Inference with Heavy-Tails in Linear Models
Danny Bickson and Carlos Guestrin

Motivation: large-scale network modeling
• Huge amounts of data. Daily stats collected from the PlanetLab network using PlanetFlow:
  • 662 PlanetLab nodes spread over the world
  • 19,096,954,897 packets were transmitted
  • 10,410,216,514,054 bytes were transmitted
  • 24,012,123 unique IP addresses observed
• Bandwidth distribution is heavy tailed: the top 1% of flows account for 19% of the total traffic.
• Number of packets is heavy tailed [Lakhina-Sigcomm05].
• Network flows are linear: the total flow at a node is composed of sums of distinct flows.
[Figure: Heavy-tailed traffic distribution. Bandwidth/port number distribution is heavy tailed. Axis: number of packets.]

Our goal
• Use linear multivariate statistical methods for network modeling, monitoring, performance analysis and intrusion detection.
• Compute the posterior marginal p(x|y).

The challenge: how to model heavy-tailed network traffic?

Previous approaches for computing inference in heavy-tailed linear models
• Typically cannot be computed in closed form.
• Various approximations: mixtures of distributions [Chen-Infocom07], histograms [Lakhina-Sigcomm05], sketches [Li-IMC06], entropy [Lakhina-Sigcomm05], sampled moments [Nguyen-IMC07], etc.
[Figure: Difficulties in previous approximations, illustrated with non-parametric BP (NBP) [Sudderth-CVPR03]. Panel labels: Sample, Fitting, Quantization, Resampling, NBP output.]

Stable distribution
• A family of heavy-tailed distributions.
• Used in different problem domains: economics, physics, geology, etc.
• Example: Cauchy, Gaussian and Lévy distributions are stable.
• Written S(α, β, γ, δ), with characteristic exponent α, skew β, scale γ and shift δ.

Linearity of stable distribution
• Closed under scalar multiplication.
• Closed under addition.

Modeling network flows using stable distributions
• We use the linear model Y = AX + Z.
• X, Z are i.i.d. hidden variables drawn from a stable distribution; Y are the observations.
• The problem: the stable distribution has neither a closed-form cdf nor a closed-form pdf (thus copulas or CFG cannot be used).
• Solution: perform inference in the characteristic function (Fourier) domain.

Related work on linear models
• Convolutional factor graphs (CFG) [Mao-Tran-Info-Theory-03]: assumes the pdf factorizes as a convolution of factors (and shows this is possible for any linear model).
• Copula method: handles the linear model in the cdf domain.
• Independent component analysis (ICA): learns linear models and tries to reconstruct X. It can be used as a complementary method, since we assume that A is given.

Linear characteristic graphical models (LCM)
• CFG shows that any linear model can be represented as a convolution.
• Given a linear model, we define LCM as the product of the joint characteristic functions for the probability distribution.
• Motivation: LCM is the dual model to the convolution representation of the linear model.
• Unlike CFG, LCM is always defined, for any distribution.

Inference in the Fourier domain
• Because stable distributions have no closed-form pdf, we have to compute marginalization in the Fourier domain.
• The dual operation to marginalization is slicing.
• The projection-slice theorem allows us to compute inference in the Fourier domain.
• Inference is computed by the slicing operation.
[Figure: Marginalization of the joint distribution is marked "Difficult!". Instead: 2D Fourier transform to the 2D characteristic function, slicing operation to the marginal characteristic function, inverse Fourier to the posterior marginal.]

Main contribution
• First to compute exact inference in the linear-stable model conveniently in closed form.
• Efficient iterative approximate inference.
• Our solution is:
  • More efficient
  • More accurate
  • Requires less memory/storage

Main result 1: exact inference in LCM with stable distributions
• LCM-Elimination: exact inference algorithm for a general linear model.
• Variable elimination algorithm in the Fourier domain.
• Significance: gives, for the first time, exact inference results in closed form.
• Efficiency is cubic in the number of variables.

Approximate inference in LCM
• Borrows ideas from belief propagation to compute approximate inference in the Fourier domain.
• Uses distributivity of the slice and product operations.
• Algorithm is exact on trees.

Main result 2: approximate inference in LCM with stable distributions
• Derived the Stable-Jacobi approximate inference algorithm.
• Input: prior marginal. Output: posterior marginal.
• Significance: when it converges, it converges to the exact result, while typically being more efficient.
• We analyze its convergence and give two sufficient conditions for convergence.

Application: multiuser detection
• Sample CDMA problem setup borrowed from [Yener-Tran-Comm.-2002].
• Detection: given the channel transformation A, the observation vector y, and the stable parameters of the noise z, compute the most probable transmission x.
• Exact inference: more accurate detection than methods designed for the AWGN (additive white Gaussian noise) channel.
• Approximate inference: converges, as predicted, to the exact conditional posterior marginals.
[Figure: Exact inference and Stable-Jacobi approximate inference algorithm. Lower BER (bit error rate) is better.]

Application: network monitoring
• We model PlanetLab network flows using an LCM with stable distributions.
• Extracted traffic flows from 25 Jan 2010: a total of 247,192,372 flows (non-zero entries of the matrix A).
• Fitted flows for each node (vector b): a total of 16,741,746 unique nodes.
• Cost of elimination is too high: O(16M^3).
• Solution: use Stable-Jacobi with GraphLab.
[Figure panels: Running time, Speedup, Accuracy.]

Conclusion
• First time exact inference in the linear-stable model.
• Faster, more accurate, reduces memory consumption, and is conveniently computed in closed form.
• Future work: investigate other families of distributions, such as Wishart and geometric stable distributions, and other transforms.

Acknowledgements
This research was supported by:
• ARO MURI W911NF0710287
• ARO MURI W911NF0810242
• NSF Mundo IIS-0803333
• NSF Nets-NBD CNS-0721591
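
The poster states that stable distributions are closed under addition and scalar multiplication. The sketch below checks this numerically through characteristic functions. It is an illustration only, not the authors' code: it assumes the common "S1" parameterization of S(α, β, γ, δ) with α ≠ 1 (the poster does not say which parameterization it uses), and the helper names `stable_cf`, `add_stable` and `scale_stable` are hypothetical.

```python
import numpy as np

def stable_cf(t, alpha, beta, gamma, delta):
    """Characteristic function of S(alpha, beta, gamma, delta), alpha != 1.

    Assumed S1 form:
    exp(-gamma^alpha |t|^alpha (1 - i beta sign(t) tan(pi alpha / 2)) + i delta t)
    """
    t = np.asarray(t, dtype=float)
    skew = 1.0 - 1j * beta * np.sign(t) * np.tan(np.pi * alpha / 2.0)
    return np.exp(-(gamma ** alpha) * np.abs(t) ** alpha * skew + 1j * delta * t)

def add_stable(p1, p2):
    """Parameters of X1 + X2 for independent stable X1, X2 with equal alpha."""
    alpha, b1, g1, d1 = p1
    alpha2, b2, g2, d2 = p2
    if alpha != alpha2:
        raise ValueError("closure under addition needs a shared alpha")
    w1, w2 = g1 ** alpha, g2 ** alpha
    beta = (b1 * w1 + b2 * w2) / (w1 + w2)   # scale-weighted skew
    gamma = (w1 + w2) ** (1.0 / alpha)       # scales add in the alpha-th power
    return (alpha, beta, gamma, d1 + d2)     # shifts add

def scale_stable(a, p):
    """Parameters of a * X for a nonzero scalar a."""
    alpha, beta, gamma, delta = p
    return (alpha, np.sign(a) * beta, abs(a) * gamma, a * delta)

if __name__ == "__main__":
    t = np.linspace(-5.0, 5.0, 201)
    p1 = (1.5, 0.5, 1.0, 0.3)
    p2 = (1.5, -0.2, 2.0, -1.0)
    # The cf of a sum of independent variables is the product of their cfs.
    print(np.allclose(stable_cf(t, *p1) * stable_cf(t, *p2),
                      stable_cf(t, *add_stable(p1, p2))))
    # The cf of a*X at t equals the cf of X at a*t.
    print(np.allclose(stable_cf(-2.0 * t, *p1),
                      stable_cf(t, *scale_stable(-2.0, p1))))
```

Because both operations only update the four parameters, the result of a linear combination never has to be represented as a density, which is the property the closed-form inference on the poster relies on.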
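
The statement that slicing is the dual operation to marginalization can also be illustrated on a small linear model. The sketch below is a toy example under stated assumptions, not the LCM-Elimination or Stable-Jacobi algorithm: it uses a noise-free model Y = AX with independent stable X_j sharing one α ≠ 1, the S1 parameterization, and hypothetical helper names (`joint_cf_linear`, `marginal_params`). It compares the joint characteristic function of Y, sliced at t2 = 0, with the characteristic function of the marginal of Y1 obtained from the closure rules.

```python
import numpy as np

def stable_cf(t, alpha, beta, gamma, delta):
    """Characteristic function of S(alpha, beta, gamma, delta), alpha != 1 (S1 form)."""
    t = np.asarray(t, dtype=float)
    skew = 1.0 - 1j * beta * np.sign(t) * np.tan(np.pi * alpha / 2.0)
    return np.exp(-(gamma ** alpha) * np.abs(t) ** alpha * skew + 1j * delta * t)

def joint_cf_linear(t, A, params):
    """Joint cf of Y = A X at frequency vector t, for independent stable X_j.

    E[exp(i t^T A X)] factorizes as prod_j cf_{X_j}((A^T t)_j).
    """
    s = A.T @ np.asarray(t, dtype=float)
    return np.prod([stable_cf(s_j, *p) for s_j, p in zip(s, params)])

def marginal_params(row, params):
    """Stable parameters of sum_j row[j] * X_j (row must not be all zeros)."""
    alpha = params[0][0]
    w = np.array([abs(a) ** alpha * g ** alpha
                  for a, (_, _, g, _) in zip(row, params)])
    beta = sum(np.sign(a) * b * w_j
               for a, (_, b, _, _), w_j in zip(row, params, w)) / w.sum()
    gamma = w.sum() ** (1.0 / alpha)
    delta = sum(a * d for a, (_, _, _, d) in zip(row, params))
    return (alpha, beta, gamma, delta)

if __name__ == "__main__":
    A = np.array([[1.0, -2.0, 0.5],
                  [0.3, 1.0, 4.0]])
    params = [(1.5, 0.5, 1.0, 0.3), (1.5, -0.2, 2.0, -1.0), (1.5, 0.0, 0.7, 2.0)]
    y1 = marginal_params(A[0], params)
    for t1 in np.linspace(-3.0, 3.0, 7):
        sliced = joint_cf_linear([t1, 0.0], A, params)  # slice: set t2 = 0
        direct = stable_cf(t1, *y1)                     # cf of the marginal of Y1
        print(t1, np.isclose(sliced, direct))
```

Setting a frequency coordinate to zero in the joint characteristic function plays the role that integrating out a variable plays in the pdf domain, so no integral of the (unavailable) stable density is needed.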