Sentence

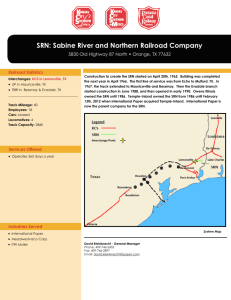

The mental representation of sentences
Tree structures or state vectors?

Stefan Frank (S.L.Frank@uva.nl)
With help from Vera Demberg, Rens Bod, Victor Kuperman, and Brian Roark

Understanding a sentence: the very general picture
A word sequence (the cat is on the mat) is mapped by “comprehension” onto a “meaning”.

Theories of mental representation
Sentence meaning:
− perceptual simulation
− conceptual network (cat, on, mat, …)
− logical form: ∃x,y: cat(x) ∧ mat(y) ∧ on(x,y)
Sentence “structure”:
− tree structure
− state vector or activation pattern
− ?

Grammar-based vs. connectionist models
The debate (or battle) between the two camps focuses on particular (psycho-)linguistic phenomena, e.g.:
− Grammars account for the productivity and systematicity of language (Fodor & Pylyshyn, 1988; Marcus, 1998)
− Connectionism can explain why there is no (unlimited) productivity and (pure) systematicity (Christiansen & Chater, 1999)

From theories to models
− Implemented computational models can be evaluated and compared more thoroughly than ‘mere’ theories
− Take a common grammar-based model and a common connectionist model
− Compare their ability to predict empirical data (measurements of word-reading time)
− Simple Recurrent Network (SRN) versus Probabilistic Context-Free Grammar (PCFG)

Probabilistic Context-Free Grammar
− A context-free grammar with a probability for each production rule (conditional on the rule’s left-hand side)
− The probability of a tree structure is the product of the probabilities of the rules involved in its construction.
− The probability of a sentence is the sum of the probabilities of all its grammatical tree structures.
− Rules and their probabilities can be induced from a large corpus of syntactically annotated sentences (a treebank).
− Wall Street Journal treebank: approx. 50,000 sentences from WSJ newspaper articles (1988−1989)

Inducing a PCFG
[Figure: treebank parse tree of the sentence “It has no bearing on our work force today.”, with phrase labels (S, NP, VP, PP) and part-of-speech labels]

Simple Recurrent Network (Elman, 1990)
− Feedforward neural network with recurrent connections
− Processes sentences word by word
− Usually trained to predict the upcoming word (i.e., the input at t+1)
[Figure: SRN architecture. Input: the word at t, plus a copy of the hidden activation at t−1. Hidden layer: state vector representing the sentence up to word t. Output layer: estimated probabilities for words at t+1.]

Word probability and reading times (Hale, 2001; Levy, 2008)
Surprisal theory: the more unexpected the occurrence of a word, the more time is needed to process it.
Formally:
− A sentence is a sequence of words: w_1, w_2, w_3, …
− The time needed to read word w_t is logarithmically related to its probability in the ‘context’:
  RT(w_t) ~ −log Pr(w_t | context)   (the surprisal of w_t)
− If nothing else changes, the context is just the sequence of previous words:
  RT(w_t) ~ −log Pr(w_t | w_1, …, w_{t−1})   (the surprisal of w_t)
Both PCFGs and SRNs can estimate Pr(w_t | w_1, …, w_{t−1}). So can they predict word-reading times?

Testing surprisal theory (Demberg & Keller, 2008)
Reading-time data:
− Dundee corpus: approx. 2,400 sentences from The Independent newspaper editorials
− Read by 10 subjects
− Eye-movement registration
− First-pass RTs: fixation time on a word before any fixation on later words
Computation of surprisal:
− PCFG induced from WSJ treebank
− Applied to Dundee corpus sentences
− Using Brian Roark’s incremental PCFG parser

Testing surprisal theory (continued)
Result: No significant effect of word surprisal on RT, apart from the effects of Pr(w_t) and Pr(w_t | w_{t−1})
But accurate word prediction is difficult because of
− required world knowledge
− differences between WSJ and The Independent:
  − 1988-’89 versus 2002
  − general WSJ articles versus Independent editorials
  − American English versus British English
  − only major similarity: both are in English
Test for a purely ‘structural’ (i.e., non-semantic) effect by ignoring the actual words
[Figure: the same parse tree of “it has no bearing on our work force today.”, highlighting the part-of-speech (pos) tags]

Testing surprisal theory (continued)
‘Unlexicalized’ (or ‘structural’) surprisal
− PCFG induced from WSJ trees with words removed
− surprisal estimation by parsing sequences of pos-tags (instead of words) of Dundee corpus texts
− independent of semantics, so more accurate estimation possible
− but probably a weaker relation with reading times
Is a word’s RT related to the predictability of its part-of-speech?
Result: Yes, statistically significant (but very small) effect of pos-surprisal on word-RT

Caveats
Statistical analysis:
− The analysis assumes independent measurements
− Surprisal theory is based on dependencies between words
− So the analysis is inconsistent with the theory
Implicit assumptions:
− The PCFG forms an accurate language model (i.e., it gives high probability to the parts-of-speech that actually occur)
− An accurate language model is also an accurate psycholinguistic model (i.e., it predicts reading times)

Solutions
Sentence-level (instead of word-level) analysis
− Both PCFG and statistical analysis assume independence between sentences
− Surprisal averaged over pos-tags in the sentence
− Total sentence RT divided by sentence length (# letters)
Measure accuracy
a) of the language model: lower average surprisal means a more accurate language model
b) of the psycholinguistic model: a stronger correlation between RT and surprisal means a more accurate psycholinguistic model
If a) and b) increase together, accurate language models are also accurate psycholinguistic models

Comparing PCFG and SRN
PCFG (just like Demberg & Keller)
− Train on WSJ treebank (unlexicalized)
− Parse pos-tag sequences from Dundee corpus
− Obtain range of surprisal estimates by varying ‘beam-width’ parameter, which controls parser accuracy
SRN
− Train on sequences of pos-tags (not the trees) from WSJ
− During training (at regular intervals), process pos-tags from Dundee corpus, obtaining a range of surprisal estimates
Evaluation
− Language model: average surprisal measures inaccuracy (and estimates language entropy)
− Psycholinguistic model: correlation between surprisals and RTs

Results
[Figure: results for the PCFG and the SRN]

Preliminary conclusions
− Both models account for a statistically significant fraction of variance in reading-time data.
− The human sentence-processing system seems to be using an accurate language model.
− The SRN is the more accurate psycholinguistic model.
− But PCFG and SRN together might form an even better psycholinguistic model

Improved analysis
Linear mixed-effect regression model (to take into account random effects of subject and item)
Compare regression models that include:
− surprisal estimates by PCFG with largest beam width
− surprisal estimates by fully trained SRN
− both
Also include: sentence length, word frequency, forward and backward transitional probabilities, and all significant two-way interactions between these

Results
Estimated β-coefficients (and associated p-values) for the effect of surprisal:
− Regression model includes PCFG only: PCFG surprisal β = 0.45 (p < .02)
− Regression model includes SRN only: SRN surprisal β = 0.64 (p < .001)
− Regression model includes both: PCFG surprisal β = −0.46 (p > .2); SRN surprisal β = 1.02 (p < .01)

Conclusions
− Both PCFG and SRN do account for the reading-time data to some extent
− But the PCFG does not improve on the SRN’s predictions
− No evidence for tree structures in the mental representation of sentences

Qualitative comparison
Why does the SRN fit the data better than the PCFG? Is it more accurate on a particular group of data points, or does it perform better overall?
Take the regression analyses’ residuals (i.e., differences between predicted and measured reading times):
  δ_i = |resid_i(PCFG)| − |resid_i(SRN)|
δ_i is the extent to which data point i is predicted better by the SRN than by the PCFG.
Is there a group of data points for which δ is larger than might be expected? Look at the distribution of the δs.
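The residual comparison just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the residual values are randomly generated stand-ins (no real reading-time data), and the function names `delta` and `skewness` are hypothetical helpers, not part of the original analysis. The skewness statistic is added here as one simple way to summarize the shape of the δ distribution; it is not the test used in the talk.

```python
import random
from statistics import mean

def delta(resid_pcfg, resid_srn):
    """delta_i = |resid_i(PCFG)| - |resid_i(SRN)|.

    A positive value means data point i is predicted better by the SRN.
    """
    if len(resid_pcfg) != len(resid_srn):
        raise ValueError("residual lists must have the same length")
    return [abs(p) - abs(s) for p, s in zip(resid_pcfg, resid_srn)]

def skewness(values):
    """Moment-based sample skewness (0 for a perfectly symmetric sample)."""
    n = len(values)
    m = mean(values)
    m2 = sum((v - m) ** 2 for v in values) / n
    m3 = sum((v - m) ** 3 for v in values) / n
    if m2 == 0:
        raise ValueError("skewness is undefined for constant data")
    return m3 / m2 ** 1.5

# Hypothetical residuals, generated only to make the sketch runnable.
rng = random.Random(0)
resid_pcfg = [rng.gauss(0.0, 1.0) for _ in range(1000)]
resid_srn = [rng.gauss(0.0, 1.0) for _ in range(1000)]

d = delta(resid_pcfg, resid_srn)
print("mean delta:", mean(d))          # > 0 would suggest an overall SRN advantage
print("skewness of delta:", skewness(d))  # > 0 would suggest a right-skewed distribution
```

A mean clearly above zero with little skew would correspond to an overall advantage for the SRN, whereas strong positive skew would point to a particular subset of data points.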
Possible distributions of δ
− symmetrical, mean is 0: no difference between SRN and PCFG (only random noise)
− right-shifted: overall better predictions by SRN
− right-skewed: a particular subset of the data is predicted better by SRN

Test for symmetry
− If the distribution of δ is asymmetric, particular data points are predicted better by the SRN than the PCFG.
− The distribution is not significantly asymmetric (two-sample Kolmogorov-Smirnov test, p>.17)
− The SRN seems to be a more accurate psycholinguistic model overall.

Questions I cannot (yet) answer (but would like to)
Why does the SRN fit the data better than the PCFG? Perhaps people…
− are bad at dealing with long-distance dependencies in a sentence?
− store information about the frequency of multi-word sequences?

Questions I cannot (yet) answer (continued)
In general:
− Is this SRN a more accurate psychological model than this PCFG?
− Are SRNs more accurate psychological models than PCFGs?
− Does connectionism make for more accurate psychological models than grammar-based theories?
− What kind of representations are used in human sentence processing?
Surprisal-based model evaluation may provide some answers
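To make the surprisal-based evaluation used throughout concrete, here is a minimal Python sketch of the sentence-level measures described earlier: surprisal averaged over the pos-tags of a sentence, total sentence RT divided by the number of letters, and the correlation between the two. Everything numeric below is made up for illustration; the function names are hypothetical, the conditional probabilities would in practice come from a PCFG or SRN, and the choice of base-2 logarithms (bits) is an assumption, since the slides do not specify a base.

```python
import math

def surprisal(prob):
    """Surprisal of one item: -log2 Pr(w_t | w_1, ..., w_{t-1}). Base 2 is an assumption."""
    if not 0.0 < prob <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(prob)

def mean_surprisal(probs):
    """Average surprisal over the pos-tags of one sentence."""
    return sum(surprisal(p) for p in probs) / len(probs)

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)

# Hypothetical sentences: (conditional pos-tag probabilities from some
# language model, total reading time in ms, sentence length in letters).
sentences = [
    ([0.50, 0.25, 0.50, 0.40], 820.0, 18),
    ([0.10, 0.20, 0.05, 0.30], 1450.0, 21),
    ([0.60, 0.70, 0.50, 0.80], 700.0, 19),
    ([0.30, 0.15, 0.25, 0.20], 1100.0, 20),
]

avg_surprisals = [mean_surprisal(probs) for probs, _, _ in sentences]
rt_per_letter = [rt / letters for _, rt, letters in sentences]

# (a) Language-model accuracy: lower average surprisal is better.
print("average surprisal:", sum(avg_surprisals) / len(avg_surprisals))
# (b) Psycholinguistic accuracy: stronger correlation with RT is better.
print("correlation with RT per letter:", pearson(avg_surprisals, rt_per_letter))
```

Repeating this for a range of models (for example, different beam widths or training stages) would show whether the two accuracy measures improve together.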