Academic writing - the Department of Computer and Information

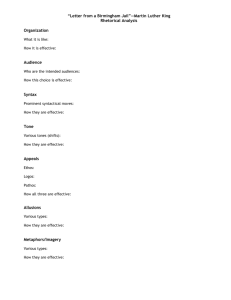

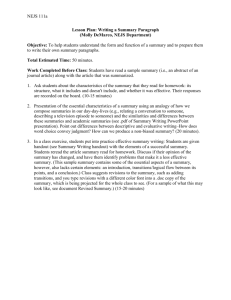

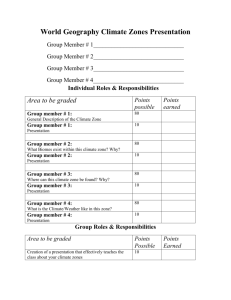

Predicting Text Quality for Scientific Articles
Annie Louis, University of Pennsylvania
Advisor: Ani Nenkova

Text quality: well-written nature
• Quality of content and writing in the text
• Useful to know text quality in different settings
• E.g., search: lots of relevant results; further rank by content and writing quality

Problem definition
“Define text quality factors that are
1. generic (applicable to most texts)
2. domain-specific (unique to writing about science)
and develop automatic methods to quantify them.”
Two types of science writing
1. Conference and journal publications
2. Science journalism

Application settings
1. Evaluation of system-generated summaries (generic text quality)
2. Writing feedback (domain-specific text quality)
3. Science news recommendation (domain-specific text quality)

Previous work in text quality prediction
1. Machine-produced text
   • Summarization, machine translation
2. Human-written text
   • Predicting grade level of an article
   • Automatic essay scoring
Focus on generic indicators of text quality
• Word familiarity, sentence length, syntax, discourse

Thesis contributions
1. Make a distinction between generic and domain-specific text quality aspects
2. Define new domain-specific aspects in the genre of writing about science
3. Demonstrate the use of these measures in representative applications

Overview
1. Generic text quality factors and summary evaluation
2. Predicting quality for science articles and applications

I. Generic text quality
Applied to automatic summary evaluation

Automatic summary evaluation
• Facilitates system development
• Lots of summaries with human ratings available from large-scale summarization evaluations
• Goal: find automatic metrics that correlate with human judgements of quality
1. Content quality: what is said in the summary?
2. Linguistic quality: how is it conveyed?
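
The goal stated above, finding automatic metrics that correlate with human judgements, can be illustrated with a small rank-correlation check. This is only a sketch: the slides do not say which correlation coefficient was used, so Spearman's rank correlation is an assumption here, and the scores below are invented for illustration.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical per-system scores (one entry per summarization system).
human_scores = [4.1, 3.2, 2.5, 3.8]   # average human rating, higher is better
metric_scores = [0.9, 0.5, 0.6, 0.8]  # automatic metric, higher is better

rho = spearman(human_scores, metric_scores)
```

A metric for which lower is better (a divergence, for instance) would need its scores negated before being compared with ratings where higher is better.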

Content evaluation of summaries [Louis and Nenkova, 2009]
• Input-summary similarity ~ summary content quality
• Best way to measure similarity: Jensen-Shannon divergence (JSD)
• JSD: how much two probability distributions differ
• Word distributions: ‘input’ I, ‘summary’ S

$$JS(I \,\|\, S) = \tfrac{1}{2}\,KL(I \,\|\, A) + \tfrac{1}{2}\,KL(S \,\|\, A), \qquad A = \frac{I + S}{2}$$

where KL is the KL divergence.

Performance of the automatic content evaluation method
• When systems are ranked by JS divergence scores, the ranking correlates highly with human-assigned ranks: 0.88
• Among the best systems for evaluating news summaries

Linguistic quality evaluation for summaries [Pitler, Louis, Nenkova, 2010]
Consider numerous aspects
1. Language models: familiarity of words
   • A huge table of words and their probabilities in a large corpus of general text
   • Use these probabilities to predict familiarity of new texts
2. Syntax, referring expressions, discourse connectives
   • Syntax: sentence complexity
   • Parse tree depth
   • Length of phrases
3. Word coherence: flow between sentences
   • Learn conditional probabilities P(w2 | w1), where w1 and w2 occur in subsequent sentences, from a large corpus
   • Use to compute likelihood of a new sentence sequence

Performance of evaluation method
• The method is 80% accurate for ranking systems, evaluated on news summaries

Why domain-specific factors?
• Generic factors matter for most texts and give us useful applications
• What are other domain-specific factors?
• They might aid developing other interesting applications

II. Predicting quality of science articles
Publications and science news

Science writing has distinctive characteristics
• Its function is different from that of informational texts
• Academic writing in several genres involves properly motivating the problem and approach
• Science journalists should create interest in a research study among lay readers

Academic and science news writing
• … We identified 43 features … from the text and that could help determine the semantic similarity of two short text units. [Hatzivassiloglou et al., 2001]
• A computer is fed pairs of text samples that it is told are equivalent -- two translations of the same sentence from Madame Bovary, say. The computer then derives its own set of rules for recognizing matches. [Technology Review, 2005]

My hypotheses
Academic writing
1. Subjectivity: opinion, evaluation, argumentation
2. Rhetorical zones: role of a sentence in the article
Science journalism
1. Visual nature: aid explaining difficult concepts
2. Surprisal: present the unexpected, thereby creating interest

First challenge: defining text quality
Academic writing
• Citations
• Annotations: are not highly correlated with citations
Science journalism
• New York Times articles from Best American Science Writing books
• Negative examples are sampled from the NYT corpus, on a similar topic during the same time

Annotation for academic writing
• Abstract, introduction, related work, conclusion
• Focus annotations using a set of questions
  Introduction
  - Why is this problem important?
  - Has the problem been addressed before?
  - Is the proposed solution motivated and explained?
• Pairwise: Article A vs. Article B
• More reliable than ratings on a scale (1-5)

Text quality factors for writing about science
1. Academic writing
   • Subjectivity
   • Rhetorical zones
2. Science news
   • Surprisal
   • Visual quality

Subjectivity: academic writing
Opinions make an article interesting!
“Conventional methods to solve this problem are complex and time-consuming.”

Automatic identification of subjective expressions
1. Annotate subjective expressions: clause level
2. Create a dictionary of positive/negative words in academic writing using unsupervised methods
3. Classify a clause as subjective or not, depending on polar words and other features, for example:
   • Context: subjective expressions often occur near causal relations and near statements which describe technique/approach

Rhetorical zones: academic writing
• Defined for each sentence: function of the sentence in the article
• Aim … Background … Own work … Comparison
• Previous work in this area has devised annotation schemes and has shown good performance on automatic zone prediction
• Used for information extraction and summarization

Rhetorical zones and text quality
• Hypothesis: good and poorly written articles would have different distributions and sequences of rhetorical zones
Approach
• Identify zones
• Compute features related to sizes of zones and likelihood under a transition model of good articles
Sequences in good articles
[Figure: zone transition diagram over aim, motivation, example, prior work, and comparison, with edge probabilities 0.7, 0.6, 0.2, 0.4, 0.5, 0.8, 0.2; which probability belongs to which edge is not recoverable from the text.]

A simple authoring tool for academic writing
• Highlighting-based feedback
• Mark zone transitions that are less preferable
• Low levels of subjectivity

Surprisal: science news
“Sara Lewis is fluent in firefly.”
Syntactic, lexical, topic correlates of surprise
• Surprisal under language model
• Parse probability
• Verb-argument compatibility
• Order of verbs
• Rare topics in news

Visual quality: science news
• Large corpus of tags associated with images
• Lake, mountain, tree, clouds …: visual words
Visual words and article quality
• Concentration of visual words
• Position in the article (lead, beginning of paragraphs)
• Variety in visual topics (tags from different pictures)

Article recommendation for science news
• People who like reading science news
• Ask for a preferred topic and show matching articles
• Ranking 1: based on relevance to keyword
• Ranking 2: incorporate visual and surprisal scores with relevance
• Evaluate how often ranking 2 is preferred

Summary
In this thesis, I develop text quality metrics which are
• Generic: summary evaluation
• Domain-specific: focused on scientific writing
• Evaluated in relevant application settings
Challenges
• Defining text quality
• Technical approach
• Designing feedback in the authoring support tool

Thank you!
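
As a worked illustration of the content evaluation measure from Part I, the Jensen-Shannon divergence between the word distributions of an input and a summary can be sketched as below. This is a minimal sketch, not the authors' implementation: whitespace tokenization, lowercasing, the absence of smoothing, and base-2 logarithms are all assumptions, and the function names and example texts are hypothetical. Both texts are assumed to be non-empty.

```python
import math
from collections import Counter


def word_distribution(text, vocab):
    """Relative frequency of each vocabulary word in the text (assumes non-empty text)."""
    counts = Counter(text.lower().split())
    total = sum(counts[w] for w in vocab)
    return {w: counts[w] / total for w in vocab}


def kl(p, q):
    """KL(p || q) in bits; terms with p[w] == 0 contribute nothing."""
    return sum(p[w] * math.log2(p[w] / q[w]) for w in p if p[w] > 0)


def js_divergence(p, q):
    """JS(p || q) = 1/2 KL(p || A) + 1/2 KL(q || A), with A = (p + q) / 2."""
    a = {w: 0.5 * (p[w] + q[w]) for w in p}
    return 0.5 * kl(p, a) + 0.5 * kl(q, a)


def summary_divergence(input_text, summary_text):
    """Lower values mean the summary's word distribution is closer to the input's."""
    vocab = set(input_text.lower().split()) | set(summary_text.lower().split())
    i_dist = word_distribution(input_text, vocab)
    s_dist = word_distribution(summary_text, vocab)
    return js_divergence(i_dist, s_dist)


# Hypothetical example texts.
source = "the cat sat on the mat and the cat slept"
close_summary = "the cat sat on the mat"
unrelated_summary = "stocks fell sharply today"

close_score = summary_divergence(source, close_summary)
unrelated_score = summary_divergence(source, unrelated_summary)
```

Because the average distribution A is non-zero wherever either distribution is non-zero, the divergence stays finite without smoothing. With base-2 logarithms it ranges from 0 (identical distributions) to 1 (no shared words), so ranking systems by this score puts the summaries closest to the input first.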