Ch10


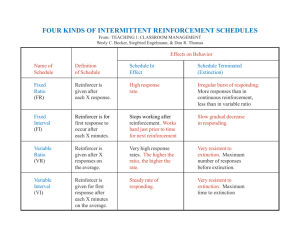

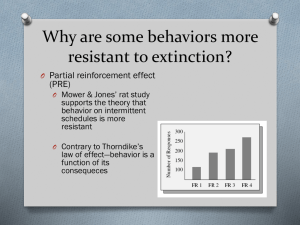

Quiz #3
- Last class, we talked about 6 techniques for self-control. Name and briefly describe 2 of those techniques.

Schedules of Reinforcement

Schedule of Reinforcement
- Delivery of reinforcement
- Continuous reinforcement (CRF)
- Fairly consistent patterns of behaviour
- Cumulative recorder

Cumulative Record
- Use a cumulative recorder
- No response: flat line
- Response: slope
- Cumulative record

Cumulative Recorder
- [Figure labels: pen, paper strip, rollers]

Recording Responses

The Accumulation of the Cumulative Record
- VI-25

Schedules
- 4 basic:
  - Fixed Ratio
  - Variable Ratio
  - Fixed Interval
  - Variable Interval
- Others and mixes (concurrent)

Fixed Ratio (FR)
- N responses required; e.g., FR 25
- CRF = FR 1
- Rise-and-run
- Postreinforcement pause
- Steady, rapid rate of response
- Ratio strain
- [Figure labels: slope, reinforcement, no responses, "pen" resetting, responses]

Variable Ratio (VR)
- Varies around mean number of responses; e.g., VR 25
- Rapid, steady rate of response
- Short, if any, postreinforcement pause
- Longer schedule --> longer pause
- Never know which response will be reinforced

Fixed Interval (FI)
- Depends on time; e.g., FI 25
- Postreinforcement pause
- Scalloping
- Time estimation
- Clock doesn't start until reinforcer given

Variable Interval (VI)
- Varies around mean time; e.g., VI 25
- Steady, moderate response rate
- Don't know when time has elapsed
- Clock doesn't start until reinforcer given

Response Rates
- [Figure panels: FR 25, VR 25, FI 25, VI 25]

Duration Schedules
- Continuous responding for some time period to receive reinforcement
- Fixed duration (FD)
  - Period of duration is a set time period
- Variable duration (VD)
  - Period of duration varies around a mean

Differential Rate Schedules
- Differential reinforcement of low rates (DRL)
  - Reinforcement only if X amount of time has passed since last response
  - Sometimes "superstitious behaviours" occur
- Differential reinforcement of high rates (DRH)
  - Reinforcement only if more than X responses in a set time
  - Or, reinforcement if less than X amount of time has passed since last response

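The four basic schedules (FR, VR, FI, VI) can be read as simple rules for deciding whether a given response earns a reinforcer. The following is a minimal Python sketch of those rules, not a model of any real apparatus. The class names and `count_reinforcers` are hypothetical, time is in arbitrary units, and the uniform distributions used for the variable schedules are an assumption (the slides only say the requirement "varies around a mean").

```python
import random


class FixedRatio:
    """FR n: every n-th response is reinforced (FR 1 is CRF)."""

    def __init__(self, n):
        self.n = n
        self.count = 0

    def respond(self, t):
        self.count += 1
        if self.count >= self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """VR n: the required number of responses varies around a mean of n."""

    def __init__(self, n, rng):
        self.n = n
        self.rng = rng
        self.count = 0
        self.required = self._draw()

    def _draw(self):
        # Assumption: uniform on 1 .. 2n-1, which has mean n.
        return self.rng.randint(1, 2 * self.n - 1)

    def respond(self, t):
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._draw()
            return True
        return False


class FixedInterval:
    """FI s: the first response at least s time units after the last reinforcer."""

    def __init__(self, s):
        self.s = s
        self.clock_start = 0.0

    def respond(self, t):
        if t - self.clock_start >= self.s:
            # The clock restarts only when the reinforcer is given.
            self.clock_start = t
            return True
        return False


class VariableInterval:
    """VI s: like FI, but the required time varies around a mean of s."""

    def __init__(self, s, rng):
        self.s = s
        self.rng = rng
        self.clock_start = 0.0
        self.required = self._draw()

    def _draw(self):
        # Assumption: uniform on 0 .. 2s, which has mean s.
        return self.rng.uniform(0, 2 * self.s)

    def respond(self, t):
        if t - self.clock_start >= self.required:
            self.clock_start = t
            self.required = self._draw()
            return True
        return False


def count_reinforcers(schedule, response_times):
    """Feed a series of response times to a schedule and count reinforcers."""
    return sum(schedule.respond(t) for t in response_times)


times = range(1, 101)  # one response per time unit, 100 responses
print(count_reinforcers(FixedRatio(25), times))     # 4
print(count_reinforcers(FixedInterval(25), times))  # 4
```

With one response per time unit, FR 25 and FI 25 pay off equally often. The difference shows up when the response rate changes: responding faster earns more reinforcers on a ratio schedule but not on an interval schedule.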
Noncontingent Schedules
- Reinforcement delivery not contingent upon a response, but on passage of time
- Fixed time (FT)
  - Reinforcer given after set time elapses
- Variable time (VT)
  - Reinforcer given after some time varying around a mean

Stretching the Ratio
- Increasing the number of responses
  - e.g., FR 5 --> FR 50
- Extinction problem
- Use shaping
  - Increase in gradual increments
  - e.g., FR 5, FR 8, FR 14, FR 21, FR 35, FR 50
- "Low" or "high" schedules

Extinction
- CRF (FR 1) easier to extinguish than intermittent schedules (anything but FR 1)
- Partial reinforcement effect (PRE)
- High schedules harder to extinguish than low
- Variable schedules harder to extinguish than fixed

Discrimination Hypothesis
- Difficult to discriminate between extinction and intermittent schedule
- High schedules more like extinction than low schedules
  - e.g., CRF vs. FR 50

Frustration Hypothesis
- Non-reinforcement for response is frustrating
- On CRF every response reinforced, so no frustration
  - Frustration grows continually during extinction
  - Stop responding, stop frustration (negative reinforcement)
- Any intermittent schedule: always some non-reinforced responses
  - Responding leads to reinforcer (positive reinforcement)
  - Frustration = S+ for reinforcement

Sequential Hypothesis
- Response followed by reinforcement or nonreinforcement
- On intermittent schedules, nonreinforced responses are S+ for eventual delivery of reinforcer
- High schedules increase resistance to extinction because many nonreinforced responses in a row lead to a reinforced response
- Extinction similar to high schedule

Response Unit Hypothesis
- Think in terms of behavioural "units"
  - FR 1: 1 response = 1 unit --> reinforcement
  - FR 2: 2 responses = 1 unit --> reinforcement
- Not "response-failure, response-reinforcer" but "response-response-reinforcer"
- Says PRE is an artifact

Mowrer & Jones (1945)
- Response unit hypothesis
- The greater number of responses in extinction on higher schedules disappears when responses are counted as behavioural units
- [Figure: number of responses/units during extinction (y-axis, 50 to 300) for FR 1, FR 2, FR 3, FR 4 (x-axis); series: absolute number of responses, number of behavioural units]

Complex Schedules
- Multiple
- Mixed
- Chain
- Tandem
- Cooperative

Choice
- Two-key procedure
- Concurrent schedules of reinforcement
- Each key associated with separate schedule
- Distribution of time and behaviour
- The measure of choice and preference

Concurrent Ratio Schedules
- Two ratio schedules
- Schedule that gives most rapid reinforcement chosen exclusively
- Rarely used in choice studies

Concurrent Interval Schedules
- Maximize reinforcement
- Must shift between alternatives
- Allows for study of choice behaviour

Interval Schedules
- FI-FI
  - Steady-state responding
  - Less useful/interesting
- VI-VI
  - Not steady-state responding
  - Respond to both alternatives
  - Sensitive to rate of reinforcement
  - Most commonly used to study choice

Alternation and the Changeover Response
- Maximize reinforcers from both alternatives
- Frequent shifting becomes reinforcing
  - Simple alternation
  - Concurrent superstition

Changeover Delay
- COD
- Prevents rapid switching
- Time delay after "changeover" before reinforcement possible

Herrnstein's (1961) Experiment
- Concurrent VI-VI schedules
- Overall rates of reinforcement held constant
- 40 reinforcers/hour between two alternatives

The Matching Law
- The proportion of responses directed toward one alternative should equal the proportion of reinforcers delivered by that alternative.

Example: VI-3min vs. VI-3min
- Key 1: VI-3min, Rein/hour = 20, Resp/hour = 2000
- Key 2: VI-3min, Rein/hour = 20, Resp/hour = 2000
- Proportional rate of reinforcement ($R_1$ = reinf. on key 1, $R_2$ = reinf. on key 2): $\frac{R_1}{R_1 + R_2} = \frac{20}{20 + 20} = 0.5$
- Proportional rate of response ($B_1$ = resp. on key 1, $B_2$ = resp. on key 2): $\frac{B_1}{B_1 + B_2} = \frac{2000}{2000 + 2000} = 0.5$
- MATCH!!!

Example: VI-9min vs. VI-1.8min
- Key 1: VI-9min, Rein/hour = 6.7, Resp/hour = 250
- Key 2: VI-1.8min, Rein/hour = 33.3, Resp/hour = 3000
- Proportional rate of reinforcement ($R_1$ = reinf. on key 1, $R_2$ = reinf. on key 2): $\frac{R_1}{R_1 + R_2} = \frac{6.7}{6.7 + 33.3} = 0.17$
- Proportional rate of response ($B_1$ = resp. on key 1, $B_2$ = resp. on key 2): $\frac{B_1}{B_1 + B_2} = \frac{250}{250 + 3000} = 0.08$
- NO MATCH (but close…)

Bias
- Spend more time on one alternative than predicted
- Side preferences
- Biological predispositions
- Quality and amount

Varying Quality of Reinforcers
- Q1: quality of first reinforcer
- Q2: quality of second reinforcer

Varying Amount of Reinforcers
- A1: amount of first reinforcer
- A2: amount of second reinforcer

Combining Qualities and Amounts

Applications
- Gambling
- Reinforcement history
- Economics
- Value of reinforcer and stretching the ratio
- Malingering
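The arithmetic in the two worked matching-law examples earlier in this section can be checked with a short Python sketch. The function names are hypothetical, and the `tolerance` used to call two proportions a "match" is an illustrative assumption; the slides do not give a numeric cut-off.

```python
def vi_rate_per_hour(mean_minutes):
    """Approximate programmed reinforcers per hour on a VI schedule."""
    return 60.0 / mean_minutes


def proportion(first, second):
    """Share of the total that goes to the first alternative."""
    return first / (first + second)


def matches(r1, r2, b1, b2, tolerance=0.05):
    """True if the response proportion is within `tolerance` of the
    reinforcement proportion. The tolerance is a hypothetical cut-off."""
    return abs(proportion(b1, b2) - proportion(r1, r2)) <= tolerance


# (R1, R2, B1, B2): reinforcers/hour and responses/hour on keys 1 and 2,
# taken from the two slide examples.
examples = {
    "VI-3min vs VI-3min": (20, 20, 2000, 2000),
    "VI-9min vs VI-1.8min": (6.7, 33.3, 250, 3000),
}

for label, (r1, r2, b1, b2) in examples.items():
    print(
        label,
        round(proportion(r1, r2), 2),  # proportional rate of reinforcement
        round(proportion(b1, b2), 2),  # proportional rate of response
        matches(r1, r2, b1, b2),
    )
# VI-3min vs VI-3min 0.5 0.5 True
# VI-9min vs VI-1.8min 0.17 0.08 False
```

The reinforcement rates in the examples follow from the schedule values: `vi_rate_per_hour(3)` gives 20, and VI-9min and VI-1.8min give about 6.7 and 33.3 reinforcers per hour, which together make up the 40 reinforcers per hour held constant in the experiment.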