Maximum Likelihood Method

advertisement

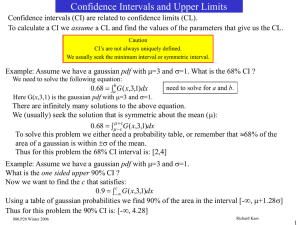

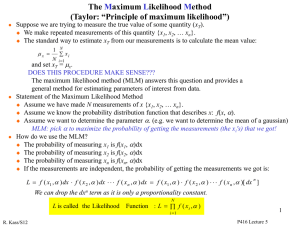

Maximum Likelihood Method (MLM)

Suppose we are trying to measure the true value of some quantity (xT). We make repeated

measurements of this quantity {x1 , x2 ...x n} . The standard way to estimate xT from our

measurements is to calculate the mean value of the measurements:

N

xi

x i 1

N

and set xT x .

Does this procedure make sense?

The MLM answers this question and provides a method for estimating parameters from existing data.

Statement of the Maximum Likelihood Method (MLM):

Assume we have made N measurements of x {x1, x 2 ... xn }.

Assume we know the probability distribution function that describes x: f(x,)

Assume we want to determine the parameter .

The MLM says that we pick such as to maximize the probability of getting the

measurements (the xi's) that we obtained!

How do we use the MLM?

The probability of measuring x1 is f(x1,)dx

The probability of measuring x2 is f(x2,)dx

The probability of measuring xn is f(xn,)dx

If the measurements are independent, the probability of getting our measurements is:

L=f(x1,) f(x2,)…f(xn,)dxn.

L is called the Likelihood Function. It is convenient to write L as:

N

L f (xi , )

i 1

We drop the dxn since it is just proportionality constant

We want to pick the that maximizes L. Thus we want to solve:

880.P20 Winter 2006

L

0

*

Richard Kass

1

Maximum Likelihood Method (MLM)

In practice it is easier to maximize lnL rather than L itself since lnL turns the product into

a summation. However, maximizing lnL gives the same since L and lnL are both

maximum at the same time.

N

ln L ln f (x i , )

i 1

The maximization condition is now:

ln L

(ln f (xi , ))

0

i 1

*

N

*

Note: could be an array of parameters or just a single variable. The equations to

determine range from simple linear equations to coupled non-linear equations.

Example: Let f(x) be given by a Gaussian distribution and let the mean of the Gaussian. We want the best

estimate of from our set of n measurements (x1, x2, ..xn). Let’s assume that is the same for each measurement.

1

f (xi , )

e

2

(x i )

2

2

2

The likelihood function for this problem is:

( xi ) 2

( x1 )2

n

( x 2 ) 2

( xn )2

n

n ( x )2

i

2 2

1

2

1

2

2

2

1

i

L f (xi , )

e 2

e 2 e 2

e 2

e 1

i 1

i 1

2

2

2

We want to find the that maximizes the likelihood function (actually we will maximize lnL).

ln L

1 n (xi )2

n ln

0

2 i1 2 2

n

n

Since is the same for each data point we can factor it out:

n

n

n

i 1

i 1

i 1

xi 0 or xi

Finally, solving for we have:

n

Average !

1 n

x

n i1 i

For the case where the are different for each data point we get the weighted average:

n

x

( i2 )

i 1

n 1i

( 2 )

i 1

880.P20 Winter 2006

i

Richard Kass

2

Maximum Likelihood Method (MLM)

Example: Let f(x,a) be given by a Poisson distribution with the mean of the Poisson. We want the best estimate of from our

set of n measurements (x1, x2, ..xn). We want the best estimate of from our set of n measurements (x1, x2, ..xn). The Poisson

distribution is a discrete distribution, so (x1, x2, ..xn) are integers. The Poisson distribution is given by:

e x

f (x, )

x!

The likelihood function for this problem is:

n

xi

e

e e

e

e n i 1

..

x1 !

x2 !

xn !

x1 !x2 !.. xn !

i1

i1 xi !

We want to find the that maximizes the likelihood function (actually we will maximize lnL).

n

xi

n

L f (xi , )

x1

x2

xn

n

xi

d ln L

d

d ln L

n i 1 0

n ln xi ln(x1 !x 2 !.. xn !) or

d

d

d

i1

1 n

xi .

Average !

n i1

n

Some general properties of the maximum likelihood method:

a) For large data samples (large n) the likelihood function, L, approaches a Gaussian distribution.

b) Maximum likelihood estimates are usually consistent.

By consistent we mean that for large n the estimates converge to the true value of the

parameters we wish to determine.

c) For many instances the estimate from MLM is unbiased.

Unbiased means that for all sample sizes the parameter of interest is calculated correctly.

d) The maximum likelihood estimate of a parameter is the estimate with the smallest variance.

Cramer-Rao bound

We say the estimate is efficient.

e) The maximum likelihood estimate is sufficient.

By sufficient we mean that it uses all the information in the observations (the xi’s).

f) The solution from MLM is unique.

The bad news is that we must know the correct probability distribution for the problem at hand!

880.P20 Winter 2006

Richard Kass

3

Errors & Maximum Likelihood Method (MLM)

How do we calculate errors (’s) using the MLM?

Start by looking at the case where we have a gaussian pdf. The likelihood function is:

n

1

e

i 1 2

n

L f (xi , )

i 1

(xi ) 2

2 2

n

1

e

2

(x1 )2

2 2

e

(x 2 ) 2

2 2

e

(xn )2

2 2

n

1

e

2

n ( x )2

i

2

i 1 2

It is easier to work with lnL:

( xi ) 2

ln L n ln( 2 )

2

i 1 2

n

If we take two derivatives of lnL with respect to we get:

n (x )

ln L

2 i 2

i 1 2

2 ln L

2

n

i 1

( xi )

2

n

2

1

2

For the case of a gaussian pdf we get the familiar result:

2 ln L

2

2

n

2

1

The big news here is that the variance of the parameter of interest is related to the 2 nd derivative of L.

Since our example uses a gaussian pdf the result is exact. More important, the result is asymptotically

true for ALL pdf’s since for large samples (n) all likelihood functions become “gaussian”.

880.P20 Winter 2006

Richard Kass

4

Errors & MLM

The previous example was for one variable. We can generalize the result to the case where

we determine several parameters from the likelihood function (e.g. 1, 2, … n):

2 ln L

Vij

i j

1

Here Vij is a matrix, (the “covariance matrix” or “error matrix”) and it is evaluated at the values

of (1, 2, … n) that maximize the likelihood function.

In practice it is often very difficult or impossible to analytically evaluate the 2 nd derivatives.

The procedure most often used to determine the variances in the parameters relies on the property

that the likelihood function becomes gaussian (or parabolic) asymptotically.

We expand lnL about the ML estimate for the parameters. For the one parameter case we have:

ln L

1 2 ln L

*

ln L( ) ln L( )

( )

( * )2

2

*

2! *

*

Since we are evaluating lnL at the value of (=*) that maximizes L, the term with the 1st derivative is zero.

Using the expression for the variance of on the previous page and neglecting higher order terms we find:

ln L( ) ln Lmax

( * ) 2

k2

*

or ln L( k ) ln Lmax

2

2 2

Thus we can determine the k limits on the parameters by finding the values where lnL

decreases by k2/2 from its maximum value.

880.P20 Winter 2006

Richard Kass

This is

what

MINUIT

does!

5

Example: Log-Likelihood Errors & MLM

Example: Exponential decay: pdf : f (t ,t ) et / t / t

The variance of an exponential pdf with mean lifetime=t is: 2=t2/n

L

n

e

ti / t

/t

i 1

n

1 n

and ln L n ln t t i / t exact solution t t i

n i 1

i 1

Generate events according to an exponential distribution with t0= 100

generate times from an exponential using: ti=-t0lnri

ten events: 1104.082, 220.056, 27.039, 171.492, 10.217, 11.671, 94.930, 74.246, 12.534, 168.319

Calculate lnL vs t & find max of lnL and the points where lnL=lnLmax-1/2 (“1 points”)

Compare errors from “exact” formula and log-likelihood points

-5.613 10 4

-62

lnL

lnL

-5.613 10 4

-63

G

x

-5.613 10 4

lnL

lnL

-64

y = m3-(m0-m1)^ 2/(2*m2^ 2)

Value

Error

m1

100.8

0.013475

m2

1.01 0.0088944

m3

-56128

0.034297

Chisq

0.055864

NA

R

0.99862

NA

-65

-66

-5.613 10 4

-5.613 10 4

-67

0

100

200

t

300

400

500

600

Log-likelihood function for 10 events

lnL max for t=189

1 points: (140, 265) Vs exact: (129, 245)

L not gaussian

880.P20 Winter 2006

-5.613 10 4

97

98

99

100

t

101

102

103

104

Log-likelihood function for 104 events

lnL max for t=100.8

1 points: (99.8, 101.8) Vs exact: (99.8, 101.8

L is fit by a gaussian

Richard Kass

6

Determining the Slope and Intercept with MLM

Example: MLM and determining slope and intercept of a line

Assume we have a set of measurements: (x1, y11), (x2, y22… (xn, ynnand the points are

thought to come from a straight line, y=+bx, and the measurements come from a gaussian pdf.

The likelihood function is:

n

n

1

L f (xi , , b)

e

2

i1

i1

i

( y i q (x i , , b )) 2

2 2i

n

1

e

2

i1

i

( yi b x i ) 2

2 i2

We wish to find the and b that maximizes the likelihood function L.

Thus we need to take some derivatives:

ln L n 1 ( yi bxi ) 2 n 2( yi bxi )( 1)

ln

0

2

i 1 i 2

2 i2

2

i 1

i

ln L

b

b

1 ( yi bxi ) 2 n 2( yi bxi )( xi )

ln

0

2

2

2

2

2

i 1

i

1

i

i

i

n

n

n

x

ln L n yi

1

2 2 b i2 0

i 1 i

i 1 i

i 1 i

n

n

xi

xi2

ln L n xi yi

2 2 b 2 0

b

i 1 i

i 1 i

i 1 i

n yi

2

i 1 i

n xi y i

2

i 1 i

n 1

2

i 1 i

n xi

2

i 1 i

xi

2

i 1 i

n

xi2 b

2

i 1 i

n

We have to solve the two equations for the two unknowns, and b .

We can get an exact solution since these equations are linear in and b.

Just have to invert a matrix.

880.P20 Winter 2006

Richard Kass

7

Determining the Errors on the Slope and Intercept with MLM

yi n x 2i n yi x i n x i

2 2 2 2

i1 i i1 i

i1 i i1 i

n

n x

1 n xi2

i 2

2 2 ( 2 )

i1 i i1 i

i1 i

n

1 n x i yi n yi n x i

2 2 2 2

i1 i i1 i

i1 i i1 i

and b

n 1 n x2

n x

i

i 2

2 2 ( 2 )

i1 i i1 i

i1 i

n

2 ln L

Let’s calculate the error (covariance) matrix for and b: Vij

i j

2

n

n

n

n

yi

xi

ln L

1

1

(

b

)

2

2

2

i 1 i2

2

i 1 i

i 1 i

i 1 i

n

n

n

xi

xi2

xi2

2 ln L n xi yi

(

2 b 2 ) 2

b i 1 i2

b 2

i 1 i

i 1 i

i 1 i

n

n

n

xi

x

2 ln L n yi

1

( 2 2 b 2 ) i2

b b i 1 i

i 1 i

i 1 i

i 1 i

V 1

n 1

2

(1) i n1 i

xi

2

i 1 i

1

xi

2

i 1 i

n

xi2

2

i 1

i

n

n

n xi2

x

2 / D i2 / D

n

n

xi 2

1 n xi2

i 1 i

i 1 i

V n

with

D

(

)

n

2

2

2

xi

1

i 1

i 1

i i 1

i

i

2 / D 2 / D

i 1 i

i 1 i

2

V

b

880.P20 Winter 2006

b

2

b

Note: We could also derive the variance of and b just

using propagation of errors on the formulas for and b.

Richard Kass

8

Chi-Square (c2) Distribution

Chi-square (c2) distribution:

Assume that our measurements (xii’s) come from a gaussian pdf with mean =.

n ( x )2

2

i

Define a statistic called chi-square: c

i2

i 1

It can be shown that the pdf for

c2

is:

p( c 2 ,n)

1

2 n/ 21 c 2 /2

[

c

]

e

2n /2 (n / 2)

0 c2

This is a continuous pdf.

It is a function of two variables, c2 and n = number of degrees of freedom. ( = "Gamma Function“)

A few words about the number of degrees of freedom n:

n = # data points - # of parameters calculated from the data points

For n 20, P(c2>y) can

be approximated using a

gaussian pdf with

y=(2c2) 1/2 -(2n-1)1/2

Reminder: If you collected N events in an experiment and you histogram

your data in n bins before performing the fit, then you have n data points!

EXAMPLE: You count cosmic ray events in 15 second intervals and sort the data into 5 bins:

number of intervals with 0 cosmic rays

2

number of intervals with 1 cosmic rays

7

number of intervals with 2 cosmic rays

6

number of intervals with 3 cosmic rays

3

c2 distribution for different degrees of freedom v

number of intervals with 4 cosmic rays

2

Although there were 36 cosmic rays in your sample you have only 5 data points.

EXAMPLE: We have 10 data points with and the mean and standard deviation of the data set.

RULE of THUMB

If we calculate and from the 10 data point then n = 8

A good fit has

If we know and calculate OR if we know and calculate then n = 9

If we know and then n = 10

c2/DOF 1

A common approximation (useful for poisson case)

“Pearson’s c2”: approximately c2 with n-1 DOF

880.P20 Winter 2006

( N i Pi ) 2

c

Pi

i 1

n

2

Richard Kass

9

MLM, Chi-Square, and Least Squares Fitting

Assume we have n data points of the form (yi, i) and we believe a functional

relationship exists between the points:

y=f(x,a,b…)

In addition, assume we know (exactly) the xi that goes with each yi.

We wish to determine the parameters a, b,..

A common procedure is to minimize the following c2 with respect to the parameters:

n

c

2

[ yi f ( xi , a, b,..)]2

i 1

i2

If the yi’s are from a gaussian pdf then minimizing the c2 is equivalent to the MLM.

However, often times the yi’s are NOT from a gaussian pdf.

In these instances we call this technique “c2 fitting” or “Least Squares Fitting”.

Strictly speaking, we can only use a c2 probability table when y is from a gaussian pdf.

However, there are many instances where even for non-gaussian pdf’s the above sum approximates c2 pdf.

From a common sense point of view minimizing the above sum makes sense

regardless of the underlying pdf.

880.P20 Winter 2006

Richard Kass

10

Least Squares Fitting Example

Example: Leo’s 4.8 (P107) The following data from a radioactive source

was taken at 15 s intervals. Determine the lifetime (t) of the source.

The pdf that describes radioactivity (or the decay of a charmed particle) is:

Technically the pdf is |dN(t)/(N(0)dt)| =N(t)/(N(0)t).

N (t ) N (0)et / t

As written the above pdf is not linear in t. We can turn this into a linear problem by

taking the natural log of both sides of the pdf.

ln( N (t )) ln( N (0)) t / t y C Dt

We can now use the methods of linear least squares to find D and then t.

In doing the LSQ fit what do we use to weight the data points ?

The fluctuations in each bin are governed by Poisson statistics: 2i=Ni.

However in this problem the fitting variable is lnN so we must use propagation of errors

to transform the variances of N into the variances of lnN.

y2 N2 y / N 2 ( N ) ln N / N 2 ( N )1 / N 2 1 / N

Leo has a “1” here

880.P20 Winter 2006

i

1

2

3

4

5

6

7

8

9

10

ti

0

15

30

45

60

75

90

105

120

135

Ni

106

80

98

75

74

73

49

38

37

22

yi = lnNi

4.663

4.382

4.585

4.317

4.304

4.290

3.892

3.638

3.611

3.091

Richard Kass

11

Least Squares Fitting-Exponential Example

The slope of the line is given by:

n

D

1

n

t i yi

n

2

2

i 1 i i 1 i

n

5

ti

Line of “best fit”

2

2

i 1 i i 1 i

n 1 n t2

2 i2

i 1 i i 1 i

D

yi

n

(

4.5

ti

2

)

2

Y(x)

i 1 i

4

652 132800 2780.3 33240

0.00903

2

652 2684700 (33240)

3.5

3

-20

Thus the lifetime (t) = -1/D = 110.7 s

0

The error in the lifetime is:

n

D2

2

i 1 i

n 1 n t2

n t

2

i

(

2 2

i2 )

i 1 i i 1 i

i 1 i

t D2

2

1

t / D

2

652

1.01 10 6

2

652 2684700 (33240)

t D 1/ D

t = 110.7 ± 12.3 sec.

2

1.005 103

9.03 10

3 2

12.3 s

20

40

60

t

80

100 120 140

Caution: Leo has a factor of ½ in his

error matrix (V-1)ij, Eq 4.72.

He minimizes:

y f ( xi , a, b,...) 2

S [ i

]

i

Using MLM we minimized:

( yi f ( xi , a, b,...) 2

ln L

2 i2

Note: fitting without weighting yields: t=96.8 s.

880.P20 Winter 2006

Richard Kass

12

Least Squares Fitting-Exponential Example

We can calculate the c2 to see how “good” the data fits an exponential decay

distribution:

For this problem: lnA=4.725 A=112.73 and t = 110.7 sec

c 2 i 1

10

( N i Ae ti / t ) 2

i2

( N i Ae ti / t ) 2

i 1

15.6

ti / t

Ae

10

Poisson approximation

Mathematica Calculation:

Do[{csq=csq+(cnt[i]-a*Exp[-x[i]/tau])^2/(a*Exp[-x[i]/tau])},{i,1,10}]

Print["The chi sq per dof is ",csq/8]

xvt=1-CDF[ChiSquareDistribution[8],csq];

The chi sq per dof is 1.96

Print["The chi sq prob. is ",100*xvt,"%"]

The chi sq prob. is 4.9 %

This is not such a good fit since the probability is only ~4.9%.

880.P20 Winter 2006

Richard Kass

13

Extended MLM

Often we want to do a MLM fit to determine the number of a signal & background events.

Let’s assume we know the pdfs that describe the signal (ps) and background (pb) and the pdfs

depend on some measured quantity x (e.g. energy, momentum, cerenkov angle..)

We can write the Likelihood for a single event (i) as:

L=fsps(xi)+(1-fs)pb(xi)

with fs the fraction of signal events in the sample, and the number of signal events: Ns=fsN

The likelihood function to maximize (with respect to fs) is:

N

L ( f s p s ( xi ) (1 f s ) pb ( xi ))

i 1

Usually, there is no

closed form solution for fs

There are several drawbacks to this solution:

1) The number of signal and background are 100% correlated.

2) the (poisson) fluctuations in the number of events (N) is not taken into account

Another solution which explicitly takes into account 2) is the EXTENDED MLM:

e v v N

L

N!

e v N

( f s p s ( xi ) (1 f s ) pb ( xi ))

v( f s p s ( xi ) (1 f s ) pb ( xi ))

N

!

i 1

i 1

If Ns & Nb are poisson then

N

ln L v ln N ! ln[ v( f s p s ( xi ) (1 f s ) pb ( xi ))]

so is their product for fixed N

N

i 1

Here v=Ns+Nb so we can re-write the likelihood function as:

N

ln L ( N s N b ) ln N ! (ln[ N s p s ( xi ) N b pb ( xi )]) The N! term drops out when

i 1

we take derivatives to max L.

We maximize L in terms of Ns and Nb.

880.P20 Winter 2006

Richard Kass

14

Extended MLM Example: BF(BD0K*)

Event yields are determined from an unbinned EMLM fit in the region 5.2 mES 5.3 GeV/c2

Choose simple PDFs to fit mES distributions: A=Argus G=Gaussian

Perform ML fits simultaneously in 3 regions.

In each region fit K, K0, K3mES distributions (k=1,2,3)

9 PDFs in all

I) |DE| Sideband: -100 |DE| -60 MeV & 60 |DE| 200 MeV

pdf: Ak

2 (1( m / mmax ) 2 )

A

(

m

,

)

A

m

1

(

m

/

m

)

e

0

max

II) D0 Sideband: |mD-mD,PDG|>4

Take into account “doubly peaking” backgrounds (DP)

pdf: (NnoPA+NDPG)k

III) Signal region: |DE| 25MeV

pdf: (NqqA+kNDPG+NsigG)k k scales the NDP found in D0 the sideband fit.

signal region

~520 signal events

D0 sideband

“fake” D0’s give fake B’s

DE region

880.P20 Winter 2006

should be no B’s in this region

Richard Kass

15