Proof&Truth-Wedtech

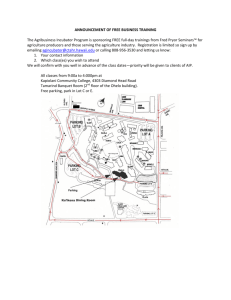

What is the Proof necessary for Truth (whatever that is)

Presentation to FRIAMGroup's Applied Complexity Lecture Series
Santa Fe, NM USA
24 August 2005

Tom Johnson
Managing Director
Institute for Analytic Journalism
Santa Fe, New Mexico

Wedtech - 24 Aug. 05

What is the IAJ

- Analysis using a variety of tools and methods from multiple disciplines
- Understand multiple phenomena
- Communicate results to multiple audiences in a variety of ways

Cornerstones of IAJ

- General Systems Theory
- Statistics
- Visual statistics/infographics
- Simulation modeling

Problem of the day

So what’s the problem of the day for analytic journalists?

So what’s the problem?

- Ever-increasing -- beyond estimate -- number of public records databases
- DBs increasingly used for a broad spectrum of decision-making
- Assumption that data, as given, is correct
- Anecdotal evidence suggests that’s not so

Examples of bad data

- St. Louis Post-Dispatch, 1997-98: 350 S. Ill. sex offenders. “…found that hundreds of convicted sex offenders don't actually live at the addresses listed on the sex offender registries for St. Louis, St. Louis County and the Metro East area.”
- Every record carried a 30-50% probability of error
- 1999 - City of St. Louis: “About 700 Sex Offenders Do Not Appear To Live At The Addresses Listed On A St. Louis Registry.”
- Boston, 2000: BPD - 6 detectives assigned to cleaning up sex offenders DB

Examples of bad data

- 2000 - Florida voter registration rolls
- State hires DBT Online/Choicepoint to “purge rolls.”
- “Some [counties] found the list too unreliable and didn't use it at all. … Counties that did their best to vet the file discovered a high level of errors, with as many as 15 percent of names incorrectly identified as felons.”
- Source: Palast, Greg. http://www.gregpalast.com/detail.cfm?artid=55
More bad data

- 2004 - Dallas Morning News: “…The state criminal convictions database is so riddled with holes that law enforcement officials say public safety is at risk.”
- “… the state has only 69 percent of the complete criminal histories records for 2002. In 2001, the state had only 60 percent. Hundreds of thousands of records are missing.”

Surely there is a simple solution….

- Is there a methodology to measure, to know -- or to anticipate -- the quality, i.e. veracity, of a given database?
- What are the best -- and most objective -- ways to “X-ray” a DB to note internal problems or potential problems?
- Hoping for answers from statisticians, data miners, forensic accountants, bioinformatics, genomics, physics, etc., ’cause journalists don’t have much of a clue

Approaches to database analysis

Theoretical/statistical
- What can we know about a database -- and its potential validity -- based only on its size and whether a record’s field/cell is occupied?
- Are there cheap, fast and good templates/tools to X-ray the DB?

Contextual/statistical
- How would knowing the context/meaning of data -- or lack of data -- in cells change our answers to previous questions?
- Are there methodologies to help us weigh the importance of a variable relative to the veracity of a record? e.g. is “name” more important than SS#?

Both/all approaches vary with the question(s) being asked.
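The theoretical/statistical question above, what can be learned from size and cell occupancy alone, can be sketched in a few lines of Python. This is only an illustrative sketch: the function names, the toy records, and the rule that `None` or a whitespace-only string counts as an empty cell are all assumptions, not anything taken from the talk.

```python
from typing import List, Optional, Sequence

Record = Sequence[Optional[str]]


def is_occupied(cell: Optional[str]) -> bool:
    """Assumption: None and whitespace-only strings count as empty cells."""
    return cell is not None and cell.strip() != ""


def field_fill_rates(records: Sequence[Record]) -> List[float]:
    """For each field position, return the fraction of records whose cell is occupied."""
    if not records:
        return []
    n_fields = len(records[0])
    counts = [0] * n_fields
    for rec in records:
        for i in range(n_fields):
            # Short records are treated as having empty trailing cells.
            if i < len(rec) and is_occupied(rec[i]):
                counts[i] += 1
    return [c / len(records) for c in counts]


# Made-up toy data: 4 records, 3 fields (serial number, name, ZIP code).
toy = [
    ["1", "Ann Lee", "87501"],
    ["2", "", "87505"],
    ["3", "Bo Diaz", None],
    ["4", "  ", None],
]
rates = field_fill_rates(toy)
print(rates)
```

A profile like this says nothing about whether an occupied cell is correct; it only shows where the database is thin, which is the most that occupancy alone can reveal.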
Theoretical database structure

DB =
- Metadata
- Coding sheet
- Fields/elements
- Field tag (name)
- Character limited/open field
- Numeric/alpha
- End-of-Record character
- Number of records

Theoretical database

- Assume matrix - 100 records, 10 fields
- Assume a given -- and occupied -- index field (serial record number)
- Does a record's LCI (Loaded Cell Index), from 10% to 100%, constitute "proof" of anything?

Theoretical database

- LAs (logical adjacencies) not necessarily physically adjacent in record layout.
- Like genome, data present -- or not present -- in a field can trigger the presence or lack of data in another.
- [Figure: record layout, Fld #1 through Fld #10]

Assumptions???

- The greater a record’s LCI, the greater potential (probability?) that record has enough “Proof” to achieve “True Data” status.
- Do we think this is true? Probably, even when we have no idea what the data is/means.
- Still, “proof” seems to occupy a density-of-data continuum reaching for some critical mass. How do we measure that criticality?
- When software achieves critical mass, it can never be fixed; it can only be discarded and rewritten. Same for DBs? How do programmers measure that critical mass?

Assumptions???

- When focus is on individual record, must have context/meaning/definition for the variables/elements, otherwise a nonsensical array of possibly random numbers. There is no opportunity for Proof of anything, much less Truth.

Search for patterns (in 100+k records)

- Are there patterns?
- How can I quickly identify them?
- Are there consistencies?
- Do populated cells suggest anything about hierarchy of importance?
- Are there “Logical Adjacencies” (LAs)?

Demographics of a database

Logical Adjacencies
- Patterns in LAs?
- Is there a hierarchy of import/value of LAs?
- Are there various thresholds of LAs present, i.e. is it better Proof to have four LAs than three? Maybe, maybe not.
- So how do we create rules to weigh (a) a cell and (b) LAs?

Demographics of a database

Logical Adjacencies
- If a record does not meet some standard of LA-ness, do we discard it from the analysis because it lacks the potential for Proof? (Discarded outlier problem)
- Do patterns of populated cells suggest anything about hierarchy of importance or only data input process?
- Are some records “better” records? Any “truth” to be found?
- Tools to quickly, easily see these answers?

Working with the real stuff

- Fundrace 2004 Neighbor Search: http://www.fundrace.org/neighbors.php
- Political Money Line: http://www.fecinfo.com/cgi-win/indexhtml.exe?MBF=zipcode

Missing data problem. Significant?

Realities of DBs

- The NAME problem
- Can this be “cleaned” automatically?

“Dirty” campaign contributions

- Same person?
- Same job?
- How do we easily spot these problems in large DB?
- How do we rectify them in large DB?

Wrong data

- Huh?
- Is there any way to vet this cell’s data?
- How many triangulated DBs necessary to meet some “proof” index?
- Does this field have importance (the hierarchy of importance?) to be worth X time/money to verify?
- Is there a better way than drawing a sample and tracking down original data?
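The “Same person?” question on the campaign-contribution slides can be approached, at least as a first pass, by reducing each name to a crude match key and grouping records that share a key. The sketch below is one hedged way to do that in Python; the normalization rules (lowercase, strip punctuation, drop one-letter initials, ignore word order), the function names, and the sample names are all assumptions made for illustration, and the rules as written only handle unaccented Latin letters.

```python
import re
from collections import defaultdict
from typing import Dict, List


def name_key(raw: str) -> str:
    """Build a crude match key from a name string.

    Assumed rules: lowercase, turn punctuation and digits into spaces,
    drop one-letter initials, and sort the remaining tokens so that
    "Last, First" and "First Last" produce the same key.
    """
    text = re.sub(r"[^a-z\s]", " ", raw.lower())
    tokens = [t for t in text.split() if len(t) > 1]
    return " ".join(sorted(tokens))


def group_possible_duplicates(names: List[str]) -> Dict[str, List[str]]:
    """Return only those keys shared by two or more name variants."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for n in names:
        groups[name_key(n)].append(n)
    return {k: v for k, v in groups.items() if len(v) > 1}


# Made-up contributor names, not taken from any real database.
names = ["SMITH, JOHN A.", "John Smith", "Jane Doe", "J. Smith"]
candidates = group_possible_duplicates(names)
print(candidates)
```

A shared key is only a flag for human review, not proof of identity: two different people named John Smith collapse to one key, while “J. Smith” is missed entirely. That gap is one reason the automatic-cleaning question on the slide stays open.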
Ver 1.0 workshop
April 9-12, 2006

Workshop on public database verification for journalists and social scientists

“Ver” as in “verification” and “verify” and, from the Spanish verb ver: “to see; to look into; to examine.”

Ver 1.0 Objectives

1. Developing new statistical methods for DB verification;
2. Building a flowchart/decision tree for the DB verification process;
3. Developing rules for creation of a hierarchy of importance/significance of record elements, i.e. variables, in common databases.

Seeking suggestions:

- Automated
- Affordable
- Generic or easily adapted to various DBs
- Easily understood and with error trapping
- Easy to learn/apply
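Objective 3 asks for a hierarchy of importance among record elements. One way to make that concrete is to extend the Loaded Cell Index (LCI) from the earlier slides with per-field weights. The sketch below rests on two assumptions. First, it reads the LCI as the share of occupied cells in a record, which matches the 10% to 100% range quoted for a 10-field record with an occupied index field, although the talk gives no formula. Second, the weights are invented for illustration; choosing them is exactly the open question.

```python
from typing import Optional, Sequence


def is_occupied(cell: Optional[str]) -> bool:
    """Assumption: None and whitespace-only strings count as empty cells."""
    return cell is not None and cell.strip() != ""


def lci(record: Sequence[Optional[str]]) -> float:
    """Assumed LCI definition: occupied cells divided by total cells."""
    if not record:
        return 0.0
    return sum(is_occupied(c) for c in record) / len(record)


def weighted_lci(record: Sequence[Optional[str]], weights: Sequence[float]) -> float:
    """Hypothetical variant: each occupied cell contributes its field's weight."""
    if len(record) != len(weights):
        raise ValueError("record and weights must have the same length")
    total = sum(weights)
    if total == 0:
        return 0.0
    loaded = sum(w for c, w in zip(record, weights) if is_occupied(c))
    return loaded / total


# Made-up 10-field record: the index field plus four other cells are occupied.
record = ["17", "Ann Lee", "", "Santa Fe", None, "", "87501", None, "", "teacher"]
# Made-up weights: "name" counts triple and "ZIP" double, purely for illustration.
weights = [1, 3, 1, 1, 1, 1, 2, 1, 1, 1]

plain = lci(record)
weighted = weighted_lci(record, weights)
print(plain, weighted)
```

Here the plain LCI is 5/10 and the weighted version is 8/13, so the same record looks somewhat better once the occupied cells are the ones judged important. Neither number establishes that any cell is correct; both measure density, not truth.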